Pickle boasts that its first AR glasses can “learn how you live,” offering an assistant that watches, remembers and helps in the moment. That claim goes beyond smart glasses and into adaptive computing: a service that learns from your activity and tries to predict your next move. Here is what that would actually take, and the evidence to watch for.

How ‘Learning How You Live’ Might Work in Practice

To understand a user’s life, the device needs continuous context. In practice, this means combining inputs from head-worn cameras, microphones and IMU sensors, and perhaps eye tracking. The raw stream is segmented into “events” (commute, cooking, meeting) using time, location, people and task cues, a method used in lifelogging literature from institutions such as Carnegie Mellon’s HCII and Stanford’s HAI.
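
The segmentation step described above can be illustrated with a minimal sketch. It assumes the sensor stream has already been reduced to labeled samples; the `Sample` fields, the `segment_events` helper and the five-minute gap threshold are hypothetical choices for illustration, not anything Pickle has described.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    """One fused sensor reading (all fields are hypothetical)."""
    t: float        # seconds since the start of the day
    place: str      # coarse location label, e.g. "kitchen"
    activity: str   # classifier output, e.g. "cooking"


def segment_events(samples, max_gap_s=300.0):
    """Group time-ordered samples into events.

    A new event starts when the place or activity changes, or when more
    than max_gap_s seconds pass between consecutive samples.
    Returns a list of (start_t, end_t, place, activity) tuples.
    """
    events = []
    current = None  # [start_t, end_t, place, activity] of the open event
    for s in samples:
        same_context = (
            current is not None
            and s.place == current[2]
            and s.activity == current[3]
            and s.t - current[1] <= max_gap_s
        )
        if same_context:
            current[1] = s.t  # extend the open event
        else:
            if current is not None:
                events.append(tuple(current))
            current = [s.t, s.t, s.place, s.activity]
    if current is not None:
        events.append(tuple(current))
    return events


stream = [
    Sample(0, "train", "commute"),
    Sample(60, "train", "commute"),
    Sample(120, "office", "meeting"),
    Sample(180, "office", "meeting"),
    Sample(4000, "kitchen", "cooking"),
]
events = segment_events(stream)
```

Here the five samples collapse into three events (commute, meeting, cooking). A real system would presumably add cues such as who is present, but the boundary logic would look similar.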

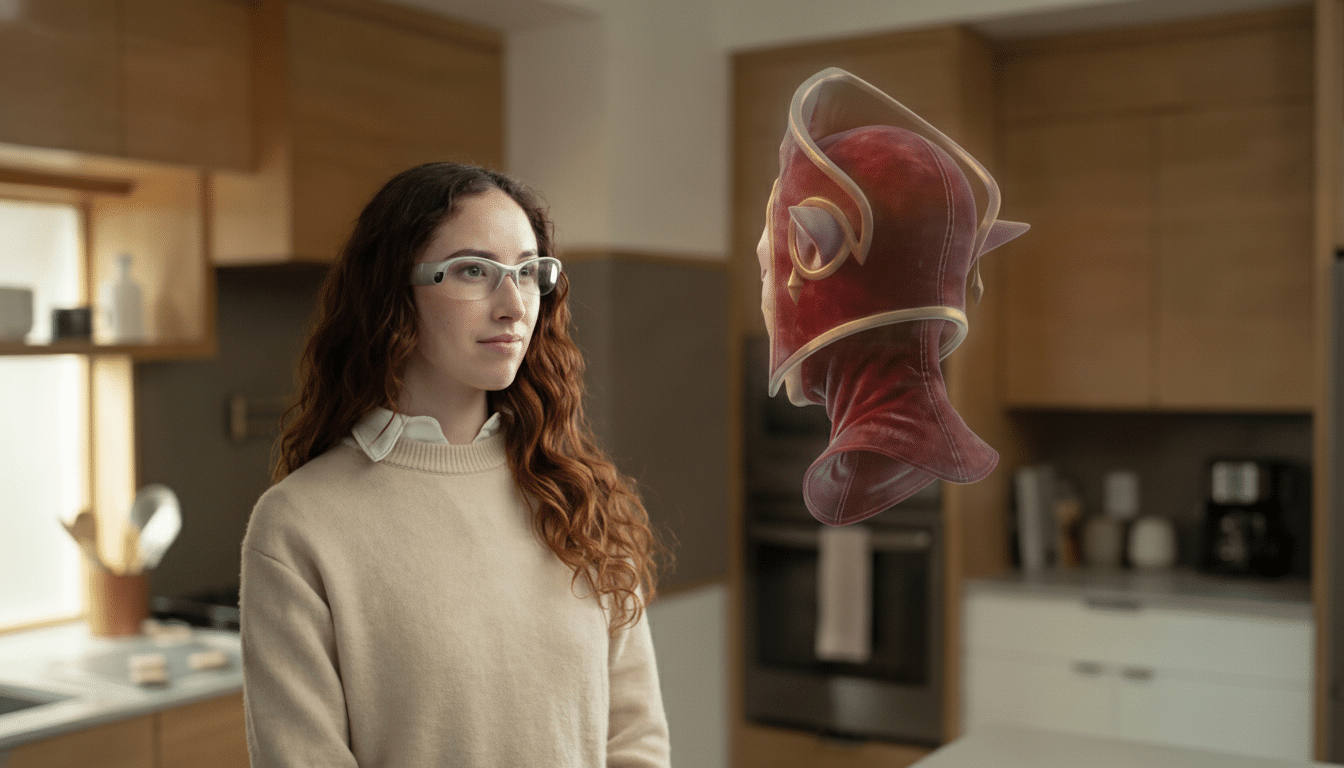

Each event is encoded into short embeddings and stored in a personal memory bank: think of it as a searchable timeline of what you did, where and with whom. A compact on-device language model picks the memories relevant to answering a question or prompting a proactive nudge: pulling up your grocery list when it knows you’ve walked into a store, showing you a recipe when it notices ingredients on your countertop or reminding you of an intention when a familiar face comes into view.

The glue is retrieval-augmented generation. Rather than guessing like a generic chatbot, the assistant draws on specific past events and matches them to what its sensors see now. Executed well, suggestions feel spookily contextual; executed poorly, they make the assistant feel nosy or random rather than helpful.
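
A toy version of that retrieval loop might look like the following. It is a sketch under stated assumptions: the bag-of-words `embed` function stands in for a learned encoder, and `MemoryBank` and `build_prompt` are hypothetical names, not a real product API.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a real system would use a learned encoder."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class MemoryBank:
    """In-memory stand-in for a vector database of event summaries."""

    def __init__(self):
        self._items = []  # list of (embedding, summary)

    def add(self, summary):
        self._items.append((embed(summary), summary))

    def retrieve(self, query, k=2):
        """Return the k stored summaries most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda it: cosine(q, it[0]), reverse=True)
        return [summary for _, summary in ranked[:k]]


def build_prompt(current_scene, memories):
    """Combine retrieved memories with the live scene for the language model."""
    lines = ["Relevant memories:"]
    lines += [f"- {m}" for m in memories]
    lines.append(f"Current scene: {current_scene}")
    lines.append("Suggest one helpful action.")
    return "\n".join(lines)


bank = MemoryBank()
bank.add("wrote grocery list: eggs, milk, basil")
bank.add("meeting with Dana about the budget")
bank.add("cooked pasta and the pot boiled over")

scene = "entering grocery store"
top = bank.retrieve(scene, k=1)
prompt = build_prompt(scene, top)
```

The grocery-list memory ranks first because it is the only one sharing a word with the scene description. The language model then sees concrete past events instead of answering from nothing.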

The Probable Tech Stack Behind the Pitch

Today’s mobile NPUs offer double-digit TOPS, sufficient for on-device speech, scene classification and some vision-language tasks. Expect a pipeline that involves wake-word detection and ASR, a vision-language encoder for objects and text, a lightweight LLM for reasoning, and a vector database for memory. Spatial understanding relies on SLAM to anchor graphics in the world; gaze or head-pose estimation determines where you’re looking.

Many consumer-grade AR glasses use waveguides with microLED or LCoS-based projectors. Most have a modest field of view, usually 30 to 50 degrees, and need to hit high brightness levels to stay visible outdoors. That display budget competes directly with AI compute and radios for power, so power management is a first-class problem.

Can This Be Done in Actual AR Glasses Form Factor?

That is the crux of the skepticism. Real AR glasses have to balance optics, compute, battery, cameras and thermals without weighing down or overheating the wearer. For context, Ray-Ban-style smart glasses with cameras (but no see-through AR display) weigh around 48–50g; display-forward glasses like the Xreal Air 2 are approximately 70g; headsets that provide full spatial mapping or more (HoloLens 2, Apple’s latest) weigh hundreds of grams because they pack larger batteries and do more processing.

Running multimodal AI 24/7 is hungry work. Even with efficient models, a vision pipeline plus SLAM for inside-out tracking alongside a bright display can push power draw well past 2W; with a thin temple battery, that means hours rather than days of runtime unless the system offloads aggressively to the cloud, duty-cycles its sensors or dims its display. Heat then becomes a limit on both comfort and safety.
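
The power argument comes down to simple arithmetic, sketched below. Only the 2W active draw comes from the text above; the 1 Wh battery, the 0.2W idle draw and the 25% duty cycle are hypothetical values chosen to show the shape of the trade-off, not measurements of any product.

```python
def runtime_hours(battery_wh, draw_w):
    """Runtime in hours for a battery of battery_wh at a constant draw_w."""
    return battery_wh / draw_w


def average_draw_w(active_w, idle_w, duty_cycle):
    """Mean power when the heavy pipeline runs only duty_cycle of the time."""
    return duty_cycle * active_w + (1.0 - duty_cycle) * idle_w


BATTERY_WH = 1.0   # hypothetical temple battery capacity
ACTIVE_W = 2.0     # display + vision + SLAM, per the figure in the text
IDLE_W = 0.2       # hypothetical low-power standby draw

always_on = runtime_hours(BATTERY_WH, ACTIVE_W)
duty_cycled = runtime_hours(
    BATTERY_WH, average_draw_w(ACTIVE_W, IDLE_W, duty_cycle=0.25)
)
```

Under these assumptions the always-on case lasts half an hour, and running the heavy pipeline a quarter of the time stretches that to roughly an hour and a half. Either way the result is hours at best, which is why offloading, duty cycling and dimming matter so much.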

Weight, bulk and field of view are the trade-offs to watch. A product promising full-color, wide-FOV AR, multi-camera sensing and all-day AI in a sunglasses-like frame would have to beat all of those trade-offs at once. Independent teardowns and public demos should help establish where the compute lives, how heat is handled, and what the effective FOV and brightness look like in direct sunlight.

What ‘Learning’ Really Looks Like in Real-World Use

Expect a period of onboarding where the system creates a baseline: home/work, typical contacts, usual tasks and visual anchors in your environment.

Over time, it should learn to recognize recurring scenes (boiling pasta in your kitchen), objects (your meds) and sequences (you make coffee, then call your sister), and to offer interventions based on them: you mentioned that pots often boil over when you cook, so would you like a timer for the water?
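
One simple way to learn sequences like “coffee, then call your sister” is to count which event tends to follow which. The sketch below assumes events have already been labeled; `RoutineModel` and its `min_count` threshold are hypothetical, and a real assistant would likely use something richer than first-order transition counts.

```python
from collections import Counter, defaultdict


class RoutineModel:
    """Counts event-to-event transitions to guess the likely next step."""

    def __init__(self):
        self._next = defaultdict(Counter)

    def observe(self, events):
        """Record each consecutive pair in one day's ordered event list."""
        for prev, nxt in zip(events, events[1:]):
            self._next[prev][nxt] += 1

    def predict(self, current, min_count=2):
        """Return the most frequent follower, or None if evidence is thin."""
        followers = self._next.get(current)
        if not followers:
            return None
        event, count = followers.most_common(1)[0]
        return event if count >= min_count else None


model = RoutineModel()
model.observe(["wake", "make coffee", "call sister", "commute"])
model.observe(["wake", "make coffee", "call sister", "cook"])
model.observe(["wake", "make coffee", "read news"])

suggestion = model.predict("make coffee")  # follower seen on two of three days
unsure = model.predict("call sister")      # each follower seen only once
```

The `min_count` guard is the important design choice: the model stays silent until a pattern has repeated, which is one way to keep nudges from feeling random.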

Reliable anchoring is key. Unless the glasses can recognize the same countertop, doorframe or whiteboard under differing lighting conditions, overlays will drift off their targets or simply vanish. Memory quality matters too: Are reminders tied to concrete memories of past events, or are they generic guesses? The most important technique here may also seem the simplest: leading labs report that retrieval augmentation improves performance markedly, provided memory can be cleanly segmented and frequently refreshed.

Privacy and Safety Must Be Built In from the Start

A helper that sees everything must show some restraint. Best practice would involve on-device processing by default, clear indicators for camera/mic use (something far more conspicuous than the small light next to a laptop lens), physical kill switches, and granular retention controls (“keep this moment,” “forget this,” “auto-delete after 24 hours”). Frameworks such as NIST’s privacy guidance and the GDPR emphasize data minimization and purpose limitation: store summaries rather than raw video, treat any raw capture as sensitive, and never store the faces of passers-by without consent.
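
The retention controls quoted above map onto a small policy, sketched here. The `Memory` fields and the `apply_retention` helper are hypothetical names for illustration; the 24-hour default mirrors the “auto-delete after 24 hours” example.

```python
from dataclasses import dataclass

DEFAULT_TTL_S = 24 * 60 * 60  # "auto-delete after 24 hours"


@dataclass
class Memory:
    summary: str              # text summary only, never raw video
    created_at: float         # timestamp in seconds
    pinned: bool = False      # user said "keep this moment"
    forgotten: bool = False   # user said "forget this"


def apply_retention(memories, now, ttl_s=DEFAULT_TTL_S):
    """Return the memories that survive the retention policy.

    "Forget" always wins; pinned memories never expire; everything
    else is dropped once it is ttl_s seconds old.
    """
    kept = []
    for m in memories:
        if m.forgotten:
            continue
        if m.pinned or now - m.created_at < ttl_s:
            kept.append(m)
    return kept


now = 1_000_000.0
memories = [
    Memory("bought basil", created_at=now - 3600),
    Memory("old commute", created_at=now - 2 * DEFAULT_TTL_S),
    Memory("daughter's recital", created_at=now - 2 * DEFAULT_TTL_S, pinned=True),
    Memory("awkward call", created_at=now - 60, forgotten=True),
]
kept = [m.summary for m in apply_retention(memories, now)]
```

Only the recent memory and the pinned one survive. Checking `forgotten` first means an explicit deletion request overrides everything else, including a pin.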

Cloud offloading can boost performance, but it raises policy questions: Who has access to your memories? How are they encrypted and what if a server is breached?

Providers are increasingly offering end-to-end encryption and transparency reports; any “life-learning” product should ship with a technical privacy white paper, not just marketing materials.

How to Vet the Claims and Spot Real-World Proof

Look for live, unscripted demos featuring:

- Continuous AR overlays while walking

- Strong object recognition in bright sunlight and indoor light

- Hands-free voice commands in noisy spaces

- Immediate, relevant memory recall based on specific past events

- Multi-hour battery life using the display

Independent reviewers should be able to check the weight on a scale, measure the field of view and verify what actually runs on-device versus in the cloud.

If Pickle can deliver an assistant that actually learns your daily rhythm, without turning you into someone else’s data source, that would mark a watershed moment for AR. Until independent testing backs these claims with figures, treat the optics, power draw and AI pipeline with interested skepticism. The bar for “learning how you live” is high; the evidence ought to be, too.