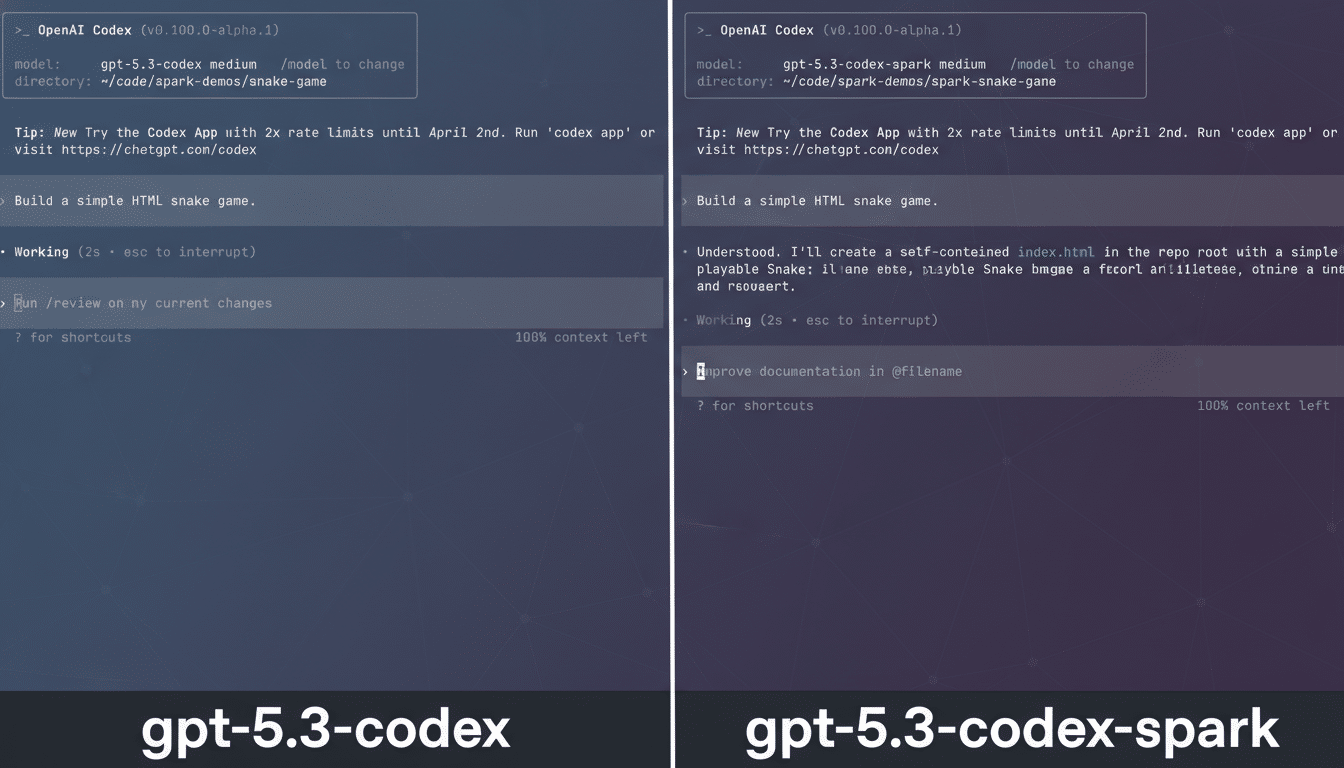

OpenAI is introducing GPT-5.3-Codex-Spark, a lighter, speed-tuned edition of its agentic coding model that runs on a dedicated Cerebras accelerator. The move pairs a new software variant with specialized silicon to slash response times for code generation and in-IDE collaboration—an explicit bid to make AI feel instantaneous for developers.

Positioned as a complement to heavier-duty Codex builds, Spark targets ultra-low latency and rapid iteration rather than marathon refactors or repository-wide reasoning. It arrives as a research preview for ChatGPT Pro users in the Codex app, and OpenAI is calling it the first milestone in a deepened hardware partnership with Cerebras.

OpenAI has framed the goal plainly: keep developers in flow. Executives teased the release ahead of time, hinting at a “special” addition to Codex that “sparks joy,” underscoring the emphasis on feel and speed over raw benchmark brawn.

Why A Dedicated Chip Matters For Modern Coders

Coding agents don’t just answer one question and stop; they loop. They propose a fix, run tests, read errors, revise, and try again. Each round-trip incurs both model compute and network time, so latency adds up quickly across iterations. For pair programming, the line between delightful and distracting is thin: industry UX guidance often cites roughly 100–200 ms as the window in which interactions feel “instant.” In practice, large models can exceed that once you account for first-token delay, decoding, and tool calls.
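The way these round-trips accumulate is easy to make concrete. The minimal Python sketch below models one agent iteration as first-token delay plus decode time plus a tool call plus network overhead, then multiplies by the number of iterations. The `StepTiming` fields and every timing figure are hypothetical placeholders chosen for illustration, not measurements of Spark or any other model.

```python
from dataclasses import dataclass


@dataclass
class StepTiming:
    """Hypothetical per-iteration costs for one agent round-trip."""
    first_token_ms: float   # delay before the first output token appears
    output_tokens: int      # tokens generated in this step
    tokens_per_sec: float   # decode speed once generation starts
    tool_ms: float          # e.g. running tests or a linter
    network_ms: float       # request/response overhead


def step_latency_ms(t: StepTiming) -> float:
    """Wall-clock time for one propose -> run -> read-errors round-trip."""
    decode_ms = t.output_tokens / t.tokens_per_sec * 1000.0
    return t.first_token_ms + decode_ms + t.tool_ms + t.network_ms


def loop_latency_ms(t: StepTiming, iterations: int) -> float:
    """Iterations run back to back, so per-step latency adds up linearly."""
    return iterations * step_latency_ms(t)


if __name__ == "__main__":
    # Illustrative numbers only: a slower and a faster serving setup.
    slow = StepTiming(first_token_ms=800, output_tokens=300, tokens_per_sec=60,
                      tool_ms=400, network_ms=100)
    fast = StepTiming(first_token_ms=150, output_tokens=300, tokens_per_sec=600,
                      tool_ms=400, network_ms=100)
    for name, timing in (("slow", slow), ("fast", fast)):
        print(f"{name}: {step_latency_ms(timing):.0f} ms/step, "
              f"{loop_latency_ms(timing, 5) / 1000:.1f} s for 5 iterations")
```

The tool and network costs are identical in both setups; only the model-side numbers change, yet the five-iteration loop drops from roughly 31 seconds to under 6 seconds under these assumed figures.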

That’s why OpenAI is steering Spark to dedicated silicon. The aim is to cut tail latency (p95 and p99), not just the median, because the occasional multi-second pause is what breaks concentration. Internal studies from developer platforms have found that faster, more fluid suggestions drive higher acceptance and throughput; GitHub, for example, has reported that developers using AI pair programming completed a task 55% faster in a controlled experiment. Lowering model and I/O jitter translates directly to fewer breaks in a developer’s flow.
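The gap between median and tail latency shows up clearly even in a small sample. The sketch below applies the nearest-rank percentile method to a hypothetical list of 20 response times; the values are invented to show how a single stall dominates the tail while leaving the median untouched.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Return the pct-th percentile using the nearest-rank method (0 < pct <= 100)."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need non-empty samples and 0 < pct <= 100")
    ordered = sorted(samples)
    # 1-based rank; multiplying before dividing keeps integer inputs exact.
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical response times in ms: mostly fast, with one multi-second stall.
latencies_ms = [120, 130, 125, 140, 135, 128, 122, 131, 138, 127,
                133, 126, 129, 137, 124, 132, 136, 121, 134, 2400]

for pct in (50, 95, 99):
    print(f"p{pct}: {percentile_nearest_rank(latencies_ms, pct)} ms")
```

Here the median is 130 ms and p95 is 140 ms, but p99 jumps to 2400 ms. With only 20 samples, p99 is simply the maximum; production dashboards compute these percentiles over far larger windows.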

Inside The Cerebras Wafer Scale Engine 3

Spark runs on Cerebras’ third-generation Wafer Scale Engine (WSE‑3), a single, wafer-sized chip carrying about 4 trillion transistors. Rather than stitching together dozens of discrete GPUs over external links, the wafer-scale approach keeps compute and on-chip memory in one massive, tightly connected fabric. The design minimizes interconnect overhead and reduces the need to move activations and weights off chip—factors that can meaningfully trim both average and worst-case latency for inference.

For compact and mid-size coding models, the ability to hold more of the working set on-chip helps avoid expensive trips to external memory. For agents that interleave short bursts of generation with tool use, the payoff is felt as faster first-token times and smoother, more predictable response curves. OpenAI says Cerebras’ architecture is particularly well-suited to workflows where low latency, not just peak throughput, is the primary design goal.
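A rough way to see why keeping weights close to compute matters: when a dense model generates one token at a time for a single user, each token typically requires reading roughly all of the model's weights, so memory bandwidth caps decode speed at about bandwidth ÷ weight bytes. The Python sketch below computes that simplified, roofline-style ceiling. It ignores KV-cache reads, compute limits, batching, and interconnect, and the model size and bandwidth figures are hypothetical round numbers, not published specifications for WSE-3 or any GPU.

```python
def max_decode_tokens_per_sec(params_billions: float,
                              bytes_per_param: float,
                              bandwidth_tb_per_s: float) -> float:
    """Upper bound on batch-1 decode speed for a dense, memory-bound model.

    Assumes every generated token streams all weights from memory once and
    ignores KV-cache traffic, compute time, and batching.
    """
    weight_bytes = params_billions * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_per_s * 1e12
    return bandwidth_bytes_per_s / weight_bytes


if __name__ == "__main__":
    # Hypothetical 20B-parameter model stored as 16-bit weights (2 bytes each).
    for label, bw in (("baseline memory path (3 TB/s)", 3.0),
                      ("10x faster memory path (30 TB/s)", 30.0)):
        tps = max_decode_tokens_per_sec(20, 2, bw)
        print(f"{label}: at most ~{tps:.0f} tokens/s")
```

Under these assumptions, a tenfold faster memory path raises the ceiling tenfold, from 75 to 750 tokens/s; real systems land below this bound.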

Two Modes For Codex Workflows, Built For Speed

OpenAI is positioning Codex along two complementary tracks: Spark for real-time collaboration and rapid prototyping, and heavier Codex builds for long-running tasks that demand deeper reasoning, extended context, or large-scale codebase changes. Think of Spark as the conversational co-pilot that autocompletes functions, drafts unit tests, or refactors a class on the fly—while the larger sibling handles multi-file migrations, design exploration, or complex, tool-rich plans that can run for minutes.

This split mirrors how developers actually work in modern IDEs and terminals: frequent, low-latency taps to stay in flow, punctuated by occasional heavyweight jobs. By anchoring the fast path on dedicated silicon, OpenAI is trying to make those micro-interactions reliably snappy without sacrificing the depth available when you need it.

What It Signals In The AI Silicon Race Today

The Spark launch deepens a multi-year OpenAI–Cerebras agreement valued at over $10 billion, signaling that major AI providers are willing to diversify beyond general-purpose GPUs when the workload demands it. Cerebras, which recently raised $1 billion at a reported $23 billion valuation and has discussed IPO ambitions, joins a crowded field of AI silicon: Google with TPU, Amazon with Inferentia and Trainium, Microsoft with Maia, and incumbent Nvidia with its GPU-based training and inference platforms.

The bet is straightforward: for interactive AI (coding assistants, copilots, and agentic tools), latency is product. Stack Overflow’s annual Developer Survey indicates that AI coding assistants are now part of mainstream workflows for a large share of professionals, and the providers that can sustain faster, steadier responses at scale stand to gain both usage and retention advantages.

Early Access And What To Watch As Spark Rolls Out

Spark is rolling out as a research preview to ChatGPT Pro users inside the Codex app. OpenAI describes it as a “daily productivity driver” rather than a replacement for its larger Codex builds. On the roadmap, expect iterative tuning around first-token latency, tokens per second, and tail behavior under tool use and network load, as well as evaluations that look beyond speed: compile success, unit-test pass rates, code security, and reproducibility.

Cerebras leaders have framed the collaboration as a proving ground for new interaction patterns made possible by faster inference, and OpenAI calls Spark the opening step in that direction. The watch item now is whether dedicated accelerators can consistently deliver the kind of real-world responsiveness—and cost per token—that shifts daily developer habits at scale.