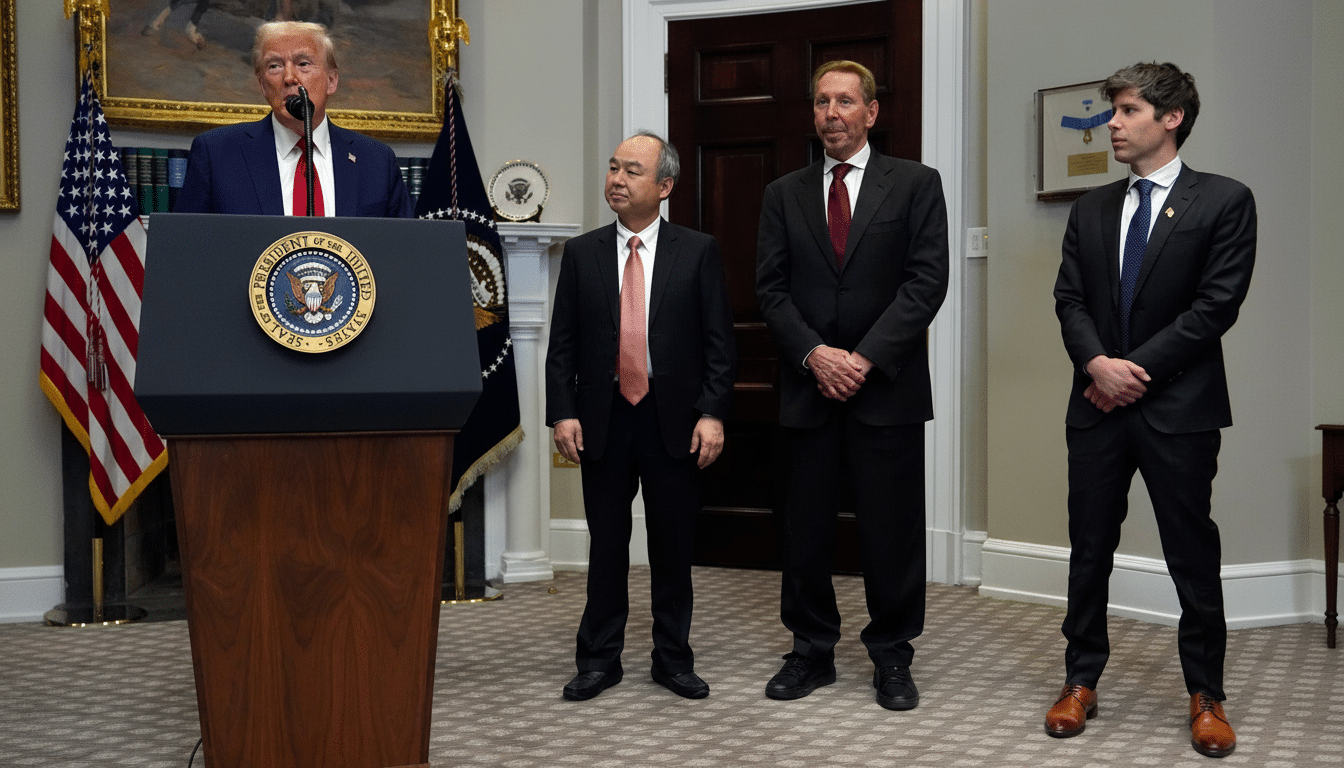

OpenAI is not done making game-changing partnerships, Sam Altman says. Fresh off headline agreements tied to its Stargate initiative and new chip pacts with Nvidia and AMD, the chief executive indicated that more big deals are coming, underscoring an arms race for compute, power, and capital that is reshaping the AI supply chain.

Altman Hints At A New Wave Of Partnerships

On a recent episode of the a16z Podcast, Altman hinted that the company will announce more large deals as he laid out an ambitious infrastructure strategy for its next generation of models and products. His point was clear: to meet demand for more capable systems, OpenAI needs a bigger coalition of partners, from the “electrons” all the way up to distribution.

The remark comes amid debate over how OpenAI is financing and sourcing its compute. The company has been stitching together complex, multi-pronged arrangements that include cloud access, direct hardware procurement, long-term buildouts and, crucially, capital that aligns incentives across vendors and investors.

Why OpenAI Is Pursuing Gigawatt-Scale Infrastructure

On CNBC, Nvidia CEO Jensen Huang recently described OpenAI’s progression toward becoming a “self-hosted hyperscaler,” saying Nvidia will, for the first time, sell full systems directly to OpenAI as well as to cloud providers. The move, which goes beyond GPUs to full-stack systems and networking, aims to give OpenAI more control over performance and cost at unprecedented scale.

Huang also put eye-watering numbers on the buildout, suggesting that one gigawatt of AI data center capacity could cost tens of billions of dollars once land, power, and cooling are factored in alongside the servers, networking, and storage themselves. At those levels, only multi-stakeholder financing and long-dated commitments can make the math work, especially for a company whose revenue, by media estimates, is in the mid-single-digit billions and rising sharply.

How The Recent OpenAI Deals Add Up And Interconnect

OpenAI’s Stargate effort, which involves Oracle and SoftBank, is a giant U.S. infrastructure project measured in multiple gigawatts of capacity, with a multiyear cloud commitment to Oracle reportedly worth hundreds of billions of dollars. The company also has a direct deal with Nvidia for at least 10 gigawatts of AI data centers, plus a separate agreement with AMD covering multiple gigawatts of capacity.

Meanwhile, the company has lined up expansions in the U.K. and across Europe while continuing to draw on capacity from Microsoft Azure and specialized providers like CoreWeave. The through-line is straightforward: diversify compute sources, lock up energy and land in bulk, and lean on cloud capacity in the near term while building a path to running more of the stack itself.

Why OpenAI’s Financing Structure Is Drawing Scrutiny

Not everybody is cheering the architecture of these deals. In recent days, Bloomberg has reported criticism that the deals are circular: chipmakers invest in OpenAI and then supply the hardware it buys. In practice, the mechanisms are variations on well-worn models from other capital-intensive tech sectors, including vendor financing, capacity prepayments, revenue sharing, and equity sweeteners, all techniques used to align incentives when demand is real but supply is thin.

There is precedent. Hyperscalers routinely sign power purchase agreements (PPAs) that run 10 years or more; major device makers prepay for foundry capacity at TSMC; and cloud customers regularly commit billions in reserved spend. OpenAI is applying a similar playbook to frontier AI workloads, which are extremely power- and network-intensive and require high-bandwidth memory, ultra-dense interconnects, and advanced liquid cooling.

What Might Be Next For OpenAI’s Partnership Strategy

If more OpenAI deals land soon, I’d expect them to target bottlenecks:

- Long-term power and grid interties with utilities, renewable developers, or transmission operators

- Deeper relationships with memory suppliers like SK Hynix or Micron

- Networking relationships with players like Nvidia, Broadcom, Arista, or Cisco

- Colocation and build-to-suit commitments from edge- or core-focused operators such as Digital Realty or Equinix

Government-backed incentives in certain regions might also emerge as part of a strategic push to localize compute and talent.

For chips, multi-sourcing can be strategic. Combining Nvidia’s platform with AMD’s accelerators lowers single-vendor risk and increases bargaining leverage, even if software tuning complicates the picture. Huang’s public surprise at the AMD deal is a reminder of how aggressively OpenAI has been hedging its supply so that model development can proceed unimpeded.

What This Means For The AI Market And Enterprise Users

If OpenAI continues to lock up gigawatt-scale capacity, the ripples will be felt far and wide. Other buyers could face tighter component supply and higher power prices in capacity-constrained regions, while cloud providers must balance serving OpenAI against their own AI efforts. For enterprises, the upside might be faster access to more powerful models; the downside is potential pricing volatility as infrastructure costs and power markets shift.

The larger bet, as Altman puts it, is that models yet to be developed will unlock enough economic value to amortize exceptional upfront costs. With demand spiking and partners lining up from the chip to the electron, OpenAI is signaling that its dealmaking cycle has only just begun.