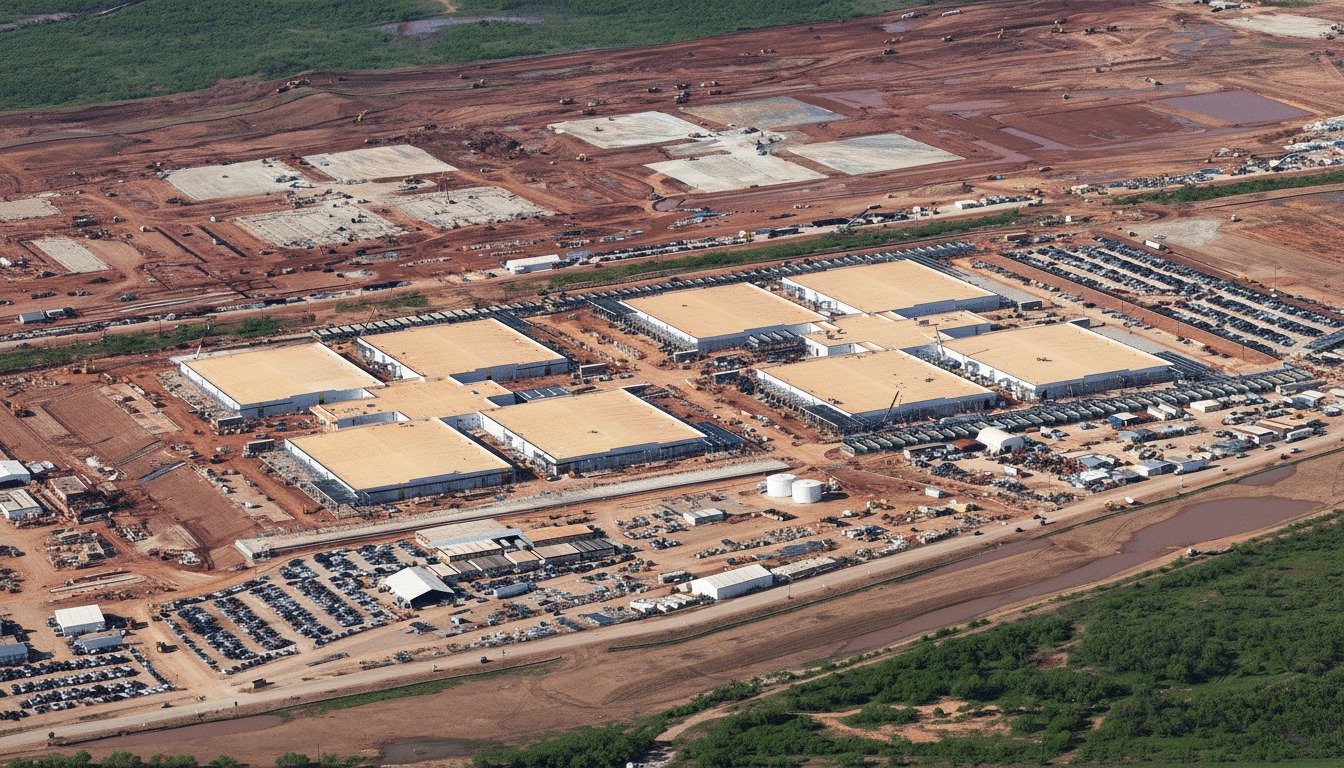

OpenAI is fast-tracking its Project Stargate buildout with five new AI data centers across the United States, a move the company claims will provide 7 gigawatts of power capacity toward a 10-gigawatt target. The biggest buildout in the company’s history will focus on training and serving frontier models, giving OpenAI a utility-scale compute footprint and spotlighting how the AI arms race is altering American energy, jobs, and infrastructure.

OpenAI’s infrastructure push reflects a simple reality: current AI systems demand a lot of compute, and inference requirements are growing faster than training needs. With model sizes, context windows, and enterprise usage all increasing, OpenAI is racing to secure dependable, low-latency capacity for testing and production workloads.

Centralization can reduce per-token costs and increase stability, but it concentrates demand for power, land, water, and suppliers in one place, hence the multi-site approach. The company frames the spending as foundational infrastructure rather than a bet on short-term results. While GPUs are the most visible bottleneck, power, network latency, and cooling are increasingly becoming the limiting factors. Building campuses simultaneously spreads risk, speeds deployment, and positions OpenAI to place compute near users and enterprise data.

Seven gigawatts announced, targeting ten by phase end

OpenAI is planning sites in Shackelford County, Texas; Doña Ana County, New Mexico; Lordstown, Ohio; Milam County, Texas; and an undisclosed Midwestern location. According to OpenAI, the five facilities together amount to 7 gigawatts of capacity; the company says Project Stargate is “ahead of schedule” and that the newest developments should put it “on the path to 10 gigawatts by the end of our current build phase.” To put that in context, 1 gigawatt can power roughly 750,000 U.S. homes. The average announced site therefore works out to about 1.4 gigawatts, well beyond the capacity typical of today’s hyperscale campuses, which peak in the low hundreds of megawatts.
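The back-of-the-envelope arithmetic behind those comparisons can be sketched in a few lines of Python. This is only an illustration using the figures quoted above; the even split across five sites is an assumption made for the average (OpenAI has not published per-site capacities), and the function names are hypothetical.

```python
# Figures quoted in the article; treat them as rough, rounded values.
ANNOUNCED_GW = 7          # capacity attributed to the five announced sites
TARGET_GW = 10            # stated goal for the current build phase
NEW_SITES = 5
HOMES_PER_GW = 750_000    # rule-of-thumb ratio cited in the article


def average_site_gw(total_gw: float, sites: int) -> float:
    """Average capacity per site, ASSUMING an even split (not confirmed)."""
    return total_gw / sites


def homes_equivalent(gw: float, homes_per_gw: int = HOMES_PER_GW) -> int:
    """Approximate number of U.S. homes the given capacity could power."""
    return round(gw * homes_per_gw)


def remaining_gw(announced_gw: float, target_gw: float) -> float:
    """Capacity still needed to reach the stated target."""
    return target_gw - announced_gw


if __name__ == "__main__":
    print(f"Average per site: {average_site_gw(ANNOUNCED_GW, NEW_SITES):.1f} GW")
    print(f"Homes equivalent: {homes_equivalent(ANNOUNCED_GW):,}")
    print(f"Gap to target:    {remaining_gw(ANNOUNCED_GW, TARGET_GW)} GW")
```

Under these assumptions, the announced capacity averages 1.4 gigawatts per site, corresponds to roughly 5.25 million homes, and leaves 3 gigawatts to reach the target.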

Reaching those numbers will likely involve a combination of phased buildouts, long-term power purchase agreements, significant grid interconnections, and possibly onsite generation. OpenAI projects more than 25,000 onsite jobs across the five locations, with additional work flowing through the engineering, construction, and local services ecosystems.

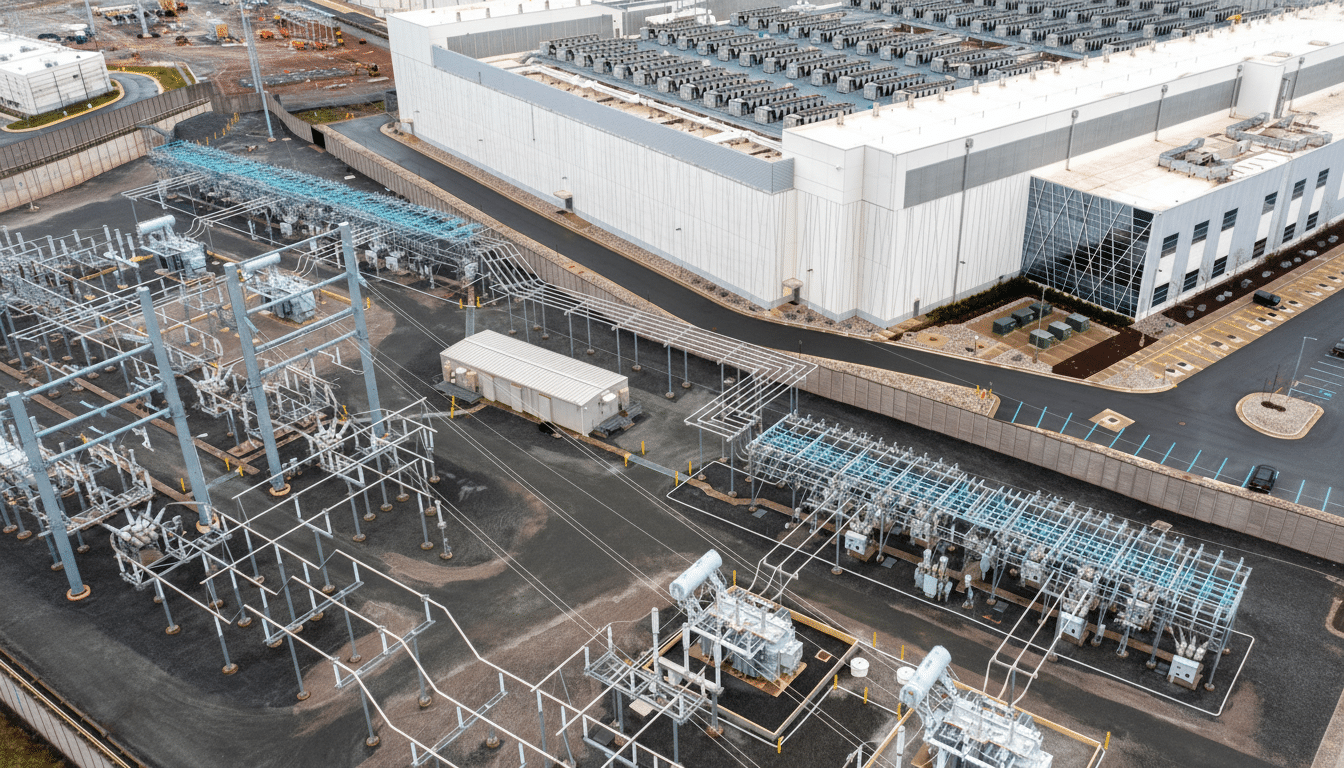

The impact on the supply chain is equally significant: high-voltage transformers, switchgear, chillers, advanced cooling systems, fiber backbones, and backup power all face long lead times. Alongside this new infrastructure investment come upgrades to regional grids, which can stimulate broader business development. Sufficient skilled labor, water rights, transmission capacity, and cooperative permitting will all be important factors in site selection. Collaboration with public utilities and regional grid operators will be key to aligning power delivery with construction schedules and minimizing stranded capital.

Supply-chain pressure points:

- High-voltage transformers
- Switchgear
- Chillers
- Advanced cooling systems
- Fiber backbones
- Backup power

Site-selection factors:

- Skilled labor availability
- Water rights
- Transmission capacity
- Cooperative permitting

Power, grid, and water constraints shape data centers

AI’s power appetite is drawing more attention. The International Energy Agency expects electricity demand from data centers, AI, and crypto to reach almost 800 terawatt-hours globally by 2026, approaching the current consumption of an advanced economy. In the U.S., lengthy interconnection queues add to the complexity. Research by Lawrence Berkeley National Laboratory indicates that generation and storage projects may wait a few years to be granted access to the grid, underscoring the importance of timely and coordinated planning.

Water is another pressure point. Many high-density data halls use evaporative cooling, which can cut electricity use while increasing freshwater use. Academic studies estimate that training a large model could use around 300,000 liters of water once upstream cooling at power plants and data centers is factored in. Some operators are already using warm-water liquid cooling, heat reuse, and non-potable water systems, but local hydrology and climate risks remain significant considerations.

While OpenAI has yet to release details on the exact generation mix for its new sites, the industry is moving toward long-term renewable contracts supplemented by firm, around-the-clock generating capacity. That could mean a diversified portfolio of emission-free power, battery storage, and perhaps advanced nuclear or other dispatchable low-emission resources, so that physical power supply matches real-time demand and reliability gaps are closed without increasing emissions.

What OpenAI’s expansion means for AI infrastructure

Massive, geographically distributed compute is fast becoming table stakes for frontier AI. Beyond raw GPU supply, leadership depends on data-center-grade networking, high-bandwidth memory availability, and power-dense cooling — all anchored by dependable, scalable energy. OpenAI’s Stargate expansion is a bid to lock in those inputs at a national scale, reducing latency for enterprise deployments and building resilience against supply shocks.

But the broader ecosystem, from chipmakers and cloud platforms to utilities and regulators, will shape how quickly these campuses come online. If OpenAI hits its 10-gigawatt goal, it will set a new bar for AI infrastructure; along the way, it will raise expectations on efficiency, transparency, and community benefits.