OnePlus has temporarily disabled its AI Writer after users reported that the feature wouldn’t generate text relating to politically sensitive subjects such as Tibet, the Dalai Lama, and India’s Arunachal Pradesh. The company acknowledged the issue in a post on its Community forum, describing it as a technical problem that it is investigating and working to resolve.

What Exactly Was Blocked And How It Played Out

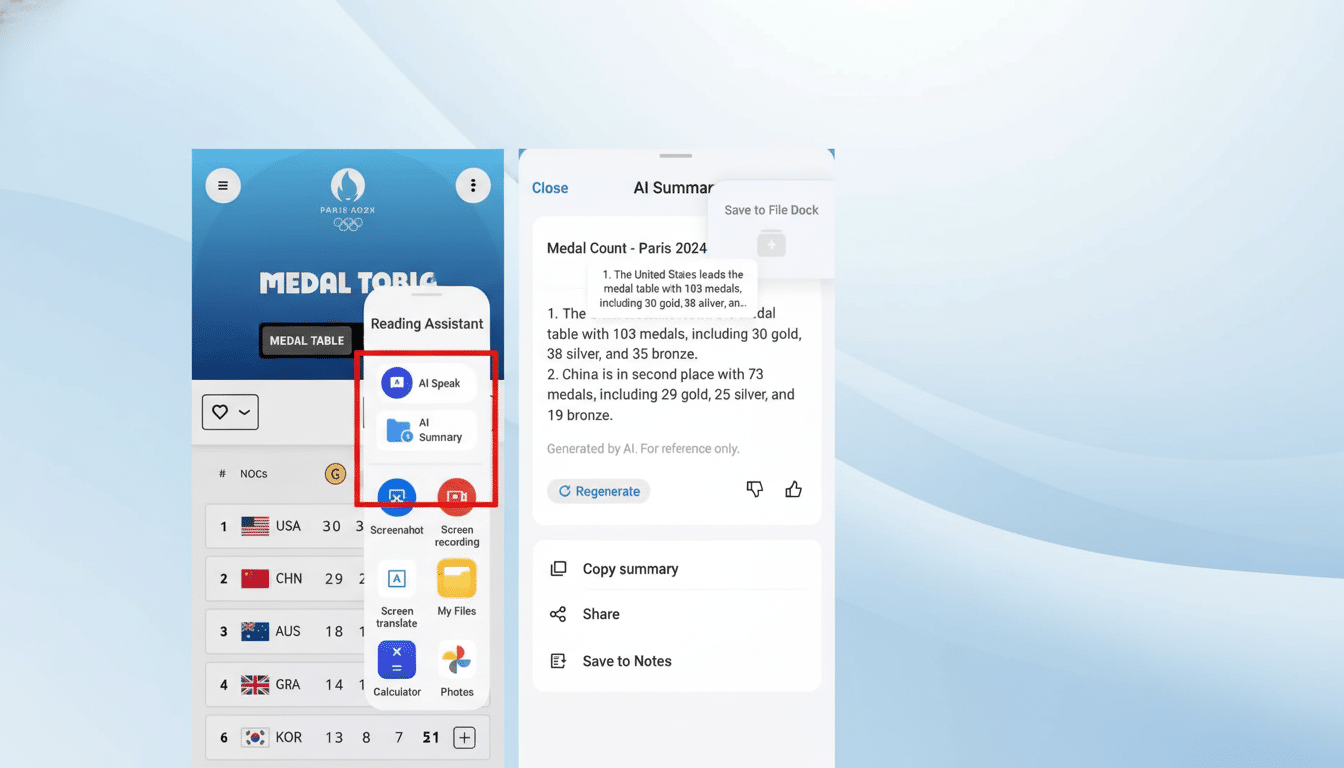

Complaints came from users on Reddit and X, who posted screenshots of the AI Writer rejecting prompts with a message asking them to try typing something else, in effect treating the prompt as a dud. Tests indicated that the rejection was not confined to a specific market, suggesting that region-specific content filters were being applied either universally or inconsistently.

Other users said the same or similar prompts had worked in the past, suggesting either a recent configuration change or a subtle shift in moderation. The behavior pointed to filtering rather than poor model performance, since the tool returned a refusal message and not an error or an off-topic response.

How Local Rules Collide With Global Smartphones

AI features shipped on international devices must often navigate a variety of legal and cultural guardrails. Tech platforms in mainland China must adhere to strict content rules, while international markets expect more leeway on political discourse. If a product is developed across more than one region, restrictions intended for one market can leak into others.

The same kind of friction has been seen across the tech industry. Apple suppresses the Taiwan flag emoji on iPhones in China, and TikTok has come under repeated scrutiny over which politically sensitive content is visible. In enterprise software, Zoom faced criticism after meetings to commemorate Tiananmen were disrupted. The common thread is that global services can bear the imprint of local rules in ways that confound users beyond those jurisdictions.

Why AI Guardrails Can Fail And Block Legitimate Use

Today’s AI writers are built on top of large language models, which are wrapped in layers of safety features. Common stacks include keyword classifiers, semantic filters, country or locale detection, and policy engines that decide when to respond, refuse, or redirect. If any of those layers is too broad, mislabels prompts, or applies the wrong regional policy, legitimate queries can be blocked.
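To make the layering concrete, here is a minimal sketch in Python of how such a stack might be chained. Every name in it (`Action`, `keyword_layer`, `semantic_layer`, `decide`, the `xx-STRICT` locale, and the placeholder terms) is an assumption made for illustration; nothing here reflects how OnePlus or any other vendor actually implements its filters.

```python
from enum import Enum
from typing import Callable, Optional, Sequence


class Action(Enum):
    RESPOND = "respond"
    REFUSE = "refuse"
    REDIRECT = "redirect"


# A layer inspects (prompt, locale) and returns a verdict, or None to pass.
Layer = Callable[[str, str], Optional[Action]]

# Hypothetical per-locale blocklist; the locale code and term are placeholders.
HYPOTHETICAL_BLOCKLIST = {"xx-STRICT": {"placeholder-topic"}}


def keyword_layer(prompt: str, locale: str) -> Optional[Action]:
    """Refuse if any blocked term for this locale appears in the prompt."""
    blocked = HYPOTHETICAL_BLOCKLIST.get(locale, set())
    if any(term in prompt.lower() for term in blocked):
        return Action.REFUSE
    return None


def semantic_layer(prompt: str, locale: str) -> Optional[Action]:
    """Stand-in for a learned classifier; here just a fixed phrase check."""
    if "placeholder-redirect" in prompt.lower():
        return Action.REDIRECT
    return None


def decide(prompt: str, locale: str, layers: Sequence[Layer]) -> Action:
    """Policy engine: the first layer that returns a verdict wins."""
    for layer in layers:
        verdict = layer(prompt, locale)
        if verdict is not None:
            return verdict
    return Action.RESPOND


LAYERS = [keyword_layer, semantic_layer]
```

In this sketch the same prompt is refused under the `xx-STRICT` locale and answered under any other, which is why a mistake in any single layer, or in the locale passed to it, is enough to change the outcome.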

Two technical situations can produce this kind of behavior. First, a list-based filter can be tuned too aggressively, so that phrases like “Dalai Lama” get caught in hard blocks no matter the context. Second, geo- or language-based cues can route requests to the wrong policy profile, for example when China’s moderation set is inadvertently applied in India, Europe, or North America.
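Both failure modes can be reproduced in a few lines. The sketch below is purely illustrative: the `PROFILES` table, the region codes, and the function names are assumptions, and the fallback bug is a hypothetical example of misrouting, not a description of what happened inside the AI Writer.

```python
# Hypothetical policy profiles keyed by upper-case region code.
PROFILES = {
    "CN": {"dalai lama"},  # strict profile with a hard-blocked phrase
    "IN": set(),
    "US": set(),
}


def buggy_select_profile(region: str) -> set:
    """Failure mode 2: an unrecognized region falls back to the strictest
    profile instead of a neutral default."""
    return PROFILES.get(region, PROFILES["CN"])


def is_blocked(prompt: str, region: str) -> bool:
    """Failure mode 1: a plain substring match ignores context entirely,
    so any prompt that mentions a listed phrase is refused."""
    terms = buggy_select_profile(region)
    return any(term in prompt.lower() for term in terms)


prompt = "Write a short biography of the Dalai Lama"
print(is_blocked(prompt, "IN"))  # region recognized, permissive profile
print(is_blocked(prompt, "in"))  # lower-case code is not recognized
```

Here the lower-case region code `"in"` misses the lookup table and silently inherits the strict profile, so a harmless biography request is refused. A safer design would normalize the region code and fall back to a neutral profile.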

The split between on-device and cloud processing matters here too. If prompts are checked centrally on a server, a single configuration change can loosen or tighten what millions of devices will accept, instantly. On-device models, by contrast, are updated through firmware or app releases, so accidental shifts are less likely but slower to correct. It’s notable that the AI Writer suddenly stopped functioning on some OnePlus phones, which suggests the check is likely server-side. OnePlus has not said officially what pipeline the AI Writer uses, though the feature appears to rely in large part on cloud processing.
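The difference in blast radius can be shown with a toy model. In this sketch, `PolicyServer`, `CloudCheckedDevice`, and `OnDeviceModel` are hypothetical classes invented for illustration, and the blocked phrase is a placeholder; it assumes a simple substring check and says nothing about OnePlus's real architecture.

```python
class PolicyServer:
    """Central policy store that cloud-checked devices consult per request."""

    def __init__(self, blocked=()):
        self.blocked = set(blocked)


class CloudCheckedDevice:
    """Looks up the live server policy every time a prompt is submitted."""

    def __init__(self, server: PolicyServer):
        self.server = server

    def allows(self, prompt: str) -> bool:
        return not any(t in prompt.lower() for t in self.server.blocked)


class OnDeviceModel:
    """Carries a snapshot of the policy that changes only on an update."""

    def __init__(self, bundled_blocked=()):
        self.blocked = frozenset(bundled_blocked)

    def allows(self, prompt: str) -> bool:
        return not any(t in prompt.lower() for t in self.blocked)

    def install_update(self, new_blocked) -> None:
        self.blocked = frozenset(new_blocked)


server = PolicyServer()
fleet = [CloudCheckedDevice(server) for _ in range(3)]
local = OnDeviceModel(server.blocked)

# One server-side config change affects every cloud-checked device at once.
server.blocked.add("placeholder topic")
```

After the single `server.blocked.add(...)` call, every device in `fleet` refuses prompts containing the placeholder phrase, while `local` keeps its old behavior until `install_update` is called. The same property that makes a server-side mistake spread instantly also makes it quick to roll back.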

Trust And Transparency In The Crosshairs

Optics can be as damning as reality for AI rollouts. If users perceive that an assistant is censoring topics to fit the preferences of a government or a corporation, confidence can collapse rapidly. According to research from Pew, 52 percent of Americans say they are more worried than enthusiastic about the rise of AI, underscoring how missteps can deepen skepticism.

The solution here isn’t just technical. Providing clear release notes, policy summaries, and region toggles helps users understand whether a refusal is a bug or compliance-related. Developers should publish safety cards, model system prompts, and refusal rationales to provide guardrails that are visible and predictable.

What OnePlus Needs To Do Next To Restore Trust

The first step is to fix the policy routing and restore the AI Writer. Beyond that, OnePlus could implement a transparent rejection flow that describes which guidelines were triggered, an option to report false positives, and a regional policy selector, or at least an indication of when region-based rules apply. Independent audits, or even a brief technical report, would give users everywhere further assurance that the tool is not hard-coded to suppress certain points of view beyond what the law requires in their respective territories.

The company’s wider AI strategy also matters. OnePlus has been rolling out AI features such as AI Eraser and writing assistance in an attempt to match its rivals. The tools may be packed with features, but reliability will decide whether they become part of everyday workflows. Consistent behavior across markets, and honest communication when things go awry, will be the difference between a valuable partner and a headline liability.

For now, the AI Writer is offline while OnePlus works to correct the problem. A staged return seems likely, probably starting in some regions or in beta, ideally along with clearer guidelines on what’s off-limits and why.