Nvidia is requiring customers in China to pay for its H200 artificial intelligence accelerators in full upfront, a sharp departure from normal trading terms and a sign of the geopolitical risk and supply constraints surrounding top-tier AI chips. The orders are nonrefundable and cannot be amended, even though approvals from U.S. and Chinese authorities remain unsettled, Reuters reported.

The company did not immediately comment publicly, but the move underscores a desire to avoid a repeat of what happened when Washington began enforcing export controls on its China-specific H20 products, leading to what Nvidia described as a $5.5 billion inventory write-down. In a market where demand for training-grade GPUs outpaces supply, the stricter terms shift regulatory and cancellation risk from Nvidia to buyers while protecting Nvidia’s production cadence.

Why Nvidia Is Making Payment Terms Stricter

Upfront, nonrefundable terms are rare for mainstream enterprise hardware, but they are not unheard of in constrained, high-stakes markets where supply is rationed and the risk of policy changes is pronounced. Chinese customers may still use commercial insurance or asset collateral, structures that can include trade credit insurance or bank guarantees, to meet prepayment requirements, but the partial deposits they previously had access to “are no longer the most common,” Reuters reported.

The thinking is simple: if export licenses or local approvals are not granted, the financial risk rests with buyers, not the supplier. For Nvidia, this means less risk of stranded inventory and less haggling over pricing, while production slots go to the customers most committed to taking delivery under the changing rules.

Intense Demand Shows No Sign of Easing

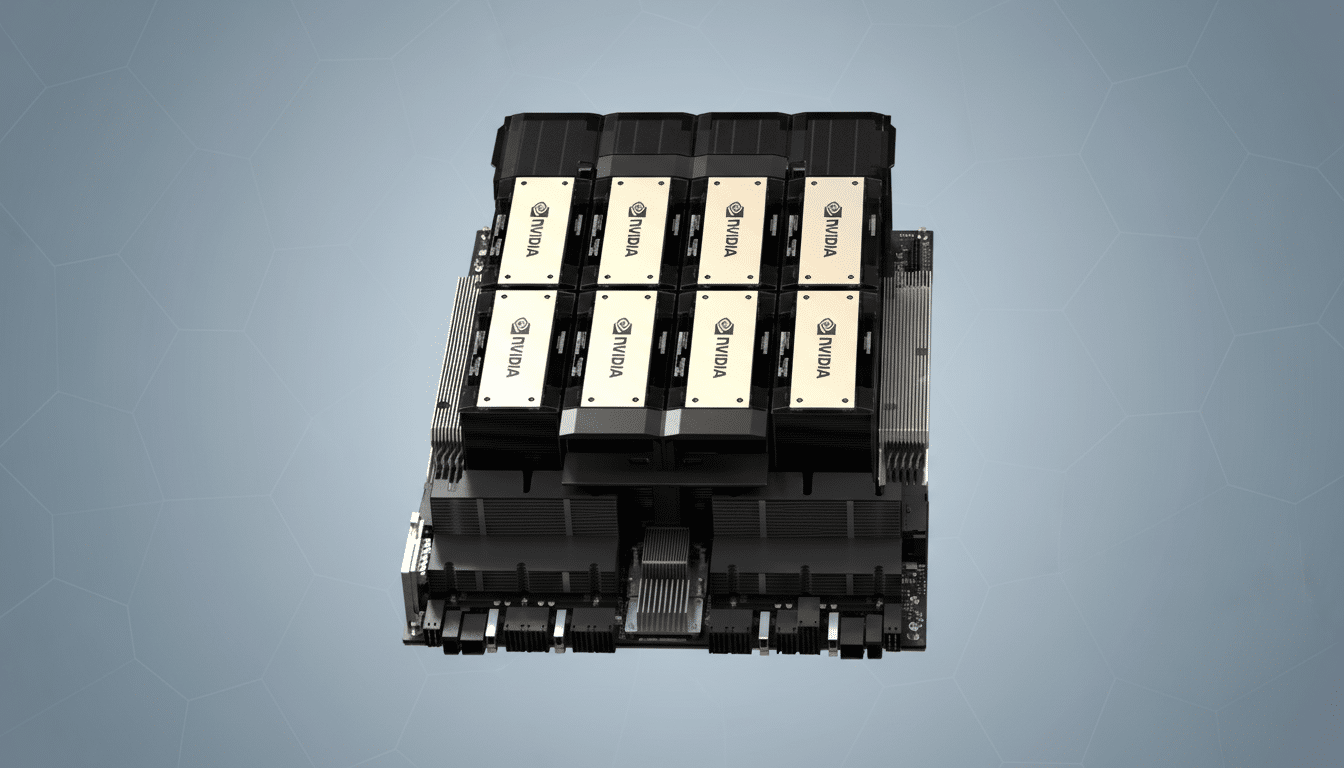

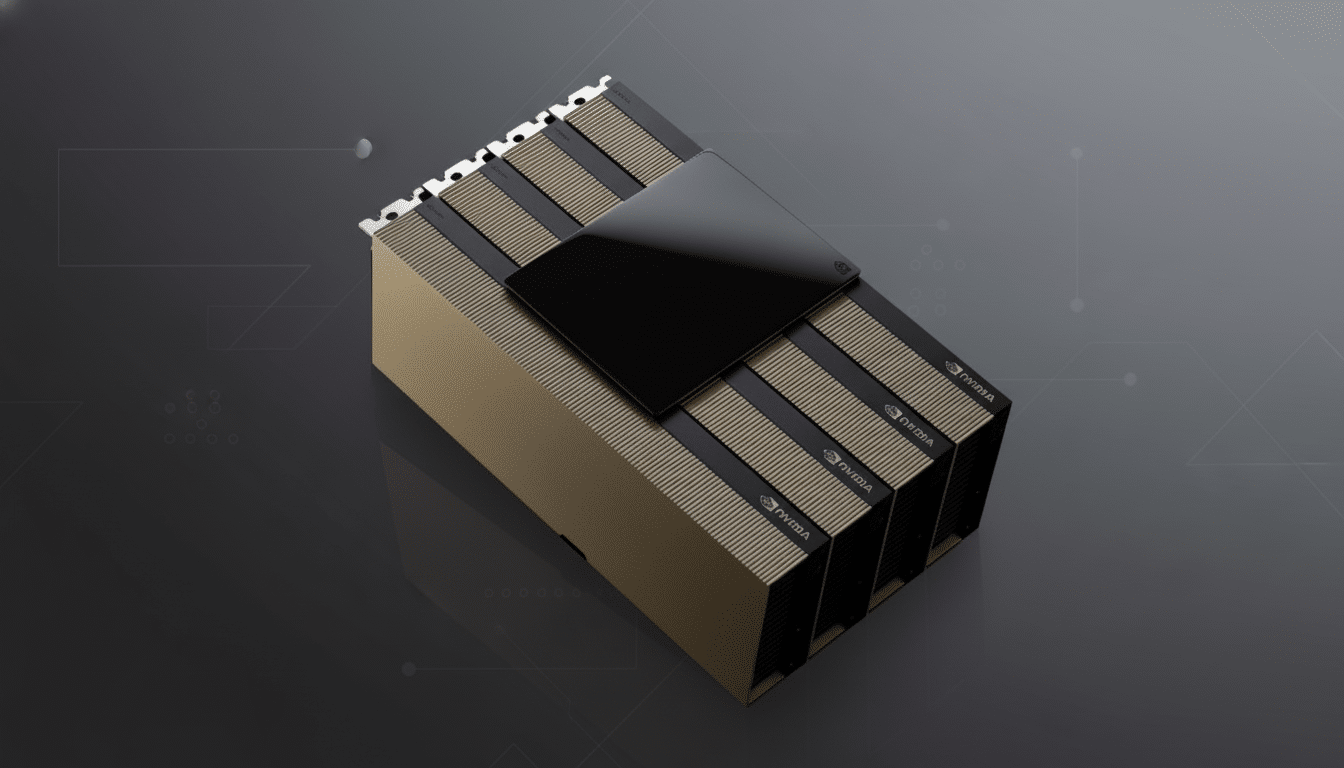

Appetite for the H200 is robust, even with the tougher terms. Nvidia has been ramping output after Chinese companies placed orders for more than 2 million units to be delivered within a year, according to people familiar with the matter. The H200, based on the Hopper architecture with HBM3e memory, is prized for workloads such as large language model training, where memory bandwidth and capacity matter as much as raw compute.

The hyperscalers and internet platforms in China, names like Alibaba, Baidu, Tencent, and ByteDance, are continuing to scale up their AI infrastructure as they deploy foundation models and inference services. Brokers are said to be quoting H200 packages above previous H100 prices, reflecting both the enhanced performance and tight supply. Meanwhile, TrendForce has stressed that HBM supply remains tight even as SK hynix, Samsung, and Micron all continue to expand capacity, another ingredient for long lead times.

Beijing’s Conditional Greenlight for H200 Sales in China

China is expected to green-light sales of the H200 with restrictions on use, in an attempt to prevent the chips from powering the military, state-owned entities and critical infrastructure, Bloomberg has reported. That caveat raises the bar for compliance: buyers may now be required to certify end use, maintain chain-of-custody records, and prepare for audits. Such guardrails reflect worldwide attempts to keep frontier AI compute out of sensitive applications while letting commercial innovation go forward.

The balancing act is trickier for Nvidia. The company would need to convince regulators in both countries that it can police end markets while still selling to commercial customers racing to build AI capabilities. Its upfront-payment clause suggests Nvidia is prioritizing orders that are predictable from a compliance standpoint, even if that adds some friction to the sales process.

What Upfront Payments Mean for Chinese H200 Buyers

Prepaying for expensive accelerators locks up capital and increases currency exposure. Companies may have options, such as letters of credit, collateralized facilities or insurance (from providers like Sinosure), to mitigate cash flow and delivery risk. Others could hedge by spreading purchases across several suppliers and product lines.

Alternatives exist but carry trade-offs. Domestic chips like the Huawei Ascend line are getting better (as are the software stacks around them), but Nvidia’s CUDA ecosystem and tooling continue to be the reference standard for leading-edge training. Export controls on accelerators from other international vendors further limit substitution at comparable performance.

Strategic Outlook for Nvidia, Buyers, and Supply Risks

If cross-border approvals solidify and logistics firm up, Nvidia could relax its terms; if not, prepaid, no-cancellation orders could become standard for high-risk destinations.

What to look for:

- Regulatory guidance from the U.S. Commerce Department

- The nature of China’s end-use restrictions

- HBM capacity expansions

- Nvidia’s commentary around channel health and lead times on upcoming earnings calls

For now, the message is clear: the most sought-after AI chips on the planet are formally available to commercial Chinese buyers, but only on terms that offer no wiggle room and reflect a world where tech supply lines are bumpy and the appetite for compute is insatiable.