Nvidia is releasing a new set of “physical AI” research tools, including Alpamayo‑R1, an open reasoning vision‑language model built specifically for autonomous driving, and a developer toolkit it calls the Cosmos Cookbook. The releases, announced at the NeurIPS AI conference, are meant to help researchers prototype, test, and deploy end‑to‑end driving systems that perceive complex scenes, make decisions about them, and act safely in the real world.

The company describes Alpamayo‑R1 as a first‑of‑its‑kind vision‑language‑action model for driving. It is built on Nvidia’s Cosmos Reason model family, which is designed to “think before it answers” by breaking a task into intermediate reasoning steps. This is an increasingly popular strategy in high‑stakes autonomy, where brittle heuristics tend to fail.

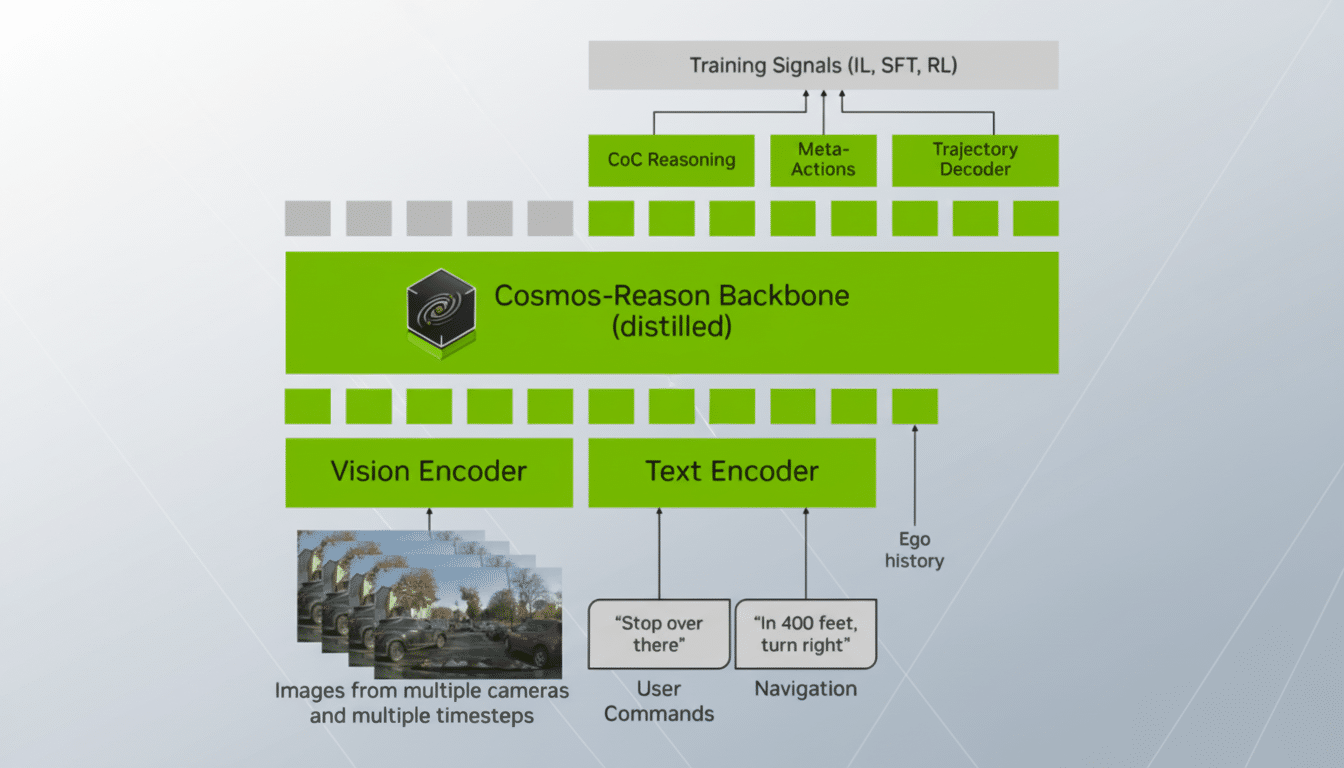

Inside Alpamayo‑R1’s reasoning stack for autonomous driving

Conventional driving stacks detect lanes, traffic lights, and other road users, then hand those outputs to a separate planner. Alpamayo‑R1 instead feeds images, text prompts, and scene context into a single model that produces both a natural‑language rationale and an action (e.g., a high‑level maneuver or a trajectory hint). Many labs see this coupling of “why” with “what to do” as important for handling the long tail of rare, nuanced scenarios.

By inheriting the Cosmos Reason scaffolding, the model can chain intermediate observations (e.g., a pedestrian is occluded by a van, the crosswalk ahead is partially blocked) before proposing an action. In a research setting, this makes debugging easier than with black‑box policies and provides a structured way to evaluate both perception fidelity and decision quality.
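Alpamayo‑R1’s actual output format isn’t described here, so the following is only a sketch of why structured traces make debugging easier. It assumes a hypothetical `ReasoningTrace` record that holds ordered observations and a single action label. It also adds a toy keyword rule, `flag_inconsistent` (likewise hypothetical), which flags traces that note a hazard but still pick an assertive action.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical schema for illustration only; not Alpamayo-R1's real output format.
@dataclass
class ReasoningTrace:
    observations: List[str] = field(default_factory=list)  # intermediate steps, in order
    action: str = ""                                         # e.g. "slow_down", "proceed"

    def render(self) -> str:
        """Format the chain as numbered steps followed by the chosen action."""
        steps = [f"{i}. {obs}" for i, obs in enumerate(self.observations, start=1)]
        return "\n".join(steps + [f"Action: {self.action}"])


# Toy debugging rule (hypothetical): hazard words that should argue against an assertive action.
HAZARD_TERMS = ("occluded", "blocked", "pedestrian")
ASSERTIVE_ACTIONS = {"proceed", "accelerate"}


def flag_inconsistent(trace: ReasoningTrace) -> List[str]:
    """Return the observations that mention a hazard when the chosen action is assertive."""
    if trace.action not in ASSERTIVE_ACTIONS:
        return []
    return [obs for obs in trace.observations
            if any(term in obs.lower() for term in HAZARD_TERMS)]


if __name__ == "__main__":
    trace = ReasoningTrace(
        observations=["pedestrian is occluded by van", "crosswalk ahead is partially blocked"],
        action="proceed",
    )
    print(trace.render())
    print(flag_inconsistent(trace))  # both observations are flagged for this action
```

A keyword rule is far too crude for real evaluation. The point is that once reasoning is exposed as discrete steps, checks like this can be written and run over large batches of traces, which a black‑box policy does not allow.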

According to Nvidia, Alpamayo‑R1 and reference checkpoints are available on GitHub and Hugging Face, along with inference notebooks that simplify bring‑up on common GPU instances. The company also points to open‑source driving datasets and simulators from both academia and industry that researchers can use alongside the model.

Why this matters for Level 4 autonomy and safety

SAE Level 4 systems handle all driving within a defined operational domain without relying on a human fallback. The hard problem is less raw perception than “common‑sense” judgment: inferring what other road users intend to do, reasoning about occlusions, and striking the right balance between assertiveness and hesitation. Open reasoning models let researchers probe these decisions, inspect the model’s intermediate reasoning, and iterate faster on edge‑case handling.

The approach reflects a broader push in the field. Wayve has introduced language‑grounded driving models that narrate their understanding of a scene; Tesla has pioneered end‑to‑end neural planners trained on fleet video; and academic benchmarks such as nuScenes and Argoverse are increasingly moving toward closed‑loop metrics that reward safe, comfortable driving rather than bounding‑box accuracy alone. Nvidia’s entry brings its widely used GPU and tooling ecosystem to this emerging approach.

Data scale remains decisive. A single development vehicle can generate several terabytes a day, yet rare events still have to be synthesized because they seldom appear in real logs. Combining reasoning‑first architectures with synthetic data generation is emerging as a common recipe for closing the coverage gap without logging endless road miles.

The Cosmos Cookbook and open tooling for autonomous systems

Alongside the model, Nvidia’s Cosmos Cookbook offers step‑by‑step workflows for data curation, synthetic scene generation, post‑training alignment, and evaluation. The guides include recipes for filtering long‑tail events, balancing datasets to discourage overly cautious policies, and stress‑testing with adversarial perturbations. Many labs already treat these practices as table stakes for safety‑critical applications.
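The Cookbook’s actual recipes aren’t reproduced here. As a generic illustration only, the sketch below assumes each training sample carries a maneuver label and a scenario tag, both hypothetical. It uses inverse‑frequency weighting, a common stand‑in technique rather than Nvidia’s documented method, to keep a dominant label such as “stop” from pushing a policy toward over‑caution. A simple frequency threshold then pulls out rare scenarios.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def inverse_frequency_weights(labels: Sequence[Hashable]) -> List[float]:
    """Per-sample weights so that every label class carries equal total weight.

    weight = n / (k * count[label]), where n is the number of samples and k the
    number of distinct labels; each class sums to n / k and all weights sum to n.
    """
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return [n / (k * counts[label]) for label in labels]


def rare_scenario_indices(tags: Sequence[Hashable], max_fraction: float) -> List[int]:
    """Indices of samples whose scenario tag makes up at most max_fraction of the data."""
    counts = Counter(tags)
    n = len(tags)
    return [i for i, tag in enumerate(tags) if counts[tag] / n <= max_fraction]


if __name__ == "__main__":
    # Hypothetical maneuver labels in which "stop" dominates.
    labels = ["stop"] * 6 + ["proceed"] * 2
    print(inverse_frequency_weights(labels))
    # Hypothetical scenario tags; the two rare ones are selected for extra attention.
    tags = ["highway"] * 8 + ["work_zone", "cyclist_merge"]
    print(rare_scenario_indices(tags, max_fraction=0.15))
```

In a training loop these weights would typically scale each sample’s loss or drive a weighted sampler. Reweighting only rebalances what is already in the data, which is why the text pairs it with synthetic generation of rare events.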

Crucially, the company is promoting standardized evaluation. The Cookbook describes how to report reasoning traces, intervention‑free kilometers in simulation, and comfort metrics such as jerk and lateral acceleration. This aligns with efforts by SAE International and MLCommons to standardize autonomy benchmarks so that results can be compared directly across teams and model families.
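The Cookbook’s exact metric definitions aren’t given here. As a rough illustration, assuming a planar trajectory of x/y positions in meters sampled at a fixed interval `dt`, peak lateral acceleration and peak longitudinal jerk can be estimated with finite differences. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _diff(values: Sequence[float], dt: float) -> List[float]:
    """Forward finite difference; the result is one sample shorter than the input."""
    return [(b - a) / dt for a, b in zip(values, values[1:])]


def comfort_metrics(xs: Sequence[float], ys: Sequence[float], dt: float) -> Tuple[float, float]:
    """Estimate (peak |lateral acceleration| in m/s^2, peak |longitudinal jerk| in m/s^3)
    for a planar trajectory given as x/y positions in meters, sampled every dt seconds."""
    vx, vy = _diff(xs, dt), _diff(ys, dt)      # n-1 velocity samples
    ax, ay = _diff(vx, dt), _diff(vy, dt)      # n-2 acceleration samples
    speed = [math.hypot(u, w) for u, w in zip(vx, vy)]

    # Lateral acceleration is the part of acceleration perpendicular to the
    # velocity: a_lat = (vx*ay - vy*ax) / |v|. Near-zero speeds are skipped
    # because the heading is undefined there.
    lateral = [
        (vx[i] * ay[i] - vy[i] * ax[i]) / speed[i]
        for i in range(len(ax))
        if speed[i] > 1e-6
    ]

    # Longitudinal jerk: second time derivative of speed.
    jerk = _diff(_diff(speed, dt), dt)

    peak_lateral = max((abs(a) for a in lateral), default=0.0)
    peak_jerk = max((abs(j) for j in jerk), default=0.0)
    return peak_lateral, peak_jerk


if __name__ == "__main__":
    # A car circling a 20 m radius at a constant 10 m/s, sampled at 100 Hz.
    r, v, dt = 20.0, 10.0, 0.01
    omega = v / r
    xs = [r * math.cos(omega * i * dt) for i in range(200)]
    ys = [r * math.sin(omega * i * dt) for i in range(200)]
    print(comfort_metrics(xs, ys, dt))  # lateral close to v**2 / r = 5 m/s^2, jerk near 0
```

Raw finite differences amplify noise, especially for jerk, so logged or perceived trajectories would normally be smoothed before metrics like these are computed.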

On the infrastructure front, the tooling is optimized for Nvidia’s latest datacenter stack, including support for multi‑GPU training and mixed‑precision inference. For teams already using Omniverse for digital twins or Isaac Sim for robotics development, the release slots into existing workflows for scenario authoring and replay.

Safety, transparency and the research loop for autonomous AI

Releasing models and recipes to the community opens them to scrutiny from a broad audience. That matters in a field where opaque or poorly understood failure modes can cause real‑world harm. Regulators and academics are pressing for transparent reporting of self‑driving performance, and NHTSA’s continued crash reporting on hands‑free systems shows that this scrutiny is only intensifying.

Robots and other autonomous systems will be a major computing domain, Nvidia’s head of research has said, adding that creating their “brains” requires powerful hardware as well as interpretable learning systems.

By releasing both an open reasoning model and a reproducible playbook that the wider community can validate, the company is betting that open collaboration will move the field faster than closed stacks can.

What to watch next in open reasoning models for driving

In the near term, look for third‑party benchmarks of Alpamayo‑R1 on public driving datasets, ablations that weigh reasoning‑trace quality against action reliability, and integrations with closed‑loop simulators. Adoption by academic labs and by the more experimental AV startups will be an early measure of practical traction.

If the model and Cookbook can shorten iteration cycles on the long tail of hard cases, such as merging in aggressive traffic, navigating ambiguous work zones, and negotiating with cyclists, expect rapid follow‑ons: larger checkpoints, multi‑sensor variants that fuse lidar and radar, and tighter integration with high‑fidelity simulation. For an autonomous driving research community long constrained by tooling, this open release from Nvidia is a gentle push in the right direction.