Nvidia is weighing increased production of its H200 data center GPUs as orders from Chinese tech giants flood its order books, people familiar with the matter told Reuters. The move would signal a quick shift to tap pent-up demand in the world’s No. 2 market for AI gear, following the unveiling of a new pathway for licensed H200 sales into China.

Why The H200 Is Getting A Stampede Of Orders

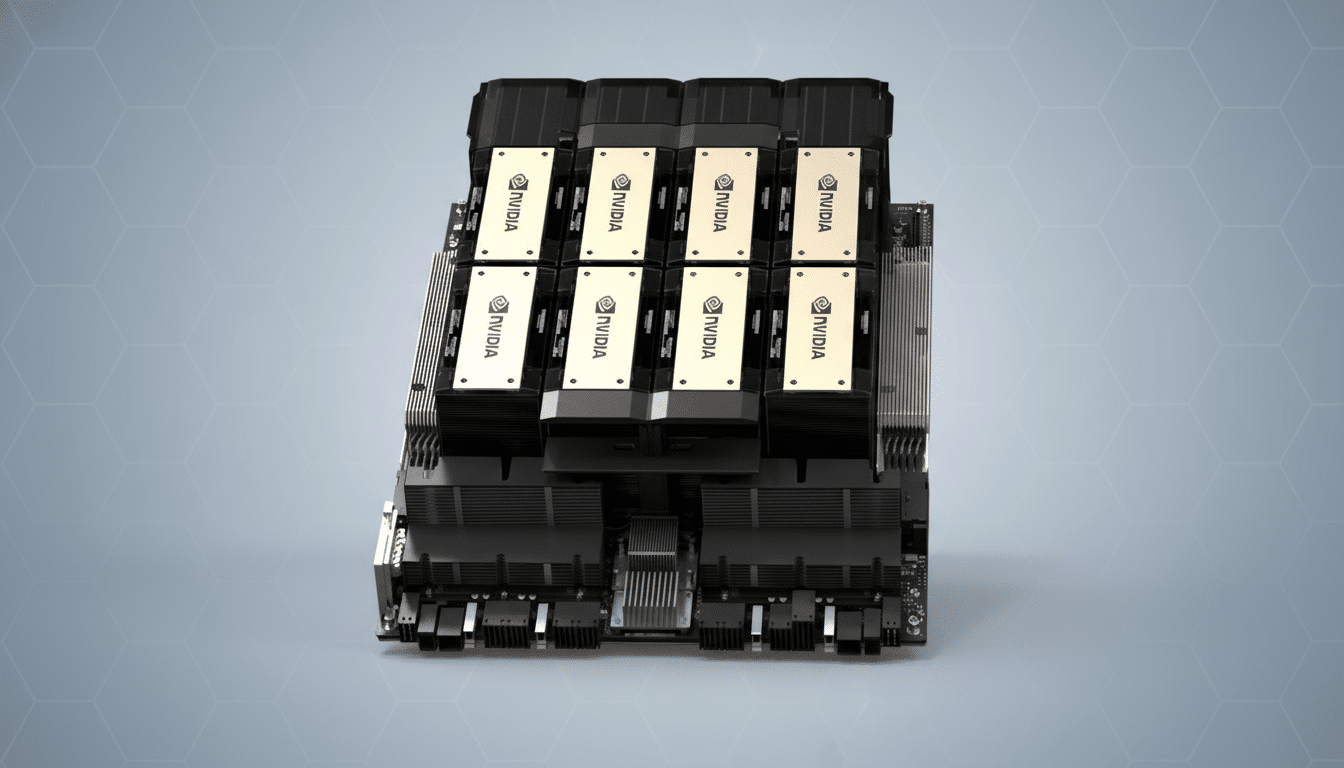

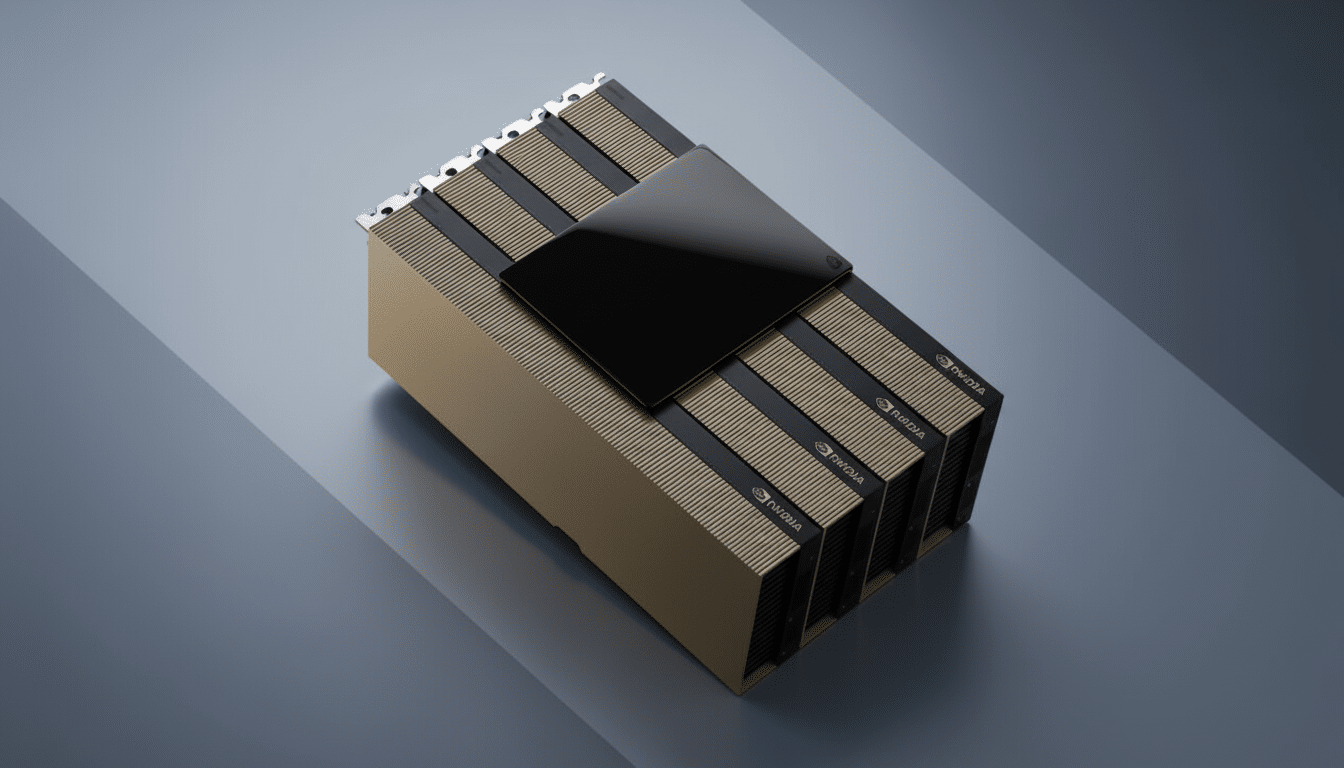

The H200 is the most powerful chip in Nvidia’s Hopper family, pairing the architecture of its predecessor, the H100, with next-generation HBM3e memory. Nvidia has announced up to 141GB of HBM3e and around 4.8TB/s of memory bandwidth, both important for training and serving large language models, and its internal benchmarks indicate significant gains over the H100 in inference throughput on popular models. That should translate into faster training cycles and a lower cost per token for inference-heavy workloads.
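To see why those two figures matter for serving, here is a rough, back-of-the-envelope sketch in Python. It assumes a hypothetical 70-billion-parameter model stored at one byte per parameter, and it assumes single-stream decoding is purely bandwidth-bound, with every generated token streaming all weights from HBM once. Neither assumption comes from Nvidia or Reuters; the function names are illustrative, and real throughput depends on batching, caching, and kernel efficiency.

```python
# Illustrative estimate only: capacity and bandwidth are the figures cited above;
# the model size and precision are hypothetical.
H200_HBM_GB = 141.0          # HBM capacity, in GB
H200_BANDWIDTH_TBPS = 4.8    # memory bandwidth, in TB/s


def fits_in_memory(params_billions: float, bytes_per_param: float) -> bool:
    """Return True if the weights alone fit in one GPU's HBM."""
    weight_gb = params_billions * bytes_per_param  # (1e9 params * bytes) / 1e9
    return weight_gb <= H200_HBM_GB


def max_decode_tokens_per_sec(params_billions: float, bytes_per_param: float) -> float:
    """Upper bound on single-stream tokens/s if each token reads all weights once."""
    weight_bytes = params_billions * 1e9 * bytes_per_param
    bandwidth_bytes_per_sec = H200_BANDWIDTH_TBPS * 1e12
    return bandwidth_bytes_per_sec / weight_bytes


if __name__ == "__main__":
    # Hypothetical 70B-parameter model at 1 byte per parameter.
    print("fits:", fits_in_memory(70, 1.0))
    print("ceiling (tokens/s):", round(max_decode_tokens_per_sec(70, 1.0), 1))
```

Under these assumptions the ceiling scales linearly with bandwidth, which is why a bandwidth bump shows up so directly in cost per token.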

Those capabilities help explain why Chinese cloud and internet companies, including Alibaba and ByteDance, have been considering big H200 purchases, according to the Reuters account. For many buyers, the H200 is a step-change over the compliance-tuned parts previously aimed at China (e.g., the H20), especially in memory bandwidth and interconnect flexibility, both of which matter for scaling clusters.

The Policy Backdrop and a Notable Twist for H200

H200 units were previously out of reach for China because tighter U.S. controls on advanced accelerators blocked exports. The Commerce Department has since green-lit a framework for licensed H200 sales to China. U.S. officials have not publicly detailed how that framework will work, and Chinese authorities are still deliberating import approvals, adding another layer of uncertainty.

Nvidia, for its part, said it is managing channel supply so that licensed H200 shipments to authorized customers in China do not cut into availability for U.S. buyers. That balancing act matters: in an April analysis, Omdia estimated that Nvidia holds more than 80% of the AI accelerator market, so any regional reallocation could ripple through hyperscalers chasing capacity.

Capacity and the CoWoS Bottleneck for Nvidia’s H200

Even if Nvidia green-lights a production ramp, the outcome hinges on packaging capacity. High-end chips such as the H200 rely on TSMC’s CoWoS packaging technology, which has been a limiting factor for the past two GPU generations. TSMC has added CoWoS output, and industry trackers including TrendForce have reported a steady quarterly uptick, but demand from global cloud providers remains strong.

Adding H200 volume for China would require careful balancing of substrates, HBM3e supply, and CoWoS lines. Any additional capacity could also serve as a stopgap until next-generation platforms are more widely deployed, narrowing the gap for customers who cannot wait for future nodes.

What Chinese Buyers Are Looking to Run on H200

Chinese companies are building multilingual foundation models, video-generation systems, and recommendation engines with big appetites for memory and bandwidth. The larger HBM of the H200 may make it a strong candidate for large-context LLMs and mixture-of-experts networks that benefit from fast parameter access, and its efficiency means it does so without adding significant power-density pressure at data center scale.
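As a rough illustration of why long context windows are memory-hungry, the Python sketch below sizes the key-value cache for a transformer. The architecture numbers (80 layers, 8 key-value heads, head dimension 128, 2-byte elements, a 131,072-token context) are hypothetical and do not describe any specific Chinese or Nvidia-benchmarked model; the formula itself is the standard count of one key and one value tensor per layer.

```python
def kv_cache_bytes(
    layers: int,
    kv_heads: int,
    head_dim: int,
    seq_len: int,
    bytes_per_elem: int = 2,
    batch: int = 1,
) -> int:
    """Bytes needed to cache keys and values for `seq_len` tokens."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_elem * batch


def to_gb(num_bytes: int) -> float:
    """Convert bytes to decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_bytes / 1e9


if __name__ == "__main__":
    # Hypothetical configuration, chosen only for illustration.
    cache = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, seq_len=131072)
    print(f"KV cache for one sequence: {to_gb(cache):.2f} GB")
```

With these made-up numbers a single long sequence consumes tens of gigabytes on top of the model weights, which suggests why extra HBM capacity is attractive for large-context serving.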

If import approvals come through, some buyers may simply shift away from interim chips like the H20, which was designed to stay under earlier thresholds on performance and interconnect. That would compress migration timelines and perhaps even raise near-term AI service quality on mainland platforms, assuming, of course, that enough volume ships.

Competitive and Strategic Implications for Nvidia

Local alternatives are improving: Huawei’s Ascend line is gaining traction in domestic clouds, and several startups are designing training-class accelerators. Even so, performance, software ecosystem depth, and supply continuity still favor Nvidia for many high-end workloads. Analysts at firms including Morgan Stanley have estimated that China accounted for about 20–25% of Nvidia’s data center revenue before export rules were tightened, which underscores the strategic importance of this market.

For Nvidia, a measured H200 ramp in China could recover revenue that would otherwise be displaced or delayed by alternative solutions, while strengthening its CUDA and software moat. The risk is policy volatility: licensing terms could change, and Beijing might push state-linked buyers toward domestically made silicon. Nvidia will have to navigate both risks while demonstrating that it can increase supply without squeezing customers elsewhere.

What to Watch Next as H200 China Plans Take Shape

Key signals to watch include formal import clearance in China, signs of increased CoWoS allocations to H200 at TSMC, and purchasing commitments from leading Chinese clouds. If and when those pieces come together, expect faster buildouts of H200 clusters for training and inference, a stronger competitive position against domestic accelerators, and a tighter near-term market for anyone holding out for top-tier GPUs.