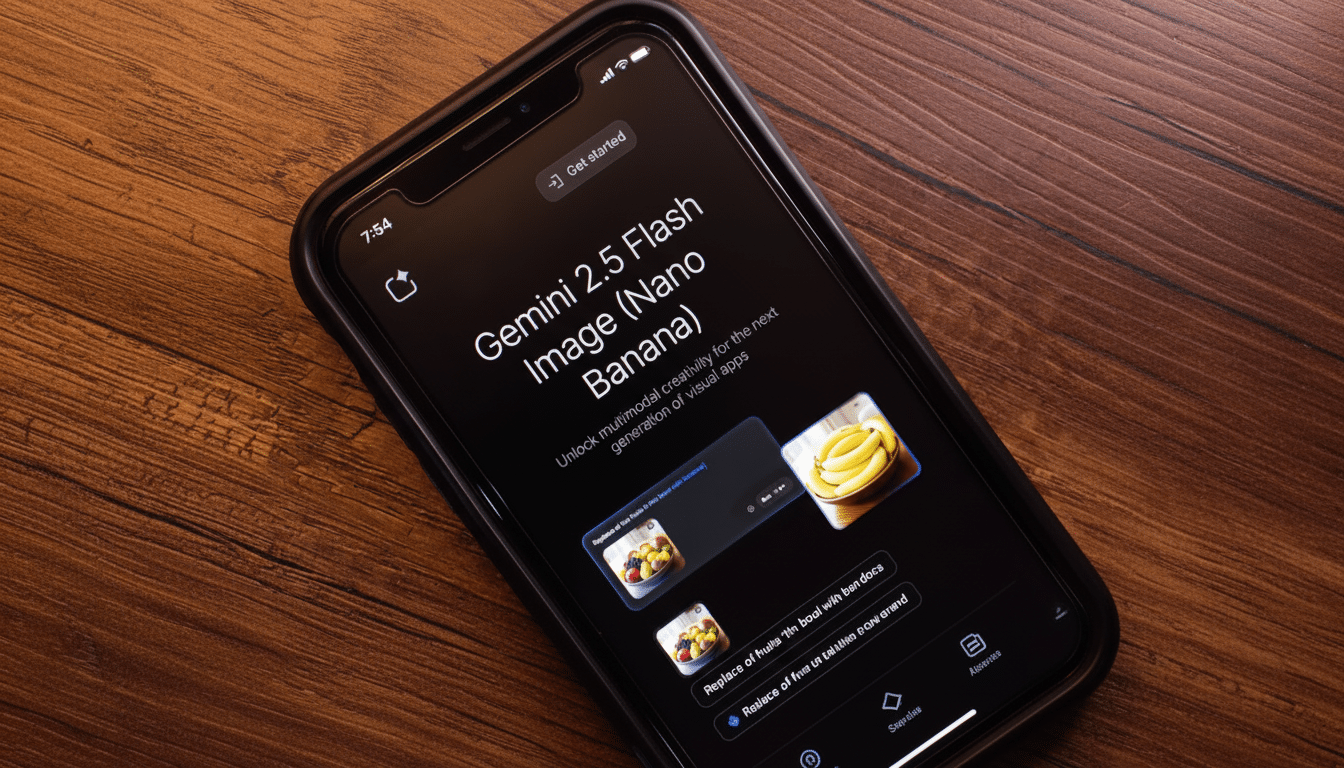

Google’s next-generation image model, allegedly codenamed Gempix and nicknamed Nano Banana 2 internally, might offer a noticeable leap in fidelity if a leak obtained by Google Leaks is to be believed. Early samples shown on the site formerly known as Twitter suggest sharper details, fewer rendering artifacts, and cleaner geometry. Those advancements would push AI image generation beyond merely “good enough” output.

What the Leak Claims About Nano Banana 2 and Gempix

The leak also references recognition capabilities for Traditional and modern Chinese. According to the leaked description, Nano Banana 2 is based on Gemini 2.5 Flash Image and optimized for speed and expandability. It emphasizes what its authors believe users will find most important: image quality.

Source: a post by OPENBHTEAM, as relayed by a third-party AI media platform and reported by WinFuture (posts checked and verified 4 June).

Two key claims stand out:

- A multi-stage generation pipeline that plans, verifies, then refines images before output.

- High-resolution support with native 2K rendering plus upscaling to 4K.

The purported preview images show improved line accuracy and fewer visual glitches in difficult areas such as hands, text, and fine textures. The samples look promising. However, Google has not confirmed anything and the origin of the demo is unknown, so skepticism is warranted.

Why Multi-Stage Generation Might Matter for Image Quality

Most state-of-the-art generators still follow a one-way pipeline: an image is produced and then, optionally, passed to an upscaler. A plan–verify–refine loop, by contrast, may catch errors before they are baked into the final frame. Think of it as a quality-control stage that asks: “Does this image match the prompt, down to the details?” The image is finalized only once those checks pass.
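The leak does not describe how Gempix implements this loop. The following is therefore only a toy Python sketch of the general control flow, built on clearly stated assumptions:

- The “image” is a dictionary mapping prompt elements to how they were rendered.
- `plan`, `generate`, `verify`, and `refine` are hypothetical stand-ins, not any Google API.
- The first pass deliberately garbles text elements to mimic a common failure.

Verification gates the output, and refinement touches only what was flagged.

```python
from dataclasses import dataclass, field


@dataclass
class Draft:
    """Toy stand-in for an image: maps each prompt element to how it was rendered."""
    elements: dict[str, str] = field(default_factory=dict)


def plan(prompt: dict[str, str]) -> list[str]:
    # Hypothetical planning stage: decide which elements the image must contain.
    return sorted(prompt)


def generate(layout: list[str], prompt: dict[str, str]) -> Draft:
    # Hypothetical first pass: renders everything except text elements correctly,
    # mimicking the common "garbled typography" failure.
    return Draft({k: "garbled" if k.startswith("text") else prompt[k] for k in layout})


def verify(draft: Draft, prompt: dict[str, str]) -> list[str]:
    # Flag every element whose rendering does not match the prompt.
    return [k for k, want in prompt.items() if draft.elements.get(k) != want]


def refine(draft: Draft, issues: list[str], prompt: dict[str, str]) -> Draft:
    # Hypothetical targeted fix: re-render only the flagged elements.
    fixed = dict(draft.elements)
    for k in issues:
        fixed[k] = prompt[k]
    return Draft(fixed)


def plan_verify_refine(prompt: dict[str, str], max_refinements: int = 3):
    """Return (draft, refinements_used, passed_verification)."""
    draft = generate(plan(prompt), prompt)
    refinements = 0
    while True:
        issues = verify(draft, prompt)
        # Stop when the draft passes, or when the refinement budget is spent.
        if not issues or refinements == max_refinements:
            return draft, refinements, not issues
        draft = refine(draft, issues, prompt)
        refinements += 1


prompt = {"subject": "red car", "text_sign": "OPEN", "hands": "five fingers"}
draft, used, passed = plan_verify_refine(prompt)
print(used, passed)  # 1 True
```

Capping the number of refinements keeps latency bounded. The returned flag tells the caller whether the final image actually passed verification or simply ran out of attempts.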

This direction is part of a broader trend in AI toward verifiers and self-refinement. Large language models already use similar feedback loops to reduce errors. In imaging, diffusion systems like SDXL added a refiner model to polish high-frequency detail. A dedicated verification step could go further, catching warped anatomy, incorrect perspective, and broken typography, all common pain points in generative art.

Resolution and Fidelity Improvements in Nano Banana 2

Resolution still matters. Most consumer-facing systems are limited to roughly 1K-square outputs and rely on AI upscaling beyond that. Native 2K generation improves global coherence. With more pixels to allocate to structure and texture, the model can render edges, architectural lines, hair strands, and fabrics without the smeared look common at lower resolutions. If Nano Banana 2 does support native 2K with upscaling to 4K in a single pipeline, creators would no longer have to bounce between multiple tools to get print-ready assets.
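A quick pixel count shows why native resolution matters for the upscaling step. This sketch assumes square outputs of 1024, 2048, and 4096 pixels per side for “1K,” “2K,” and “4K.” The leak does not specify aspect ratios, and consumer “4K” often means 3840×2160 instead.

```python
# Assumption: "1K", "2K", and "4K" read as square outputs with these side lengths.
SIZES = {"1K": (1024, 1024), "2K": (2048, 2048), "4K": (4096, 4096)}


def pixels(width: int, height: int) -> int:
    return width * height


def synthesized_fraction(src: tuple[int, int], dst: tuple[int, int]) -> float:
    """Share of output pixels an upscaler must infer rather than carry over from the source."""
    return 1 - pixels(*src) / pixels(*dst)


for name, size in SIZES.items():
    print(f"{name}: {pixels(*size):,} pixels")

print(synthesized_fraction(SIZES["1K"], SIZES["4K"]))  # 0.9375
print(synthesized_fraction(SIZES["2K"], SIZES["4K"]))  # 0.75
```

Upscaling a 1K image to 4K means inferring about 15 of every 16 output pixels. Starting from native 2K cuts that to 3 of every 4, so the upscaler tends to have more real detail to work with.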

The trade-off is compute: higher native resolution generally means heavier inference. The “Flash” name in Gemini’s model family signals models optimized to be fast and inexpensive, so the bet here is that Google can deliver quality without giving up speed. If it keeps latency low while moving to outputs of 2K and above, that would be a serious competitive advantage for production workflows.
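A rough scaling model shows why higher native resolution is expensive. Google has not disclosed the architecture behind Gemini 2.5 Flash Image, so this sketch assumes a generic transformer-style image model with two properties:

- Each token covers a 16×16-pixel patch.
- Every layer applies full global self-attention.

Real systems often use windowed or otherwise cheaper attention, which would shrink the quadratic term.

```python
# Assumption: each token covers a 16x16-pixel patch (for example, 8x latent
# downsampling followed by 2x2 patchification). Not a disclosed Gemini detail.
PIXELS_PER_TOKEN = 16


def tokens(width: int, height: int) -> int:
    return (width // PIXELS_PER_TOKEN) * (height // PIXELS_PER_TOKEN)


def relative_cost(size: tuple[int, int], base: tuple[int, int] = (1024, 1024)) -> dict[str, float]:
    """Per-layer cost relative to `base`, split into terms linear and quadratic in token count."""
    ratio = tokens(*size) / tokens(*base)
    return {
        "linear_layers": ratio,        # MLPs and projections scale with token count
        "self_attention": ratio ** 2,  # full global attention scores scale with token count squared
    }


for side in (1024, 2048, 4096):
    print(side, relative_cost((side, side)))
```

Under these assumptions, going from 1K to 2K quadruples the per-token work but multiplies the attention term by 16. Rendering 4K natively would multiply it by 256. That gap is one plausible reason a pipeline might generate at 2K and upscale to 4K rather than render 4K directly.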

How It Stacks Up Against Midjourney, DALL·E, and Others

Midjourney’s most recent models still lead the pack in aesthetics and stylization. DALL·E 3 is known for strong prompt adherence and often surprisingly accurate text rendering. SDXL and its community ecosystem offer open tooling and strong upscalers, and Adobe’s Firefly emphasizes content safety and enterprise controls. The differentiator implied by this leak is systematic verification built into generation rather than bolted on afterward. That architectural choice could prevent errors that are hard to correct once they have occurred.

Google could pair this with robust provenance features, such as attaching C2PA Content Credentials to every image by default. If it does, Nano Banana 2 could appeal to publishers and brands that need traceability. Enterprise demand in that area is growing fast, if recent industry studies and standards bodies are any guide.

Caveats and What to Watch Next Before Official Details

This is still an unverified leak. Until Google shares more about the model, treat any specs and sample images as subject to change. Signals to watch include:

- Consistent hand and text accuracy across a wide range of prompts.

- Consistent rendering of the same object, such as a car, across different viewing angles.

- Clean, uniform skin and fabric textures at 2K.

- 4K upscales that maintain micro-detail rather than just sharpening edges.

Also monitor latency, price, and API availability. A multi-stage verifier loop is only useful if verification is fast enough for interactive use and inexpensive at scale. If Google can pull that off, Nano Banana 2 could give the most popular creative tools a run for their money. It could also raise the baseline for photorealism, product renders, and design mockups everywhere.
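A simple latency budget shows why verification speed matters. The model assumes one generation pass, one verification per round, and one refinement after each failed check, and it ignores any separate planning step. Under those assumptions, an image that needs k refinements costs t_gen + (k + 1)·t_verify + k·t_refine. The timings below are hypothetical placeholders, not measurements of any real model.

```python
def loop_latency(refinements: int, t_gen: float, t_verify: float, t_refine: float) -> float:
    """Seconds for one image that needed `refinements` refine passes before stopping."""
    # One generation, a verification before each stop-or-refine decision, and k refinements.
    return t_gen + (refinements + 1) * t_verify + refinements * t_refine


# Hypothetical timings in seconds; not measured from any real system.
T_GEN, T_VERIFY, T_REFINE = 4.0, 0.5, 1.5

for k in range(3):
    print(f"{k} refinement(s): {loop_latency(k, T_GEN, T_VERIFY, T_REFINE):.1f} s")
```

Even with made-up numbers the pattern is clear: each failed check adds a full verify-plus-refine round. A slow or frequently failing verifier would therefore quickly erode the “Flash” speed advantage.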

Bottom line: if the Gempix leak is representative, AI images should see a quality bump where it counts without losing speed: less artifacting, cleaner lines, and more accurate prompt alignment. That combination is exactly what creators and businesses have been asking for.