Alibaba-backed AI startup Moonshot has unveiled Kimi K2.5, a multimodal model that can turn a single video or image upload into working front-end code. The feature, which the company calls “coding with vision,” aims to push vibe coding from a novelty toward a practical tool, promising to spin up interactive web interfaces without the traditional handoff from design to implementation.

How Video-to-Code Works in Moonshot’s Kimi K2.5

Moonshot says Kimi K2.5 was pretrained on 15 trillion text and visual tokens, making it natively multimodal rather than a text model with a vision add-on. Given a scrolling screen capture or a set of screenshots, it emits runnable HTML, CSS, and JavaScript, complete with scroll effects, animations, and clickable components. In the company’s demo, the model reconstructed the look and flow of an existing site from a short video, though some fine details, such as map outlines, came out only approximately.

Whereas mainstream assistants such as ChatGPT, Claude, and Gemini can generate code from an image but typically leave the assembly to the developer, K2.5 aims to collapse those steps: infer the layout, produce components, wire up interactions, and hand back something you can preview in a browser. The model can also perform visual debugging, spotting discrepancies between an intended layout and the rendered result.

Why It Matters for Developers Building Web Interfaces

Vibe coding, which means expressing intent through sketches, screenshots, or short videos, reduces the upfront expertise needed to prototype. For product teams, that means faster mockups, quick A/B variants of landing pages, and earlier stakeholder feedback. A designer could record a competitor’s scroll experience, ask K2.5 to generate a functional baseline, and then iterate on copy, components, and accessibility.

The catch is fidelity and fit. Auto-generated UIs can drift on spacing, responsiveness, and semantic markup, so teams will still need to enforce design systems, accessibility rules, and performance budgets. But if K2.5 reliably delivers a prototype that is “90% there,” the productivity upside is significant, especially for non-engineers who would otherwise wait in long development queues.
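
Enforcing accessibility rules on generated output can start with automated checks. As a minimal sketch, assuming the model's output arrives as a single HTML string, the Python below uses the standard-library `html.parser` module to flag three illustrative issues: a missing `lang` attribute on `<html>`, images without `alt` text, and clickable `<div>` or `<span>` elements that keyboard users cannot reach. The `A11yAudit` class and `audit` function are hypothetical names, not part of Kimi Code, and a production pipeline would rely on a dedicated accessibility tool rather than three hand-written rules.

```python
from html.parser import HTMLParser


class A11yAudit(HTMLParser):
    """Hypothetical checker: flags a few common accessibility gaps (illustrative, not exhaustive)."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[str] = []
        self.has_lang = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        # Screen readers use <html lang> to choose pronunciation rules.
        if tag == "html" and a.get("lang"):
            self.has_lang = True
        # alt="" is valid for decorative images, so only a missing attribute is flagged.
        if tag == "img" and "alt" not in a:
            self.issues.append(f"<img src={a.get('src')!r}> is missing alt text")
        # Click handlers on non-interactive elements are not reachable via the keyboard.
        if tag in ("div", "span") and "onclick" in a:
            self.issues.append(
                f"<{tag}> has an onclick handler but is not keyboard-accessible; prefer <button>"
            )


def audit(html: str) -> list[str]:
    """Return a list of human-readable issues found in the generated markup."""
    parser = A11yAudit()
    parser.feed(html)
    parser.close()
    issues = list(parser.issues)
    if not parser.has_lang:
        issues.insert(0, "<html> is missing a lang attribute")
    return issues


if __name__ == "__main__":
    generated = '<html><body><img src="hero.png"><div onclick="go()">Buy</div></body></html>'
    for issue in audit(generated):
        print(issue)
```

A check like this could run in CI on every regenerated prototype, so drift in semantics is caught before a human review rather than after.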

Performance Claims and Open Source Positioning

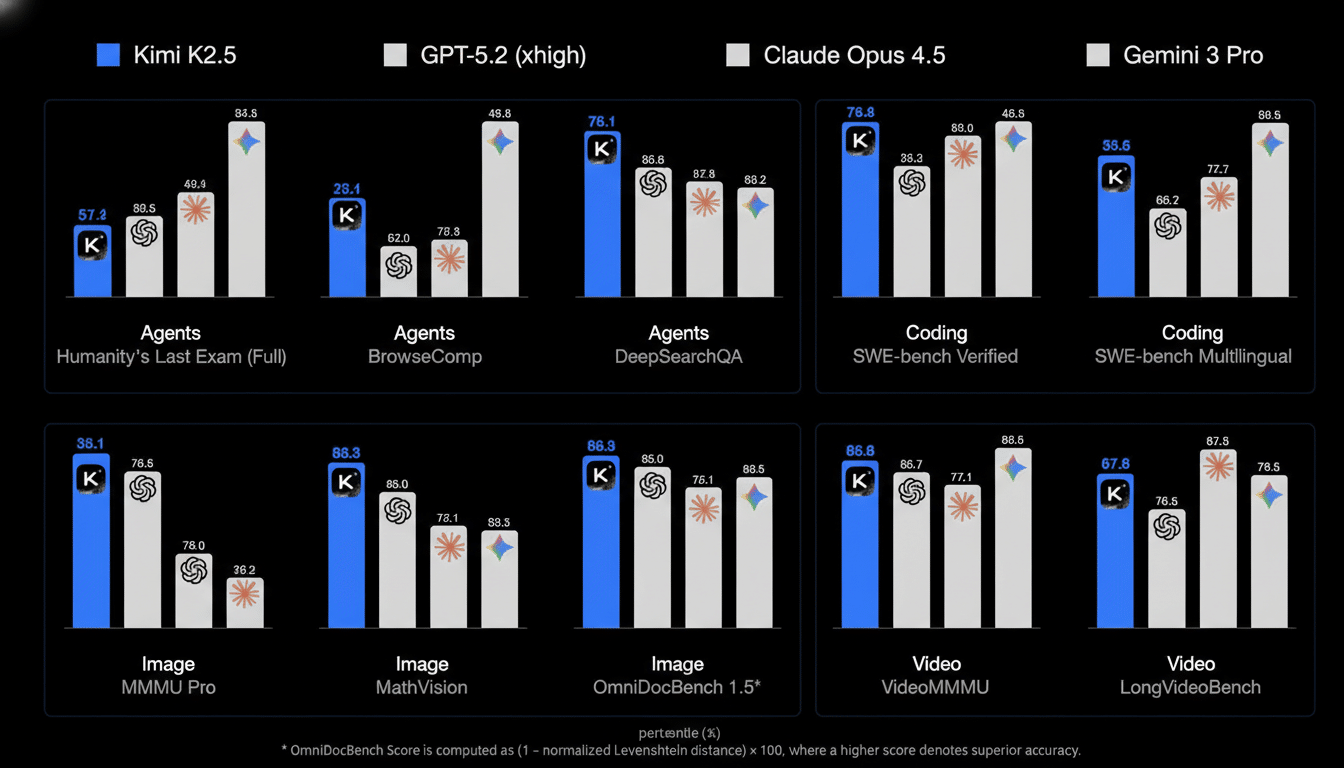

Moonshot reports that K2.5 posts competitive results on SWE-Bench Verified and SWE-Bench Multilingual, benchmarks widely used by the research community to gauge end-to-end software problem solving by asking models to resolve real GitHub issues. According to the company’s published data, its scores are in the range of leading proprietary models from OpenAI, Google, and Anthropic.

The company characterizes K2.5 as the most powerful open-source model to date and exposes its coding stack through Kimi Code, an open platform that slots into popular IDEs. As always, “open” spans a spectrum—from weights and code availability to licensing terms—so developers will want to review the release materials before adopting it in production workflows.

Agent Swarm Orchestration and Speed Improvements

Alongside the core model, Moonshot introduced an “agent swarm” research preview that orchestrates up to 100 sub-agents on multistep tasks. By dividing the work and running subtasks in parallel, the system is designed to cut wall-clock time on complex jobs. The company says internal evaluations show up to 80% reductions in end-to-end runtime compared with sequential agents, a promising result for builds that mix code generation, refactoring, testing, and documentation.
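
To make the wall-clock argument concrete, here is a minimal Python sketch of the generic fan-out/fan-in pattern, assuming the subtasks are fully independent. The names `sub_agent`, `run_sequential`, and `run_parallel` are hypothetical, and `asyncio.sleep` stands in for model latency; this is not Moonshot's orchestration API and makes no calls to Kimi.

```python
import asyncio
import time


# Hypothetical sub-agent: sleeps to simulate one subtask's model latency.
async def sub_agent(name: str, seconds: float) -> str:
    await asyncio.sleep(seconds)
    return f"{name}: done"


async def run_sequential(tasks: dict[str, float]) -> list[str]:
    # One subtask at a time: total time is roughly the sum of all durations.
    results = []
    for name, secs in tasks.items():
        results.append(await sub_agent(name, secs))
    return results


async def run_parallel(tasks: dict[str, float]) -> list[str]:
    # Fan out every subtask at once, then fan in results in submission order.
    return await asyncio.gather(*(sub_agent(n, s) for n, s in tasks.items()))


def timed(runner, tasks: dict[str, float]) -> tuple[list[str], float]:
    """Run one orchestration strategy and measure its wall-clock time."""
    start = time.perf_counter()
    results = asyncio.run(runner(tasks))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    # Illustrative durations in seconds; not measurements of any real system.
    subtasks = {"codegen": 0.4, "refactor": 0.3, "tests": 0.3, "docs": 0.2}
    _, seq_time = timed(run_sequential, subtasks)
    _, par_time = timed(run_parallel, subtasks)
    print(f"sequential: {seq_time:.2f}s, parallel: {par_time:.2f}s")
    print(f"reduction: {1 - par_time / seq_time:.0%}")
```

In this toy setup, sequential time approaches the sum of the subtasks while parallel time approaches the longest one, so the saving is capped by the slowest subtask and by any dependencies between subtasks (tests, for instance, usually need the generated code first).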

Parallelism brings its own challenges, such as state management, contention between agents, and error recovery, but the approach mirrors broader industry momentum toward multi-agent tooling for software engineering.

Access, Pricing, and Integrations for Kimi K2.5 and Kimi Code

Kimi K2.5’s coding features are available through Kimi Code with integrations for Cursor, VS Code, and Zed. The model is also accessible via the Kimi website, mobile app, and API. The agent swarm beta is currently offered to users on paid tiers, with Allegretto at $31/month and Vivace at $159/month.

What to Watch Next as Video-to-Code Tools Mature

If video-to-code gains traction in real teams, expect rapid imitation: incumbents already offer image-to-code, but few deliver a ready-to-run interface from a screen recording. Enterprises will probe three pressure points: IP and ethics (recreating third-party designs), governance (controlling what the model ingests), and reliability (consistent component quality across devices and languages).

Still, the shift is clear. As models learn to reason over pixels as fluently as tokens, the UI spec itself becomes a visual artifact—sketched on a whiteboard, recorded in a quick scroll, then compiled into a live experience. With Kimi K2.5, Moonshot is betting that the fastest way to ship is to show, not tell.