Microsoft has signed a five-year, $9.7 billion agreement with Australia’s IREN to secure additional AI cloud capacity, underscoring how aggressively the company is building out infrastructure for Azure’s next-generation generative and agentic AI services. The capacity is expected to arrive in stages through 2026 from IREN’s campus in Childress, Texas, a site designed to support up to 750 megawatts of infrastructure.

Why Microsoft is locking in more compute capacity now

Demand for high-end GPUs continues to outstrip supply as enterprises race to train larger multimodal models and push Copilots into production. Microsoft has signaled record AI spending on its recent earnings calls, pairing rapid in-house data center builds with multiyear capacity agreements to shorten time-to-availability and ease customer backlogs. The IREN deal fits that approach: it guarantees dedicated racks tied into Azure without relying solely on Microsoft’s existing campuses, a strategy echoed in other exclusive agreements with GPU specialists.

Inside the IREN build and Childress, Texas campus plan

Australian operator IREN, which cut its teeth in high-density compute through cryptocurrency mining, has built large AI-oriented data centers similar to those of peers like CoreWeave. At the Childress site, it is moving toward utility scale, lining up land and power in advance to allow rapid expansion, a critical edge as GPU delivery times and colocation queues lengthen.

IREN paired the Microsoft deal with a separate $580 million agreement to purchase GPUs and equipment from Dell. Bloomberg reports that CEO Daniel Roberts expects the Microsoft contract to use less than 10% of IREN’s total power capacity and to generate $1.94 billion in annualized revenue. The figures illustrate how hyperscaler contracts can reset the economics of former mining operators.
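The annualized revenue figure lines up with the headline contract value. As a rough check, assuming the $9.7 billion is spread evenly across the five-year term (an assumption; the article does not describe the actual payment or revenue schedule), the average works out as follows. The function and constant names are illustrative only.

```python
# Figures quoted in the article. Spreading the total evenly over the
# term is an assumption, not something the article states.
CONTRACT_VALUE_USD_BN = 9.7
TERM_YEARS = 5


def annualized_revenue(total_bn: float, years: int) -> float:
    """Average yearly revenue if the total is spread evenly over the term."""
    return total_bn / years


average_bn = annualized_revenue(CONTRACT_VALUE_USD_BN, TERM_YEARS)
print(f"${average_bn:.2f} billion per year")
```

The result matches the $1.94 billion annualized figure attributed to Roberts, which suggests the two numbers describe the same contract on a per-year basis.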

Deal centers on Nvidia GB300 and Azure NVL72 systems

The contract centers on infrastructure built around Nvidia’s GB300 GPUs, deployed in stages in IREN’s systems. Microsoft has separately started up its first production cluster of Nvidia GB300 NVL72 systems for Azure. That cluster targets reasoning-heavy models, AI pipelines, and multimodal workloads, in other words the functions that enterprises are adopting today.

A second Microsoft agreement, with NScale, covers approximately 200,000 Nvidia GB300 GPUs across several European locations and one US location. Together, the deals point to a deliberate ramp that spreads state-of-the-art capacity around the world to address latency, data jurisdiction, and resilience.

How crypto-era infrastructure evolved into AI utilities

Operators like IREN and other upstarts have repurposed mining-era land, power contracts, and cooling experience for AI. High-density AI clusters thrive on the same electricity sourcing, planning, and construction work that these operators learned while standing up mining capacity. The business model rests on reserved-capacity and long-term agreements with hyperscalers, which provide predictable revenue to help finance large GPU purchases.

For Microsoft, tapping these specialists accelerates buildout without waiting for every internal data center to complete expansion cycles, a crucial advantage when model sizes and inference loads are compounding every quarter.

Texas emerges as an AI data center hub and power nexus

Texas has become a magnet for AI data centers thanks to large, expandable land parcels and access to the ERCOT grid. A 750-megawatt target signals truly industrial-scale infrastructure, with layouts and cooling tuned for dense GPU clusters. While operators are increasingly adopting advanced air and liquid cooling to improve efficiency, the practical constraint remains power: securing, integrating, and timing capacity additions to match GPU deliveries. Large buyers also emphasize sustainability metrics as part of their procurement. Details on the energy mix were not disclosed, but Microsoft has publicly committed to aggressive carbon and water goals, a pressure that typically carries through to partner campus designs and procurement choices.

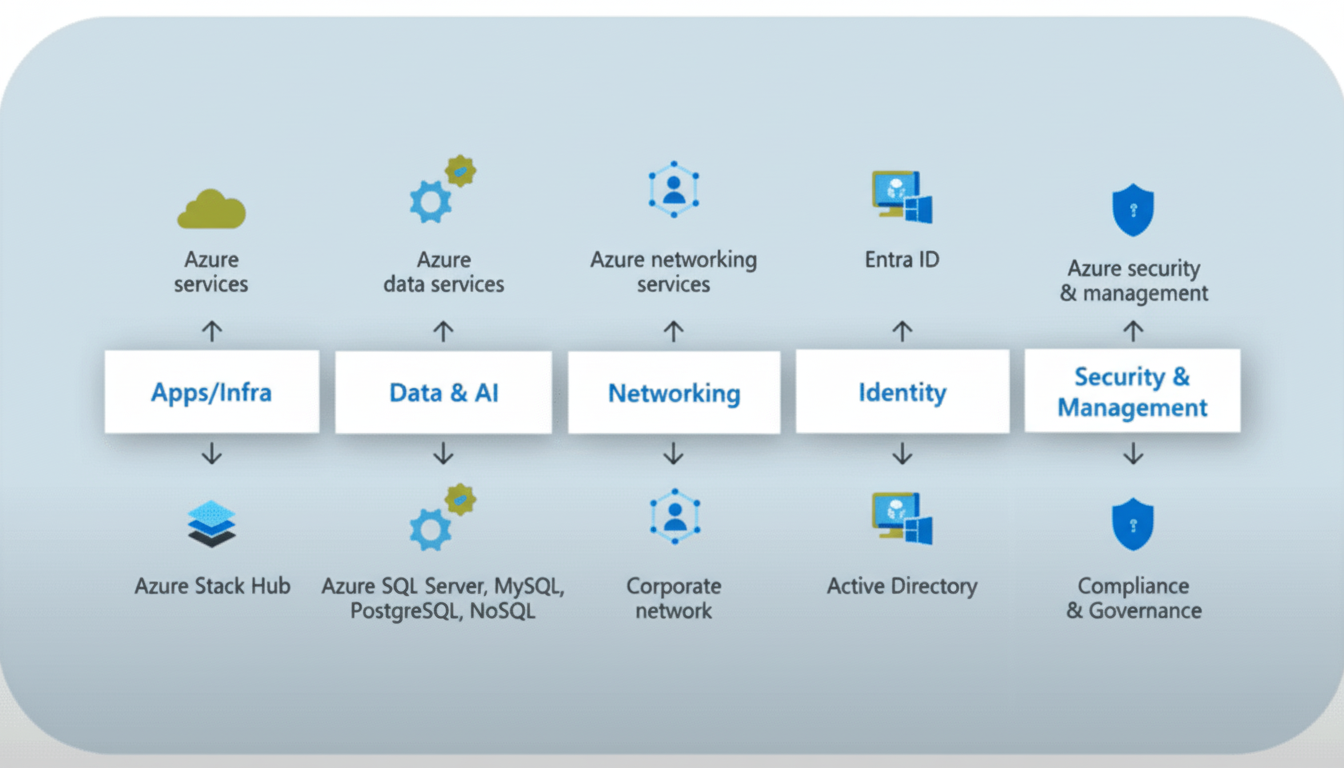

What this means for Azure AI customers and developers

For developers and enterprises, the headline benefit is a more predictable supply of leading-edge training and inference tiers. As GB300 capacity comes online, expect shorter queue times for large-scale experiments, expanded regional availability, and improved throughput for complex, multi-agent workflows that strain prior-generation stacks. The broader takeaway is clear: hyperscalers are moving from sporadic GPU allocations to long-horizon, utility-like supply. By stitching together internal builds with external partners like IREN, Microsoft aims to ensure Azure has the headroom to support today’s surging AI workloads and the even larger ones already on the horizon.

- Shorter queue times for large-scale training runs

- Expanded regional availability across key markets

- Higher throughput for complex, multi-agent workflows