Meta has offered a fairly direct postmortem for the onstage stumbles that marred its live AI demos at Connect: the company “DDoS’d” itself. According to Meta CTO Andrew Bosworth, a single command, “Hey Meta, start Live AI,” woke up every pair of Ray‑Ban Meta smart glasses in the room at once and hammered the back end, derailing a live demo on stage. A separate bug kept the new neural wristband from answering a WhatsApp call. “I don’t love [the demo fails] obviously, but I know the product works,” Bosworth said, adding that the bugs have since been found and fixed.

What Happened During the Live Onstage Performance

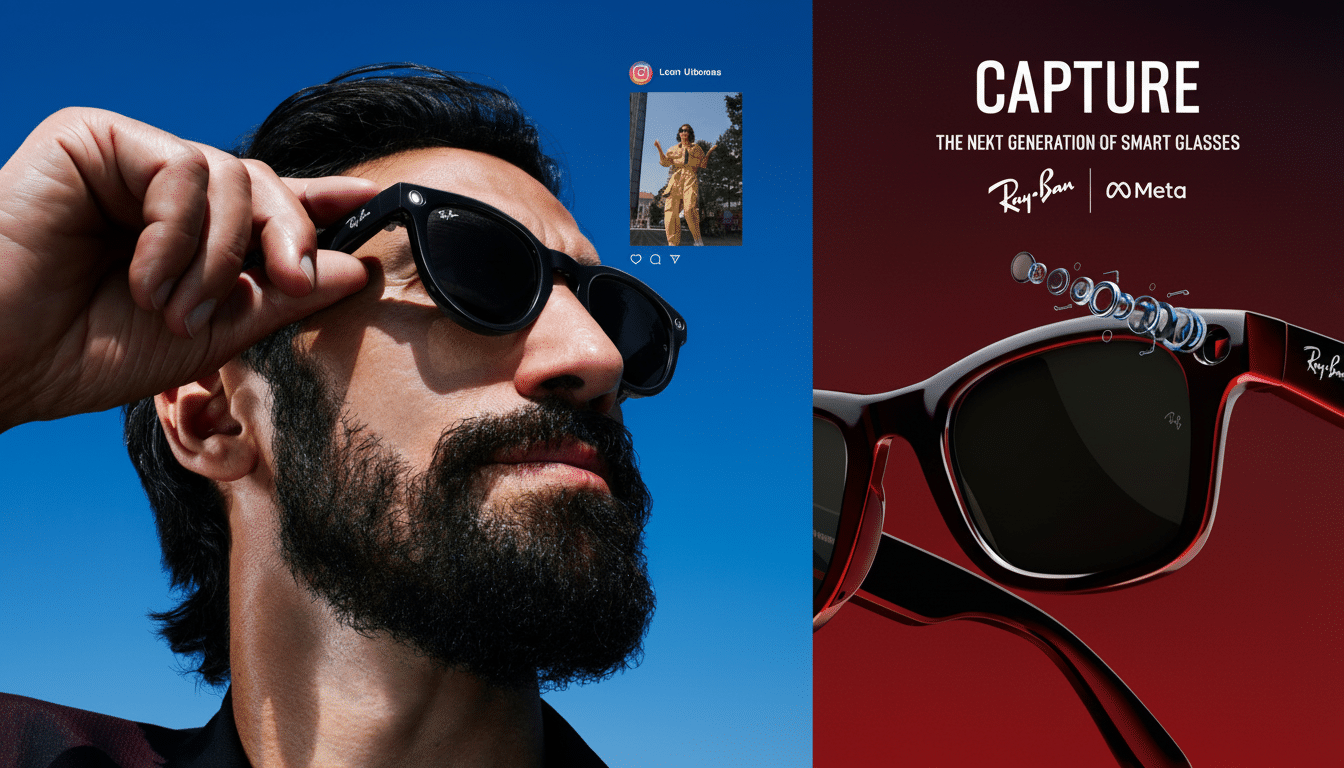

The first failure came during a cooking segment in which an influencer, wearing Ray‑Ban Meta glasses that fed the Live AI assistant, asked it to guide him through preparing a Korean-inspired sauce. The system interpreted the scene correctly while it was simply receiving commands, but struggled once the user interrupted it partway through: it misread which ingredients had already been used and gave instructions in the wrong order. The presenter blamed the Wi‑Fi and handed back to Mark Zuckerberg.

A second hiccup came during a wristband demo meant to show finger-tap control for answering a WhatsApp call in the glasses’ interface. Despite multiple attempts, the gesture would not connect the call. The same wristband later succeeded in playing a song, so the failure wasn’t universal, but the episode was another reminder of how precarious live, multi-device AI demos can be.

Meta’s Explanation: A Self‑Inflicted Traffic Surge

Bosworth traced the first failure to a classic systems problem: unintended broadcast activation. The “start Live AI” prompt made not one pair but, in his words, “every single Ray‑Ban Meta’s Live AI in the building start.” Making matters worse, Meta had temporarily routed the demo traffic to a development-tier environment to isolate it. The result was an unexpected spike, possibly hundreds if not thousands of concurrent, low-latency streams, landing on a cluster that wasn’t provisioned like production. “So we DDoS’d ourselves,” he said.

In cloud terms, this doesn’t match the profile of a textbook Internet-scale DDoS; it looks more like an internal “thundering herd.” Voice assistants typically stream audio for automatic speech recognition (ASR), visual frames for scene understanding, and state updates for agentic orchestration. Multiply that across a room full of devices and you can exhaust CPU, GPU, or network budgets in seconds unless you enforce throttling, backoff, and per-device rate limits. That’s why production systems use hotword fencing, device targeting, and guardrails that ignore the same command when it arrives from many clients at once.
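To make per-device rate limiting and cross-client deduplication concrete, here is a minimal Python sketch. It is not Meta’s implementation: `TokenBucket`, `CommandGate`, the venue key, and all parameter values are hypothetical. A token bucket caps how often any single device can start a session, and a short dedup window drops the same command when it arrives from many devices in the same venue.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class TokenBucket:
    """Per-device limiter: refills `rate` tokens per second, bursts up to `capacity`."""
    rate: float
    capacity: float
    tokens: float = field(init=False)
    last: Optional[float] = field(init=False, default=None)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start with a full bucket

    def allow(self, now: float) -> bool:
        if self.last is not None:
            # Refill in proportion to elapsed time, never above capacity.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class CommandGate:
    """Hypothetical server-side admission check for voice-triggered sessions."""

    def __init__(self, rate: float, capacity: float, dedup_window: float) -> None:
        self.rate = rate
        self.capacity = capacity
        self.dedup_window = dedup_window
        self.buckets: Dict[str, TokenBucket] = {}
        # (venue, command) -> time that command was last admitted in that venue
        self.admitted_at: Dict[Tuple[str, str], float] = {}

    def admit(self, device_id: str, venue_id: str, command: str, now: float) -> bool:
        key = (venue_id, command)
        prev = self.admitted_at.get(key)
        if prev is not None and now - prev < self.dedup_window:
            return False  # same command already admitted in this venue within the window
        if device_id not in self.buckets:
            self.buckets[device_id] = TokenBucket(self.rate, self.capacity)
        if not self.buckets[device_id].allow(now):
            return False  # this device is over its rate limit
        self.admitted_at[key] = now
        return True
```

With `CommandGate(rate=1.0, capacity=2.0, dedup_window=2.0)`, a hundred devices in one venue hearing “start live ai” within the same second yield a single admitted session. Scoping deduplication to a venue rather than globally is a deliberate choice in this sketch: two people in different places saying the same phrase should both be served.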

The Call Bug: A Sleep State Edge Case Explained

The gesture-based call glitch, Bosworth explained, came down to a UI timing issue. The display went to sleep at the very moment the incoming call arrived, and when it woke, the answer control hadn’t re-rendered, so the gesture was rejected. “We had never found that bug before,” he said. “It’s fixed now.” These are the kinds of race conditions that surface when you combine low-power wearable displays with always-on communications and new input modalities.
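Meta hasn’t published the affected code, so the following is only a hypothetical Python model of this class of bug; `CallScreen`, `Display`, and `restore_on_wake` are invented names. The answer control’s visibility is set when the call event arrives, and if the display is asleep at that instant, nothing re-derives it on wake unless the wake path explicitly restores pending-call state.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Display(Enum):
    AWAKE = auto()
    ASLEEP = auto()


@dataclass
class CallScreen:
    """Hypothetical call prompt on a low-power display.

    restore_on_wake=False reproduces the bug; True re-renders the answer
    control from pending-call state whenever the display wakes.
    """
    display: Display = Display.AWAKE
    pending_call: Optional[str] = None   # caller of a ringing call, if any
    answer_control_visible: bool = False
    restore_on_wake: bool = True

    def incoming_call(self, caller: str) -> None:
        self.pending_call = caller
        # The control is only drawn if the display happens to be awake right now.
        if self.display is Display.AWAKE:
            self.answer_control_visible = True

    def sleep(self) -> None:
        self.display = Display.ASLEEP
        self.answer_control_visible = False

    def wake(self) -> None:
        self.display = Display.AWAKE
        if self.restore_on_wake and self.pending_call is not None:
            self.answer_control_visible = True  # the fix: rebuild UI from state

    def tap_gesture(self) -> bool:
        """Answer the call if the control is on screen; otherwise reject the tap."""
        if self.display is Display.AWAKE and self.answer_control_visible and self.pending_call:
            self.pending_call = None
            self.answer_control_visible = False
            return True
        return False
```

The design point is that a wake handler should rebuild on-screen controls from current state rather than rely on events that may have fired while the display was off.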

Why It Matters for Reliable Real-Time AI Systems

Live AI on glasses is a demanding stack: wake-word detection, on-device vision, cloud inference, and variable network conditions all have to work in concert. The most compelling experiences, continuous dialogue and context awareness, are also the most resource-intensive. Industry peers haven’t fared much better. A high-profile AI search launch fizzled when its demo answer stated an incorrect astronomy fact, and voice assistants across the field still stumble over interruptions and rapid turn-taking. In the end, it is reliability, not just capability, that sells ambient computing.

Meta’s framing also mirrors an architectural shift across wearables: pushing more perception to the edge. Running slimmer vision-language models on-device for first-pass reasoning, offloading heavier work to server-side models, and adding per-venue limits can all blunt a herd’s impact. Likewise, randomized backoff, per-access-point (AP) quotas, and deduplication of simultaneous identical requests are standard practice in large-scale messaging and should carry over to voice-triggered AI.
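Randomized backoff and per-AP quotas can each be sketched in a few lines of Python. The backoff below is the common “full jitter” variant of exponential backoff: attempt `n` waits a uniform random time between zero and `min(cap, base * 2**n)`, so retries from many devices spread out instead of arriving in lockstep. `AccessPointQuota` and its session cap are hypothetical illustrations, not anything Meta has described.

```python
import random
from typing import Dict, List


def backoff_delays(base: float, cap: float, attempts: int, rng: random.Random) -> List[float]:
    """Exponential backoff with full jitter: one randomized delay per retry attempt."""
    return [rng.uniform(0.0, min(cap, base * 2 ** n)) for n in range(attempts)]


class AccessPointQuota:
    """Hypothetical cap on concurrent Live AI sessions per Wi-Fi access point."""

    def __init__(self, max_sessions: int) -> None:
        self.max_sessions = max_sessions
        self.active: Dict[str, int] = {}  # access point id -> open sessions

    def try_start(self, ap_id: str) -> bool:
        current = self.active.get(ap_id, 0)
        if current >= self.max_sessions:
            return False  # quota exhausted: caller should back off and retry later
        self.active[ap_id] = current + 1
        return True

    def end(self, ap_id: str) -> None:
        if self.active.get(ap_id, 0) > 0:
            self.active[ap_id] -= 1
```

A device refused by `try_start` would sleep for the next value from `backoff_delays` before retrying; passing a seeded `random.Random` keeps the jitter reproducible in tests.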

What’s Next for Meta’s Glasses After Connect Demos

To rebuild trust, expect Meta to tighten hotword handling so that a stage command targets only one device instead of every pair of glasses within earshot. Better load isolation between demo and attendee devices, plus canary testing before live segments, would cut down on surprises. On the UX side, wake-lock policies and restoring notification state on wake should keep the call-answer blind spot from recurring.
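One plausible way to make a stage command target a single device is to arbitrate among all the wake-word detections that belong to the same utterance. The Python sketch below is an assumption, not Meta’s design: `Detection`, `arbitrate`, the grouping window, and the “pinned device” stage mode are all hypothetical. If a pinned device heard the phrase it wins outright; otherwise the device with the highest detector confidence responds.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Detection:
    device_id: str
    confidence: float  # wake-word detector score, assumed to lie in [0, 1]
    timestamp: float   # seconds


def arbitrate(detections: Sequence[Detection], window: float,
              pinned_device: Optional[str] = None) -> Optional[str]:
    """Pick at most one device to respond to a wake word heard by many.

    Detections within `window` seconds of the earliest one count as the
    same utterance. In stage mode (pinned_device set) only the pinned
    device may answer; otherwise the highest-confidence device does.
    """
    if not detections:
        return None
    start = min(d.timestamp for d in detections)
    same_utterance = [d for d in detections if d.timestamp - start <= window]
    if pinned_device is not None:
        if any(d.device_id == pinned_device for d in same_utterance):
            return pinned_device
        return None  # stage mode: attendee devices stay silent
    return max(same_utterance, key=lambda d: d.confidence).device_id
```

In a room of attendee glasses plus a presenter’s pair, stage mode returns only the presenter’s device even when attendees’ detectors scored higher, which is the behavior the article anticipates.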

Bosworth argues the product itself works and that Connect’s problems were failures of execution, not of the underlying design. If Meta can use this postmortem to make live sessions demonstrably steadier and, more crucially, to make the glasses dependable in daily use for early adopters, it will have turned an embarrassing lesson into a roadmap for hardier AI wearables.

Context helps: modern cloud stacks are designed to absorb global traffic spikes, but live demo environments are deliberately sequestered for the event to limit exposure of production workloads. It’s a reminder that even with segmentation, an unplanned surge of hotword activations in a single room can trip up a tech giant. The fixes are less about raw horsepower than about smarter orchestration: the invisible plumbing that makes ambient AI feel, well, ambient.