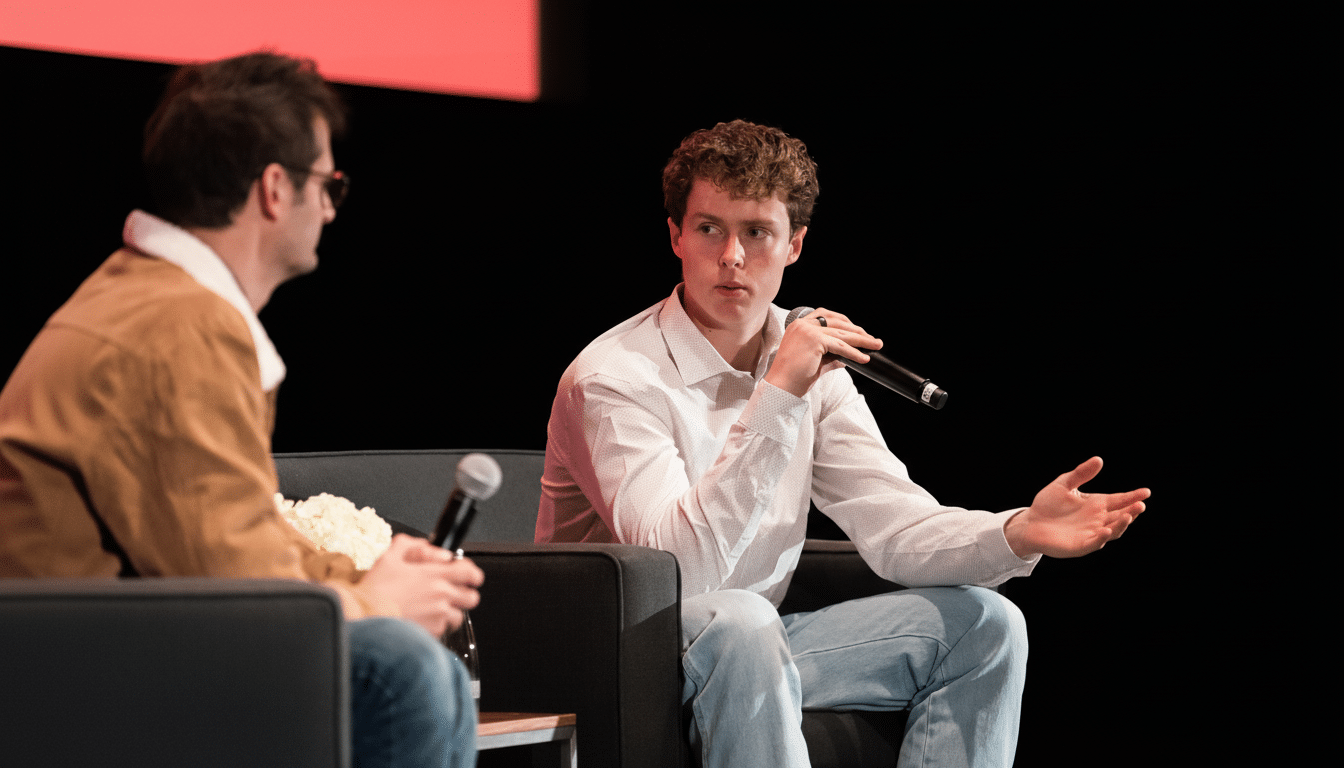

Ethan Thornton, the MIT-trained founder at the helm of Mach Industries, will deliver sharp insights on the AI Stage at Disrupt.

His ideas are straightforward, but they turn radical when applied to defense:

- For AI, he argues, the action is at the edge.

- Autonomous functionality must be built from inception with contested environments in mind.

- Startups can move faster than a legacy acquisition cycle without compromising safety or sacrificing oversight.

Why this matters for defense AI and military readiness

Defense buyers are now integrating AI into their core capabilities rather than treating it as an add-on, and they need those capabilities to keep working when a great-power conflict strains or severs communications. Global military spending has hit a record of more than $2.4 trillion, and an increasing share of it is going into software-defined systems, autonomy and resilient networks, according to SIPRI assessments. U.S. policy drivers such as the Pentagon’s Replicator initiative and expanded pathways through the Defense Innovation Unit further signal that fielding AI-enabled systems quickly is not just desirable but a matter of strategic doctrine.

Mach Industries belongs to a new cohort that includes Anduril, Shield AI, Palantir and Applied Intuition: companies building vertically integrated stacks of sensing, decision software and mission execution. Thornton’s thesis is that capability has to be pushed to the edge so units can keep fighting when satellites go down, links get jammed or cloud access is spotty.

Inside the stack: autonomy instead of cloud

The principal technical challenge is moving robust AI inference to forward units with limited power, compute and bandwidth. That means model compression, quantization and robust perception pipelines that can run on ruggedized edge modules rather than hyperscale GPUs in a data center. Thornton is expected to outline architectures that combine onboard navigation, target tracking and cooperative swarm behavior without persistent connectivity.
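Quantization's appeal on constrained hardware is easy to see in miniature. The sketch below assumes symmetric per-tensor int8 post-training quantization, one common technique among several and not a description of Mach's actual stack. It stores each 32-bit float weight as a signed byte plus one shared scale factor, cutting weight storage roughly fourfold while keeping each weight's reconstruction error within half the scale. The weights are hypothetical random values standing in for one layer of a perception model.

```python
import array
import random


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; guard against an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed-byte range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return array.array("b", q), scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    random.seed(0)
    # Hypothetical fp32 weights for a single layer.
    weights = array.array("f", (random.gauss(0.0, 0.05) for _ in range(10_000)))
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"fp32 bytes: {weights.itemsize * len(weights)}")
    print(f"int8 bytes: {q.itemsize * len(q)} (plus one scale factor)")
    print(f"max abs error: {max_err:.6f} (bound: scale/2 = {scale / 2:.6f})")
```

Real edge deployments typically go further (per-channel scales, quantization-aware training, integer-only kernels), but the storage and error tradeoff shown here is the core idea.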

Operational experience from today’s conflicts has highlighted the value of attritable, autonomous systems that can adapt on the fly in contested electromagnetic environments. Think swarming drones that replan routes when GPS is spoofed, or ground robots that share environment maps peer-to-peer. Analyses from CSIS and RAND have repeatedly raised concerns about how the speed of the OODA (observe, orient, decide, act) loop changes when AI compresses sensing-to-action latency from minutes to seconds, and edge autonomy is the lever that makes that compression possible.

Still, keeping a “human on the loop” is the practical and policy baseline. Thornton has argued that autonomy should reduce cognitive burden and extend span of control while preserving clear lines of authority. That is consistent with Department of Defense guidance such as Directive 3000.09, which sets policy for autonomy in weapon systems and mandates rigorous testing, verification and validation.

Funding and policy realities for defense AI startups

Venture financing for dual-use and defense software has been rising, with PitchBook tracking annual totals in the tens of billions. But capital alone doesn’t equal deployment. Startups succeed or fail on the transition from prototype to program of record, a gap that DIU and the service labs are working to narrow through Other Transaction Authority agreements and rapid acquisition pathways.

Regulatory friction is real. Export controls on advanced semiconductors reverberate through AI supply chains, and ITAR and EAR rules complicate scaling overseas. In Europe, safety and transparency mandates for AI have been debated alongside carve-outs for defense. Companies like Mach need to build in compliance from day one, including model provenance, audit trails, containment and continuous evaluation, so that their deployments can withstand a congressional subpoena or allied interoperability testing.
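"Audit trails" and "model provenance" are easy to name and harder to make tamper-evident. One common pattern is sketched below under stated assumptions: the `AuditLog` class and `model_fingerprint` helper are hypothetical, and SHA-256 hash chaining is a standard technique, not something the article attributes to Mach. Each logged event records the hash of the exact model artifact that produced it and the hash of the previous entry, so any after-the-fact edit breaks verification.

```python
import hashlib
import json
import time


def _digest(body):
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def model_fingerprint(model_bytes):
    """Hypothetical provenance helper: identify a model artifact by its SHA-256."""
    return hashlib.sha256(model_bytes).hexdigest()


class AuditLog:
    """Hypothetical tamper-evident log: each entry commits to the one before it."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, model_sha256, event, ts=None):
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "ts": time.time() if ts is None else ts,
            "model_sha256": model_sha256,  # which exact model produced this event
            "event": event,
            "prev": prev,  # link to the previous entry's hash
        }
        digest = _digest(body)
        self.entries.append({**body, "hash": digest})
        return digest

    def verify(self):
        """Recompute every hash and link; False if any entry was altered or reordered."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = AuditLog()
    fp = model_fingerprint(b"hypothetical model weights v1")
    log.append(fp, {"action": "track_initiated", "operator_ack": True}, ts=0.0)
    log.append(fp, {"action": "route_replanned"}, ts=1.0)
    print(log.verify())  # True
    log.entries[0]["event"]["action"] = "edited"
    print(log.verify())  # False: the first entry's hash no longer matches
```

A production system would also sign entries and replicate the log off-device, since a chain alone only detects tampering; it does not prevent someone from rewriting the whole chain.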

The dual-use line and safety-by-design in autonomy

Much of the battlefield stack has civilian counterparts: computer vision for infrastructure inspection, autonomous navigation for logistics and anomaly detection for industrial security. That dual-use reality is a strength, bringing bigger markets and faster iteration, but it requires disciplined guardrails. NIST’s AI Risk Management Framework, the Defense Innovation Board’s responsible AI principles and emerging test-and-evaluation playbooks provide templates for achieving measurable safety without freezing innovation.

Expect Thornton to emphasize red-teaming and adversarial testing; jamming, spoofing and data poisoning are not theoretical concerns. The most promising defense AI startups now treat cyber-electromagnetic resilience as a first-class product requirement, combining large-scale simulation with instrumented field experimentation. Companies such as Skydio and Shield AI have shown how incremental gains in autonomy can move from experimental to operational when that discipline is built in.

What to watch from Thornton’s session at Disrupt

- First, a definition of Mach’s edge-first architecture and how it manages the tradeoff between autonomy, comms degradation, and human controllability.

- Second, concrete examples of how a distributed approach reduces kill-chain latency without risking unintended escalation.

- Third, an honest assessment of procurement realities — what the transition from demo to fielded capability really requires and how small teams can manage milestone-driven contracts.

If the broader trend holds, Thornton’s talk will be another, perhaps jarring, reminder of how the sector keeps changing: defense is becoming as much about software as about hardware. The winners will combine credible AI engineering, mission literacy and an operating model that moves at startup speed, provided they meet the bar set by policymakers, allies and the warfighters who will depend on those systems.