Google DeepMind’s latest system, SIMA v2, is a major leap for embodied AI: Gemini’s language understanding and reasoning capabilities are brought into agents that perceive, decide, and act in rich 3D environments.

In a research preview, the team demonstrated an agent that doesn’t merely follow scripts — it reads scenes, interprets intent, and plans multi-step actions with less human guidance than before.

What SIMA 2 changes in long-horizon, general agents

The original SIMA, from 2024, was trained for hundreds of hours in gameplay and could follow basic commands across different games. But the progress on longer-horizon goals was less promising: it had approximately a 31% completion rate for complex tasks, compared with about 71% for human players according to benchmarks DeepMind itself published. Led by the Gemini 2.5 subject model, SIMA 2 — powered up with a new Flash Lite model — almost doubled that performance in internal assessments, according to researchers, while retaining generality of operation across games and synthetic worlds it has never seen.

That improvement is significant: most “agents” are able to either parse text or interact in an environment, but not both with good generalization. The key contribution of SIMA is the synergistic mix of language-driven reasoning and embodied task control, which enables our agent to ground natural instructions in visual context (including game mechanics) and decompose tasks into executable sub-tasks.

How Gemini elevates embodied reasoning in complex worlds

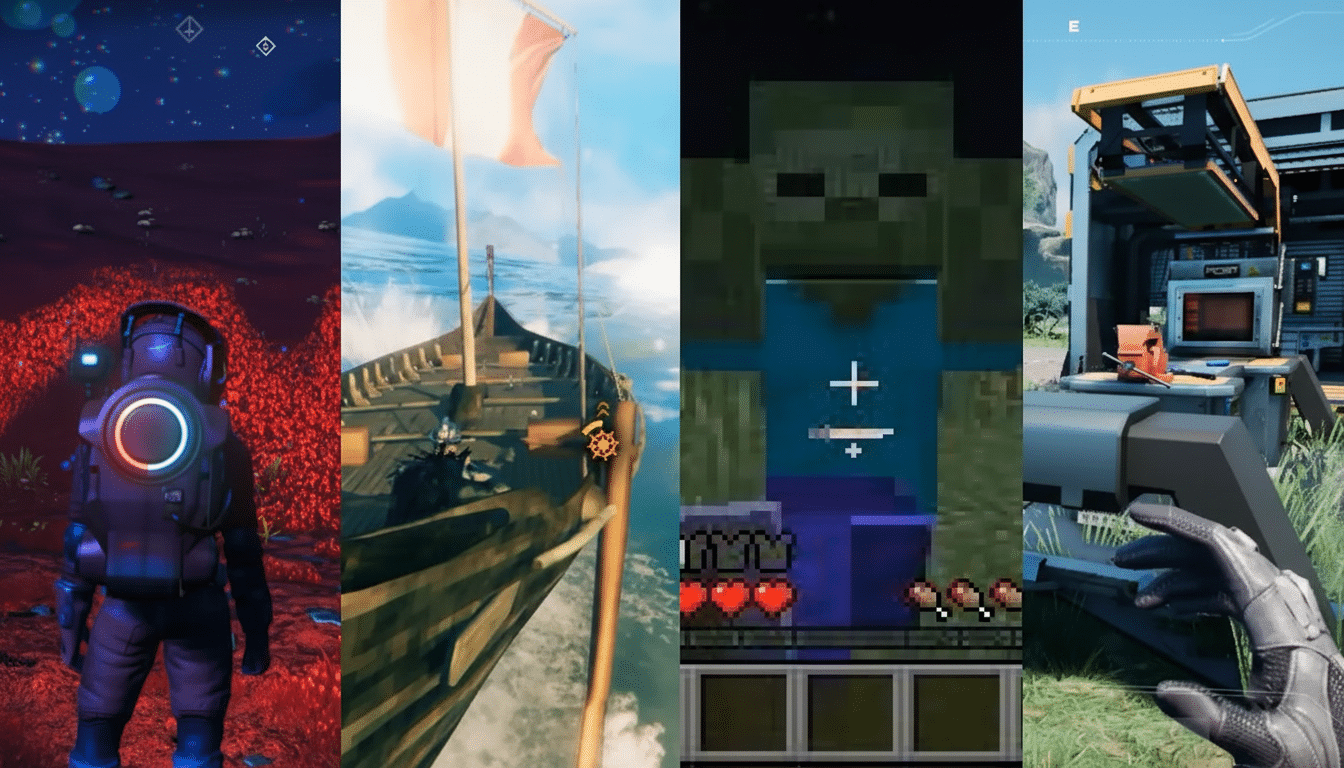

DeepMind showed SIMA 2 working in No Man’s Sky, as the agent was able to read rocky terrain, discover a distress beacon, and coordinate next steps without hand-holding. In a different task, after being commanded to “go to the house that is like a ripe tomato,” agents resolved that reference, associated it with some other red targets, and moved accordingly — demonstrating semantic and not mechanical matching.

Thanks to its multimodal characteristics, Gemini may lead to new forms of interaction. The team demonstrated the agent reacting to emoji instructions 🪓🌲, leading to a tree-chopping action — emphasizing that such high-level intent could be distilled into much shorter symbols yet effectively steer outputs. And critically, SIMA 2 internally reasons about objects and affordances in generated “never-seen-before” photorealistic worlds constructed by Genie (DeepMind’s world model), even when there is substantial visual noise present such as trees, benches, or butterflies.

Self-improvement without human labels through AI feedback

Arguably the most significant change is how SIMA 2 learns. Where SIMA 1 was dependent on human gameplay, the new agent instead leverages AI to bootstrap its own learning. When placed in a new environment, one Gemini model suggests challenges for the agent to solve, while a disjoint reward model evaluates attempts; rinse and repeat — accruing synthetic experience that enables better performance over time. This is a form of reinforcement learning from AI feedback, avoiding costly human annotation while expanding an agent’s skill base in situ.

Joe Marino, a senior research scientist at DeepMind, described SIMA 2 as “more general” and added that it was “self-improving,” able to deal with complex, unseen situations. The approach falls in a wider trend of research: automatic curriculum generation and AI-generated supervision, which groups from OpenAI to academic labs have developed, are gradually chipping away at the data ceiling that has held embodied agents back.

From virtual worlds to robots via hierarchical control

DeepMind frames SIMA 2 as a step toward general-purpose robots. Frederic Besse, a senior staff research engineer, described the role of such agents as focusing on high-level cognition — grasping tasks, reasoning about objects and places, planning — then passing to low-level control systems that move joints and wheels. That separation is in line with how production toolchains increasingly decouple task knowledge from motion planning (as hierarchical frameworks like NVIDIA Isaac and research benchmarks like Habitat demonstrate).

The sim-to-real gap is still a challenge. Real homes and factories are messier than games, and perception can fall apart under poor lighting, occlusion, or even wear-and-tear. Nonetheless, by linking semantics and goals to rich interactive worlds, SIMA 2 reduces the distance between ideas. If an agent can consistently read “check how many cans of beans are in the cupboard” in simulation — like beans, cupboards, counting even — and find them (but not empty the cabinet and paint said object red because what does that even look like?), then introducing sturdy manipulation is a more straightforward next step.

Why this SIMA 2 release matters for embodied AI progress

SIMA 2 brings together several sources of progress — multimodal reasoning, embodied control, and AI-supervised learning — in a single agent that usefully solves tasks in different virtual worlds. It’s evidence that language models are slowly becoming powerful coordinators for action, not just chat interfaces. And by demonstrating approximately 2× the performance of the prior generation on challenging tasks, it provides a tangible benchmark that researchers in the field can follow as agents transition from playful sandboxes to a practical domain with real-world value.

The implications extend beyond gaming. Enterprise digital twins, autonomous inspection, assisted robotics, and training simulators that incorporate agents who understand instructions, reason about context, and adapt without being constantly retrained can all be made possible by this move toward adaptation. If subsequent incarnations continue to build, SIMA’s recipe — Gemini for reasoning, world models for variety, and self-generated curricula to scale — could serve as a reference architecture for embodied AI in research and industry.