Google is pushing a new preview of Gemini 3.1 Pro, positioning the model as a sharper, more reliable problem-solver for everyday users, developers, and enterprise teams. The company says the upgrade significantly strengthens multi-step reasoning, and it is framing 3.1 Pro as a sturdier default model for complex tasks rather than a niche, high-end option.

The headline claim is straightforward: Gemini 3.1 Pro doubles reasoning performance compared to Gemini 3.0 Pro. While Google has not published full benchmark tables alongside the announcement, the company is explicitly targeting better outcomes on multi-hop instructions, math and logic, and structured problem decomposition—areas where large language models typically stumble under pressure.

What’s New in Gemini 3.1 Pro: Reasoning Upgrades

Reasoning is the focus. In practical terms, that means 3.1 Pro should be more consistent at breaking big problems into manageable parts, sticking to constraints, and following through to a final, defensible answer. These are the capabilities that matter when a model is asked to help plan a product launch, reconcile data from multiple sources, or turn a rough prompt into working code with proper edge-case handling.

Google describes the model as a more “capable baseline” for problem-solving. Expect tighter chain-of-logic on tasks like:

- Converting messy spreadsheets into clean schemas

- Drafting SQL to answer specific questions against a known dataset

- Generating test cases after reading API docs

- Outlining multi-week schedules with dependencies that actually line up

Although the preview does not spell out token limits or latency targets, an emphasis on reasoning typically pairs with improvements to context handling and tool use. In past industry updates, better reasoning has often correlated with fewer dead ends, fewer retries, and more stable outputs when the prompt grows longer or the task requires several steps of planning.

Availability and Access for the Gemini 3.1 Pro Preview

Gemini 3.1 Pro is rolling out in preview to consumers via the Gemini app. Google notes higher limits for Google AI Pro and Ultra subscribers—an indicator that heavier users and teams can push the model harder before throttling kicks in. The model also appears in NotebookLM for those same subscription tiers, positioning the upgraded reasoning engine inside Google’s source-grounded note-taking and research tool.

Developers and business customers are included in the preview phase, though Google has not provided a firm date for broad general availability. The company says wider access is coming “soon,” which typically signals a short runway while feedback is gathered and capacity is tuned.

Why Better Reasoning Matters for Real-World AI Tasks

Many generative AI failures are not about knowledge gaps—they’re about poor reasoning under constraints. When a model forgets an instruction halfway through, hallucinates a step, or misapplies a rule, the output can look plausible but be unusable. A sturdier reasoning core reduces those failure modes and makes the model more predictable in production-adjacent work.

Consider a few common workflows:

- Reconciling inventory across regions with slightly different tax rules

- Converting business requirements into robust acceptance criteria

- Transforming a dense policy PDF into a decision tree

- Stitching together multiple APIs to automate a back-office process

Each requires the model to hold many details in mind, follow structured logic, and resist misleading shortcuts. That is precisely the territory Google is targeting with 3.1 Pro.

How It Stacks Up Against Other Advanced AI Models

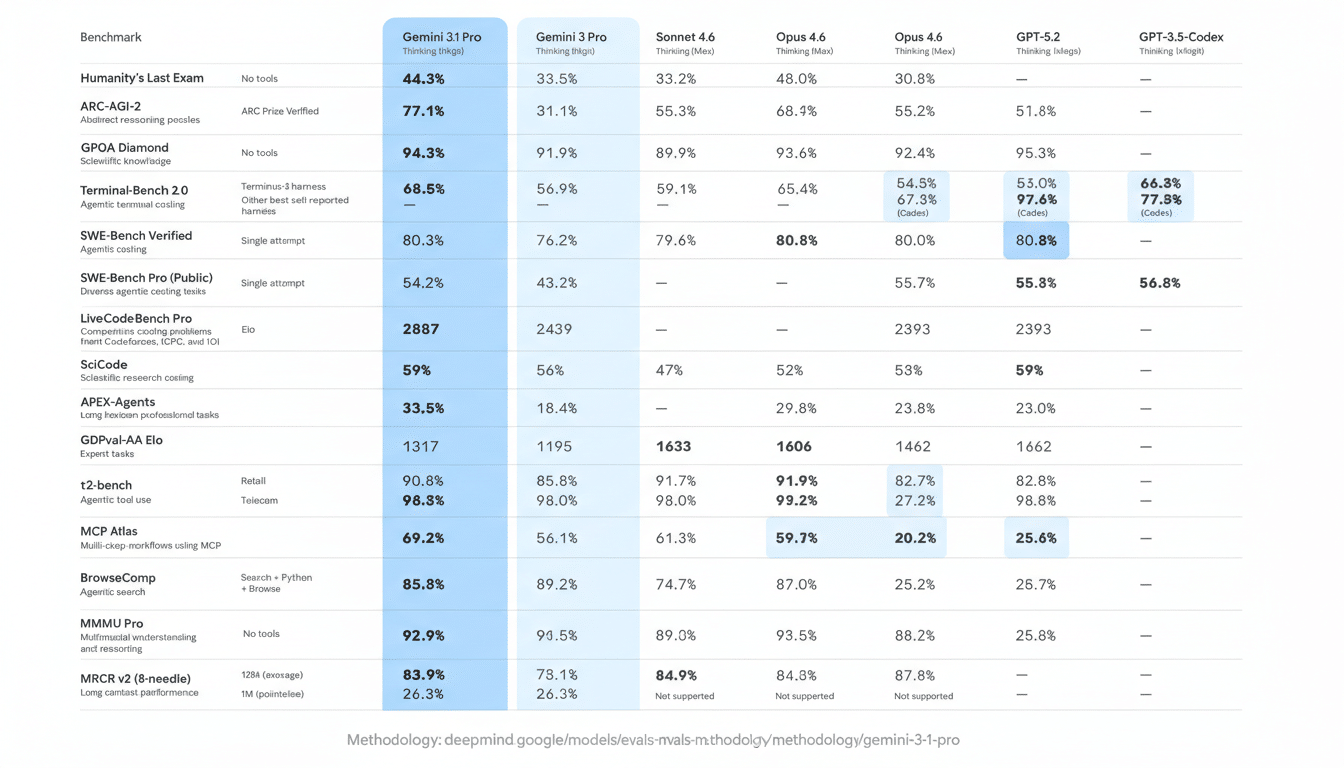

This release lands amid a broader industry push to strengthen reasoning over raw fluency. Advanced models from OpenAI and Anthropic have similarly emphasized multi-step problem solving and planning. Independent evaluation frameworks such as Stanford’s HELM and benchmarks like MMLU, GSM8K, and GPQA are commonly used to validate progress. While Google has not shared a benchmark rundown for this preview, the “2x” reasoning claim sets clear expectations about where users should notice gains.

Enterprises will be watching reliability, not just demos. If 3.1 Pro can cut retries and produce more stable first-pass outputs, it can reduce operational costs and help teams move prototypes into day-to-day workflows with fewer guardrails and fewer human edits.
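To make the link between retries and cost concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes each attempt succeeds independently with the same first-pass rate and that a team caps the number of attempts per task. The function names, success rates, and per-call cost are hypothetical illustrations, not figures from Google.

```python
def expected_calls(first_pass_rate: float, max_attempts: int) -> float:
    """Expected number of model calls per task.

    Assumes every attempt succeeds independently with probability
    first_pass_rate, and that we stop after max_attempts tries.
    """
    if not 0.0 < first_pass_rate <= 1.0:
        raise ValueError("first_pass_rate must be in (0, 1]")
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    fail = 1.0 - first_pass_rate
    # Call k+1 only happens if the first k calls all failed,
    # so E[calls] = 1 + fail + fail^2 + ... + fail^(max_attempts - 1).
    return sum(fail ** k for k in range(max_attempts))


def cost_per_task(first_pass_rate: float, max_attempts: int,
                  cost_per_call: float) -> float:
    """Expected spend per task, given a hypothetical flat cost per call."""
    return expected_calls(first_pass_rate, max_attempts) * cost_per_call


if __name__ == "__main__":
    # Hypothetical first-pass rates for an older and a newer model.
    for rate in (0.6, 0.8):
        calls = expected_calls(rate, max_attempts=3)
        print(f"first-pass rate {rate:.0%}: {calls:.2f} expected calls per task")
```

Under these assumptions, a higher first-pass rate lowers the expected number of calls per task, which is the mechanism behind the cost argument above. Real workloads will differ, since failures are rarely independent and human review time often dominates API spend.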

What to Watch Next as Gemini 3.1 Pro Rolls Out

Three signals will tell the story from here:

- Whether Google expands usage limits as demand scales

- Whether developers see tangible improvements on multi-step tasks without extra prompt engineering

- How quickly the model exits preview into general availability
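Developers who want to check the second signal themselves could measure first-pass success on their own multi-step tasks. The sketch below is one hedged way to do that in Python. The `model` argument is a stand-in for whatever client call a team already uses (no Gemini API is shown or assumed here), and the tasks and checkers are hypothetical placeholders.

```python
from typing import Callable, Iterable, Tuple

# A task pairs a prompt with a checker that decides if the output is acceptable.
Task = Tuple[str, Callable[[str], bool]]


def first_pass_rate(model: Callable[[str], str], tasks: Iterable[Task]) -> float:
    """Fraction of tasks whose first model output passes its checker.

    Each prompt is sent exactly once, with no retries and no prompt tweaks,
    so the score reflects out-of-the-box behavior.
    """
    task_list = list(tasks)
    if not task_list:
        raise ValueError("at least one task is required")
    passed = sum(1 for prompt, check in task_list if check(model(prompt)))
    return passed / len(task_list)


if __name__ == "__main__":
    # Hypothetical stub standing in for a real model client.
    def stub_model(prompt: str) -> str:
        return "4"

    demo_tasks = [
        ("What is 2 + 2? Answer with a number only.", lambda out: out.strip() == "4"),
        ("What is 3 + 3? Answer with a number only.", lambda out: out.strip() == "6"),
    ]
    print(f"first-pass rate: {first_pass_rate(stub_model, demo_tasks):.0%}")
```

Running the same fixed task set against the old and new model, with identical prompts, gives a like-for-like comparison that does not depend on vendor benchmarks.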

NotebookLM access for AI Pro and Ultra users also suggests Google wants to showcase reasoning gains in grounded, source-cited workflows where accuracy matters.

For now, Gemini 3.1 Pro arrives as a pointed upgrade aimed squarely at the hardest part of LLM performance: getting the steps right, not just sounding right. If the real-world results match the 2x reasoning promise, this preview could mark a meaningful step toward more dependable AI assistance across consumer, developer, and enterprise use cases.