Google appears to be preparing its Translate app for a future in which real-time language help lives in your field of vision. A teardown of the latest version of the app points to new Live Translate controls that route audio output to specific devices, plus a system-level way to keep translations running in the background. Both are useful improvements that would make particular sense on smart glasses. Add Gemini-powered speech understanding, and Translate starts to look like the killer everyday app for wearables you won't cringe at wearing.

Live Translate Gets Glasses-Aware Controls

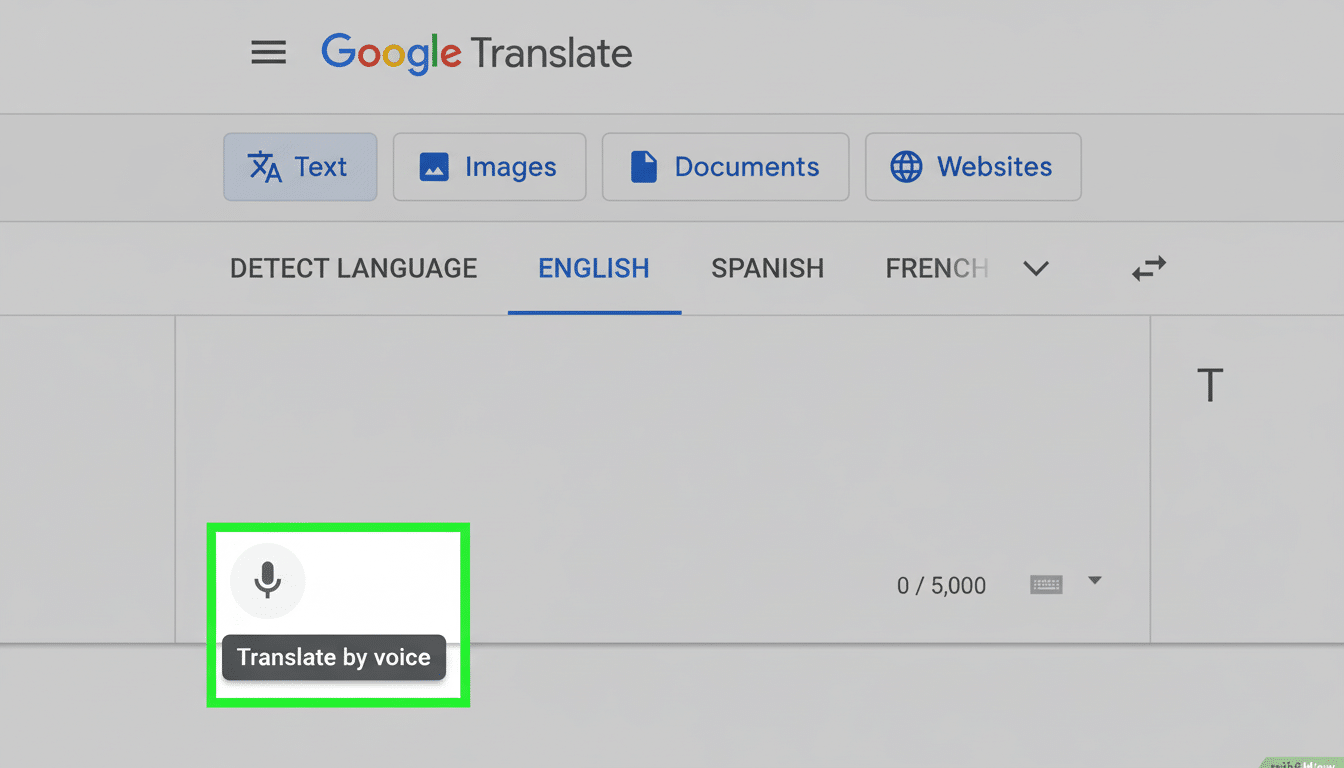

In the current Translate app, Live Translate shows on-screen text and can optionally speak the translation aloud, with audio following Android's system-wide routing like the rest of your phone's apps. The new interface goes further with per-language audio routing: for each side of the conversation, output can be muted or sent to the phone speaker, headphones, or a future "glasses" option. In theory, you could hear translations into your language privately through headphones or glasses while translations into the other person's language play out loud through your phone speaker. That avoids feedback and crosstalk and keeps both people in the conversation comfortable.

This seemingly small change could significantly alter real-world use. Picture a traveler haggling over a taxi fare, a teacher accommodating new students, or an intake nurse recording a patient's information. Splitting audio by language eliminates the hassle of toggling volume or passing a phone back and forth. It also anticipates a heads-up display, where you quietly hear your prompts while the other person hears the translation.

Background Translation Points To Hands-Free Use

The app also appears set to gain a persistent notification, with pause and resume buttons, that keeps Live Translate running while you switch apps. That is more than a convenience; it is table stakes for any wearable workflow. Whether you're looking at a map, reading a menu, or replying to a message, translation can't drop out. Google already does something similar with Gemini Live, and bringing it to Translate fits the broader shift toward hands-free, glanceable interactions.

Together, background operation and device-level audio routing address two of Live Translate's biggest limitations: it no longer has to monopolize your phone screen, and sound can be directed where it should go.

That is baseline functionality for glasses in which the phone serves as the brains and the frames act as a discreet input and output device.

Why Translate Is Optimized For Smart Glasses

Translate is already familiar and, for many people, indispensable, an unusual advantage for any early wearable ecosystem. It supports more than 130 languages and draws on the latest advances in Google's multimodal Gemini models. On glasses, the payoff grows further: subtitles anchored in your view of the world, less social cost than staring at a phone screen, and faster back-and-forth when you hear your language in-ear while the other party hears theirs.

Google has previously demoed translation on glasses publicly, showing live captions overlaid on your view. These app changes look like the plumbing needed to make that demo repeatable at scale: routing audio correctly, keeping sessions alive while you multitask, and offering a device picker that explicitly lists "glasses" as an endpoint.

Competitive Pressure And Ecosystem Signals

Rivals are chasing the same opportunity. Meta's newest Ray-Ban models include an on-device assistant that can translate both speech and text, while a number of newer and niche brands are also trying to make AI captions work. The broader wearables and XR categories have gained momentum as well: industry estimates from IDC and Counterpoint Research point to renewed interest as AI has moved from novelty to utility. If Google can combine Translate's reach with reliable, low-latency audio and legible on-glass captions, it has a compelling consumer hook.

Hardware readiness matters. Delivering reliable translation on glasses will require beamforming microphones, low-latency Bluetooth (in practice, LE Audio with the LC3 codec), and battery-friendly speech processing. Google's ability to shift work between on-device and cloud models, as it already does across Pixel features and Gemini, could help reduce lag without sacrificing quality.

What To Watch Next For Google Translate On Glasses

On the software side, look for Google to enable the device picker's "glasses" target and keep refining latency as it builds an efficient pipeline between phone and wearable. On the hardware front, watch for Android XR partnership announcements that focus on audio, captions, and privacy-first capture indicators, all of which are critical to mainstream adoption.

The destination is clear: make translation ambient, private when needed, and always available. If Google can get a handle on those specifics, Translate won't just be a killer app for smart glasses. It might be the reason many people decide they need a pair.