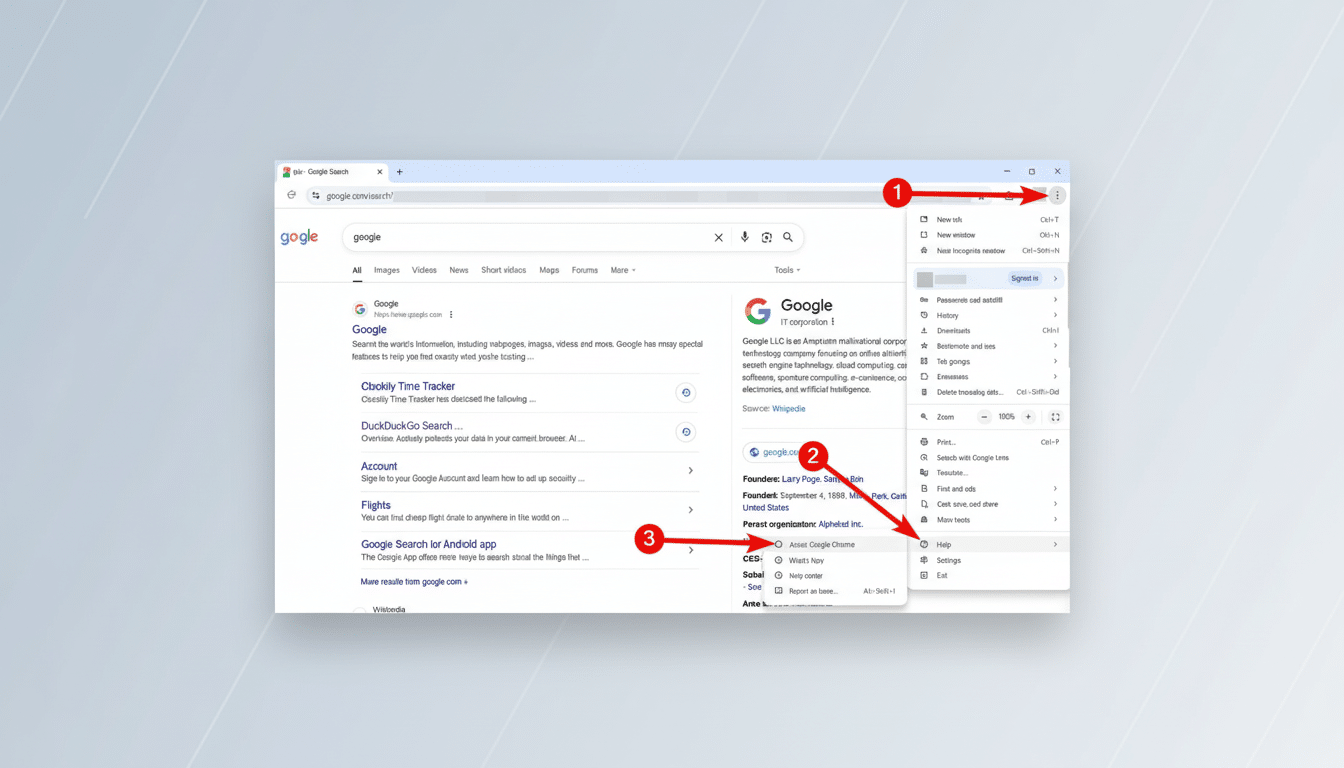

Google has revealed its approach to tightening its leash on Chrome’s next generation of “agentic” features, or tools that perform tasks for a user. The company described a layered approach, which combines model-level oversight with browser-enforced restrictions and explicit user permission, in an attempt to show how common browsers could use AI agents without paving new paths for fraud, data leaks, or unwanted behavior.

Agentic capacities portend convenience such as booking travel, price-checking, and authoring messages directly in the browser. They also raise novel risks. Chrome’s design addresses this with observer models that oversee agent plans, origin-level restrictions that constrain what the agent can read, write, or take over, and guardrails that enforce user consent for sensitive actions.

How Chrome Protects Itself With Its Agent

Google favors a “planner” model complemented with a Gemini-trained “User Alignment Critic,” which evaluates the planned actions by the planner against users’ stated intentions. And if the critic spots steps that deviate from desired paths or introduce unnecessary risks, it motivates the planner to modify the sequence before anything occurs.

Crucially, the critic model is scoped to metadata about candidate actions — not full page content — reducing exposure of browsing data, yet still providing enough signal to catch off-target behavior. This feedback loop is akin to a real-time code review for agent plans: it advises safe, goal-aligned actions, and rejects everything else.

Boundaries at the Origin Restrict Information Flow

In order to restrict where the agent is able to read or write data (from/to), Chrome introduces Agent Origin Sets. The agent can consume content (via clicks or text entry) from certain origins that are marked as read-only, and it can perform mouse clicks and send text only on origins marked as read-write. That separation limits what cross-origin data can be exposed — the kind of exposure attackers use to cajole through ads, embedded widgets, or hostile iframes.

Take a shopping site: product listings could be readable because they are relevant to the task at hand, while ad slots or analytics frames are off-limits. Chrome is able to enforce this separation at the browser level, so it can restrict which data even gets sent to the model and which on-page elements the agent is allowed to manipulate.

Navigation and Credential Safeguards for Agents

Chrome also has a model in the loop to vet navigation targets, so that any malicious or pattern-generated URLs are caught before the agent lands on them. That’s important because being able to passively browse could increase phishing or drive-by risk, as all links might be assumed to be safe.

Some examples of this are sensitive surfaces — such as banking portals or health care sites — especially any flow that would cause authentication, where Chrome intentionally adds friction. The agent must request permission from the user to initiate injection, and if sign-in is required, Chrome gates injection through the browser’s password manager. Google stressed that the agent model does not receive or process passwords directly. The same consent gate applies to high-impact actions like purchases or messages.

In application, that would mean an itinerary-booking task could continue to completion in the background as specific options are made, with comparisons and filters automatically applied — but pausing to ask “Are you sure?” at checkout (including cart details, total, and merchant) before confirming.

Blocking Prompt Injection and Red Teaming

To defend against prompt injection — the approach of embedding adversarial commands in web content — Chrome uses a specific classifier and continues to test possible adversarial examples. This also follows industry recommendations: the OWASP Top 10 for LLM Applications names prompt injection as one of the top risks, and the NIST AI Risk Management Framework states that “controls, including layered defenses and testing of autonomous behaviors, shall be provided.”

Google says it is using these systems against attacks crafted by researchers to verify defenses before they are rolled out broadly. The ecosystem at large is trending in this direction; Perplexity recently released an open-source model designed to detect injection attempts against agents, and academic and industry red teams continue to release test suites that evaluate the reliability of models under adversarial conditions.

Why the Browser Wars Matter for AI Agent Safety

Browsers are the new operating layer for artificial general intelligence agents, and Chrome’s stance on this matter could play a big role in what sort of AI we’re using. Chrome accounts for something like 65 percent of worldwide browser usage, according to StatCounter; so the potential for any little screw-up on Google’s part to become very big is quite large — and a strong start could lay down the de facto standard as far as safety is concerned.

Competitors are playing, too — Edge works agent-like Copilot actions into the browser, Brave’s Leo does some on-page tasks for you, and upstart AI browsers are racing to automate your daily workflow. The key differentiator is not just what the agents can do, but how securely the user can allow them to do it.

The strategy Google outlines is cautious by design: keep the agent on a short leash, limit what it can see and do, and leave control in the hands of the user at crucial junctures. And if done right, that balance might help shift agentic browsing from flashy demos to day-to-day dependability.