General Motors is ripping the guts out of its vehicles to make artificial intelligence the centerpiece of the driving experience. The strategy turns GM’s vehicles from cars with an estimated 30,000 or more discrete parts, coordinated by dozens of isolated electronic control units, into software-defined vehicles, much like your smartphone or laptop. One purpose-built, high-performance computer will power critical safety automation (a next-generation autopilot of sorts), rich infotainment capable enough for hardcore gamers, and enough processing headroom that every passenger can use their own screen continuously without taxing the compute that runs safety-critical functions.

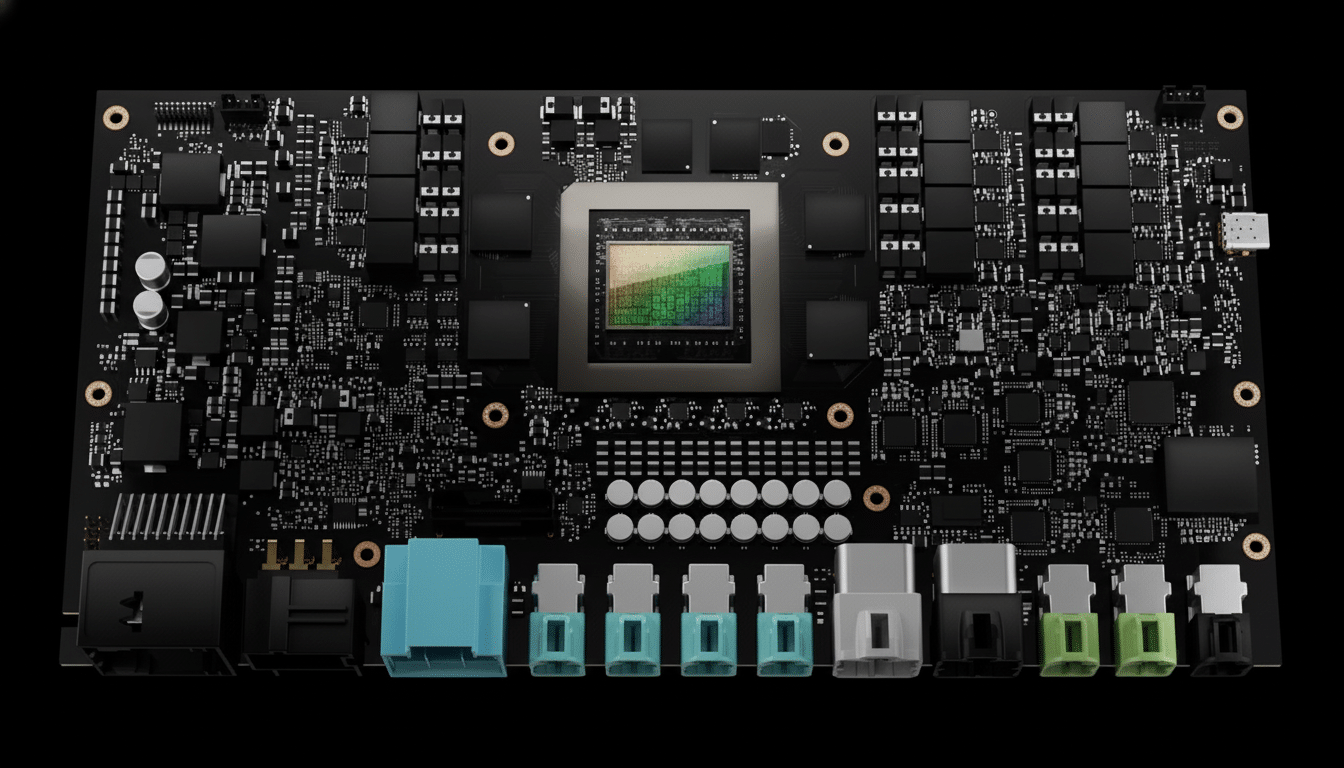

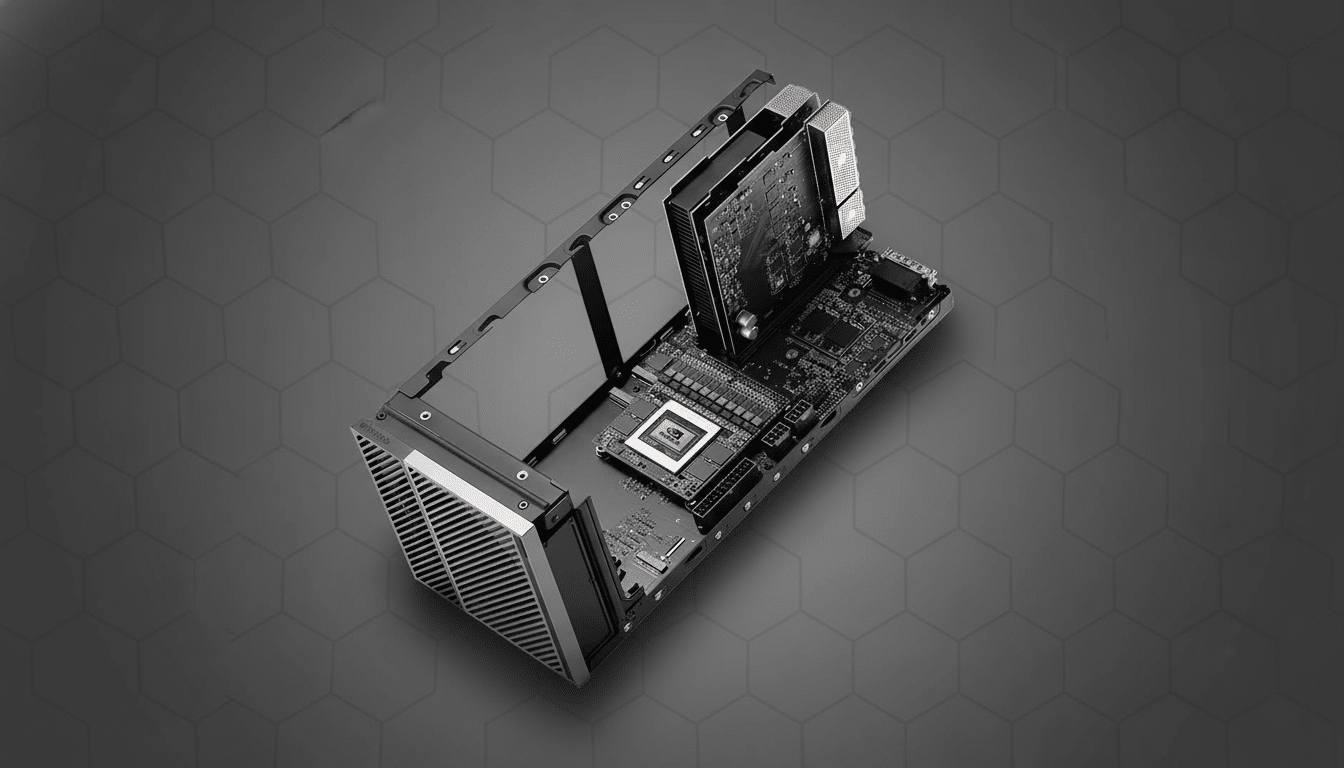

GM says the new electrical architecture and centralized compute platform will reach production in 2027 with the Cadillac Escalade IQ before spreading across more of its lineup beginning in 2028. At its heart is Nvidia Drive Thor, a purpose-built autonomous-vehicle computer that allows many domains to be combined on a single chip. The choice builds on GM’s longstanding partnership with Nvidia.

Why GM Is Remaking the Vehicle Brain for the Future

The modern car is full of electronic control units (ECUs) that govern everything from the wheels and brakes to Bluetooth. That sprawl introduces complexity, wiring weight, and software bottlenecks. The early move by Tesla toward centralized compute and always-on over-the-air updates set the competitive bar, and Chinese brands including BYD, Xpeng, and Li Auto followed suit with rapidly evolving software stacks and aggressive hardware roadmaps.

GM has been headed in this direction for years. Its Vehicle Intelligence Platform opened up bigger data pipes and over-the-air updates, and its in-car software layer, initially branded Ultifi, brought app-like services to newer models. The new architecture is the clean-sheet step that ties those efforts together and raises the ceiling for autonomous-grade compute, faster updates, and feature expansion.

Centralized Compute With Nvidia at the Center

Nvidia says Drive Thor can deliver up to 2,000 teraFLOPS of AI performance, enough to fuse camera, radar, and lidar signals while running perception, planning, driver monitoring, and infotainment on a single system. Safety “islands,” hypervisor separation, and deterministic scheduling keep entertainment and autonomy workloads from interfering with each other, which is essential for meeting ISO 26262 functional-safety targets.
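
The partitioning idea can be illustrated with a minimal sketch, assuming a static, time-partitioned schedule of the kind common in safety-critical embedded systems. The partition names, budgets, and 10 ms frame below are hypothetical, not details of Drive Thor or GM’s software. The point is that each safety workload gets a fixed, guaranteed slice of every frame no matter how much time infotainment requests.

```python
from dataclasses import dataclass

# Hypothetical illustration of a static, time-partitioned schedule.
# Partition names, budgets, and frame length are assumptions, not GM/Nvidia details.


@dataclass(frozen=True)
class Partition:
    name: str
    budget_ms: float       # CPU time guaranteed to this partition in every frame
    safety_critical: bool


FRAME_MS = 10.0  # hypothetical length of one repeating scheduling frame


def build_schedule(partitions, frame_ms=FRAME_MS):
    """Place partitions back-to-back inside one frame, safety-critical ones first.

    Rejects configurations whose budgets overflow the frame, so overcommitment
    is caught at design time instead of showing up as a missed deadline.
    """
    total = sum(p.budget_ms for p in partitions)
    if total > frame_ms:
        raise ValueError(f"budgets total {total} ms, exceeding the {frame_ms} ms frame")
    # sorted() is stable; False sorts before True, so safety partitions lead.
    ordered = sorted(partitions, key=lambda p: not p.safety_critical)
    schedule, t = [], 0.0
    for p in ordered:
        schedule.append((t, t + p.budget_ms, p.name))
        t += p.budget_ms
    return schedule


def grant(partition, requested_ms):
    """Cap any request at the partition's budget: a busy game cannot borrow perception time."""
    return min(requested_ms, partition.budget_ms)


if __name__ == "__main__":
    parts = [
        Partition("infotainment", 3.0, False),
        Partition("perception", 4.0, True),
        Partition("planning", 2.0, True),
    ]
    for start, end, name in build_schedule(parts):
        print(f"{start:4.1f}-{end:4.1f} ms  {name}")
    print(grant(parts[0], 8.0))  # infotainment asks for 8 ms but is capped at its 3 ms budget
```

A static table like this trades flexibility for predictability: unallocated time in the frame stays idle rather than being lent to whichever workload is busiest, which makes worst-case timing easy to reason about.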

For GM, replacing many domain controllers with one computer reduces parts count, latency, thermal-management complexity, and, over time, cost.

It also future-proofs vehicles for more advanced levels of automation, since software updates can tap spare computing headroom rather than waiting for a new ECU cycle.

From ECUs to Zonal Networking Across GM’s Vehicles

General Motors says it wants to consolidate dozens of electronic control units into a central computer connected to three zonal aggregators. Those hubs act as a translation layer, converting hundreds of sensor and actuator signals into a common digital format and carrying data to the central computer, and commands back out, over a multi-gigabit automotive Ethernet backbone.
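
A zonal aggregator’s translation job can be sketched as follows, assuming each zone receives raw integer signals from local sensors and republishes them as one normalized message shape for the central computer. The signal sources, scaling factors, and JSON payload are hypothetical stand-ins; production vehicles would use automotive middleware such as SOME/IP over Ethernet rather than JSON.

```python
import json
from dataclasses import dataclass

# Hypothetical sketch of a zonal aggregator. Signal sources, scaling, and the
# JSON payload are illustrative assumptions; real vehicles would use
# automotive middleware (e.g. SOME/IP) rather than JSON.


@dataclass(frozen=True)
class RawSignal:
    source: str  # where the value came from, e.g. a local bus ID or ADC channel
    raw: int     # unscaled integer as transmitted by the sensor


@dataclass(frozen=True)
class SignalSpec:
    name: str
    scale: float
    offset: float
    unit: str


# Decoding table for one hypothetical zone: raw integer -> engineering units.
FRONT_LEFT_SPECS = {
    "bus:0x1A0": SignalSpec("wheel_speed_fl", 0.01, 0.0, "km/h"),
    "bus:0x2B4": SignalSpec("brake_pressure_fl", 0.1, 0.0, "bar"),
    "adc:3": SignalSpec("cabin_temp", 0.5, -40.0, "degC"),
}


def normalize(zone, signal, specs):
    """Translate one raw signal into the common message shape, or None if unknown."""
    spec = specs.get(signal.source)
    if spec is None:
        return None  # unknown source: drop it rather than forward garbage
    return {
        "zone": zone,
        "name": spec.name,
        "value": signal.raw * spec.scale + spec.offset,
        "unit": spec.unit,
    }


def aggregate(zone, signals, specs):
    """Batch normalized readings into one payload for the Ethernet backbone."""
    readings = []
    for s in signals:
        msg = normalize(zone, s, specs)
        if msg is not None:
            readings.append(msg)
    return json.dumps({"zone": zone, "readings": readings}).encode("utf-8")


if __name__ == "__main__":
    payload = aggregate(
        "front_left",
        [RawSignal("adc:3", 130), RawSignal("bus:0x7FF", 1)],
        FRONT_LEFT_SPECS,
    )
    print(payload)
```

Keeping the decoding tables in each zone means the central computer only ever sees one message shape, which is one reason a zonal layout can simplify the central software.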

GM said the overhaul will provide about 10 times more over-the-air update capacity, around 1,000 times more bandwidth, and up to 35 times more AI performance for autonomy and other advanced features, compared with current systems. Industry teardowns by firms such as S&P Global Mobility indicate that zonal designs can also cut wiring-harness length and weight by 20–30%, improving efficiency and manufacturability.

Security and compliance have to be built in from the start. ISO/SAE 21434 (cybersecurity engineering) and UNECE R155 and R156 (cybersecurity and software-update management, respectively) are becoming table stakes as cars behave more like connected computers on wheels.

What This Means for Drivers and Passengers

Key features include a natural-language assistant and more capable automated driving. Expect an AI that understands context (“find me a quiet coffee shop on my planned route, with parking”) and can interact with vehicle systems to adjust cabin settings, schedule charging, or explain a warning light in plain language. Running parts of the model on the car’s own compute should keep latency low, while cloud services handle more demanding requests.

On the road, GM is aiming for greater highway automation, including eyes-off capability under defined conditions, similar to the Level 3 systems some luxury brands now sell. Mercedes-Benz, for one, has approval for its Level 3 “Drive Pilot” in some U.S. states, which offers a template for the regulatory path. Any eyes-off feature in GM cars will depend on strict operational limits, redundant steering and braking systems, high-definition maps, and robust driver monitoring where regulators and insurers require it.

Centralized compute does more than automate driving: it enables richer graphics, gaming, and streaming, as well as faster rollouts of OTA features. GM’s switch to an embedded infotainment stack built on Android Automotive OS lets the automaker own the user experience and sell software. The company has previously stated ambitions for multibillion-dollar annual software and services revenue by 2030, goals that fit neatly with McKinsey analyses projecting hundreds of billions of dollars in automotive software value over the course of this decade.

The Competitive and Regulatory Environment

GM’s move comes at a time when driver-assistance branding is under scrutiny. NHTSA’s active investigations and crash-reporting programs underscore that today’s assistance systems do not make cars self-driving. The Insurance Institute for Highway Safety has urged strict driver monitoring and unambiguous human accountability. Consumer trust is fragile: AAA’s research in recent years has found that most U.S. drivers are wary of fully self-driving cars, so transparency and clear controls during the rollout will be key.

GM also carries lessons from the robotaxi sector’s stumbles in 2023, a year in which high-profile incidents, including one involving GM’s own Cruise unit, heightened safety concerns. Scalable, supervised automation in personal vehicles offers a nearer-term, cash-generating path while the company pursues higher autonomy in more constrained settings.

Risks and the Path Forward for GM’s Software Shift

Integrating an entirely new compute architecture across both gas and electric vehicles is a heavy lift. Supply-chain resilience for advanced silicon, thermal constraints, and the power draw of high-end chips (a non-trivial drain on EV range) are real engineering trade-offs. But the zonal architecture, software abstraction layers, and OTA tooling are intended to repay that investment in speed: quicker fixes, faster feature launches, and a much longer useful life for the hardware inside the vehicle.

If GM launches with the Escalade IQ in 2027, broadens the rollout from 2028, and delivers meaningful automation, the payoff will not be measured in raw compute alone.

It will show up as fewer recalls for software problems, higher attach rates for paid features, regulatory approval for eyes-off use where appropriate, and, most important of all, a driving experience that gets smarter every month the car is on the road.