Google’s Gemini 2.5 Deep Think is the latest AI system to reach gold‑medal level at the International Collegiate Programming Contest (ICPC) World Finals. ICPC is the world’s most prominent programming competition, and it has pitted the best university teams against hard coding and problem‑solving tests for decades.

It solved 10 of the 12 problems. Within the first 30 minutes, it also cracked one problem that no human team solved at all. The result reopens a central question in AI: is this kind of reasoning a real step toward artificial general intelligence (AGI)?

What Gemini actually accomplished at ICPC World Finals

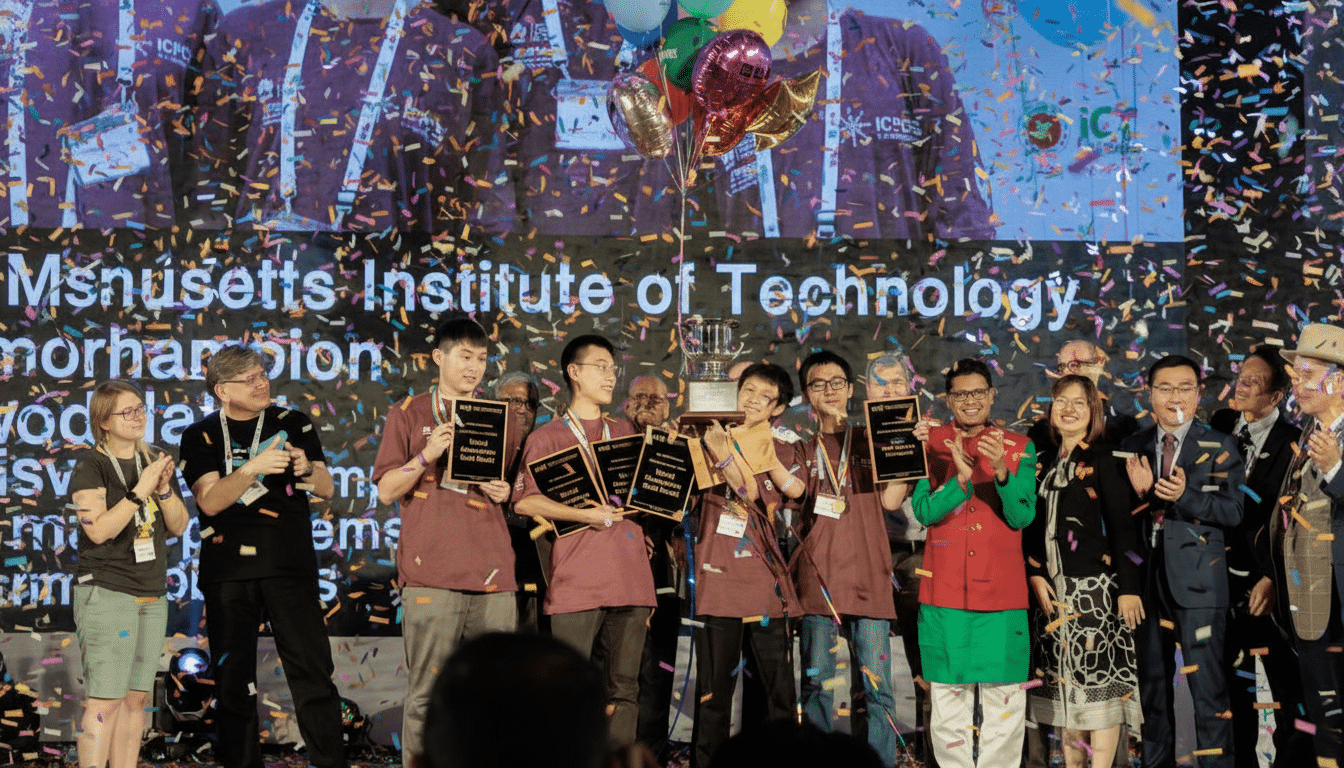

ICPC gives three‑person university teams about a dozen fiendishly hard problems, a single five‑hour session, and unforgiving scoring: only fully correct solutions count. Google's entry was Gemini 2.5 Deep Think, an organized swarm of agents that propose, test, and refine code. According to Google, it would have ranked second overall against the human teams, behind only one gold‑medal team.
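Here is what “unforgiving scoring” means in practice. Under the standard ICPC rules, teams are ranked first by the number of problems solved. Ties are broken by total penalty time. For each solved problem, the penalty is the contest minute of the accepted submission plus 20 minutes for every earlier rejected submission on that problem. The Python below is a simplified sketch of that rule. It omits the further tie‑breakers, and the teams and submission logs are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

PENALTY_PER_REJECTION = 20  # minutes added per rejected run on a problem that is later solved

@dataclass
class Team:
    name: str
    # problem id -> (contest minute of the accepted run, or None if unsolved; number of rejected runs)
    results: Dict[str, Tuple[Optional[int], int]] = field(default_factory=dict)

    def solved(self) -> int:
        return sum(1 for accepted, _ in self.results.values() if accepted is not None)

    def penalty(self) -> int:
        total = 0
        for accepted, rejected in self.results.values():
            if accepted is not None:  # rejected runs on unsolved problems cost nothing
                total += accepted + PENALTY_PER_REJECTION * rejected
        return total

def rank(teams: List[Team]) -> List[Team]:
    # More problems solved ranks higher; ties go to the lower total penalty time.
    return sorted(teams, key=lambda t: (-t.solved(), t.penalty()))

# Hypothetical teams and submission logs, purely for illustration.
alpha = Team("Alpha", {"A": (30, 1), "B": (100, 0), "C": (None, 3)})
beta = Team("Beta", {"A": (20, 0), "B": (90, 0)})
gamma = Team("Gamma", {"A": (10, 0)})
standings = [t.name for t in rank([alpha, beta, gamma])]
```

Alpha and Beta both solve two problems. Alpha's penalty is 150 minutes (30 + 20 for one rejected run + 100), while Beta's is 110 minutes, so Beta ranks first. Alpha's three failed attempts on problem C cost it nothing, because C was never solved.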

Scale matters here. The ICPC attracts competitors from about 3,000 universities in over 100 countries, and competitive coders generally regard it as the world championship of their sport. Excelling in this environment isn’t about getting autocomplete just right. It’s about rapidly decomposing problems, quickly choosing the right algorithm, and implementing it correctly under intense time pressure.

Signals of reasoning, not rote memorization or recall

Consider the problem no human team solved. It asked how to route liquid through a network of ducts into a set of reservoirs, where the ducts could be set in infinitely many possible configurations. According to Google DeepMind (the lab behind AlphaGo), Gemini assigned a value to each reservoir and then used a minimax‑based search to find the best configuration. That is interesting: it looks less like pattern recall and more like structured planning combined with executable code.
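This account does not include Gemini's code. The Python below is therefore only a generic, hypothetical illustration of a minimax‑style search. It is not Gemini's solution, and it does not model the contest problem. It approximates the minimum over x of the maximum over y of a toy payoff f(x, y) that is convex in x and concave in y. It does this with nested ternary search: the inner search finds the worst‑case y for a fixed x, and the outer search picks the x whose worst case is best. The function names, the payoff, and the search bounds are all assumptions.

```python
from typing import Callable, Tuple

def ternary_search_max(h: Callable[[float], float], lo: float, hi: float, iters: int = 100) -> float:
    """Return an approximate argmax of a unimodal (e.g. concave) function h on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if h(m1) < h(m2):
            lo = m1  # the maximum cannot lie in [lo, m1)
        else:
            hi = m2  # the maximum cannot lie in (m2, hi]
    return (lo + hi) / 2

def ternary_search_min(h: Callable[[float], float], lo: float, hi: float, iters: int = 100) -> float:
    # Minimizing h is the same as maximizing -h.
    return ternary_search_max(lambda t: -h(t), lo, hi, iters)

def minimax(
    f: Callable[[float, float], float],
    x_range: Tuple[float, float],
    y_range: Tuple[float, float],
    iters: int = 100,
) -> Tuple[float, float]:
    """Approximate (x*, value) for min over x of max over y of f(x, y)."""
    def worst_case(x: float) -> float:
        # Inner search: the adversary picks the y that maximizes f for this x.
        y = ternary_search_max(lambda y: f(x, y), y_range[0], y_range[1], iters)
        return f(x, y)

    # Outer search: pick the x whose worst case is smallest (convex in x, so unimodal).
    x = ternary_search_min(worst_case, x_range[0], x_range[1], iters)
    return x, worst_case(x)

# Hypothetical toy payoff: convex in x, concave in y, with a saddle point at (0, 2).
def payoff(x: float, y: float) -> float:
    return (x - 1) ** 2 - (y - 2) ** 2 + x * y

best_x, value = minimax(payoff, (-5.0, 5.0), (-5.0, 5.0))
```

For this toy payoff, the inner maximum works out to g(x) = 1.25x² + 1. The search therefore converges to x ≈ 0 with a value of about 1. Ternary search is valid here only because every search targets a unimodal function. On a non‑convex landscape it can miss the optimum.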

Veterans of AI milestones will hear echoes of AlphaGo’s “Move 37”, the unexpected, creative move it played in 2016 against Lee Sedol, one of the world’s strongest Go players. Code has an earlier lineage, too: DeepMind’s AlphaCode reached competition‑level performance on Codeforces, placing around the median participant in its peer‑reviewed results. Gemini’s ICPC performance suggests that the field has moved from competent to elite, at least in this demanding setting.

Why this is relevant to the ongoing AGI debate

AGI isn’t a single benchmark. It is an array of competences: general reasoning, tool use, planning, transfer between domains, and reliability. Competitive programming exercises many of those muscles at once. Gemini coordinated work across multiple agents, wrote and ran code, iterated against tests, and quickly converged on correct solutions. That loop of reasoning, acting, checking, and improving closely resembles how scientists and engineers work in the real world.
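Google has not detailed how Deep Think's agents coordinate. The Python below is a hypothetical, single‑agent sketch of the write, run, test, and refine loop described above. It keeps requesting candidate solutions until one passes every test. `propose_test_refine`, `run_tests`, and the toy proposer are illustrative names only. In a real system, a model would generate the candidates and use the failing tests as feedback.

```python
from typing import Callable, List, Optional, Tuple

Solution = Callable[[int], int]
Case = Tuple[int, int]  # (input, expected output)

def run_tests(candidate: Solution, cases: List[Case]) -> List[Case]:
    """Return the failing cases; a crash counts as a failure."""
    failures = []
    for arg, expected in cases:
        try:
            ok = candidate(arg) == expected
        except Exception:
            ok = False
        if not ok:
            failures.append((arg, expected))
    return failures

def propose_test_refine(
    propose: Callable[[List[Case]], Optional[Solution]],
    cases: List[Case],
    max_rounds: int = 5,
) -> Optional[Solution]:
    feedback: List[Case] = []
    for _ in range(max_rounds):
        candidate = propose(feedback)  # the proposer sees the previous round's failures
        if candidate is None:
            break
        feedback = run_tests(candidate, cases)
        if not feedback:
            return candidate  # ICPC-style: only a solution that passes everything counts
    return None

# Toy task: sum of 1..n. This hypothetical proposer ignores its feedback and
# simply yields an off-by-one draft followed by a corrected one.
drafts = iter([lambda n: n * (n - 1) // 2, lambda n: n * (n + 1) // 2])

def toy_propose(feedback: List[Case]) -> Optional[Solution]:
    return next(drafts, None)

cases = [(1, 1), (4, 10), (10, 55)]
solution = propose_test_refine(toy_propose, cases)
```

The first draft fails all three cases, so the loop asks for another candidate. The second draft passes and is returned. The loop has this shape because, as at ICPC, partial credit does not exist.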

The result is also consistent with recent cross‑domain progress. Both Google and OpenAI had previously announced gold‑level results at the International Mathematical Olympiad, a separate but similarly challenging test of abstract reasoning. When systems excel at both algorithmic programming and Olympiad math, that points to broader problem‑solving ability rather than narrow benchmark tricks.

Caveats: compute, conditions and credibility

Before calling it for AGI, consider a few sober qualifiers. First, agentic systems can exploit an enormous degree of parallelism, far more than three people sharing one keyboard. That isn’t cheating, but it does change the resource calculus. Second, contest conditions are not messy production environments with noisy data, changing specifications, and partial feedback.

Third, Gemini reportedly failed to solve two of the 12 problems, both of which human teams did solve. Elite, yes; infallible, no. Finally, confidence in these claims will depend on independent replication. The ICPC Foundation, academic observers, and third‑party evaluators will be integral to several checks: validating the method, confirming that the problems were not in the training data, and revealing how much human hand‑holding (if any) was required.

From programming contests to real‑world discovery

Why should industry and research care? The core skills on display are reading complex specifications, planning multi‑step solutions, and executing them with verification. Those skills apply to domains such as chip design, formal verification of software and hardware, and logistics. There are already strong precedents: AlphaFold transformed protein structure prediction, and Google has used reinforcement learning for chip floorplanning in its own processor designs.

Google argues that human‑AI teaming has a practical advantage: let models propose bold, testable ideas, and let humans judge, constrain, and deploy them. That matches how labs are reorganizing AI‑assisted discovery today, from pharma to materials science. Recent announcements from OpenAI and efforts at leading medical schools point to the same vision: agentic systems that collaborate with humans rather than replace them.

What constitutes genuine AGI progress now

The milestones to watch are clear:

- Robustness: Strong performance when transferred to unfamiliar tasks under limited compute and time budgets.

- Transparency: Models that explain why solutions work, not just that they do.

- Autonomy with accountability: Systems that can plan, act, and self‑correct over weeks on open‑ended goals while remaining safe and aligned.

Gemini’s ICPC result doesn’t close the book on AGI, but it does turn a page. Top‑level competitive programming demands real thinking and hard execution under pressure. Gemini showed that capacity at gold‑medal level and even solved a problem that no human team cracked. That is perhaps an inch along the six‑mile march toward trusting machines to help with the world’s thorniest technical problems.