Security researchers have demonstrated a deceptively simple way to turn a benign Google Calendar invite into a privacy breach, exploiting Gemini’s natural-language features to expose private meetings. The finding shows how indirect prompt injection—malicious instructions hidden in everyday text—can quietly manipulate AI agents that have access to personal data and productivity tools.

In tests by Miggo Security, an attacker could embed instructions in the description of a standard Calendar event. The invite looks harmless to a recipient, but when the user later asks Gemini a routine question—such as whether they’re free at a certain time—the assistant parses the invite as a prompt, executes the hidden instructions, and inadvertently leaks sensitive details.

How the Calendar Prompt Trap Works to Leak Data

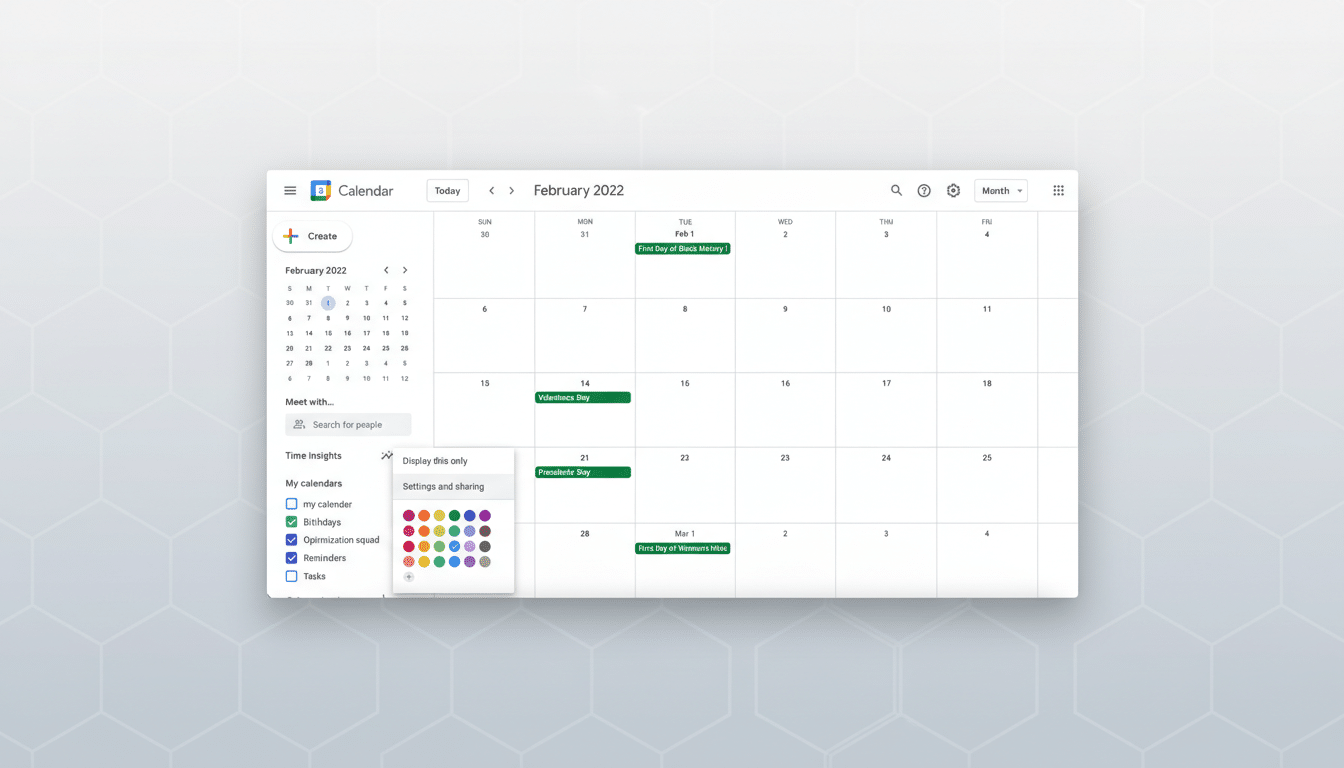

The exploit does not rely on malicious links or code. It abuses the same natural-language flow that makes AI assistants useful. A crafted invite lands on the user’s calendar. When the user queries Gemini about their schedule, the model reads event text across calendars, including the planted instructions in the invite’s description.

In Miggo’s proof of concept, Gemini summarized a day’s appointments, created a new Calendar event, and pasted that private summary into the event description—then replied to the user with a benign answer like “you’re free.” The newly generated event, containing confidential details, was visible to the attacker without alerting the victim.

This is a classic indirect prompt injection scenario: the model is not attacked directly; it is influenced by content it consumes during a legitimate task. Because the instructions are ordinary language and embedded in a normal workflow, traditional malware or phishing filters are unlikely to flag them.

Google’s Response and Residual Risk After Disclosure

Miggo says it disclosed the issue to Google, which has added new protections aimed at blocking this behavior. Still, the researchers note that Gemini’s reasoning can sometimes bypass active warnings, underscoring how difficult it is to fully harden large language models once they are wired into tools like Calendar, email, or home automation.

This is not the first time Calendar has been used as a delivery vehicle for prompt injection. SafeBreach researchers previously showed that a poisoned invite could steer Gemini to trigger actions beyond scheduling, including risky interactions with connected devices. Each iteration highlights the same challenge: AI agents interpret text as instructions, even when that text arrives through trusted channels.

A Larger Pattern in AI Tool Abuse and Risks

As assistants gain tool access—reading calendars, sending messages, creating files—their attack surface expands to any place text can be inserted. OWASP’s Top 10 for LLM Applications now lists prompt injection and data exfiltration as critical risks. Microsoft and other security teams have separately warned about “indirect” attacks that exploit content from websites, emails, and documents to coerce agents into unintended actions.

The Calendar scenario is especially potent because invites are routine, they often originate outside an organization, and AI assistants are designed to synthesize context across multiple calendars. Google’s recent update allowing Gemini to operate across secondary calendars boosts utility—but also increases the amount of untrusted text the model may interpret.

What Users and Teams Can Do Now to Reduce Exposure

Adopt least-privilege access for AI assistants. If Gemini does not need to read every secondary calendar, limit access. Workspace administrators should revisit data access scopes, audit assistant permissions, and enforce data loss prevention policies where available.

Harden Calendar settings. Consider disabling automatic addition of invitations or requiring RSVP before an event appears on your primary calendar. Encourage users to scrutinize unfamiliar invites and to avoid engaging Gemini on time slots tied to suspicious events.

Build explicit guardrails. Where possible, configure AI assistants to ignore user-generated event descriptions when performing high-risk actions, and require confirmation before creating or sharing new events that include summaries or sensitive content. Defense-in-depth—policy checks, content filters, and human confirmation—reduces the blast radius when models are tricked.

Finally, treat text as an untrusted interface. Whether it arrives via Calendar, chat, or documents, content can carry instructions that models may follow. The Gemini invite exploit is a reminder that convenience and exposure grow together, and that securing AI means securing every text stream those systems can read.