UAE-based AI company G42 and U.S. chipmaker Cerebras have announced plans to stand up 8 exaflops of AI compute in India, unveiling the project alongside the India AI Impact Summit in New Delhi. The system will be hosted domestically and built to comply with India’s data residency, security, and compliance requirements, targeting universities, government agencies, and small and mid-sized businesses that have struggled to access high-end AI infrastructure.

The move positions India to expand sovereign AI capabilities at a time when access to training-grade compute has become a bottleneck for research and enterprise innovation. With G42 operating locally and Cerebras supplying its wafer-scale systems, the rollout is designed to accelerate both the training and inference of large models tailored to Indian languages, sectors, and public services.

What 8 Exaflops of AI Compute Means for India

An 8-exaflop AI system represents a material jump in domestic capacity, roughly on the scale of many thousands of top-tier accelerators depending on precision and workload. For practical use, that translates into shorter training cycles for foundation models, lower latency for production inference, and the headroom to experiment with frontier-scale architectures rather than incrementally fine-tuning smaller models.

That matters for applied work in healthcare, agriculture, financial services, and public service delivery—areas where localized data and domain adaptation are often more valuable than generic benchmarks. India’s multilingual environment amplifies the need: models built to understand code-mixed Hindi-English and other regional languages have a direct impact on citizen-facing services and SME productivity.

The collaboration also ties into ongoing academic efforts. Abu Dhabi’s Mohamed bin Zayed University of Artificial Intelligence and India’s Centre for Development of Advanced Computing are involved, building on prior G42-backed research such as the Nanda 87B bilingual model introduced last year. With more compute onshore, similar projects can iterate faster while keeping sensitive datasets within national boundaries.

Inside the G42 and Cerebras AI Compute Stack

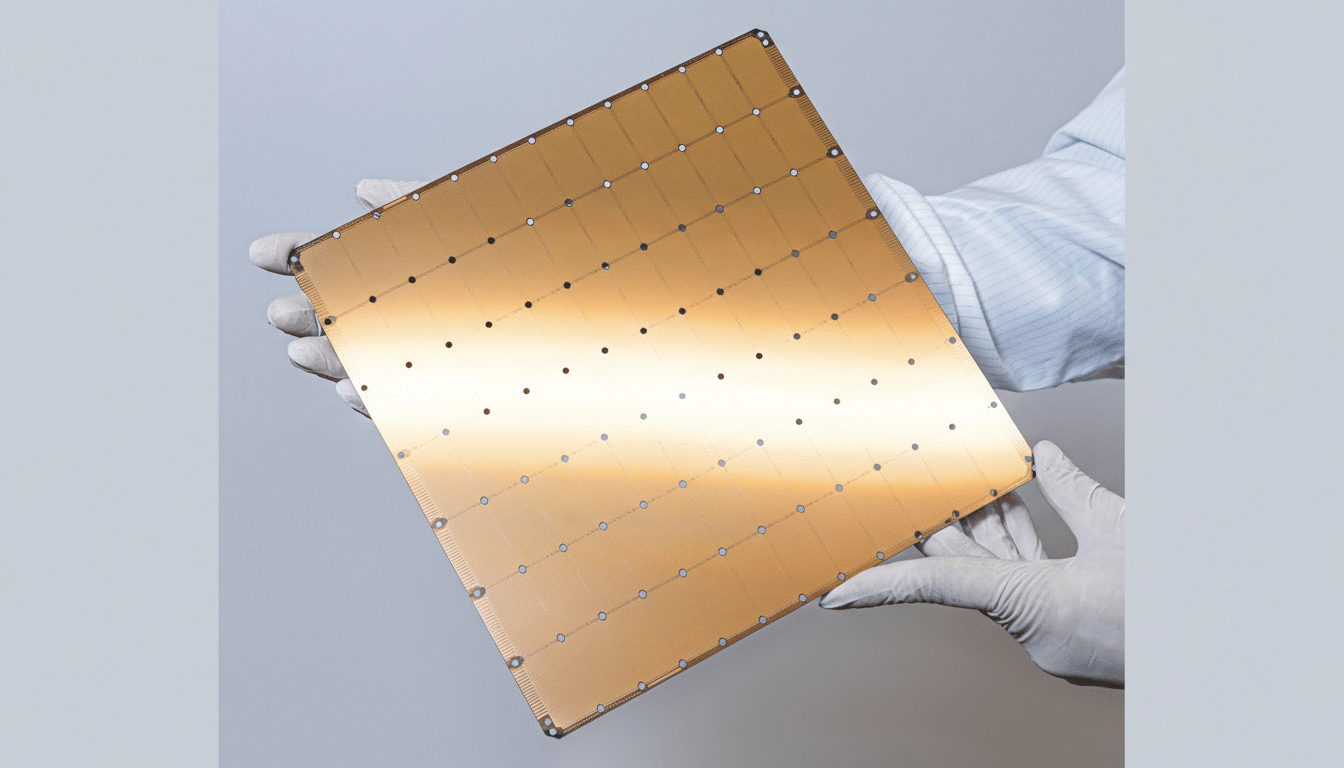

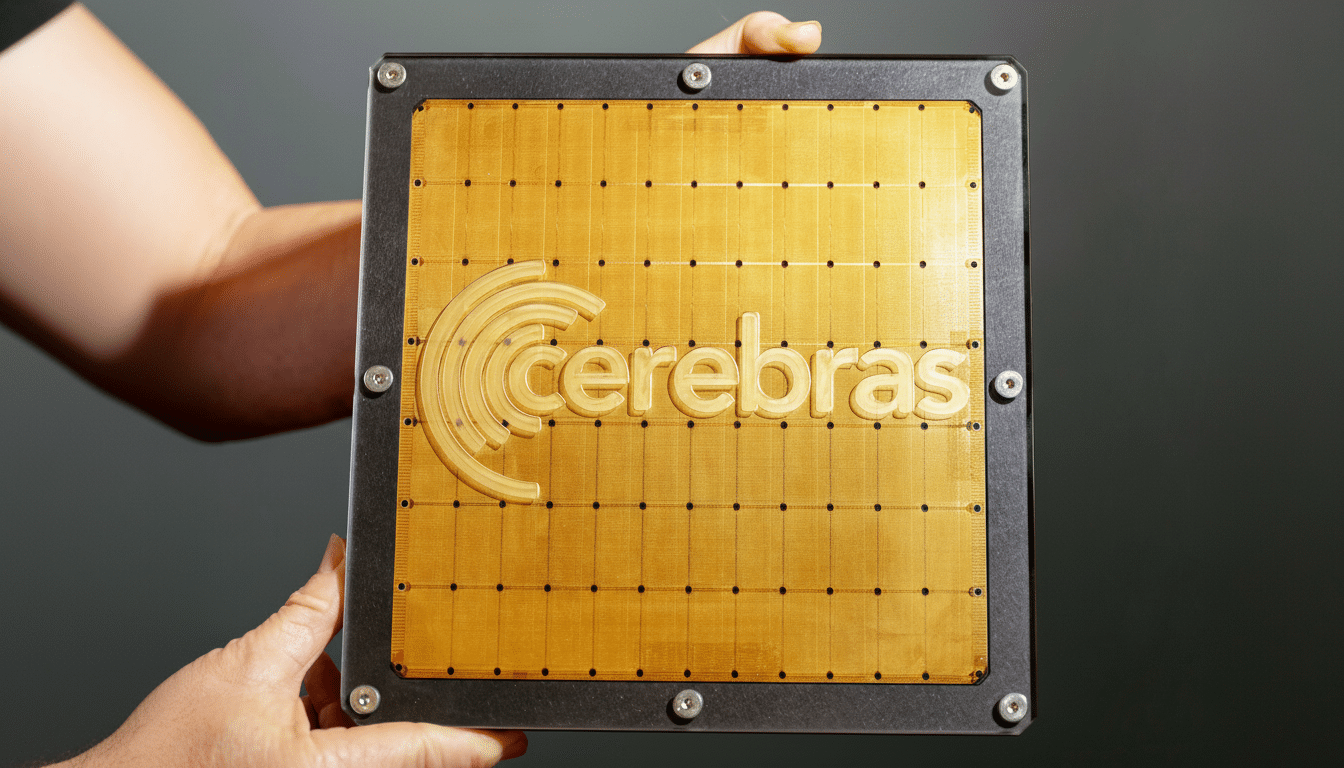

Cerebras brings a distinct approach to scale. Rather than clustering vast numbers of conventional GPUs, its systems center on wafer-scale processors designed to keep training workloads on a single, massive chip and to simplify scaling across nodes. The architecture reduces some of the communication overheads that hamper very large models and can make data-parallel and pipeline-parallel training more efficient.

G42 and Cerebras have already demonstrated multi-exaflop deployments through the Condor Galaxy network, giving both companies operational experience in scheduling large jobs, managing model sharding, and supporting familiar frameworks such as PyTorch. For Indian users, this should mean a more turnkey path to training state-of-the-art language and vision models without reengineering pipelines from scratch.

Another practical benefit is accessibility. Programs that allocate compute time to universities and startups—paired with curated datasets and reference model recipes—can flatten the on-ramp for teams that have ideas but lack the capital to secure capacity on global clouds. Expect this system to be packaged with service layers, MLOps tooling, and support for fine-tuning and retrieval-augmented generation to drive immediate uptake.

Sovereign AI and India’s Evolving Data Policy

Keeping the system and data in India aligns with the country’s evolving privacy and localization posture under the Digital Personal Data Protection Act and sectoral guidelines. For public-sector AI—health records, land registries, citizen services—confidence in where data lives and who touches it is often the gating factor for adoption, not just raw compute.

C-DAC’s involvement signals potential linkages with the National Supercomputing Mission and the PARAM family that already serve institutes nationwide. A dedicated AI cluster that interplays with academic networks could become the default platform for national challenge datasets, benchmark suites for Indian languages, and shared model checkpoints released under permissive licenses.

A Broader Race to Build AI Compute Capacity in India

The announcement lands amid an unprecedented buildout of digital infrastructure. Indian conglomerates have outlined multi-year plans: Adani has mapped up to $100 billion for as much as 5 gigawatts of data center capacity by 2035, while Reliance has flagged $110 billion over the next seven years for gigawatt-scale facilities. International players are moving too. OpenAI and Tata Group are coordinating 100 megawatts of AI compute with a roadmap to 1 gigawatt, and India’s technology leadership has set a target of attracting more than $200 billion in infrastructure investment through incentives, public capital, and policy support.

U.S. hyperscalers including Amazon, Google, and Microsoft have already committed around $70 billion toward expanding AI and cloud footprints in India. The G42–Cerebras system slots into this landscape as specialized capability focused on training-grade performance and sovereign control rather than general-purpose cloud elasticity.

What to Watch Next on Deployment, Power, and Access

Key variables now are deployment timelines, power sourcing, and access policies. AI clusters at this scale require steady multi-megawatt power and efficient cooling, pushing operators toward renewable PPAs and advanced thermal designs. On the user side, transparent allocation frameworks and public calls for proposals can ensure that researchers, startups, and state programs all see meaningful time on the system.

If those pieces come together, 8 exaflops onshore could shift the center of gravity for Indian AI from importing models to building them—faster, cheaper, and closer to the country’s real-world needs.