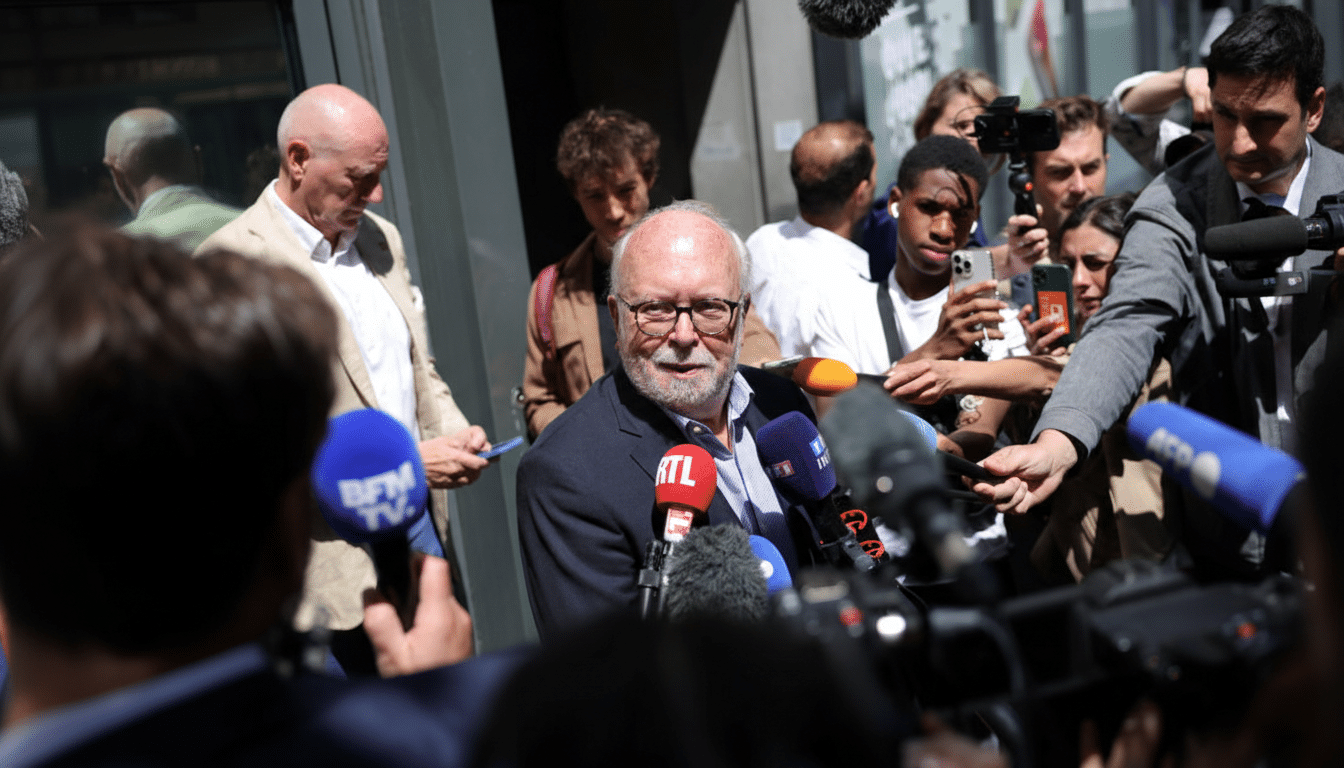

French prosecutors have conducted a court-authorized raid at X’s Paris offices as part of a sweeping probe into the circulation of child sexual abuse material and sexually explicit deepfakes on the platform, according to the Paris prosecutor’s office and reporting by international wire services.

The search was carried out by the prosecutor’s cybercrime unit with assistance from the national cyber gendarmerie (often referred to as CyberGEND) and Europol. The prosecutor’s office publicly confirmed the operation and separately announced it would discontinue its presence on X, directing followers to alternative channels.

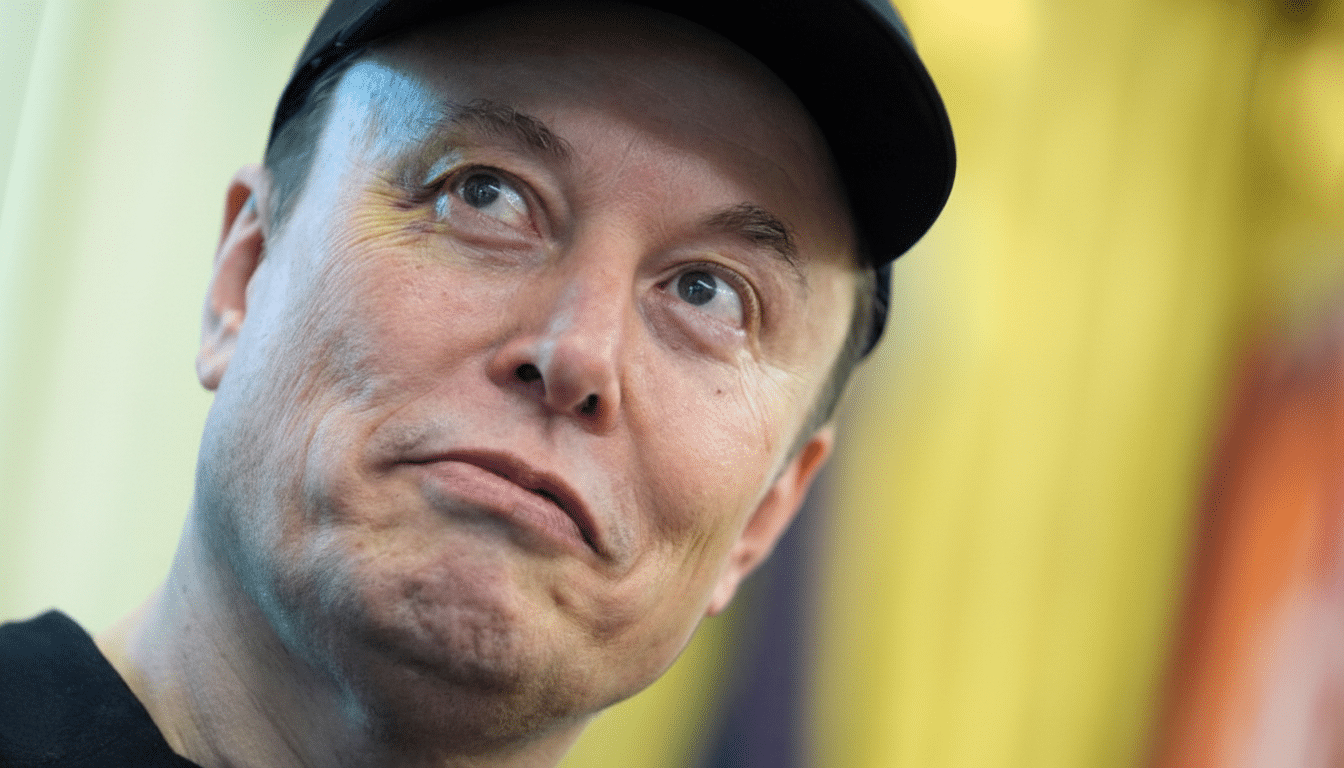

The preliminary case, as described by prosecutors, examines potential complicity by the platform in the possession and distribution of sexual images of minors, the creation and spread of sexually explicit deepfakes, denial of crimes against humanity, and manipulation of an automated data processing system by an organized group. Elon Musk and former CEO Linda Yaccarino have been summoned for voluntary interviews, authorities said.

What Investigators Are Probing Inside X’s Systems

At the core of the inquiry is whether X’s moderation, detection, and reporting systems meet legal duties under French and EU law. Investigators are expected to test whether industry-standard tools, such as the hash-matching technologies PhotoDNA and CSAI Match, were properly deployed, whether reports were promptly escalated to authorities, and whether staffing or policy changes undermined enforcement.
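For readers unfamiliar with hash-matching, the basic idea can be sketched in a few lines: an upload is reduced to a fingerprint and checked against a list of fingerprints of previously identified files. The sketch below is a simplification under stated assumptions. It uses an exact SHA-256 digest, which only catches byte-identical copies, whereas PhotoDNA and CSAI Match are proprietary systems designed to also match altered copies. The function names are hypothetical and do not reflect X's systems or either tool's API.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return an exact SHA-256 fingerprint of a file's bytes.

    Assumption: a cryptographic hash stands in for the robust,
    proprietary hashes used by real detection tools.
    """
    return hashlib.sha256(data).hexdigest()


def build_blocklist(known_files):
    """Build a set of fingerprints from previously identified files."""
    return {fingerprint(data) for data in known_files}


def is_known(upload: bytes, blocklist) -> bool:
    """Check whether an upload matches any fingerprint on the blocklist."""
    return fingerprint(upload) in blocklist
```

A match would typically trigger blocking and a report to authorities; changing a single byte defeats this exact-match version, which is why production tools rely on hashes that tolerate resizing and re-encoding.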

France holds hosting providers to account when they fail to act after being notified of illegal content. Prosecutors are likely to compare internal logs against notifications from the government’s Pharos portal and other hotlines to see how quickly content was removed, accounts were suspended, and evidence preserved for law enforcement.
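The comparison of notifications against internal logs amounts to a simple join on content identifiers. The following is a minimal sketch of that kind of check, not a description of the investigators' actual method. The data layout, the function names, and the 24-hour threshold used in the usage note are illustrative assumptions and do not represent a legal deadline.

```python
from datetime import datetime, timedelta


def takedown_delays(notifications, removals):
    """Compute how long each notified item stayed online.

    notifications: dict mapping content ID -> datetime of the notice
    removals:      dict mapping content ID -> datetime of removal
    Returns a dict mapping content ID -> timedelta, or None when the
    internal log shows no removal for that item.
    """
    delays = {}
    for content_id, notified_at in notifications.items():
        removed_at = removals.get(content_id)
        delays[content_id] = (
            removed_at - notified_at if removed_at is not None else None
        )
    return delays


def overdue(delays, limit):
    """List items never removed or removed later than the given limit."""
    return sorted(
        content_id
        for content_id, delay in delays.items()
        if delay is None or delay > limit
    )
```

Calling `overdue(takedown_delays(notices, log), timedelta(hours=24))` would list every notified item that was still online a day after the notice, along with items that never appear in the removal log.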

Legal Stakes in France and the EU for Platforms

French criminal law prohibits the production, possession, and distribution of child sexual abuse material and makes the denial of crimes against humanity a specific offense. Corporate criminal liability can include fines, compliance orders, and additional sanctions. The “manipulation of an automated data processing system” language points to potential offenses involving misuse of algorithms or automated tools.

Layered on top is the EU’s Digital Services Act, which compels very large online platforms to assess systemic risks, remove illegal content swiftly, maintain a single point of contact for authorities, and provide data access to regulators and researchers. Non-compliance can trigger fines of up to 6% of global annual turnover and, in extreme cases, temporary service restrictions. X has already faced separate EU scrutiny over its moderation of illegal content and its transparency obligations.

The Deepfake and CSAM Challenge Facing X Today

The scale of the problem is daunting. The National Center for Missing and Exploited Children reports tens of millions of CyberTipline submissions annually, and the INHOPE network of hotlines processes thousands of URLs and files every day. AI systems now enable rapid fabrication of lifelike images, supercharging abuse while lowering the barrier to production.

Independent researchers, including Sensity, have found that well over 90% of deepfakes online are pornographic, disproportionately targeting women. Law enforcement increasingly treats sexually explicit synthetic images of minors as illegal, even when fabricated, because they perpetuate harm, normalize exploitation, and fuel demand for real-world abuse.

X’s tight integration with xAI’s Grok has drawn scrutiny after users showcased mass generation of sexualized images involving both adults and children. The company has said it implemented additional safeguards—such as limiting image generation to paying users and adding stricter filters—moves that several regulators and child-safety groups have criticized as insufficient.

What a Raid Signals for X’s Compliance and Risk

Raids of corporate premises typically authorize forensic imaging of devices, seizure of moderation and escalation records, and access to internal communications under judicial seal. Investigators will look for mismatches between public statements and internal risk assessments, as well as any shelved proposals to bolster trust and safety.

The prosecutor’s office has framed the process as a constructive path to bring the platform into compliance with French law. X, for its part, has previously described the probe as politically motivated and questioned the extent of its cooperation—postures that could factor into assessments of good-faith compliance under French statutes and the DSA.

What to Watch Next as French and EU Probes Advance

Next steps include interviews with senior executives, preservation orders for data, and technical audits of detection pipelines. Authorities could escalate to a full judicial inquiry, impose compliance directives, or seek penalties if systemic failures are established. Europol’s involvement suggests cross-border data and enforcement coordination will remain central.

For French users and advertisers, the practical outcome will be measured in faster takedowns, clearer reporting tools, and verifiable accountability. For the broader tech sector, this case is shaping up as a test of how national prosecutors and EU regulators intend to tackle AI-accelerated harms—and whether platforms can meet rising safety baselines without compromising openness or speech.