DoorDash says it has removed a courier from its platform after a viral post accused the driver of using an AI-generated photo to fake a delivery.

The incident, first described by Austin resident Byrne Hobart and earlier reported by Nexstar, prompted a quick review by the company at a time when questions are mounting about how generative tools could be used to game proof-of-delivery systems.

According to Hobart, a driver quickly marked his order as delivered and uploaded a photo of a DoorDash bag neatly placed at his front door, an image he believes was faked.

He later added that another Austin resident had reported a similar experience involving a driver with the same on-screen name. DoorDash said it had banned the driver and was investigating the claim.

What Sparked the Ban After Alleged AI Delivery Fraud

The episode hinged on a single image, the linchpin of “leave at door” deliveries. Hobart speculated that the scammer had obtained an old photo of his front door through a feature that surfaces photos from past deliveries, then used a generative model to create a new “proof,” complete with a branded bag. While the details are unverified, the scenario is consistent with common attacks on mobile platforms, such as taking over accounts from jailbroken devices and modifying apps to bypass routine checks.

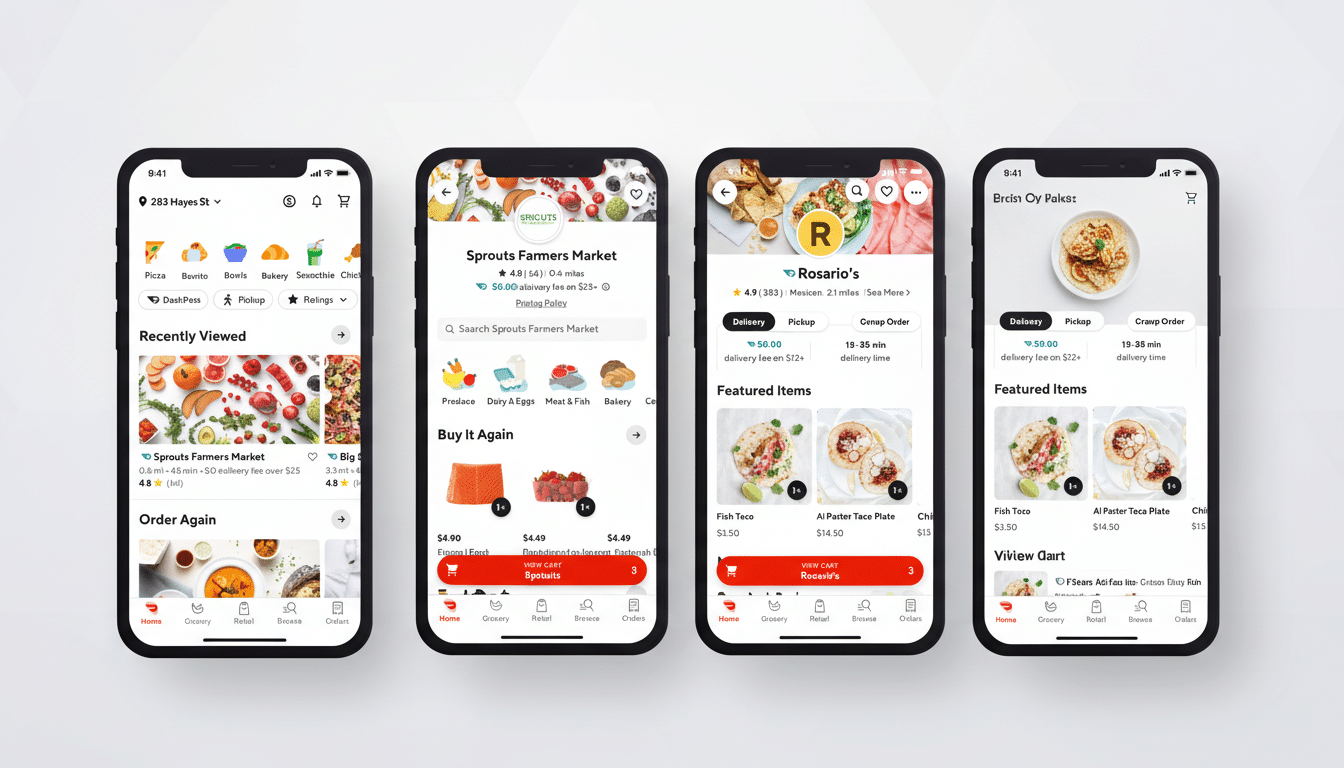

Contactless drop-offs and photo confirmations became widespread during the pandemic and remain popular. But that convenience created a new verification surface: it depends on images, timestamps and GPS data, all of which can be faked or altered when bad actors hijack devices or accounts.

How AI Could Counterfeit Proof of Delivery

Today’s image generators can produce photorealistic scenes that match a specific setting within seconds. Given a reference image of a door, a model can add a package, bag or branded sticker with realistic shadows and reflections. Combined with some metadata tampering, such as editing EXIF fields or masking telltale GPS drift, a fake proof of delivery can look convincing at first glance.

Bad actors generally need three things: an image of the customer’s doorway, a way to upload fabricated media into the app, and location data good enough to pass basic checks. The doorway image could come from a hacked driver account, shared credentials or a feature that exposes old delivery photos. A rooted or jailbroken phone can let an attacker alter the app’s behavior and open a path for uploading fake media. Location trails can be faked with spoofing apps that simulate plausible movement, including a brief stop at the drop-off point.

Platform Safeguards and Their Limits Against AI Fraud

Delivery platforms layer several defenses: GPS geofencing, server-side telemetry that flags trips at impossible speeds, periodic identity checks for drivers, and image forensics that look for compression artifacts or editing seams. Some also run risk models that compare a courier’s behavior with historical norms to flag outliers.
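To make the geofencing and impossible-speed checks concrete, here is a minimal Python sketch. It assumes a hypothetical telemetry format (a `Ping` with latitude, longitude and a Unix timestamp), a 75-meter drop-off radius and a 45 m/s speed ceiling; these values are illustrative assumptions, not any platform's actual configuration. Distances use the standard haversine great-circle formula.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class Ping:
    """Hypothetical courier location sample (not a real platform schema)."""
    lat: float  # degrees
    lon: float  # degrees
    t: float    # Unix timestamp in seconds


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    # Clamp to guard against tiny floating-point overshoot above 1.0.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(h)))


def inside_geofence(ping: Ping, dest_lat: float, dest_lon: float, radius_m: float = 75.0) -> bool:
    """True if the ping falls within radius_m of the delivery address."""
    return haversine_m(ping.lat, ping.lon, dest_lat, dest_lon) <= radius_m


def impossible_speed_segments(pings: list[Ping], max_speed_mps: float = 45.0) -> list[int]:
    """Indices i where travel from pings[i] to pings[i + 1] implies a speed above max_speed_mps."""
    flagged = []
    for i, (a, b) in enumerate(zip(pings, pings[1:])):
        dist = haversine_m(a.lat, a.lon, b.lat, b.lon)
        dt = b.t - a.t
        # Any movement with a non-increasing timestamp is itself suspicious.
        if dt <= 0:
            if dist > 0:
                flagged.append(i)
        elif dist / dt > max_speed_mps:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    door = (30.2672, -97.7431)  # arbitrary example coordinates
    trail = [Ping(30.2000, -97.7431, 0.0), Ping(30.2672, -97.7431, 60.0)]
    print(inside_geofence(trail[-1], *door))  # final ping sits on the door
    print(impossible_speed_segments(trail))   # roughly 7.5 km covered in 60 s
```

A production check would also need to tolerate ordinary GPS noise, so thresholds like these would likely be tuned against historical trips rather than fixed by hand.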

Even so, synthetic media is a moving target. Industry efforts such as Adobe’s Content Credentials and the underlying C2PA standard aim to embed tamper-evident provenance data, while NIST and university labs continue to publish benchmarks for deepfake detection. But detection is probabilistic, and adversaries iterate rapidly. That is why media checks are increasingly paired with behavioral signals, such as phone-sensor data, deviations from the expected route or implausibly rapid task completion, to catch scammers who could fool a check that relies on pixels alone.
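The idea of pairing media checks with behavioral signals can be sketched as a simple logistic risk score in Python. Everything here is hypothetical: the signal names, weights, bias and 0.5 review threshold are illustrative assumptions, not DoorDash's or any platform's real model, which would be trained on labeled fraud cases.

```python
import math
from dataclasses import dataclass


@dataclass
class DeliverySignals:
    """Hypothetical per-delivery signals; names and units are illustrative."""
    media_fake_prob: float     # 0..1 score from some image-forensics model
    route_deviation_m: float   # how far the trail strays from the expected route
    dwell_seconds: float       # time spent near the drop-off point
    device_rooted: bool        # result of a device-integrity check


# Illustrative, untuned parameters; a real model would learn these from data.
BIAS = -4.0
W_MEDIA = 3.0
W_ROUTE = 1.5
W_SHORT_DWELL = 1.5
W_ROOTED = 2.0


def risk_score(s: DeliverySignals) -> float:
    """Logistic combination of media and behavioral signals, returning a value in (0, 1)."""
    z = BIAS
    z += W_MEDIA * s.media_fake_prob
    # Cap the route term so a single extreme reading cannot dominate the score.
    z += W_ROUTE * min(s.route_deviation_m / 500.0, 2.0)
    z += W_SHORT_DWELL if s.dwell_seconds < 10.0 else 0.0
    z += W_ROOTED if s.device_rooted else 0.0
    return 1.0 / (1.0 + math.exp(-z))


def needs_review(s: DeliverySignals, threshold: float = 0.5) -> bool:
    """Send the delivery to manual review when the score reaches the threshold."""
    return risk_score(s) >= threshold


if __name__ == "__main__":
    clean = DeliverySignals(0.1, 20.0, 60.0, False)
    # The photo fools the image detector, but the behavior does not add up.
    fooled = DeliverySignals(0.2, 800.0, 3.0, True)
    for name, s in (("clean", clean), ("fooled", fooled)):
        print(name, round(risk_score(s), 3), needs_review(s))
```

With these illustrative weights, a photo that slips past the image detector can still push a delivery into review when route deviation, a very short dwell time and a rooted device all point the same way, which is the rationale for not relying on pixels alone.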

The larger context is this: AI-enabled fraud is rising across sectors. The Federal Trade Commission last month reported that consumers suffered record losses to scams, highlighting how cheap, widely available generative tools can scale up fraud. In gig work, that can include fake photos, synthetic identities or bot-driven order sniping, all of which erode trust between customers and businesses as well as among delivery drivers.

What Customers and Drivers Can Do for Now

Customers should use in-app support to report any suspicious proof of delivery, particularly if the photo doesn’t match their property. Include specifics such as which landmarks are visible, odd camera angles or inconsistent lighting. Most platforms have a refund or redelivery process that can be triggered when an order is misrepresented or missing.

Legitimate drivers can protect themselves by avoiding rooted devices, enabling two-factor authentication and keeping their apps up to date. If an account takeover is suspected, Akamai advises contacting support, resetting credentials and reviewing recent account activity. Clear photo practices, such as shooting from multiple angles, keeping identifying features in frame and showing the unit number, also reduce disputes.

Why This Case Matters for Delivery Platforms and Users

The alleged AI delivery scam is a small story with large implications. Platforms handle millions of orders at peak times, and their economics depend on near-perfect completion rates and very little dispute friction. Cracking down on counterfeit proof of delivery could force platforms to tighten their controls, which might slow things down for honest couriers and add verification steps for real customers.

The speed of DoorDash’s ban suggests that platforms will take a zero-tolerance approach to AI-assisted deception. The next steps are likely to be technical: stronger provenance for photos, tighter controls on who can see past delivery photos, and broader risk scoring that combines location, device integrity and media analysis. The message for consumers is simple: check the receipt, check the photo and report anything that doesn’t look right.