Disney wasted no time defending its characters in the era of generative AI, sending Google a cease-and-desist letter alleging that the company is exploiting Disney intellectual property across its AI products without permission. The timing was pointed: the letter arrived on the same day Disney announced its deal with OpenAI, signaling a strategic shift toward licensed, character-based AI experiences while putting rival platforms on notice.

Disney Accuses Google of AI Theft and IP Violations

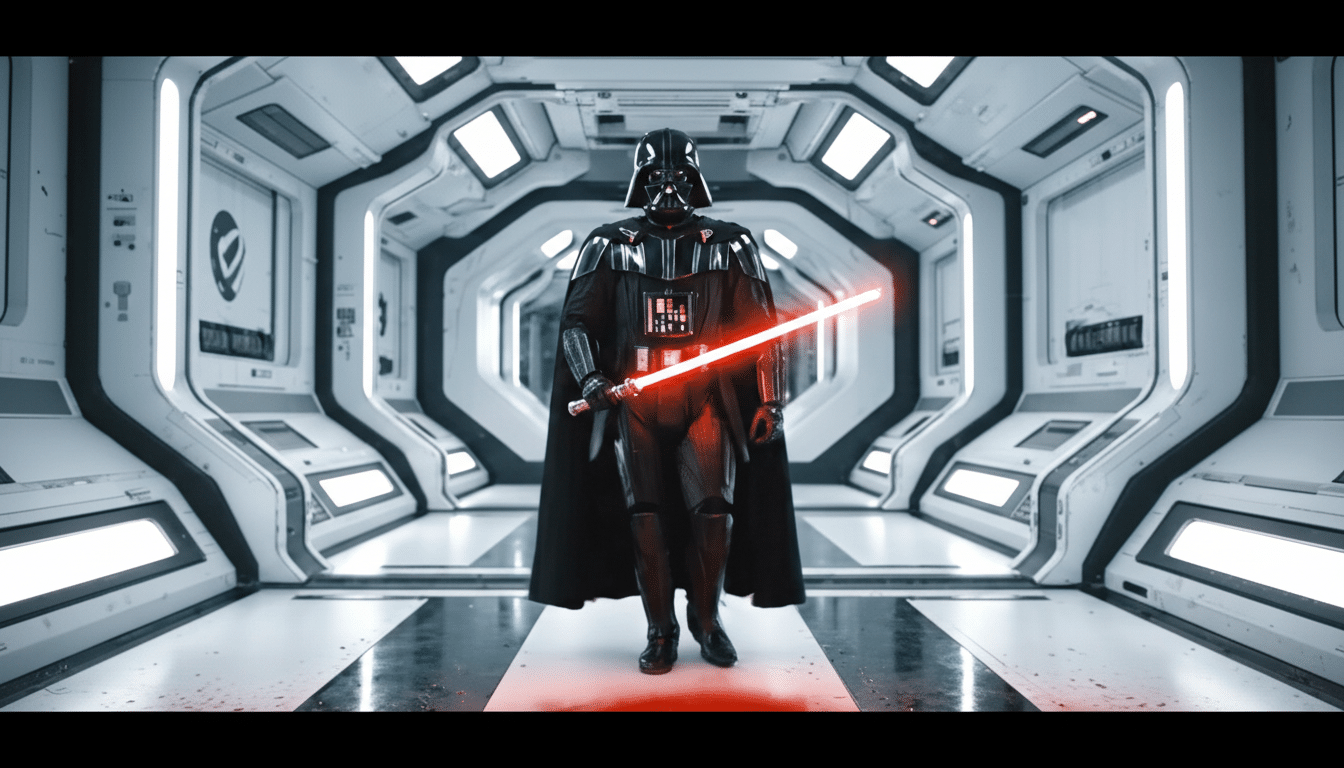

As Variety reports, Disney claims that Google’s AI services have been reproducing and distributing derivative versions of protected characters on a “massive scale.” The letter reportedly cites examples ranging from Darth Vader and Deadpool to Elsa from Frozen, along with characters from The Lion King and Moana, including images rendered in the style of action figures and collectible figurines.

Disney’s lawyers are asking Google to stop copying, publicly displaying, performing, distributing, and making derivative works of Disney-owned characters in any output across its AI stack, including YouTube, YouTube Shorts, and the YouTube mobile app. The company also seeks enforceable protections against future violations, a not-so-veiled message that ad hoc takedowns won’t cut it.

OpenAI Partnership Raises Stakes for Licensed Character AI

The Disney-OpenAI arrangement makes OpenAI a sanctioned distribution channel for interactive content based on Disney material, drawing a bright line between licensed and unlicensed use. That framing could complicate other AI platforms’ arguments that their use is covered by fair use, especially if Disney can show that unlicensed outputs cause commercial harm or displace its licensed offering.

OpenAI has already been criticized for letting copyrighted characters appear in user outputs, particularly after it introduced its Sora video model. The company has since prioritized opt-out options and brand-level controls. Disney’s move appears to be a further step: not just opt-outs, but proactive filters and contractual guardrails modeled after studio-grade licensing standards.

Why YouTube Is in the Firing Line of Disney’s AI Dispute

YouTube’s size and creator ecosystem make it a natural target. The service has more than 2 billion logged-in monthly users, and Shorts has arguably made it one of the world’s largest distribution channels for AI-generated media. Even when the models are hosted elsewhere, their outputs can surface on YouTube in tutorials, mashups, and fan-made shorts, blurring the line between hosting content and creating it.

Google has introduced measures such as SynthID watermarking, along with disclosure requirements for altered and synthetic content, and YouTube lets rights holders request the removal of misleading or infringing AI videos. Disney, however, argues that current tooling may not be adequate, suggesting that platform-level detection and filtering will need to move beyond the “thumbnail or watermark or caption” level.

A Ratcheting Up of IP Enforcement in Generative AI

Disney is no stranger to this fight. Earlier this year, it sued the image generator Midjourney, calling it a “bottomless pit of plagiarism.” Other rights holders have pursued similar actions: Getty Images sued Stability AI over training data, and major publishers and authors have sued AI companies over text datasets. The U.S. Copyright Office has said it will not register works generated by AI without a human author, but the underlying question of whether training on copyrighted material constitutes fair use remains unresolved.

For Google, the legal framing matters. If Disney’s complaint centers on outputs that come too close to protected characters and branded designs, Google’s exposure extends beyond its training practices: it could face claims of contributory infringement if its platforms allegedly facilitate infringing works. That is a different, and often more pressing, platform liability issue than the longer-running debate over training data.

What Happens Next for Google and Disney in AI

Expect negotiations over technical and policy fixes, such as tighter, more granular guardrails in image and video tools, or character-level blocks. Google could extend its content provenance efforts and bring character-specific filters to Gemini, as well as to creation flows that touch YouTube.

Disney’s goal is to channel demand for character-based creation through licensed partners, now including OpenAI, while keeping firm control of its brands. If talks break down, Disney could seek court-ordered injunctions forcing platforms to curtail such outputs. Either outcome could ripple across the industry, where other studios and rights holders are looking for a workable model that pairs commercial licensing with enforceable platform protections.

For AI platforms, the message is clear: removing infringing content on request is no longer enough. Rights owners are demanding structural controls that prevent unauthorized character use in the first place, and they are prepared to use both partnerships and legal pressure to obtain them.