Discord is rolling out a sweeping safety change that will lock adult content and several powerful communication features behind age verification. The company says all accounts will default to teen safety settings, meaning access to sensitive media and age-gated servers will remain restricted unless a user proves they are an adult or Discord independently determines with high confidence that the account belongs to one.

What Changes Users Will See Under Discord’s New Policy

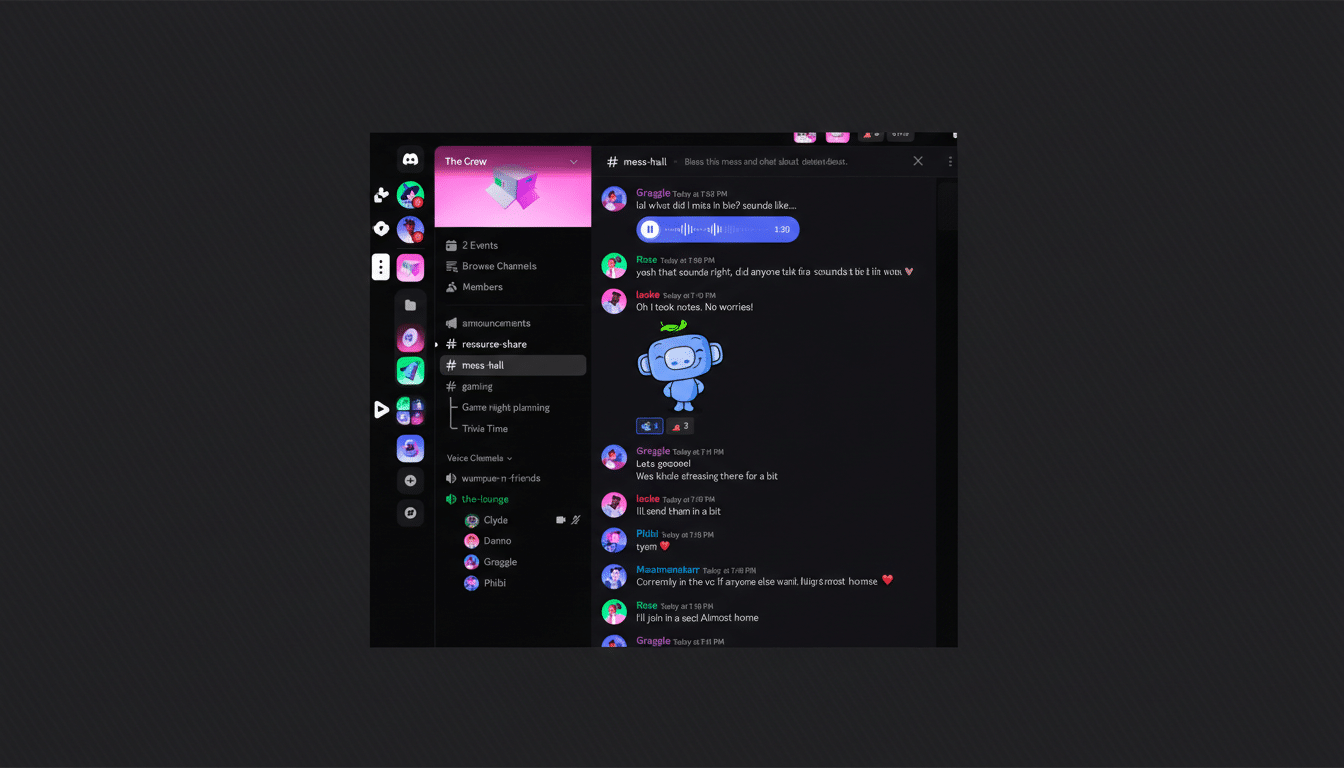

Under the new policy, only verified adults will be able to unblur sensitive images and videos, join or view age-gated channels and servers, receive direct message requests in their main inbox, and speak on live “Stage” events. Everyone else will see a stricter teen experience by default, limiting who can contact them and what content they can view until their age is confirmed.

The shift is significant for a service that counts more than 200 million monthly active users worldwide and serves as a backbone for gaming communities, fandoms, classrooms, and creator hubs. It effectively flips Discord’s safety model: rather than asking teens to opt in to protection, it requires adults to opt in to adult privileges.

How Discord’s New Age Verification System Will Work

Discord will rely on k-ID, a third-party age and identity verification provider, to confirm adulthood through government ID checks or facial age estimation. In tandem, Discord is deploying an inference system that uses hundreds of signals—such as account history, behavior patterns, and network interactions—to estimate whether an account likely belongs to an adult. Accounts flagged as likely adult may be granted broader access without manual uploads; others will be prompted to verify.
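To make the default-to-teen logic concrete, here is a minimal Python sketch of how such a hybrid gate could decide access. It is illustrative only. The feature identifiers, the `Account` fields, and the 0.95 confidence cutoff are hypothetical assumptions, not Discord's or k-ID's actual API or parameters. The sketch shows only the decision order the article describes: an account is treated as a teen unless it has passed a verification check or an inference score clears a high-confidence bar, and only then are the four adult-only privileges unlocked.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class AgeStatus(Enum):
    TEEN_DEFAULT = auto()  # every account starts here
    ADULT = auto()         # granted only by verification or high-confidence inference


# Hypothetical identifiers for the four adult-only privileges the article lists.
ADULT_ONLY_FEATURES = frozenset({
    "unblur_sensitive_media",
    "age_gated_servers",
    "dm_requests_in_main_inbox",
    "speak_on_stage",
})

# Hypothetical cutoff: Discord has not published the confidence level it requires.
INFERENCE_THRESHOLD = 0.95


@dataclass
class Account:
    # True once an ID check or facial age estimation has confirmed adulthood.
    verified_adult: bool = False
    # Hypothetical 0.0-1.0 score from a signal-based model; None if not yet scored.
    inferred_adult_score: Optional[float] = None


def age_status(account: Account, threshold: float = INFERENCE_THRESHOLD) -> AgeStatus:
    """Default to teen; upgrade only on explicit verification or a confident inference."""
    if account.verified_adult:
        return AgeStatus.ADULT
    score = account.inferred_adult_score
    if score is not None and score >= threshold:
        return AgeStatus.ADULT
    return AgeStatus.TEEN_DEFAULT


def can_use(account: Account, feature: str, threshold: float = INFERENCE_THRESHOLD) -> bool:
    """Non-gated features are open to everyone; gated ones require ADULT status."""
    if feature not in ADULT_ONLY_FEATURES:
        return True
    return age_status(account, threshold) is AgeStatus.ADULT


def needs_verification_prompt(account: Account, feature: str) -> bool:
    """An account that is blocked from a gated feature is asked to verify."""
    return not can_use(account, feature)


if __name__ == "__main__":
    newcomer = Account()
    print(can_use(newcomer, "speak_on_stage"))                            # False
    print(can_use(Account(inferred_adult_score=0.98), "speak_on_stage"))  # True
    print(needs_verification_prompt(newcomer, "age_gated_servers"))       # True
```

The single `threshold` parameter captures the accuracy trade-off: raising it means fewer teens are misclassified as adults, but more genuine adults get prompted to upload an ID or take a facial check; lowering it does the reverse.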

This hybrid approach mirrors a broader industry push toward “age assurance” rather than a single method of proof. It also acknowledges the trade-offs at play: document checks are robust but intrusive, while inference models are low-friction but carry risks of false positives and negatives.

Why Discord Is Tightening Controls For Teen Safety

Pressure has been mounting on major platforms to harden protections for minors. A recent lawsuit involving Discord and Roblox alleged that the services enabled grooming that led to severe offline harm, amplifying public scrutiny of how communities and private messaging are moderated. Regulators are also turning up the heat: the UK's Online Safety Act and the EU's Digital Services Act both emphasize stronger safeguards for children, and Australia's eSafety Commissioner has pressed for robust age assurance on high‑risk services.

The scale of online child safety challenges is sobering. The National Center for Missing & Exploited Children reported 36.2 million CyberTipline reports in its most recent annual tally, a reminder that detection and prevention across platforms continue to lag behind offender behavior. Discord’s default-to-teen model is an attempt to narrow those exposure windows without freezing out legitimate adult use.

Privacy and Accuracy Questions Around Discord Age Checks

Age checks inevitably raise privacy concerns, particularly around how ID documents are handled. Discord previously disclosed that a breach at a third-party support vendor affected 70,000 government IDs submitted by users. The company says k-ID will delete documents quickly to minimize retention, and that facial age estimation offers a way to verify age without storing sensitive identity data.

Another open question is precision. Inference systems can be powerful but imperfect, and teens are notoriously inventive at bypassing restrictions. Discord says it aims to reduce false positives while keeping friction low for legitimate adult users, but it has not publicly quantified expected accuracy, leaving moderators and communities to prepare for edge cases.

What Parents And Moderators Should Expect

For families, the change pairs with Discord’s existing parental oversight tools, which can surface a teen’s top messaging contacts, most active servers, cumulative call minutes, and purchase history. Combined with tighter defaults, that gives caretakers more context while cutting down the avenues for unsolicited contact.

Server owners should anticipate operational updates: auditing channels labeled for mature content, reviewing onboarding flows, and clarifying house rules around verification. Communities that host live events may also need backup plans, since unverified members will no longer be able to speak on Stage and unverified organizers will no longer receive DM requests in their main inbox.

The Bigger Picture for Discord’s Age Verification Push

Discord’s plan to require age verification for adult content moves the platform closer to standards emerging across the industry: put stronger gates in front of sensitive spaces and grant wider privileges only after age assurance. The balance will be delicate—safety advocates will watch for measurable reductions in grooming risks, privacy experts will scrutinize data handling, and users will judge whether the new system is fair and usable.

If Discord can thread that needle, it could set a template for other community-first platforms wrestling with the same tension: keeping vibrant adult conversations alive while recognizing that the internet's default user may be a teenager who needs the platform to say "not yet."