DeepSeek has revealed the trick behind its pioneering reasoning model, R1: how it was trained so inexpensively.

The Chinese AI lab said in a new technical paper that the entire R1 training run cost just $249,000, an eye-popping figure in an industry where training state-of-the-art models can require budgets in the tens or even hundreds of millions of dollars.

The revelation puts new pressure on the conventional wisdom that frontier performance requires frontier-level spending. It is likely to revive debates among investors and engineers about where compute scale stops mattering and where the real moats lie: compute scale, data, or training ingenuity.

Why the $249K training estimate is so important

DeepSeek’s number is orders of magnitude lower than what competing firms have reported or suggested. The chief executive of OpenAI has publicly estimated the cost of training GPT‑4 at well over $100 million. In an earlier paper, DeepSeek pegged the training cost for its V3 model at $5.6 million, a sum that is already svelte compared with other large-scale systems.

The cost advantage is reflected in pricing: DeepSeek lists R1 inference at around $0.14 per million tokens (roughly 750,000 words), while OpenAI charges $7.50 per million tokens for a similar tier. Although training and serving costs are separate line items, a cheaper training pipeline can support more aggressive product pricing and faster iteration.
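As a rough illustration of that gap, the two quoted per-token prices can be compared directly. The following is a minimal Python sketch: the helper name `inference_cost` and the 10-million-token workload are hypothetical, and real bills depend on details such as the split between input and output tokens, which the quoted figures do not break out.

```python
# Prices quoted in the article, in US dollars per million tokens.
DEEPSEEK_R1_PER_M = 0.14
OPENAI_PER_M = 7.50


def inference_cost(tokens: int, price_per_million: float) -> float:
    """Return the cost in dollars of processing `tokens` tokens."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload size, chosen only for illustration.
workload_tokens = 10_000_000

deepseek_cost = inference_cost(workload_tokens, DEEPSEEK_R1_PER_M)
openai_cost = inference_cost(workload_tokens, OPENAI_PER_M)
price_ratio = OPENAI_PER_M / DEEPSEEK_R1_PER_M

print(f"DeepSeek R1: ${deepseek_cost:.2f}")
print(f"OpenAI tier: ${openai_cost:.2f}")
print(f"Price ratio: {price_ratio:.1f}x")
```

At the quoted list prices, the ratio works out to a little over 50 to 1, regardless of the workload size chosen.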

How DeepSeek managed to keep model costs low

R1 was trained on 512 Nvidia H800 accelerators, the paper said. The H800 is the China-compliant cousin of the H100, with performance capped to satisfy export restrictions. Instead of treating that as a disadvantage, DeepSeek used system-level efficiency and careful scheduling to boost throughput per dollar.
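A back-of-envelope calculation shows what the headline figure would imply about the length of the run. The sketch below takes the 512-accelerator count and the $249,000 cost from the article. The $2-per-GPU-hour rental rate is an assumption, not a figure reported here for R1 (it matches the rate DeepSeek assumed in its V3 cost estimate), so the resulting duration is illustrative only.

```python
NUM_GPUS = 512          # H800 accelerators, from the article
RUN_COST_USD = 249_000  # headline training cost, from the article

# ASSUMPTION: rental price in USD per GPU-hour. Not stated for R1.
ASSUMED_RATE_PER_GPU_HOUR = 2.00


def implied_gpu_hours(cost_usd: float, rate_per_gpu_hour: float) -> float:
    """GPU-hours that a given budget buys at a given hourly rate."""
    return cost_usd / rate_per_gpu_hour


def wall_clock_hours(gpu_hours: float, num_gpus: int) -> float:
    """Wall-clock hours if all GPUs run in parallel for the whole job."""
    return gpu_hours / num_gpus


gpu_hours = implied_gpu_hours(RUN_COST_USD, ASSUMED_RATE_PER_GPU_HOUR)
hours = wall_clock_hours(gpu_hours, NUM_GPUS)
days = hours / 24

print(f"Implied GPU-hours: {gpu_hours:,.0f}")
print(f"Wall-clock time:   {hours:.1f} hours (about {days:.1f} days)")
```

Under that assumed rate, the budget corresponds to roughly 124,500 GPU-hours, or about ten days on 512 accelerators. A different rate scales the result proportionally.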

The company’s approach marries pragmatic hardware choices with algorithmic and data efficiencies: a tighter form of data deduplication, intentional curricula that foreground high-signal samples, and reasoning-focused objectives rather than brute-force scale. In other words, the team may have engineered a shorter, smarter training run rather than a longer and larger one.

This approach is part of a larger trend that includes work from groups like Epoch and the Allen Institute for AI: the most competitive labs are extracting more and more capability from each GPU-hour through low‑precision training, memory optimizations, and workload-aware cluster tuning, instead of simply throwing more chips at the problem.

Read the fine print on training cost disclosures

Training-cost disclosures are notoriously slippery. Experts say a “run cost” often excludes items such as data acquisition and cleaning, human feedback labeling, evaluation infrastructure, engineering salaries, and the amortized price of compute clusters. The headline number remains useful, but it may describe only the final gradient-crunching phase rather than the whole R&D bill.

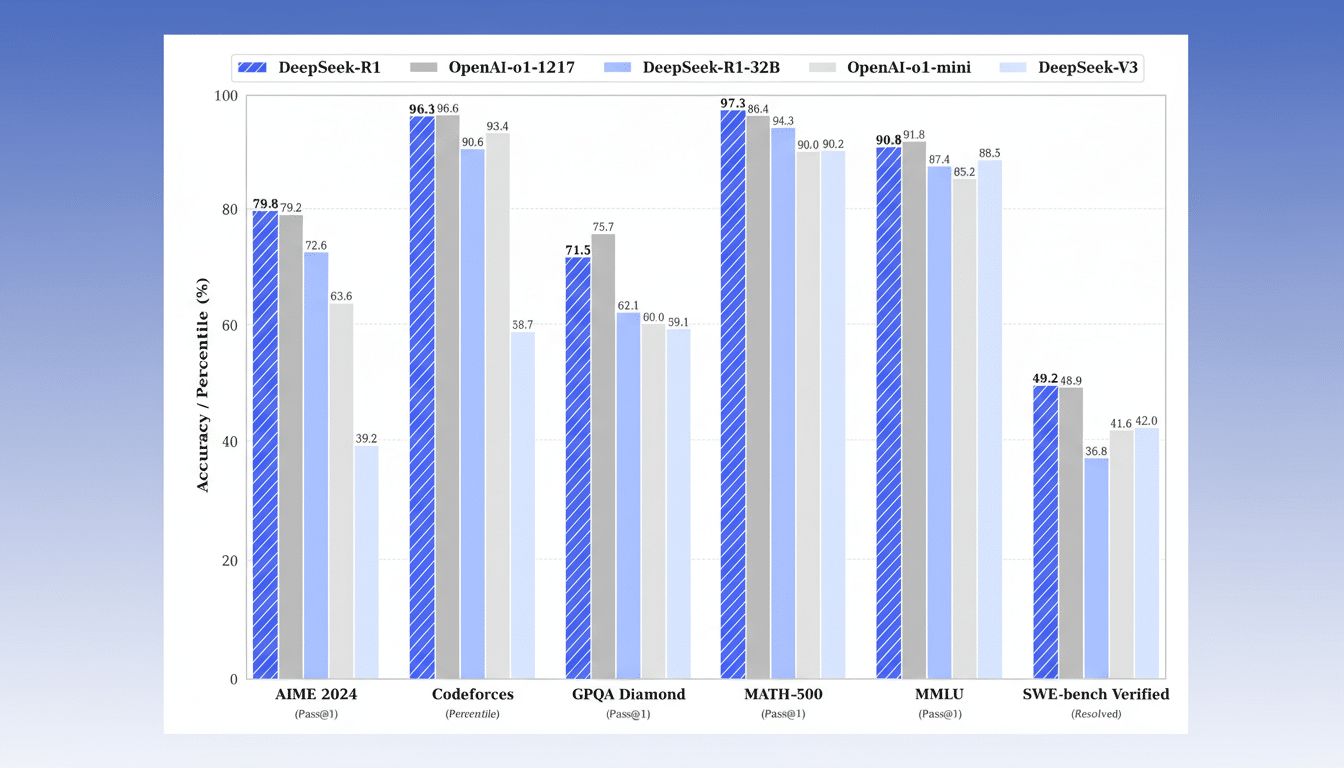

Independent observers will also be examining just what quality bar that $249,000 bought. Parity is nuanced in benchmark comparisons: performance varies across task families, prompt lengths, and tool use. Google researchers, for example, have argued that a small Gemma 3 configuration can achieve approximately 98% of R1’s accuracy on certain internal metrics using one GPU, underscoring how much modeling and evaluation decisions shape perceived value.

What it means for the AI race and model economics

If DeepSeek’s accounting is accurate, the message to the market is clear: capability gains need not be directly proportional to spend. That defies the narrative that only the most well-capitalized labs can compete, and it complicates fund-raising pitches built on an ever-growing training budget.

It also bolsters the argument for open, reproducible research that disseminates cost-saving methods throughout the system.

There are geopolitical and supply-chain dimensions as well. Export controls have limited Chinese labs’ access to the latest Nvidia parts, forcing teams to squeeze performance out of H800 clusters and alternative accelerators. DeepSeek’s account suggests that better software and training approaches can deliver results even under compute constraints.

What to watch next as verification efforts unfold

Verification and reproducibility will be everything. Look for third‑party audits of training logs, standardized benchmarks from the likes of MLCommons, and independent estimates of energy use that account for the full environmental cost. Watch as well how aggressively DeepSeek ships updates: if the team can iterate this quickly at this pricing tier, competitors will be on the clock to keep up.

While global AI spending is expected to keep growing, this paper homes in on a crucial point: it’s no longer enough to simply be the one who can buy the most GPUs. The question is who can turn constrained compute into competitive reasoning performance, and do so repeatedly, with verification that comes cheap.