The latest offering from Anthropic is a small, distilled language model called Claude Haiku 4.5, built to undercut the company’s larger models on both speed and price. You can try it for free as of today right inside the Claude apps designed for everyday users.

The company claims Haiku 4.5 matches or exceeds Claude Sonnet 4 on key tasks and is “roughly twice as fast while costing a third as much,” an eye-opening claim in a market where lower latency can matter more than raw scale.

For developers and teams struggling with compute budgets, the pitch is simple. Haiku 4.5 is built to provide “good enough” intelligence for most real-time work, with heavier-duty models kept on tap for complex reasoning or planning. That kind of division of labor is emerging as a hallmark of modern AI stacks.

What Claude Haiku 4.5 delivers for low-latency AI use

Haiku 4.5 is a small language model built for low-latency environments: live messaging, rapid drafting, inline code assistance, search enhancement, and agentic tool use. Anthropic says that despite the model’s much smaller footprint, it codes on par with Claude Sonnet 4 on SWE-bench Verified, a competitive benchmark for software-engineering tasks, and that it outperformed Sonnet 4 on computer-use tasks, which emphasize speed and tool orchestration.

The result is a model that feels snappy enough to keep pace with you during interactive sessions while remaining competitive across a surprisingly broad range of tasks. Anthropic positions it as the natural candidate for high-scale experiences where milliseconds and unit economics drive decisions.

Haiku 4.5 goes head to head with Claude Sonnet 4.5

On broader benchmarks, Haiku 4.5 is competitive with Claude Sonnet 4.5, the company’s primary LLM, across multimodal, reasoning, and agentic evaluations (such as MMMU for visual reasoning, AIME-level math, and τ²-bench for agentic tool use). That’s not to say Haiku unseats Sonnet 4.5 in raw capability; Anthropic still refers to Sonnet 4.5 as its strongest coding model. Rather, it shows how far small models have come and how narrow the gap can be in practical workflows.

Anthropic also points to a hybrid pattern: let Sonnet 4.5 break a complex problem down into a high-level, multi-step plan, then hand execution over to a “team” of Haiku 4.5 instances.

In enterprise scenarios, that can mean cheaper long-running agents without compromising task completion.
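The plan-then-delegate pattern described above can be sketched in a few lines. This is a minimal illustration, not Anthropic’s implementation: `planner` and `worker` are hypothetical callables standing in for whatever client code calls the larger and the smaller model, and running subtasks in a thread pool is an assumption about how the “team” might be scheduled.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical interfaces: each callable wraps your own model client.
Planner = Callable[[str], List[str]]  # task -> ordered list of subtasks
Worker = Callable[[str], str]         # subtask -> result text


def plan_and_execute(
    task: str,
    planner: Planner,
    worker: Worker,
    max_workers: int = 4,
) -> List[str]:
    """Ask the larger model for a plan, then fan subtasks out to small-model workers."""
    subtasks = planner(task)
    if not subtasks:
        return []
    # pool.map returns results in the same order as the subtasks.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(worker, subtasks))
```

In practice `planner` would wrap a call to the larger model and `worker` a call to the smaller one. Passing them in as plain functions keeps the orchestration logic testable without network access.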

Pricing, access, and availability for Claude Haiku 4.5

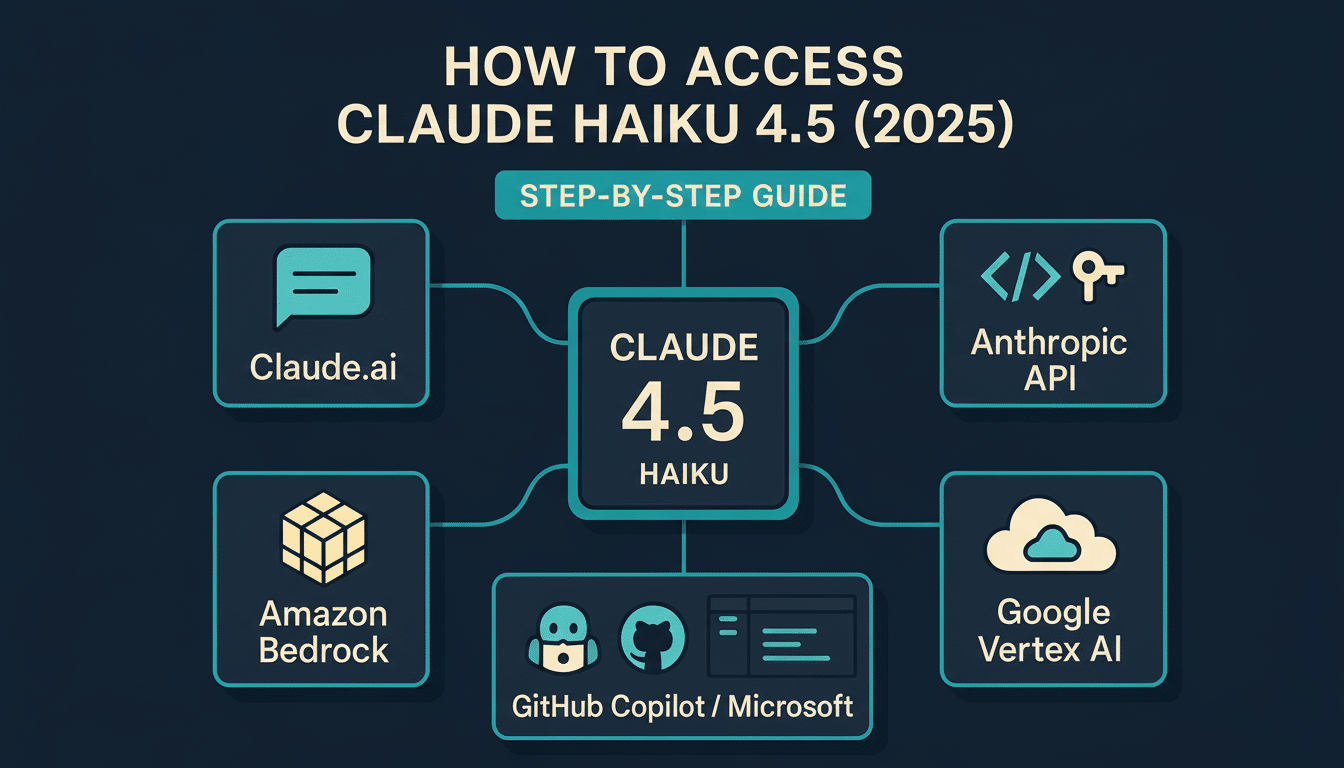

Haiku 4.5 is free for end users inside the Claude apps, making it a painless on-ramp for teams kicking the tires on latency-sensitive use cases. For production deployments, Anthropic lists pricing at $1 per million input tokens and $5 per million output tokens, both on its API and through partners including Amazon Bedrock and Google Cloud’s Vertex AI.

The economics are striking. To put that in context, processing 100,000 input tokens (roughly 77,000 words) would cost about $0.10, and generating about 100,000 output tokens would cost another $0.50, for a total of around $0.60 before infrastructure overhead. For high-volume chat, support triage, and automated document operations, that pricing could change the build-versus-buy calculation.
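The arithmetic above is easy to reproduce for other workloads. The following sketch assumes only the two list prices quoted in this article; the function name and constants are illustrative, and the estimate ignores any discounts, caching, or infrastructure costs.

```python
from decimal import Decimal

# List prices quoted in the article, in US dollars per million tokens.
INPUT_PRICE_PER_MTOK = Decimal("1")
OUTPUT_PRICE_PER_MTOK = Decimal("5")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Return the estimated token cost in dollars for one workload."""
    input_cost = Decimal(input_tokens) / TOKENS_PER_MILLION * INPUT_PRICE_PER_MTOK
    output_cost = Decimal(output_tokens) / TOKENS_PER_MILLION * OUTPUT_PRICE_PER_MTOK
    return input_cost + output_cost
```

Calling `estimate_cost(100_000, 100_000)` reproduces the roughly $0.60 figure from the example. `Decimal` is used instead of floats so that small per-request amounts add up without rounding drift.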

Safety, alignment, and Anthropic’s AI risk posture

Anthropic claims that Haiku 4.5 shows low rates of risky behavior, is “substantially more aligned” than its predecessor, Claude Haiku 3.5, and is “significantly” more aligned than both Claude Sonnet 4.5 and Claude Opus 4.1. The model falls under the company’s AI Safety Level 2 (ASL-2), an internal designation described in its system card, which signals a risk-conscious posture for customer-facing deployments and compliance-sensitive work.

And while ASL is not an external certification, the focus on alignment and documentation does reflect the purchasing habits of finance, healthcare, and public-sector buyers, who increasingly demand auditability, safety evals, and documented model scope.

Why a free, fast model matters for real-time AI apps

Small models aren’t simply budget-friendly; they open up product surfaces where lag kills engagement. Imagine live customer support copilots that must reply in real time, spreadsheet and email agents that operate while you type, or coding assistants within IDEs that can’t slow your cursor. In such environments, a 2x speedup can seem like a whole new world.

Haiku 4.5 being free in consumer and team apps is almost sure to drive experimentation. For example, developers can prototype agent workflows that include retrieval, tool use, and UI automation, then take those to production on the same model through the API. For more challenging problems, such as compliance-heavy summarization, complex multi-hop reasoning, or large-scale code refactors, teams can automatically escalate to Sonnet 4.5 as needed, while still keeping costs in check by returning routine steps to Haiku.
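One way to picture that escalation is a small router that sends routine prompts to the cheaper model and hands harder ones to the larger model. The sketch below is purely illustrative: the keyword heuristic, the `HARD_TASK_HINTS` list, and the `small` and `large` callables are all assumptions, and a production system would likely rely on a stronger signal than keywords.

```python
from typing import Callable, Tuple

Model = Callable[[str], str]  # hypothetical wrapper around a model call

# Illustrative markers of "hard" requests, loosely based on the examples above.
HARD_TASK_HINTS = ("refactor", "compliance", "multi-hop")


def looks_hard(prompt: str) -> bool:
    """Crude heuristic: flag prompts that mention a known hard-task keyword."""
    lowered = prompt.lower()
    return any(hint in lowered for hint in HARD_TASK_HINTS)


def route(
    prompt: str,
    small: Model,
    large: Model,
    is_hard: Callable[[str], bool] = looks_hard,
) -> Tuple[str, str]:
    """Return (tier, reply), escalating to the large model only when needed."""
    if is_hard(prompt):
        return "large", large(prompt)
    return "small", small(prompt)
```

Because the decision function is injected, the keyword check can be swapped for a classifier or a confidence score without touching the routing code.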

The firm is sharpening its portfolio around a clear product line: heavyweight reasoning when you need it and a nimble default everywhere else. If the claimed benchmarks and price-performance hold up in production, Haiku 4.5 could become the go-to engine for real-time AI experiences while moving the market even closer to small-model-first design.