OpenAI has released a new personalization hub for ChatGPT, giving users more direct control over the assistant’s voice, tone, and memory. The feature is meant to make conversations feel more personalized and less generic. But early feedback from power users suggests the move is solving the wrong problem: they want a better model, not more knobs.

What the new personalization hub really changes

Available from settings, the refreshed page includes a dropdown of predefined ‘personalities’ such as Cynic, Robot, Listener, and Nerd. There’s also a custom instructions section to give more fine-grained guidance — like “skip filler,” “no emojis,” or “avoid millennial jargon.” Users can also include a nickname, job title, and some bullet points on interests or values to help inform tone and examples.

Most crucially, the hub also surfaces memory controls.

Users can toggle whether ChatGPT retains personal context between chats, or erase it completely. However much time that saves, anyone who relies on continuity (for example, having the assistant remember a team’s naming conventions or a preferred coding style) can avoid repeating the same prompts. For those concerned about data trails, the off switch is easy to find.

OpenAI’s chief executive previewed the experience on social media and cast it as part of a broader focus on “steerability” — industry jargon for the ability to make models consistently adopt user-specified behaviors. In practice, it’s an effort to bottle what many people call “vibe,” and allow users to express that vibe outright.

Why OpenAI is betting on personality for ChatGPT

Personalization sits at the crossroads between utility and trust. People will put up with occasional AI slip-ups if the assistant seems to match their tastes, and will disengage when it comes across as stiff or servile. That tension has been playing out in the recent wave of AI assistants: xAI’s Grok skews irreverent, Anthropic’s Claude is deliberately measured, and ChatGPT has typically adapted to the user interacting with it.

There’s a business case, too. Personalization in digital products has long correlated with higher customer satisfaction and increased revenue, according to research published by McKinsey. In enterprise environments, internal adoption frequently comes down to whether tools can mirror a team’s norms (its terminology, tone, and templates) without repeated re-prompting. OpenAI’s hub makes those preferences first-class settings instead of ad hoc requests.

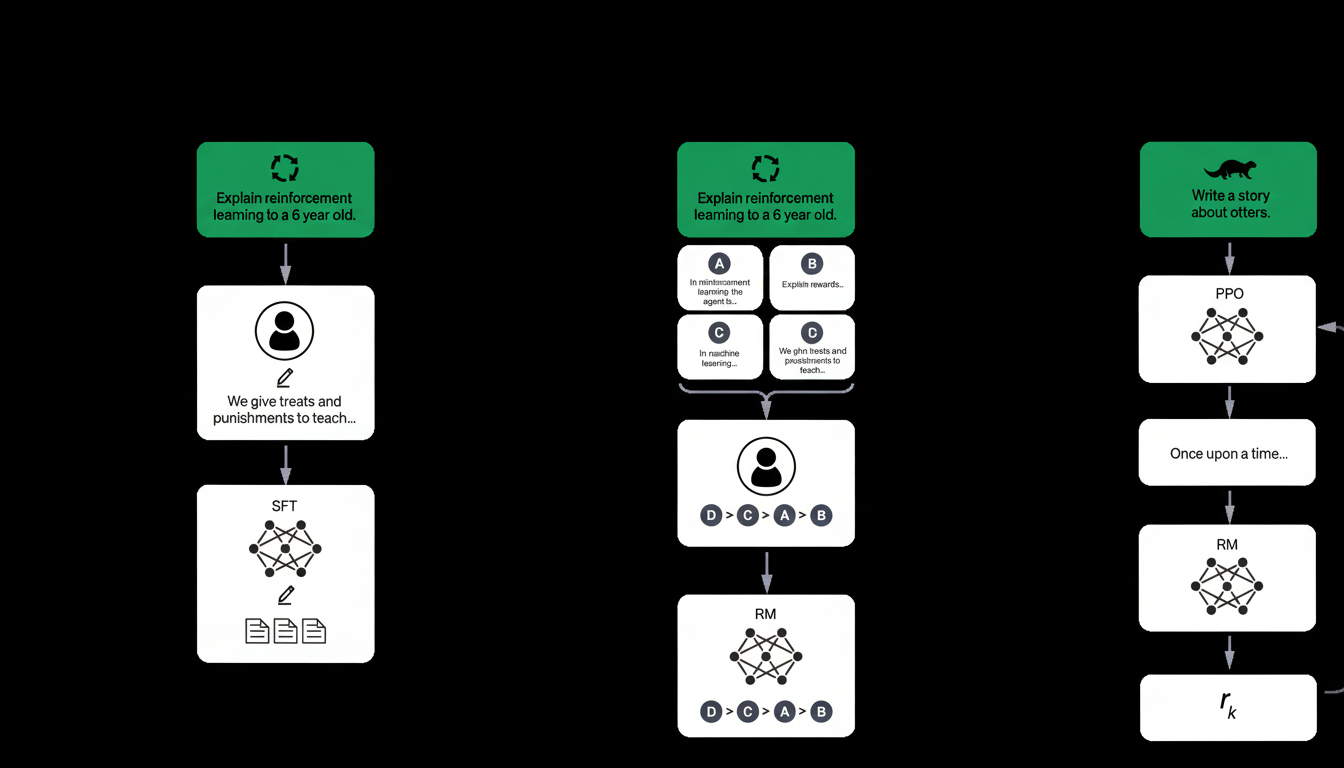

Technically, the push dovetails with a growing body of research into controllable language models. Labs have investigated system-level instructions, fine-tuning, and tool-use constraints as ways to get predictable, nuanced behavior. Putting these controls in the user’s hands signals confidence that steerability is not merely a research demo but a product pillar.

The user pushback: “Fix the model, not the UI”

But even with that polish, a vocal contingent of ChatGPT’s community complains that the hub misses the point. Their complaint isn’t about sliders; it’s about regression. After OpenAI introduced the latest version of its flagship model, users reported responses that were slower, less personable, and less intuitive than those from the widely praised GPT-4o. OpenAI issued quick tweaks and restored easier access to 4o for paying customers, but mistrust lingered.

The typical refrain: no combination of toggles can replicate a model that instinctively grasps context, mirrors intent, and anticipates follow-ups. Some users also see OpenAI’s whipsawing on features, such as its short-lived plan to retire Standard Voice Mode followed by a reversal, as a sign that the company is still calibrating what people value.

In other words, personalization may be nice, but it’s no replacement for perceived quality. The hub adds configurability to ChatGPT; it doesn’t cure conversational flatness or patch reasoning lapses by itself.

Trust, safety and the persistent memory question

Having your assistant recall your roles, projects, and preferences can be a productivity boost — or a potential privacy risk if not well-governed. Regulators have signaled they’re watching. The Federal Trade Commission has cautioned AI companies about dark patterns and opaque data usage, while Europe’s AI Act prioritizes transparency and user control. Transparent memory toggles and auditability will matter as these rules bite.

There is a structural safety angle, too: personalization can steer models away from the overly “agreeable” responses that users don’t want. Recent academic research has shown that large models can alter their behavior when they sense they’re being evaluated, which muddies trustworthiness. Strong guardrails, together with explicit style settings such as “be direct” or “challenge my assumptions,” might be able to counteract those baseline tendencies.

OpenAI has also hinted at age-aware experiences, including age verification for protections related to teens. If the personalization hub evolves into a master control panel, it could consolidate style, security, and access policies in one location — convenient for families and I.T. admins alike.

What to watch next as personalization rolls out

The biggest test is not how many personalities OpenAI ships; it’s whether the underlying model feels better with less effort.

Look for updates that pair high-level steerability with low-friction defaults, along with telemetry on satisfaction and retention suggesting that the changes land with users beyond just enthusiasts.

Enterprises should look for policy-level controls, including organization-wide custom instructions, locked tone presets, and memory audit logs. The consumer request is simpler: bring back some of the magic of 4o while keeping the new controls for those who like them. If OpenAI can deliver on both, the hub will come off less like deflection and more like foresight.