ChatGPT’s voice feature appears to be moving out of its full-screen, standalone interface and into the main chat. That could combine spoken conversation and rich on-screen responses in a single, unbroken window. Evidence found in a recent Android app build suggests that voice sessions will take place inside the chat thread itself. The thread would include controls to end a session and to mute or unmute your microphone, rather than sending you to a separate, minimal voice-only screen.

What changes in the ChatGPT voice and chat UI layout

Today, starting a voice chat with ChatGPT opens a full-screen interface focused on talking and listening. Users can turn on captions to read a live transcription, but the screen has no room for links, maps, weather cards, or other rich elements. If you want visuals, you have to leave voice mode and return to the chat.

The new approach keeps you inside the conversation thread. Ask for a nearby coffee shop, and you could keep talking while the app returns a map pin, ratings, and directions. Ask for a forecast, and a weather card might appear without interrupting the conversation. Strings and behaviors in the new Android APK point to quick buttons for ending the session or muting the microphone. The feature does not yet appear to be live for all Android users, which suggests a partial rollout through server-side flags and app updates.

Why in-thread voice matters for multimodal AI interactions

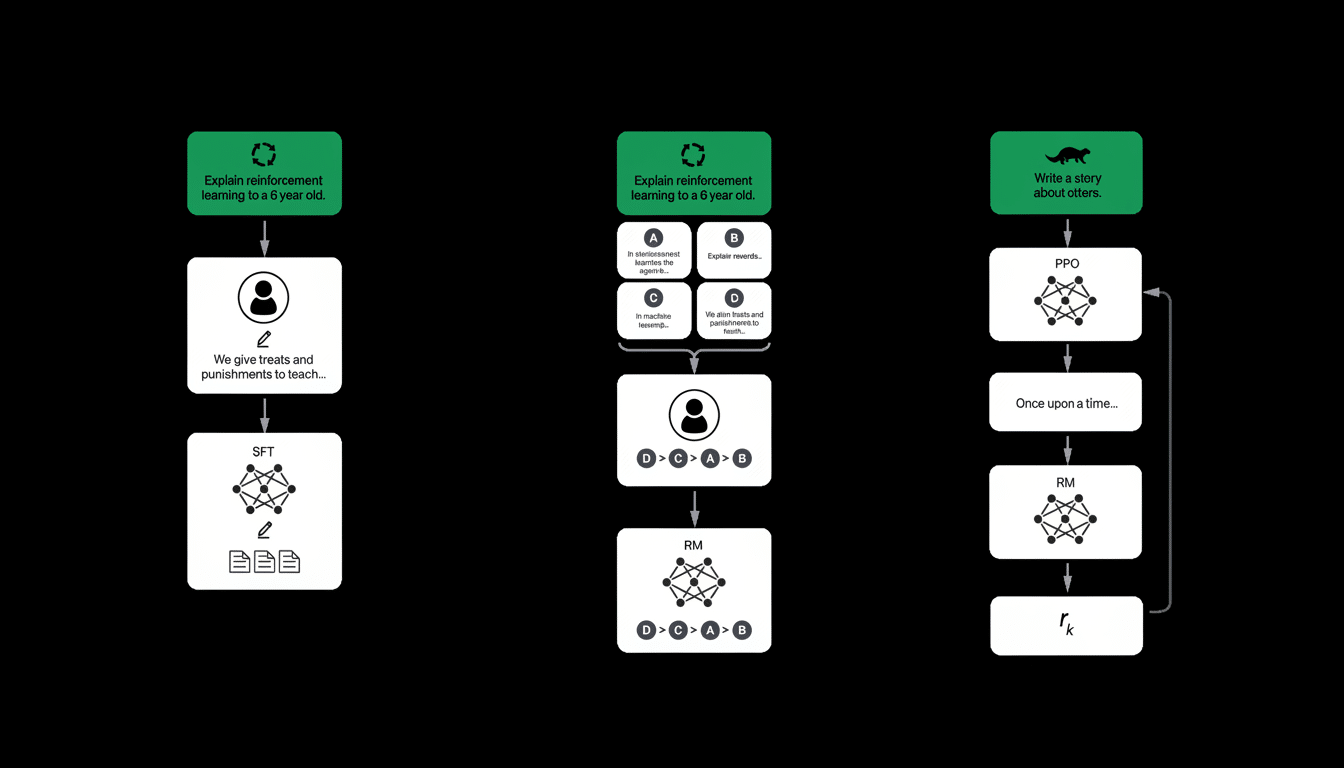

Putting voice in the main chat reflects how today’s multimodal models are meant to work best: blending speech, text, and images in a single exchange.

OpenAI’s GPT-4o demos showed sub-second, human-like conversational latency, along with the ability to describe what’s on screen and reply with images or formatted answers. The current full-screen voice UI is clean, but it walls off those visual elements. Bringing voice directly into the chat lets users benefit from those capabilities without awkwardly switching between modes.

The practical benefits are easy to picture. Cooking with your phone propped up nearby? You could talk through the steps while a timer and ingredient list appear in the app. Planning a trip? Keep dictating constraints while a packing checklist and flight options fill the thread. In effect, the assistant talks like a person while working like a search engine, planner, and note-taker combined.

Competitive landscape and platform context for voice chat

The change is part of a larger industry trend. Gemini Live offers voice-first conversations that still present cards and links, and Microsoft’s Copilot mobile apps support voice within a standard chat view. Folding voice into the core chat experience makes these assistants feel less like separate modes that pull you out of your flow. Instead, they feel more like one continuous workspace that adapts to how you want to communicate.

Android’s footprint makes the move especially significant. Android holds about 70% of the global mobile OS market, according to StatCounter, so even small interface changes can affect how hundreds of millions of people experience AI assistants. If the Android rollout goes well, an iOS version would presumably follow, bringing the same in-thread voice pattern to both platforms.

Privacy, accessibility, and safety considerations in chat

Running voice inside the chat raises design and policy questions. It must be obvious whether the microphone is on or off. A visible indicator and clearly placed end and mute controls help prevent unintended listening. Push-to-talk and tap-to-mute options can also reduce how much background sound is captured. Showing captions in the thread makes spoken replies more accessible to users who want or need text alongside audio.

On the policy side, transcripts and audio snippets will need consistent handling for storage, retention, and account controls. OpenAI has publicly emphasized safety for its audio features and voices. Extending those safeguards into an always-visible chat context could make trust features easier to audit and understand.

What to watch next as in-thread voice rolls out widely

In-thread voice appears to be close, but it is not yet widely available. Expect staged availability, A/B testing, and rapid iteration on the controls as early feedback comes in. The company has also been experimenting with social features such as direct messages and group chats in mobile builds. A voice-first chat thread could complement those efforts, enabling hands-free collaboration without losing the context and attachments already in the conversation.

Done right, integrating voice into the main chat will do more than fill a gap in the UI. It will close the loop between speaking, seeing, and doing. That is the core promise of multimodal AI, and this redesign brings ChatGPT one step closer to delivering it in everyday use.