ChatGPT is the most widely used conversational AI system in the world, a general-purpose assistant developed by OpenAI that can generate text, write and debug code, and answer questions about an image or voice query. The appeal is straightforward: You type or speak a prompt, and it responds with fluent, context-aware output in seconds.

What ChatGPT is and how it works behind the scenes

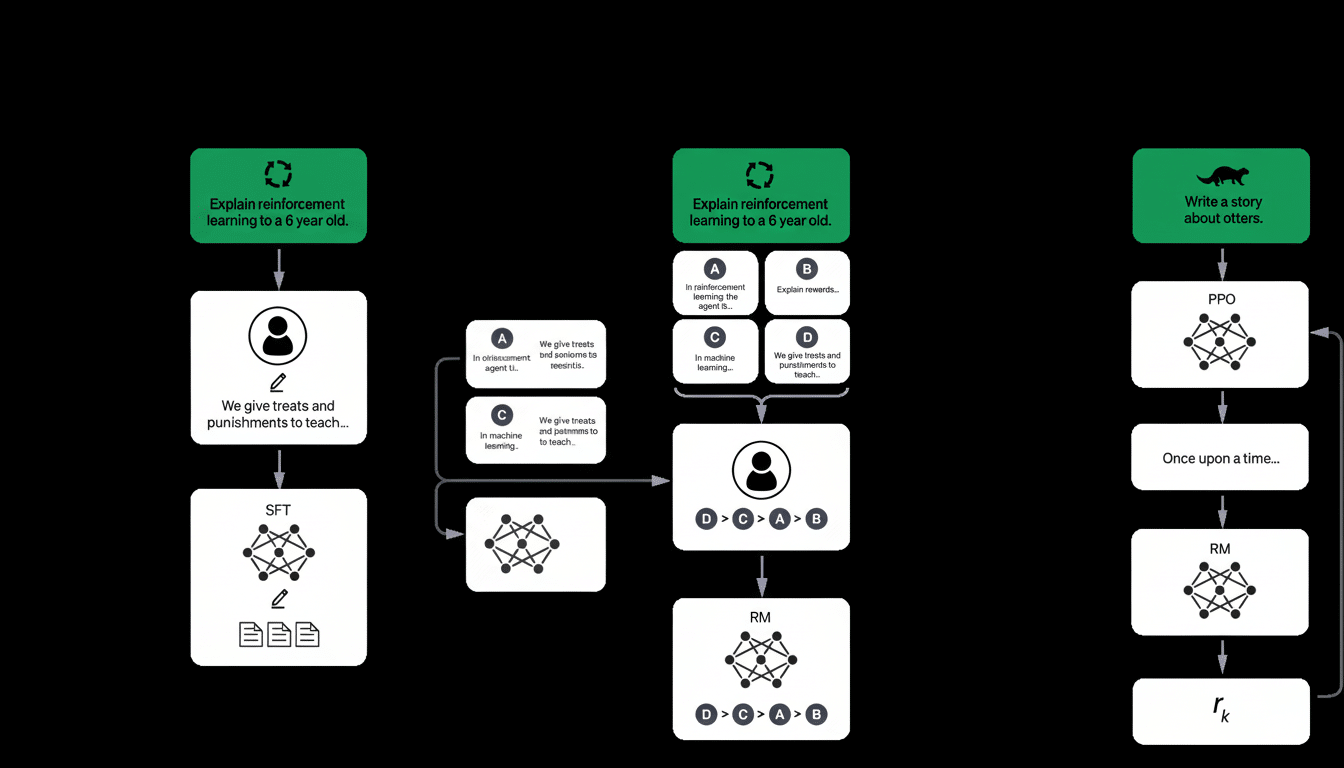

Behind the scenes, ChatGPT is powered by a family of large language models (LLMs) trained on extensive text and media corpora. These models are based on transformers, architectures that use attention to weigh how each word relates to the others in its context, and they predict tokens (small units of text, such as words or word fragments) based on statistical patterns. They don’t “know” facts the way a database does; rather, they build a response one token at a time, each time choosing a likely next token given your prompt and everything generated so far.
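
To make next-token prediction concrete, here is a minimal sketch in Python. It is a toy, not how ChatGPT is implemented: the candidate tokens and their scores are invented for illustration, and the function names are hypothetical. It only shows the general idea of turning scores into probabilities and picking the most likely continuation.

```python
import math

# Hypothetical scores (logits) a model might assign to candidate next tokens
# after the prompt "The capital of France is". The values are invented.
TOY_LOGITS = {" Paris": 6.0, " Lyon": 2.5, " a": 1.0, " France": 0.5}


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def greedy_next_token(logits):
    """Pick the single most probable token (greedy decoding)."""
    probs = softmax(logits)
    return max(probs, key=probs.get)


prompt = "The capital of France is"
print(prompt + greedy_next_token(TOY_LOGITS))  # The capital of France is Paris
```

Production systems typically sample from the probability distribution rather than always taking the top token, which is one reason the same prompt can yield different answers.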

Modern releases add multimodality. With models like GPT-4o, ChatGPT can interpret and describe images, pull information out of them, and converse by voice, essentially transforming the text bot into a general-purpose interface for information and tasks. What sets it apart from simple text prediction is tool use (e.g., code interpreter and web browsing), file uploads for retrieval, and safety guardrails.

What it does well — and where it falls short

ChatGPT is best at structured writing and synthesis. That includes first drafts of emails, briefs and reports, along with other routine work writing; spreadsheet formulas; API calls; quick prototypes where testing can wait (the “#FIXME, #TODO” stage); and summaries of PDFs. Teams commonly use it as a rubber duck for brainstorming and as a tireless junior research assistant.

But it can hallucinate, stating things with confidence that sound right but aren’t. It might cite papers that don’t exist, overlook edge cases in code, or overgeneralize. Stanford researchers who raised concerns about therapy chatbots said they “can produce harmful or stigmatizing responses” in sensitive settings, a reminder of the value of critical review and expert oversight.

In classrooms, adoption is real but contested. One in four U.S. teens said they had used ChatGPT for schoolwork, according to a Pew Research Center survey. Educators are moving away from blanket bans and toward guided use: show your sources, disclose AI assistance and assess process as well as answers.

Privacy, safety and governance for AI assistants

OpenAI says API data isn’t used for training by default, and it provides enterprise controls such as data retention settings and regional storage. Still, the best practice is never to paste sensitive or regulated information unless your organization has sanctioned guardrails in place.

Regulators and advocates are pushing for greater accountability. European privacy groups, such as NOYB, have filed complaints about inaccurate outputs and people’s right to have incorrect data about them corrected under GDPR. Industry coalitions like the Partnership on AI and the C2PA content provenance initiative are promoting ways to identify AI-generated media and limit its abuse.

Current safety systems screen for self-harm, violence and illegal instructions, but they are not perfect. That’s why high-stakes choices of any kind (medical, legal, financial) should not depend on an unvalidated chatbot answer.

Costs, speed and the compute behind ChatGPT

Individual ChatGPT answers are inexpensive to generate, but serving them at planetary scale is costly. Inference runs on fleets of AI accelerators, historically dominated by Nvidia’s chips, with alternatives being explored, according to Reuters. Deeper reasoning modes are slower and costlier; faster, lighter ones sacrifice depth.

Independent analyses from nonprofits like Epoch AI estimate that an average query may use a fraction of a watt-hour of electricity, and OpenAI’s leadership has said the typical prompt uses about one-fifteenth of a teaspoon of water when you factor in data center cooling (via Business Insider). Energy and water use are workload-dependent: image generation and long “thinking” modes draw more.
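
For a sense of scale, the one-fifteenth-of-a-teaspoon figure can be converted into metric units. The sketch below assumes the figure refers to water and to a US teaspoon (about 4.93 millilitres); the daily prompt volume is a purely hypothetical round number chosen for illustration, not a reported statistic.

```python
ML_PER_US_TEASPOON = 4.92892  # one US teaspoon in millilitres


def water_per_prompt_ml(teaspoon_fraction=1 / 15):
    """Water per prompt in millilitres, from the quoted teaspoon fraction."""
    return ML_PER_US_TEASPOON * teaspoon_fraction


def daily_water_litres(prompts_per_day):
    """Total litres per day for a given number of prompts."""
    return water_per_prompt_ml() * prompts_per_day / 1000


# HYPOTHETICAL volume, used only to show how the per-prompt figure scales.
EXAMPLE_PROMPTS_PER_DAY = 1_000_000

print(f"{water_per_prompt_ml():.2f} mL per prompt")  # 0.33 mL per prompt
print(f"{daily_water_litres(EXAMPLE_PROMPTS_PER_DAY):.0f} L per day")  # 329 L per day
```

The per-prompt amount is tiny; totals become significant only through volume, and heavier workloads would push the per-prompt figure up.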

Competition and an exploding AI app ecosystem

ChatGPT has stiff competition: Google’s Gemini, Anthropic’s Claude and open-source models such as Llama and Mistral. Some excel at code, some at reasoning and some at speed. Meanwhile, xAI’s Grok and a wave of research-first models emphasize transparent “reasoning” traces and test-time reasoning.

It’s raining apps, too, and the ecosystem around AI assistants is growing fast.

- Revenue-driving app: ChatGPT’s app generates more consumer spending than the category average, according to Appfigures.

- Shifting search behavior: More searches are getting answered by AI directly, per Similarweb. That is forcing publishers and creators to reconsider distribution and attribution.

If you want to get started — and get better results

Be specific: describe your goal, audience, tone and constraints, and include examples. Supply source material to reduce hallucination, and ask the model to cite sources and state its uncertainty. For coding, provide the stack, input-output examples and failing tests. For analysis, ask for step-by-step reasoning and supporting evidence, then for counter-arguments.
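
One way to make that checklist routine is to assemble prompts from named fields. The helper below is a hypothetical sketch, not part of any OpenAI tool: the function name, field labels and closing instruction are all assumptions chosen for illustration, and the resulting text can be pasted into any chat interface.

```python
def build_prompt(goal, audience, tone, constraints=(), examples=(), sources=()):
    """Assemble a structured prompt string from the checklist fields."""
    lines = [f"Goal: {goal}", f"Audience: {audience}", f"Tone: {tone}"]
    sections = (
        ("Constraints", constraints),
        ("Examples", examples),
        ("Sources to rely on", sources),
    )
    for label, items in sections:
        if items:  # skip any section the caller left empty
            lines.append(f"{label}:")
            lines.extend(f"- {item}" for item in items)
    # Ask explicitly for citations and uncertainty.
    lines.append(
        "Cite which source supports each claim and flag anything you are unsure about."
    )
    return "\n".join(lines)


print(
    build_prompt(
        goal="Summarize the attached quarterly report",
        audience="Non-technical managers",
        tone="Plain and direct",
        constraints=["Under 150 words", "No jargon"],
        sources=["The uploaded PDF only"],
    )
)
```

Keeping the fields separate makes it obvious when one is missing, which is often where vague answers start.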

For your own data, use file uploads, retrieval and spreadsheets such as Excel where you need accuracy. Turn on memory only if you know what is stored and why. Never paste secrets. And check against a primary source before acting on an answer.

Legal and copyright questions facing AI today

Lawsuits filed by news organizations, authors and artists challenge whether AI developers had the right to use their work as training data. Courts will determine whether fair use applies at scale and whether any compensation is owed. (For now, companies mitigate risk through content provenance, rights clearance and in-house use policies.)

What’s next for ChatGPT across apps and services

The near-term frontier is agents: systems that plan, browse, write code, run software and carry out multistep tasks with less and less hand-holding. Look for closer integration with calendars, docs and data warehouses (and perhaps more seamless commerce), more natural voice, and richer multimodal understanding across images, audio and video.

Equally important are the guardrails: stronger evaluations, red-teaming and provenance, along with clear labeling when AI is helping out. If we get both sides right — capability and accountability — ChatGPT pivots from curiosity to go-to tool across classrooms, boardrooms and daily life.