Enter the AI productivity director, the unglamorous but game-changing role that many companies are quietly choosing over a chief AI officer. Instead of chasing moonshots, this “magician” focuses on one hard outcome: turning generative AI tools your company already pays for into measurable productivity, safely and at scale.

Why a CAIO Alone Is Not Enough to Drive Adoption

Chief AI officers are useful for governance, risk, and long-horizon model strategy. But most organizations don’t stumble on policy — they stall on adoption. The day-to-day friction of getting thousands of employees to use enterprise-grade AI, inside real workflows, is a change-management challenge, not a research agenda. Even leaders who resist adding a CAIO concede that someone must coordinate this surge in demand and turn “AI everywhere” into outcomes.

That job sits in the messy middle between IT and data. When a team buys a tool like Microsoft Copilot or turns on ChatGPT Enterprise, it’s a technology decision. When they need bespoke models, retrieval pipelines, or guardrails over sensitive content, it’s a data decision. The gap between those worlds is where value leaks — and where the AI productivity director earns their keep.

The Rise of the AI Productivity Director

Think of this role as the head of AI enablement. They orchestrate deployment, training, prompt patterns, policies, and process redesign across business units. They partner with IT to integrate identity, data access, and security controls; with data teams to define when to build versus buy; and with operations to measure time saved and error rates reduced.

Why now? Early evidence suggests that AI tools pay off quickly when adoption is structured. Microsoft’s early Copilot studies reported users completing tasks faster and feeling more productive. GitHub reported that developers in a controlled experiment finished a coding task about 55% faster with Copilot. An MIT experiment found that professionals using ChatGPT completed writing tasks roughly 40% faster, with higher-rated output. These gains don’t materialize by accident; they emerge from intentional adoption programs led by someone accountable.

What This AI Productivity Magician Actually Does Daily

First, they drive usage. Licenses for Copilot, Claude, and ChatGPT Enterprise are expensive; the director ensures seats are activated, workflows are mapped, and teams get hands-on training. They seed prompt libraries, create role-based playbooks, and run office hours so employees go beyond gimmicks to repeatable outcomes.

Second, they make AI safe by design. Working with security and compliance, they set guardrails for PII, confidential data, and model selection. They maintain a catalog of approved tools, define when to escalate to a private model with retrieval augmentation, and cut off shadow AI that bypasses enterprise protections.

Third, they optimize workflows. Instead of bolting AI onto old processes, they redesign steps to exploit summarization, drafting, code generation, and agent automation. They pilot, measure, and scale what works, then retire what doesn’t.

Their KPIs include:

- Active-use rates

- Time saved per process

- Quality uplift

- ROI per license

Proof This AI Productivity Director Model Works

At insurance group Howden, leaders created a director of AI productivity to bridge the “build versus buy” divide and push adoption across a global workforce. The mandate: ensure employees use enterprise tools — Microsoft Copilot in Office for summarization, Anthropic Claude for analytical depth, and ChatGPT as a flexible reasoning partner — in ways that are compliant and effective.

The impact is tangible. Brokers buried in thousands of pages now extract answers in minutes. Routine tasks that once consumed a week can be compressed into a scheduled agent run that finishes in under half an hour. Critically, the role relieves the data team of being the help desk for gen AI, freeing them to focus on the proprietary models and risk analytics that differentiate the business.

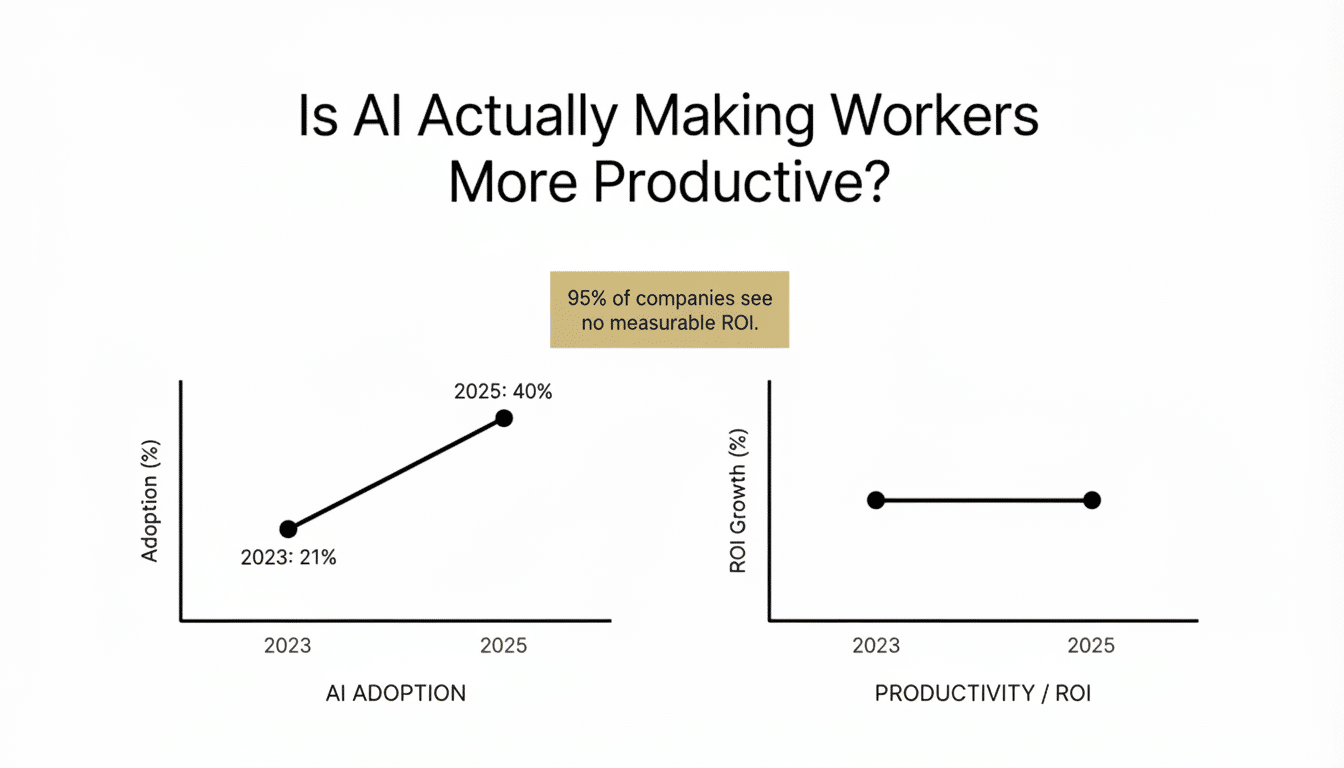

The broader market signals align. McKinsey estimates generative AI could unlock trillions in annual value, yet only a fraction of companies capture it at scale. IDC projects sustained double-digit growth in enterprise AI spending, even as executives cite adoption and change management as top barriers. The gap between investment and impact is precisely the AI productivity director’s remit.

How to Hire and Effectively Measure the AI Productivity Role

Look for a change leader who speaks business, data, and IT. This is not a research scientist or a pure project manager. The ideal candidate has shipped automation at scale, understands prompt engineering and retrieval patterns, can navigate security reviews, and has the credibility to coach senior operators.

Set clear metrics:

- Weekly active users per tool

- Percentage of workflows with AI assistance

- Average time saved per task

- Cost per productive seat

- Reduction in shadow AI

- How quickly new use cases move from backlog to scaled rollout

Track quality signals such as error rates and customer satisfaction changes, not just speed. Align incentives so business leaders own outcomes, while the director standardizes the playbook.
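To make a few of these metrics concrete, here is a minimal Python sketch of how active-use rate, cost per productive seat, and ROI per license might be computed from a usage export. The `ToolUsage` fields, the tool name, and every number are hypothetical, and the ROI formula (value of time saved minus license spend, divided by license spend) is one reasonable definition rather than a standard. Quality signals such as error rates would need their own data and are not covered here.

```python
from dataclasses import dataclass


@dataclass
class ToolUsage:
    """Monthly usage snapshot for one AI tool. All field names are hypothetical."""
    tool: str
    licensed_seats: int
    weekly_active_users: int
    monthly_cost_per_seat: float        # license price per seat per month
    hours_saved_per_active_user: float  # per month, e.g. from time studies
    loaded_hourly_rate: float           # assumed cost of one employee hour


def active_use_rate(u: ToolUsage) -> float:
    """Share of paid seats that are actually used each week."""
    if u.licensed_seats == 0:
        return 0.0
    return u.weekly_active_users / u.licensed_seats


def cost_per_productive_seat(u: ToolUsage) -> float:
    """Monthly license spend divided by active users, not by seats bought."""
    if u.weekly_active_users == 0:
        return float("inf")
    return u.licensed_seats * u.monthly_cost_per_seat / u.weekly_active_users


def roi_per_license(u: ToolUsage) -> float:
    """(Value of time saved - license spend) / license spend, per month."""
    spend = u.licensed_seats * u.monthly_cost_per_seat
    if spend == 0:
        return 0.0
    value = (u.weekly_active_users
             * u.hours_saved_per_active_user
             * u.loaded_hourly_rate)
    return (value - spend) / spend


# Made-up numbers for illustration only.
example = ToolUsage("assistant-a", 1000, 600, 30.0, 4.0, 50.0)
print(f"active-use rate: {active_use_rate(example):.0%}")
print(f"cost per productive seat: {cost_per_productive_seat(example):.2f}")
print(f"ROI per license: {roi_per_license(example):.1f}x")
```

With these made-up inputs, 600 of 1,000 seats are active, so the license that costs 30 per seat effectively costs 50 per productive seat. Dividing spend by active users rather than purchased seats is a deliberate choice: it makes idle licenses visible in the number the director reports.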

The Bottom Line on Making AI Productivity Real at Scale

You don’t need another C-suite title to unlock AI value. You need the operator who turns AI from promise into throughput — the magician who makes enterprise tools disappear into everyday work. Appoint an AI productivity director, fund them properly, and give them a mandate to measure. The fastest way to win with AI isn’t a new model; it’s disciplined adoption at scale.