Asana is introducing AI Teammates, a new category of agentic assistant designed to work inside the company’s project management platform alongside human teams. Rather than a chatbot bolted onto a workspace, these agents are powered by Asana’s Work Graph, the networked map of goals, tasks, owners, and dates, which gives them the context to make decisions that keep projects moving.

The pitch is straightforward, and ambitious: It’s a way to provide every function at every company—from marketing to engineering—a reliable digital coworker who can plan, execute, then course-correct while remaining auditable and under human control.

It’s a significant step in the wave of agents reshaping work software, which aim not only to answer questions but also to get work done.

What AI Teammates Do, and Don’t, Inside Asana’s Workflows

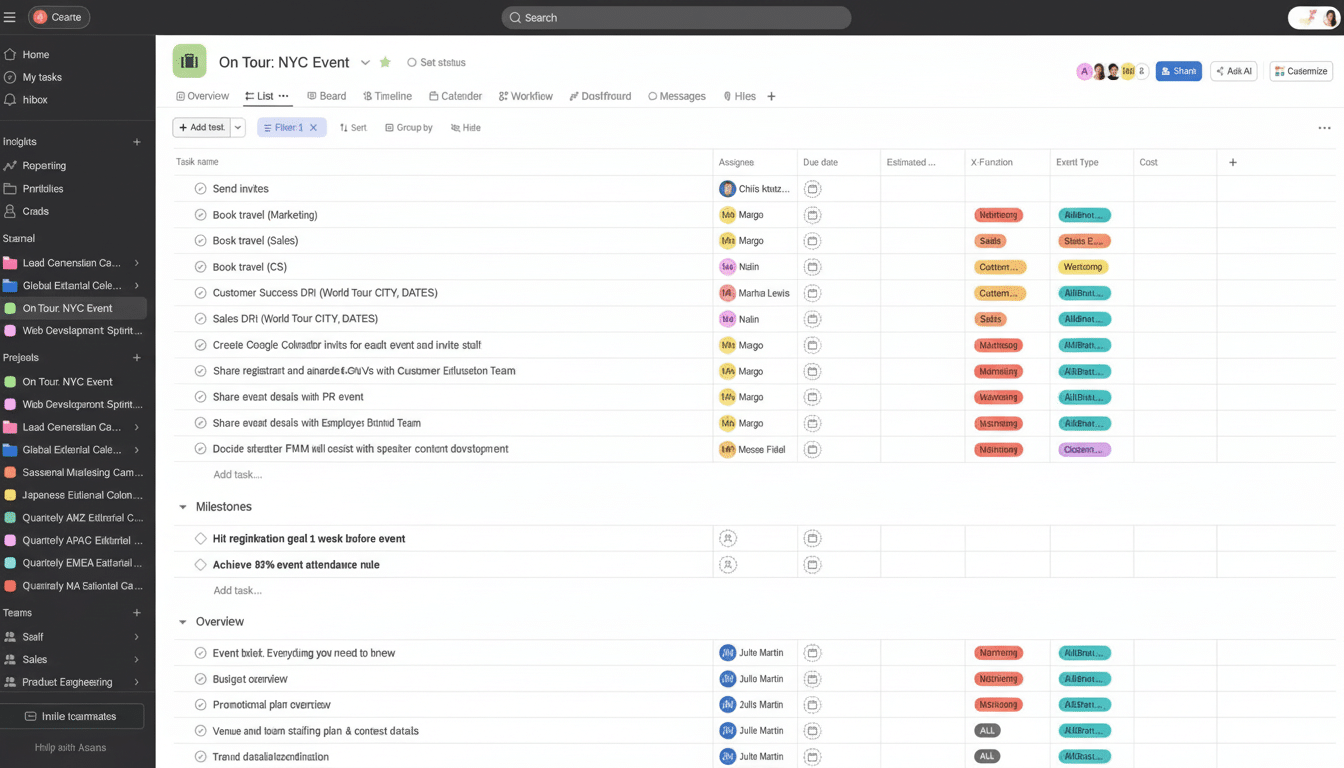

Because they can read the Work Graph, AI Teammates can understand how objectives relate to projects and who’s responsible for what. That context enables them to translate strategy into execution: sketch out plans, assign tasks, track dependencies, and flag risks before deadlines slide. They can also recap progress for stakeholders and suggest trade-offs when resources are limited.

Use cases vary by team. A marketing teammate can draft campaign briefs that reflect the brand guidelines, then compare final assets against those rules and point out discrepancies. An engineering teammate can triage bug reports, group duplicates, and suggest next steps based on severity and component ownership. For customer-facing teams, an agent can distill support tickets into product insights and open follow-up tasks with the right assignees.

Consider a product launch. An AI teammate could build an end-to-end workback plan, monitor milestones across marketing, sales, and engineering, and ping task owners when blockers start to emerge. If dates change in one workstream, it can recalculate the downstream impact and propose a new schedule along with its rationale.
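To make the rescheduling idea concrete, here is a minimal sketch of how downstream dates could be recomputed when one task slips. It is not Asana’s implementation or API: the `Task` class, the `schedule` and `propose_reschedule` functions, and the sample launch tasks are all hypothetical, and the sketch assumes calendar days with no weekends or resource limits.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from graphlib import TopologicalSorter  # standard library, Python 3.9+


@dataclass
class Task:
    """Hypothetical task record: a name, a duration, and prerequisite task names."""
    name: str
    duration_days: int
    depends_on: list[str] = field(default_factory=list)


def schedule(tasks: dict[str, Task], kickoff: date) -> dict[str, tuple[date, date]]:
    """Return (start, finish) per task; a task starts once all prerequisites finish."""
    graph = {name: set(task.depends_on) for name, task in tasks.items()}
    plan: dict[str, tuple[date, date]] = {}
    # static_order() yields every task after all of its prerequisites.
    for name in TopologicalSorter(graph).static_order():
        task = tasks[name]
        start = max((plan[dep][1] for dep in task.depends_on), default=kickoff)
        plan[name] = (start, start + timedelta(days=task.duration_days))
    return plan


def propose_reschedule(
    tasks: dict[str, Task], kickoff: date, slipped: str, extra_days: int
) -> dict[str, tuple[date, date]]:
    """Return {task: (old_finish, new_finish)} for every task whose finish date moves."""
    before = schedule(tasks, kickoff)
    old = tasks[slipped]
    updated = dict(tasks)
    updated[slipped] = Task(old.name, old.duration_days + extra_days, old.depends_on)
    after = schedule(updated, kickoff)
    return {
        name: (before[name][1], after[name][1])
        for name in tasks
        if before[name][1] != after[name][1]
    }


# Illustrative launch plan (made-up tasks and durations).
launch_tasks = {
    "build": Task("build", 10),
    "assets": Task("assets", 5),
    "sales_kit": Task("sales_kit", 3, ["assets"]),
    "launch": Task("launch", 1, ["build", "sales_kit"]),
}

changes = propose_reschedule(launch_tasks, date(2025, 1, 6), "build", 4)
for name, (old_finish, new_finish) in changes.items():
    print(f"{name}: {old_finish} -> {new_finish}")
```

In this example only `build` and `launch` move, because the marketing and sales tasks are not on the path that slipped. The list of affected tasks with their old and new dates is the kind of rationale an agent could attach to a proposed schedule.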

Guardrails and Transparency for Safe, Auditable AI Use

Autonomous agents are helpful only if the people who work with them trust them. Asana stresses that AI Teammates “show their work,” which helps teams understand what the agent plans to do, and why. Users can step in, edit the plan, or require approvals for high-impact actions. That visibility counts in regulated or complex environments where rule-of-thumb automation just won’t fly.

Admins receive governance controls to determine which projects, fields, and documents an agent may reach. Audit trails record what each agent has done, and policy controls keep sensitive data cordoned off from the rest of the organization. The model is informally called an “explainability first” design, aimed at reducing the risk of silent errors that surface only after the work has gone off course.

Why This Matters for Coordinated, High-Trust AI at Work

The business case for agentic software is getting more compelling. McKinsey research has estimated that generative AI and related technologies could automate work activities that take up 60% to 70% of employees’ time, with the greatest potential in knowledge work. But automation without organization can misfire. By orienting decision-making around the Work Graph, Asana is targeting the difficult part of knowledge work: coordination and prioritization, not mere content creation in isolation.

There’s also a cultural angle. Professionals are more willing to work with AI when the systems handle mundane, low-stakes tasks and allow clear supervision, according to a Stanford-affiliated study. AI Teammates are framed exactly that way: as collaborators that assist with the work, not as replacements competing with the people on the team. The controls, in turn, let managers set the boundaries of each agent’s autonomy.

How It’s Different From Generic Assistants

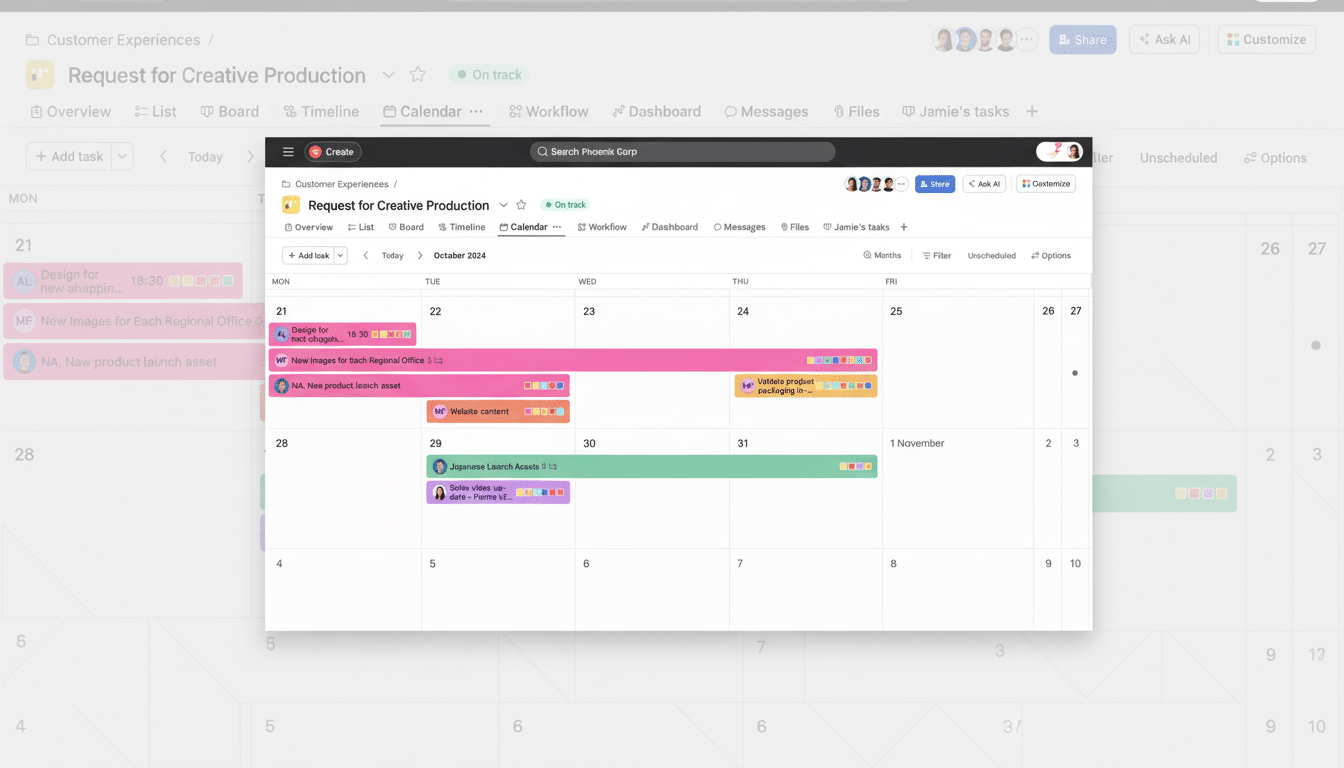

Chatbots answer prompts; agents pursue goals. Asana’s model provides role-based access control over resources, continuous visibility into ongoing progress, and the ability for agents to take multistep actions within constraints. Via the AI Studio framework, organizations can customize behaviors, integrate their own external tools, and script workflows in which agents work together.

The same trend can be seen across workplace apps at large. Zoom recently expanded its AI Companion, adding agentic behavior that pulls in data from third-party services like Salesforce and Google Drive, a sign that cross-system orchestration is becoming table stakes. Asana’s bet is that agents built on top of project metadata (owners, priorities, dependencies) will make smarter, more accountable decisions than free-floating assistants.

Availability and Early Access Details for Asana AI Teammates

AI Teammates are available in public beta through Asana’s AI Studio, where teams can get started with templates built for popular roles and iterate. The company says it plans to make this model more widely available in the first quarter of 2027, which should give businesses time to test out governance models, take stock of impact, and train the agents on their individual workflows.

The Bottom Line on Asana’s AI Teammates and Team Impact

AI Teammates are not just a new button in the toolbar; they are an effort to encode the way teams really work. If they live up to the promise—less “work about work,” faster decisions, more predictable outcomes—they could become as ubiquitous as task lists and dashboards. The real challenge will be reliability and trust: clear guardrails, measurable progress, and agents that understand not just the work, but how your team works.