A 30-person startup just lobbed a serious challenge into the frontier-model arena. Arcee AI announced Trinity, a 400B-parameter open-weight large language model released under the Apache 2.0 license—positioning it as a U.S.-made, permanently open alternative intended to compete with Meta’s latest Llama family at the very top of the open ecosystem.

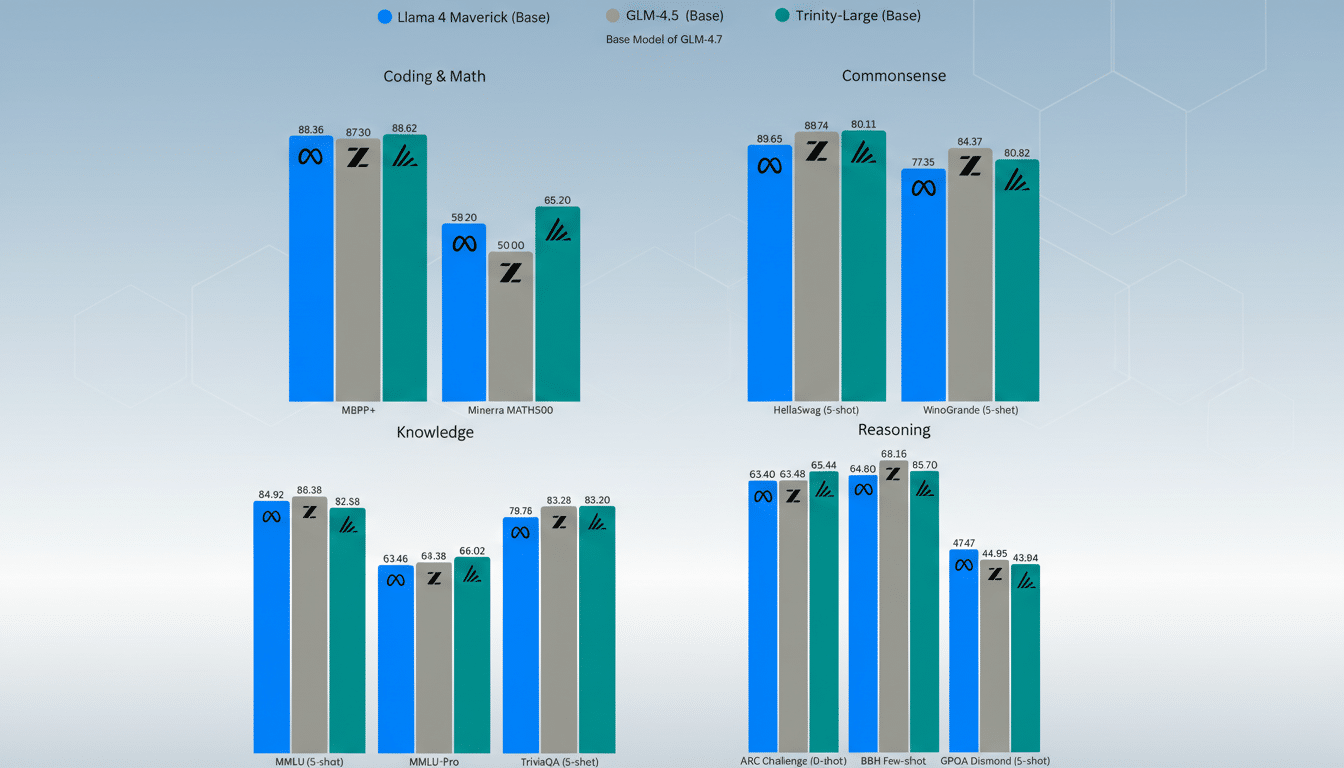

Arcee says Trinity was trained from scratch and already compares favorably with Meta’s Llama 4 Maverick 400B and China’s Z.ai GLM-4.5 in early benchmarks using base models with minimal post-training. While text-only for now, Trinity is pitched squarely at code generation, step-by-step reasoning, and agentic workflows—the sweet spot for developers building AI-first products.

What Arcee Built: Trinity Variants and Release Lineup

The flagship Trinity Large weighs in at 400B parameters, making it one of the largest openly released base models from a U.S. team. Arcee is shipping three flavors to cover different needs: a Base model for general research and fine-tuning, a lightly post-trained Large Preview tuned for instruction following, and a “TrueBase” variant that omits instruct data so enterprises can customize without disentangling prior assumptions.

Trinity follows two earlier releases: Trinity Mini (26B), a fully post-trained reasoning model for apps and agents, and Trinity Nano (6B), an experimental small model emphasizing responsiveness. All variants are available for download, with a hosted API planned once the large model’s post-training is complete.

Early Benchmarks and Performance Signals

According to Arcee’s internal testing, the Trinity base model is already holding its own—and in some cases edging Llama—in categories such as coding, math, common-sense reasoning, and knowledge. Because these results are from the base stage with limited post-training, the company expects further gains as reinforcement learning and safety tuning progress.

As with any large model, parameter count is not a proxy for real-world utility; instruction tuning, data quality, and inference optimizations matter. The early signal, however, is that Trinity’s pretraining is competitive enough to justify investment from developers who want a domestic, open-weight foundation with a clear path to continued improvements.

Why the License Choice Matters for Enterprise Adoption

Arcee’s insistence on Apache 2.0 isn’t window dressing. Apache provides broad rights for commercial use, modification, and redistribution without the bespoke caveats typical of many “open-weight” licenses. That clarity resonates with legal and procurement teams that have hesitated over usage restrictions tied to some leading models.

The company is also pushing a national-provenance angle: many of the strongest recent open models have come from China, which some U.S. enterprises avoid for compliance, regulatory, or risk reasons. Arcee argues that a domestically trained, Apache-licensed model at frontier scale can unlock adoption in conservative industries that need both technical performance and straightforward licensing.

Training a Frontier Model on a Startup Budget

Arcee says it trained Trinity in six months for roughly $20 million using 2,048 Nvidia Blackwell B300 GPUs. That is a short timeline and a modest budget compared with the resources of Big Tech labs. The company has raised about $50 million to date and employs around 30 people, with a compact research team led by CTO Lucas Atkins driving the training effort.
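To put the reported figures in perspective, the short Python sketch below spreads the $20 million evenly across the GPU-hours it could have bought. It is a back-of-envelope estimate only. It assumes that the entire budget went to GPU time, that all 2,048 GPUs ran for the full six months, and that six months is half of a 365.25-day year. None of these assumptions comes from Arcee, and the function name is hypothetical.

```python
# Back-of-envelope sketch; all assumptions are ours, not Arcee's.
HOURS_PER_YEAR = 365.25 * 24  # 8,766 hours in an average year


def implied_cost_per_gpu_hour(
    total_cost_usd: float,
    num_gpus: int,
    months: float,
    utilization: float = 1.0,
) -> float:
    """Spread a total budget evenly over the GPU-hours it bought.

    Assumes the whole budget was GPU time and that each GPU was busy for
    `utilization` (a fraction in (0, 1]) of the wall-clock training window.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    wall_clock_hours = months / 12 * HOURS_PER_YEAR
    gpu_hours = num_gpus * wall_clock_hours * utilization
    return total_cost_usd / gpu_hours


# Reported figures: about $20M, 2,048 B300 GPUs, six months.
rate = implied_cost_per_gpu_hour(20_000_000, 2048, 6)
print(f"~${rate:.2f} per GPU-hour")  # ~$2.23 per GPU-hour
```

Under these assumptions the run covers roughly 9 million GPU-hours, which works out to about $2.23 per GPU-hour. Setting `utilization` below 1 raises the effective rate for the hours actually used. If some of the budget went to non-compute costs, the compute-only rate would be lower.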

The team’s path started smaller: a 4.5B-parameter experiment in collaboration with DatologyAI helped validate its training stack, followed by December releases of the 26B and 6B models. CEO Mark McQuade, formerly an early employee at Hugging Face, said the startup initially focused on post-training and customization for large clients before deciding that owning the full pretraining pipeline was strategically necessary.

Who It’s For and How to Get Trinity and Its API

Trinity targets developers, researchers, and enterprises that want an open, U.S.-trained foundation with clean licensing. All models are free to download. A hosted service for the large model is slated to arrive after additional reasoning and safety training, with the company promising competitive API pricing.

For now, Trinity Mini is available via API at $0.045 per million input tokens and $0.15 per million output tokens, with a free rate-limited tier. Arcee continues to offer post-training and tailored deployments, reflecting its roots in enterprise customization for clients including SK Telecom.
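For budgeting, the quoted Trinity Mini rates translate into a per-request cost with simple arithmetic. The Python sketch below assumes that the prices are in USD per million tokens, which is the usual convention for LLM APIs, and that the free tier is not in use. The constant and function names are hypothetical, and the token counts in the example are illustrative, not Arcee data.

```python
# Assumed rates: USD per one million tokens (input = prompt, output = completion).
INPUT_USD_PER_MILLION = 0.045
OUTPUT_USD_PER_MILLION = 0.15


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the paid-tier cost of a given number of input and output tokens."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    ) / 1_000_000


# Hypothetical workload: 2M prompt tokens and 500k generated tokens.
cost = estimate_cost_usd(2_000_000, 500_000)
print(f"${cost:.3f}")  # $0.165
```

Output tokens cost about 3.3 times as much as input tokens at these rates. For that reason, generation-heavy agentic workloads would be dominated by the output term.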

The Stakes for Open Models in the United States

The AI model market appears consolidated around a few giants, yet open-weight systems still set the pace for grassroots innovation, academic reproducibility, and cost-efficient deployment. With Trinity, Arcee is betting that permanent openness at frontier scale—paired with credible performance—can win mindshare from developers who are wary of shifting license terms or geopolitics.

If post-training lifts Trinity to consistent wins against Llama-class peers, this tiny lab will have punched well above its weight. Even short of that, a 400B Apache-licensed model trained in six months is a clear signal: the frontier isn’t only for the incumbents.