Apple has quietly changed its App Store Review Guidelines to require apps to disclose, and get explicit permission for, any sharing of personal data with third‑party AI services. For developers, this isn’t just a small wording change: it places a strict consent gate between user data and the AI models hungry to consume it.

What’s new in Apple’s rules on third‑party AI data sharing

It’s the first time Apple has specifically called out third‑party AI by name. Apps that send personal data to outside AI services must now tell users in detail what will be shared, with whom, and for what purpose, and must secure an explicit opt‑in before any sharing can occur. The guidance falls under the same enforcement regime Apple applies to content moderation and privacy, and apps that fail to meet these standards risk being rejected from the App Store.

In practice, that means developers can no longer pipe user inputs, chat histories, images, or behavioral data to AI endpoints (whether from OpenAI, Google, Anthropic, or anyone else) without a purpose‑specific consent screen. Big, vague privacy policies and hidden disclosures won’t cut it. Apple’s position reflects longtime themes in the way it runs its platform: minimize data, be transparent, and give users control.
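One way to picture a purpose‑specific consent gate is the minimal Python sketch below. It is an illustration, not Apple’s API or any vendor’s SDK: `ConsentKey`, `ConsentLedger`, `send_to_ai`, and the `transport` callable are hypothetical names, and a real app would persist grants and drive them from an actual consent screen.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class ConsentRequired(Exception):
    """Raised when data would leave the app without a matching opt-in."""


@dataclass(frozen=True)
class ConsentKey:
    # Hypothetical consent scope: who gets the data, what it is, and why.
    provider: str   # e.g. "example-ai-vendor"
    data_type: str  # e.g. "chat_history"
    purpose: str    # e.g. "summarization"


@dataclass
class ConsentLedger:
    # Maps each granted ConsentKey to the time the user opted in.
    grants: dict = field(default_factory=dict)

    def grant(self, key: ConsentKey) -> None:
        self.grants[key] = datetime.now(timezone.utc)

    def revoke(self, key: ConsentKey) -> None:
        self.grants.pop(key, None)

    def allows(self, key: ConsentKey) -> bool:
        return key in self.grants


def send_to_ai(ledger: ConsentLedger, key: ConsentKey, payload, transport):
    """Call transport(payload) only if the user opted in to this exact key."""
    if not ledger.allows(key):
        raise ConsentRequired(f"No opt-in recorded for {key}")
    return transport(payload)
```

Because the key combines provider, data type, and purpose, an opt‑in for summarization does not cover, say, model training on the same chat history; that second use would need its own grant.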

Why this is important for AI training pipelines

iOS is one of the most lucrative data environments in the world. Apple has reported more than 2 billion active devices, and the App Store is home to more than 1 million apps. For foundation models, even a trickle of text, voice, and image data from iOS apps is a gold mine. By mandating per‑use consent, Apple sharply restricts the kind of frictionless data ingestion that supercharged early model training.

We’ve seen this movie before. With the debut of App Tracking Transparency in 2021, Apple made it harder for developers and social networks to access information that can identify users; Meta warned investors that it could lose roughly $10 billion in revenue in the first year as a result. Analytics firm Flurry reported opt‑in rates consistently below 25% at launch. If similar rates hold for AI prompts and uploads, third‑party model trainers should prepare for a steep drop in iOS‑sourced data.

What developers must do now to comply with Apple’s policy

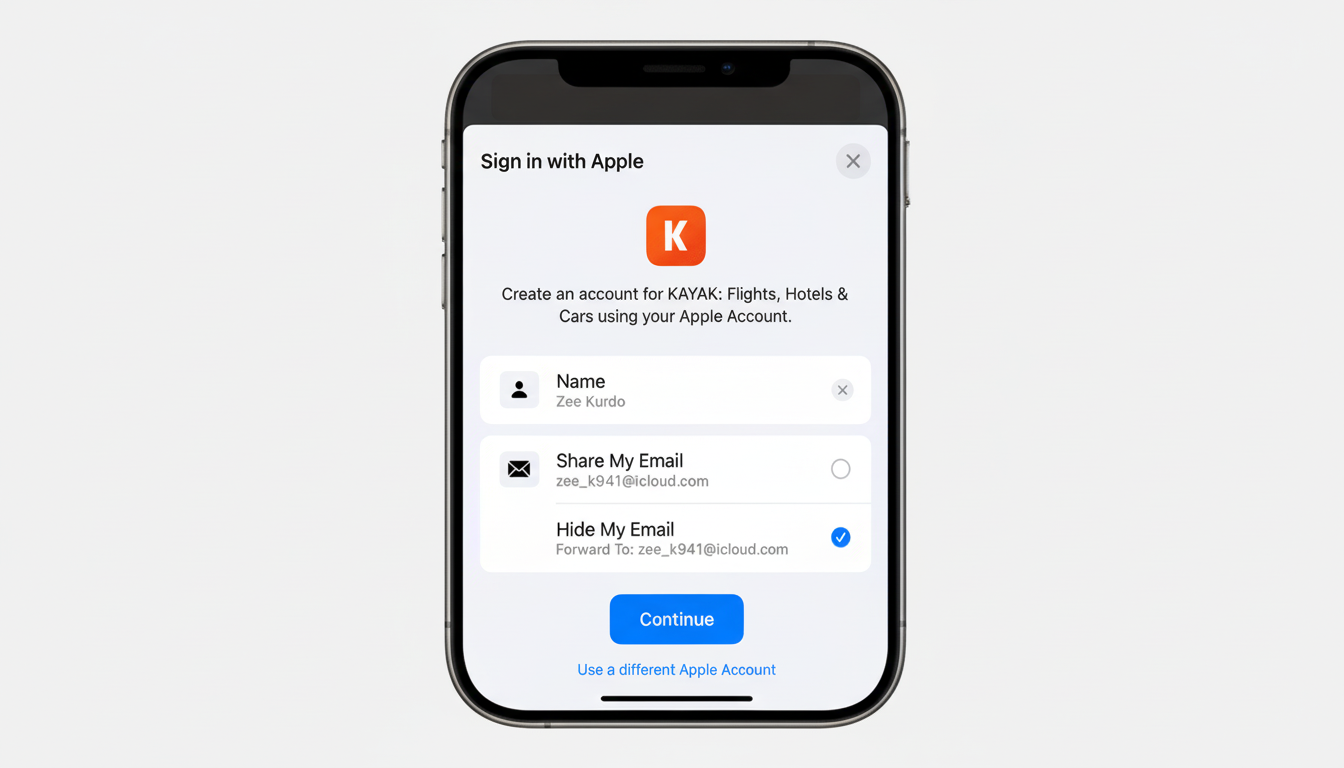

Expect new consent flows. An image editor with cloud‑powered background removal, a note‑taking app that offers AI summaries, or a customer support app that sends transcripts to an LLM will need to show clear one‑time prompts or action‑level confirmations. Developers will also have to document how they handle data and update their Privacy Nutrition Labels.

On the technical front, teams need to audit SDKs and network calls to make sure no personal data reaches AI endpoints without user consent. Going forward, on‑device inference, redaction, and prompt filtering will be table stakes. That naturally favors “hybrid” architectures that run high‑fidelity local models for intensive operations and pass only anonymized, consented snippets to the cloud.
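The redaction and hybrid routing ideas can be sketched roughly as follows. This is an illustration under stated assumptions: `redact`, `route`, `run_local`, and `run_cloud` are hypothetical names, and the two regular expressions cover only simple email and phone formats, far less than production‑grade PII detection would need.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def route(text: str, has_consent: bool, run_local, run_cloud):
    """Keep work on device unless the user opted in; redact before upload.

    run_local and run_cloud are hypothetical callables standing in for an
    on-device model and a third-party AI endpoint, respectively.
    """
    if not has_consent:
        return run_local(text)
    return run_cloud(redact(text))


if __name__ == "__main__":
    sample = "Mail jane@example.com or call +1 (555) 010-9999 today"
    print(redact(sample))  # Mail [EMAIL] or call [PHONE] today
```

The design choice worth noting is that the cloud path never sees the raw text: redaction sits inside `route`, so a caller cannot forget to apply it.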

A privacy play that fits Apple’s long‑standing platform narrative

Apple has spent years positioning itself as the privacy‑first platform steward, from Privacy Nutrition Labels to Mail Privacy Protection and App Tracking Transparency. Its own AI roadmap emphasizes on‑device processing and something it dubs Private Cloud Compute to keep personal data private. Even where Apple allows third‑party models, for example through the integration that exposes ChatGPT via Siri, each request is gated by clear user consent.

The timing also reflects growing regulatory momentum. With the EU’s GDPR and Digital Markets Act in force, and the U.S. Federal Trade Commission tightening enforcement against dark patterns and improper data sharing, unconsented transfers to AI vendors are increasingly risky. Lawsuits by news organizations against tech companies over training data, including a high‑profile complaint by The New York Times, have highlighted the legal risks of scraping and “shadow library” sourcing. Apple’s policy reduces that exposure for developers within its ecosystem.

Winners and losers in the AI world under Apple’s new rules

Third‑party AI companies lose a low‑friction pipeline to millions of iPhones and iPads. In return, we can expect more partnerships that provide on‑device or “privacy‑preserving” models and business terms that only reward developers for explicit user opt‑ins rather than background harvesting. On the flip side, products that are already performing inference locally — transcription, translation, visual effects — get a leg up on compliance and perform more quickly.

For Apple, it’s a familiar calculus: user trust is the lifeblood of its platform and its differentiation, even if that complicates certain growth tactics for developers. The way forward for its developer community is narrower but clear: design consent as a feature, build on least‑data practices, and choose AI architectures that stay behind the new line Apple has just drawn.