Anthropic chief executive Dario Amodei jolted the World Economic Forum with an unusually blunt rebuke of Nvidia and the U.S. decision to allow approved Chinese buyers access to high-performance AI chips, including Nvidia’s H200 line and comparable processors from AMD. In a conversation hosted by Bloomberg’s editor-in-chief, Amodei warned that loosening export controls risks accelerating strategic AI capabilities abroad, despite Nvidia’s status as a critical supplier and investor in Anthropic.

The bite of his critique came not just from the policy stance but from the target. Nvidia supplies the GPUs that train and run Anthropic’s models across hyperscale clouds, and it recently pledged to invest up to $10 billion in Anthropic. For a leading AI lab to publicly challenge the world’s dominant AI chipmaker underscores a growing rift between commercial momentum and national security caution.

A Rare Public Rift in AI’s Global Supply Chain

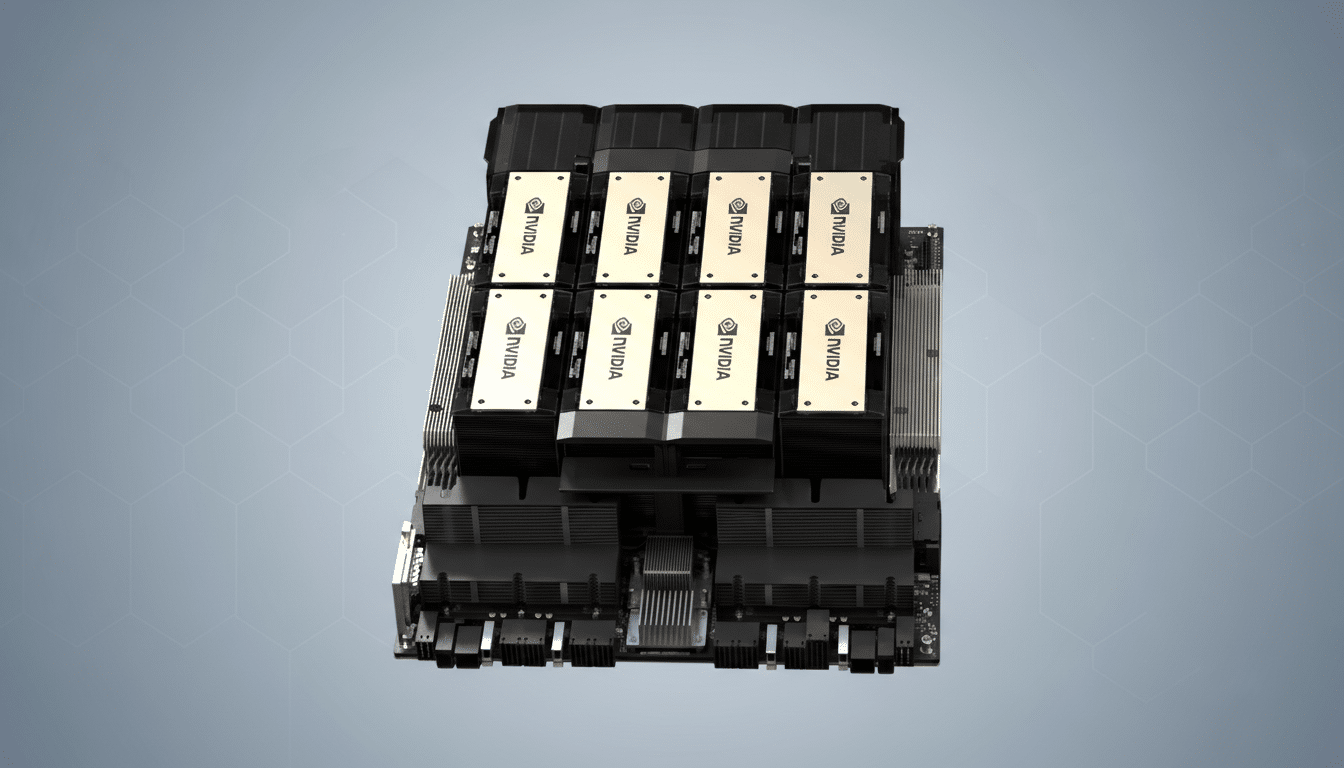

Nvidia sits at the heart of today’s AI economy. Industry trackers such as Omdia and TrendForce have estimated that Nvidia controls well over 80% of the market for accelerators used to train cutting-edge models. The company’s CUDA software ecosystem, networking hardware, and close integration with major clouds make it the default choice for labs trying to scale quickly.

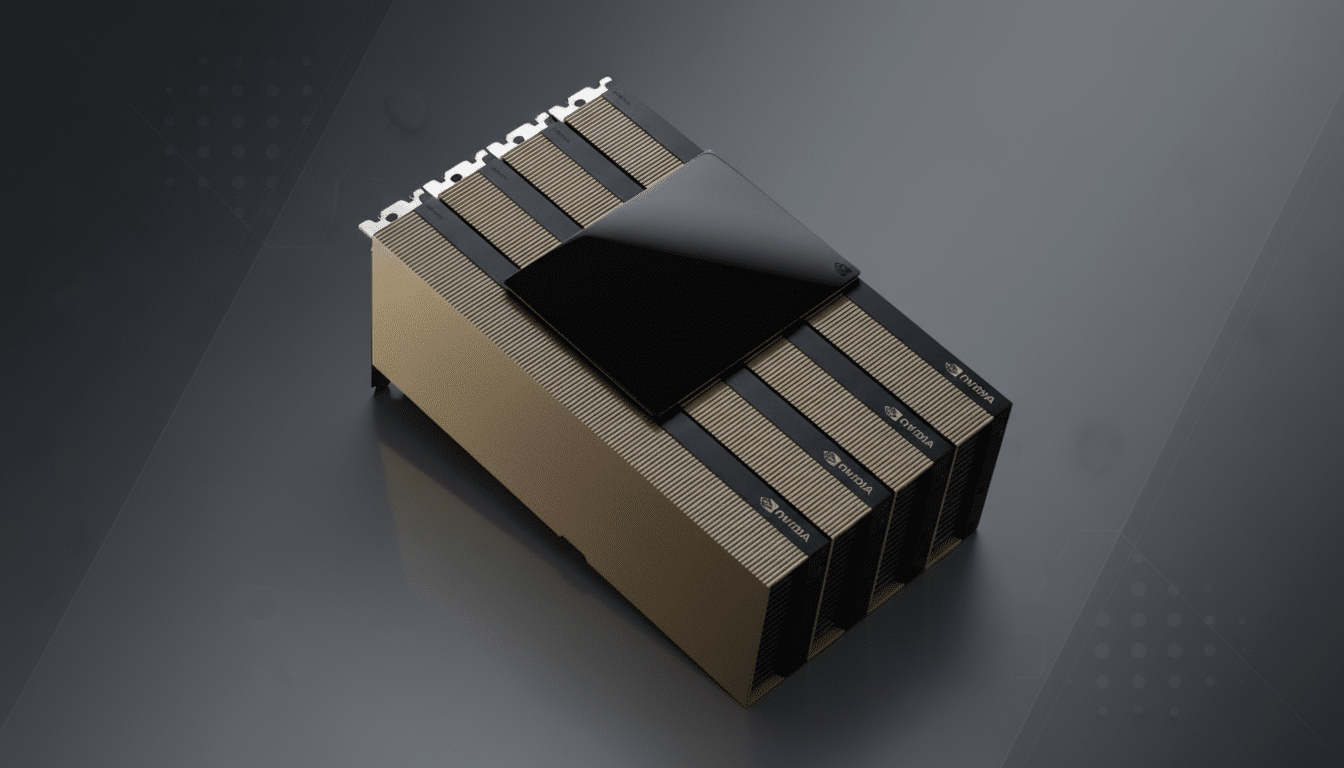

That dominance magnifies the stakes of export policy. The U.S. has repeatedly tightened and recalibrated chip rules for China, restricting Nvidia’s top-end A100 and H100 while prompting compliant variants like A800 and H800. The latest approval for H200 sales to vetted Chinese customers, alongside certain AMD alternatives, reopened a debate over whether compute access is the primary throttle on large-scale AI development, as many security analysts, including experts at CSET and CSIS, argue.

Amodei’s argument is straightforward: access to advanced accelerators is the clearest constraint on training frontier systems. Give an ecosystem more modern GPUs and high-bandwidth memory, and you shorten iteration cycles, expand model sizes, and reduce the cost of experimentation. Even with strict screening of end users, hardware inflows can compound quickly in industrial settings.

Security Stakes and the China Question in AI

Onstage, Amodei framed AI capability as a national security variable, not just a commercial edge. He cautioned that future systems may function like concentrated brainpower in a data center—an analogy meant to signal how compute translates directly to strategic capacity. That framing echoes findings from policy researchers who track how training compute, model size, and algorithmic efficiency jointly drive performance.

China’s leading AI labs, embedded in tech giants and state-linked research centers, have worked around earlier restrictions with domestic accelerators, model distillation, and efficient training techniques. But top-tier Nvidia parts still represent a step-function improvement in practical throughput. Analysts have estimated that China accounted for a meaningful double-digit share of Nvidia’s data center business before tighter rules. Reopening the spigot, even partially, could reset that trajectory.

Business Risk on Both Sides of the AI Divide

Publicly crossing Nvidia is unusual for any AI lab dependent on scarce GPUs. Yet the imbalance of supply and demand likely dampens immediate fallout. Every major cloud (AWS, Microsoft, and Google) relies heavily on Nvidia for training clusters, and the buildout of networking and specialized software further locks in the stack. In 2024, Nvidia’s data center revenue grew by a triple-digit percentage year over year, according to company filings, reflecting just how far demand outstrips capacity.

For Anthropic, the calculus is equally complex. The company has raised billions and is scaling its Claude family rapidly across enterprise workflows, from coding copilots to knowledge management. Securing compute remains existential; so does ensuring that rivals don’t close the gap via subsidized or state-aligned access to frontier chips. That tension between growth and guardrails explains why a CEO would risk a public scolding of a key partner.

What It Signals for AI Governance and Policy

Amodei’s comments reflect a broader shift: AI leaders are now lobbying in public over the pace and perimeter of compute flows. The hardware bottleneck has become the most consequential policy lever, arguably more immediate than model licensing or content standards, because it sets the ceiling for how fast next-generation systems arrive.

Meanwhile, the industry is hedging. AMD’s MI300 family continues to gain traction with hyperscalers; custom silicon efforts at cloud providers are expanding; and foundry concentration remains acute, with TSMC fabricating most leading-edge AI chips. Each of these dynamics complicates export enforcement and raises the cost of strategic missteps.

The takeaway from Davos is not just one fiery soundbite. It is a reminder that the balance between open markets and security priorities will be negotiated in real time by the actors with the most at stake. When a top AI lab accuses the world’s most valuable chip supplier of enabling the wrong race, it signals a new phase in the politics of compute—one where candor, however uncomfortable, may shape the rules as much as the regulators do.