Artificial intelligence is no longer a sidecar in software delivery. A new report from Google’s DevOps Research and Assessment (DORA) program finds that AI acts as an amplifier: it magnifies the strengths of mature engineering organizations and the friction inside makeshift ones, widening the gap between the two. The takeaway is simple: which AI tool you roll out matters far less for outcomes than where your delivery system sits on the maturity scale.

What the latest DORA study concluded on AI adoption

Google’s most recent DORA report draws on responses from close to 5,000 software professionals and more than 100 hours of interviews, laid out across 142 pages of analysis. Adoption is nearly universal: the report puts AI use for development work between 90% and 95%, a sharp increase from last year.

Developers now spend a median of two hours a day using AI tools. Usage is situational, not constant. Only a minority say they always turn to AI at the first sign of trouble; the largest group uses it some of the time, and a majority reach for it at least half the time as they work through a task.

Perceived gains are significant but uneven. Some 80% report productivity gains, but just 59% say their code quality improved. Trust is divided: some 70% trust AI-produced output, but a large minority does not. The takeaway is pragmatic: AI speeds work up, but it needs humans to check its output, with rigorous review and ample safeguards.

AI is an amplifier, not a shortcut, for delivery teams

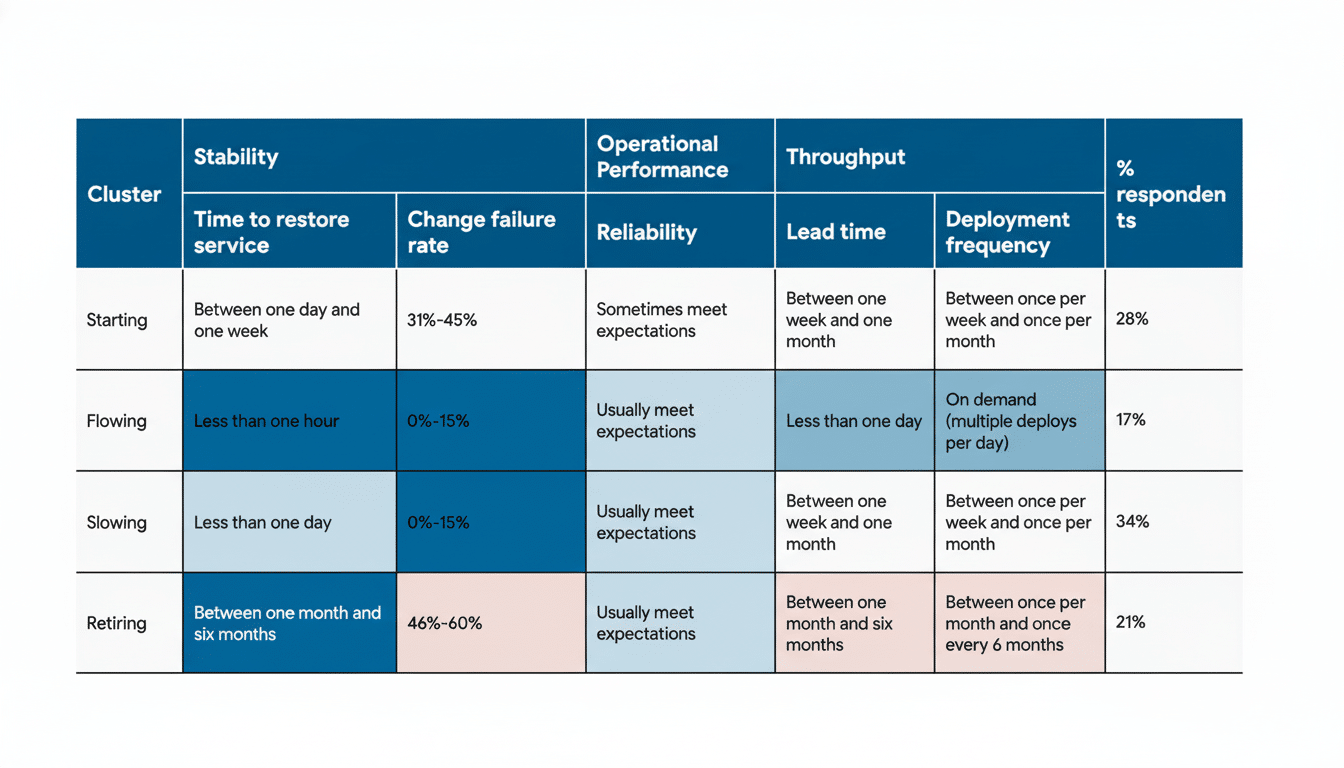

The report’s central insight is that AI reflects the health of your delivery system. High-performing teams, those with disciplined practices and clear feedback loops, gain throughput and speed while maintaining quality. Ragtag teams see more rework, erratic releases, and more defects as AI scales the existing chaos.

The report also challenges a common myth: that you have to give up speed for stability. The highest-performing archetypes identified by DORA deliver fast and with high quality at the same time, which suggests that the right foundational practices turn AI assistance into both faster iteration and fewer production incidents.

A simple example illustrates the point. In a shop with a strong CI/CD pipeline, excellent test coverage, and enforced code review, AI-generated changes pass through the same guardrails that catch errors early. Without these controls, an AI-suggested refactor can easily slip through, break downstream services, and increase incident load. The tool didn’t change; the system did.
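To make the guardrail idea concrete, here is a minimal sketch of a merge gate that applies the same checks to every change, whoever or whatever wrote it. The `Change` fields, the `gate_failures` helper, and the thresholds are all hypothetical illustrations, not anything the DORA report prescribes.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """A proposed change; all fields are hypothetical, for illustration only."""
    ci_passed: bool   # did the CI pipeline succeed?
    coverage: float   # test coverage of the touched code, 0.0 to 1.0
    approvals: int    # number of human review approvals


def gate_failures(change: Change, min_coverage: float = 0.8,
                  min_approvals: int = 1) -> list[str]:
    """Return the guardrails a change fails; an empty list means it may merge."""
    failures = []
    if not change.ci_passed:
        failures.append("ci")
    if change.coverage < min_coverage:
        failures.append("coverage")
    if change.approvals < min_approvals:
        failures.append("review")
    return failures


# An AI-suggested refactor with thin tests and no reviewer is stopped early.
refactor = Change(ci_passed=True, coverage=0.55, approvals=0)
print(gate_failures(refactor))  # ['coverage', 'review']
```

The gate does not ask where the change came from. That is the point of the example above: the system, not the tool, decides what reaches production.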

The habits that set the winners apart in AI adoption

DORA frames team performance in terms of core engineering disciplines and organizational capabilities. The report has multiple dimensions, but seven practices stand out as strong indicators of AI impact:

- Mature internal platform engineering

- End-to-end value stream visibility

- Automated testing and continuous integration

- Small-batch changes with trunk-based development

- Robust observability and incident response

- Built-in security and compliance in the pipeline

- High-quality documentation and a data governance approach to keep AI systems grounded

These are not shiny add-ons. They are force multipliers: they lower cognitive load, standardize delivery, and turn AI suggestions into trustworthy action. Teams that lack these basics can still experience local productivity bumps, but the benefits rarely spread across the organization.

Platforms and value streams unleash AI benefits

Two levers in particular transform outcomes. First, platform engineering. A strong majority of organizations now have internal developer platforms that consolidate tooling, automation, and paved paths for delivery. When those platforms are solid, engineers spend far less time fighting environment drift and more time shipping value, and AI has a stable surface to plug into.

Second, value stream management. Mapping how work travels from idea to production reveals bottlenecks such as slow reviews or release gates, the usual places where work stalls. With that visibility in place, leaders can apply AI to the right constraints (triaging pull requests, adding tests where coverage is thin, automating routine deployment checks) so that local wins become organizational uplift instead of downstream mayhem.
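As a rough illustration of that mapping step, the sketch below takes the hours each work item spent in each stage and reports the stage with the highest median wait. The stage names, the sample numbers, and the `bottleneck` helper are assumptions made up for this example; a real value stream map would pull these figures from your tracker and pipeline.

```python
from collections import defaultdict
from statistics import median


def bottleneck(items: list[dict[str, float]]) -> tuple[str, float]:
    """Return the stage with the highest median time (hours) across work items."""
    by_stage = defaultdict(list)
    for item in items:
        for stage, hours in item.items():
            by_stage[stage].append(hours)
    medians = {stage: median(hours) for stage, hours in by_stage.items()}
    worst = max(medians, key=medians.get)
    return worst, medians[worst]


# Hypothetical data: hours spent per stage for three work items.
items = [
    {"coding": 6, "review": 30, "release_gate": 12},
    {"coding": 4, "review": 20, "release_gate": 18},
    {"coding": 8, "review": 26, "release_gate": 10},
]
print(bottleneck(items))  # ('review', 26)
```

In this made-up data, review is the constraint, so pull request triage would be a better target for AI help than faster code generation.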

How to lead AI adoption the right way across teams

Treat the rollout of AI as a systems change, not a tools purchase. Invest in the foundations that magnify AI’s impact: a coherent internal platform, consistent test and release automation, observable services, and data quality practices that keep model drift and hallucinations from rotting code and docs.

Bake trust into the workflow. Mandate tests for AI-generated code, maintain human-in-the-loop review for high-risk changes, and measure success with the classic DORA metrics: lead time, deployment frequency, change failure rate, and mean time to restore. If those are trending the right way as AI usage scales, your system is absorbing the change. If not, fix the system before going after more tools.
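For teams that do not yet track these numbers, the four metrics can be approximated from a simple deployment log. The sketch below is one simplified way to do it; the `Deployment` record, its field names, and the choice of median for lead time are assumptions for illustration, not DORA’s official definitions or tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment; a hypothetical record shape."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def dora_metrics(deploys: list[Deployment], window_days: int) -> dict[str, float]:
    """Approximate the four DORA metrics over a window of `window_days` days."""
    if not deploys:
        raise ValueError("need at least one deployment")
    failures = [d for d in deploys if d.failed]
    restores = [hours(d.restored_at - d.deployed_at)
                for d in failures if d.restored_at is not None]
    return {
        "lead_time_hours": median(hours(d.deployed_at - d.committed_at) for d in deploys),
        "deploys_per_day": len(deploys) / window_days,
        "change_failure_rate": len(failures) / len(deploys),
        "mean_time_to_restore_hours": sum(restores) / len(restores) if restores else 0.0,
    }


# Hypothetical two-day window with four deployments, one of which failed.
t0 = datetime(2025, 1, 1)
log = [
    Deployment(t0, t0 + timedelta(hours=4)),
    Deployment(t0, t0 + timedelta(hours=8), failed=True,
               restored_at=t0 + timedelta(hours=10)),
    Deployment(t0, t0 + timedelta(hours=6)),
    Deployment(t0, t0 + timedelta(hours=2)),
]
print(dora_metrics(log, window_days=2))
```

Comparing these figures before and after an AI rollout, over equal windows, gives a first read on whether the system is keeping up.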

The headline isn’t that AI makes teams better or worse. It’s that AI makes teams more like themselves. The upside is real and compounding for organizations with strong engineering fundamentals. For everyone else, the way forward is just as clear: reinforce the fundamentals, then let AI compound the improvements you’ve already made.