The sensationalist narrative that artificial intelligence will take your tech job makes for good headlines, but it distracts from a more pressing issue. AI is not so much emptying seats as reordering the work performed in them. Analyses from the likes of Boston Consulting Group and Indeed’s Hiring Lab support this: few roles are exposed to full automation, but the task mix is shifting and team structures are evolving quickly.

Tech workers are often the first to feel the change. They work closest to the tools, so they experience both the productivity lift and the pressure to adapt. AI agents can already write code, generate tests, summarize logs and explore data, all under human supervision. The result is not wholesale turnover but a reshuffling of effort and a premium on hybrid skill sets.

- What the numbers really say about AI and tech work

- The changing shape of core tech jobs in the AI era

- Skills that now differentiate high-impact tech teams

- Productivity gains without pink slips or false alarms

- Future-proofing your role with practical AI fluency

- The bottom line: AI changes work, not human relevance

In practice, team layers are being simplified and “AI fluency” is emerging as a core competency. Teams that use AI effectively reprioritize around specification, review, architecture and guardrails as machines take over bigger chunks of the repeatable scaffolding.

What the numbers really say about AI and tech work

According to research by McKinsey, most jobs consist of many tasks, some of which are partially automatable. The unit of analysis is the task, not the job. Many of the activities people perform today are candidates for automation and, as that happens, human effort moves up the value chain to work such as system design, stakeholder engagement and risk management.

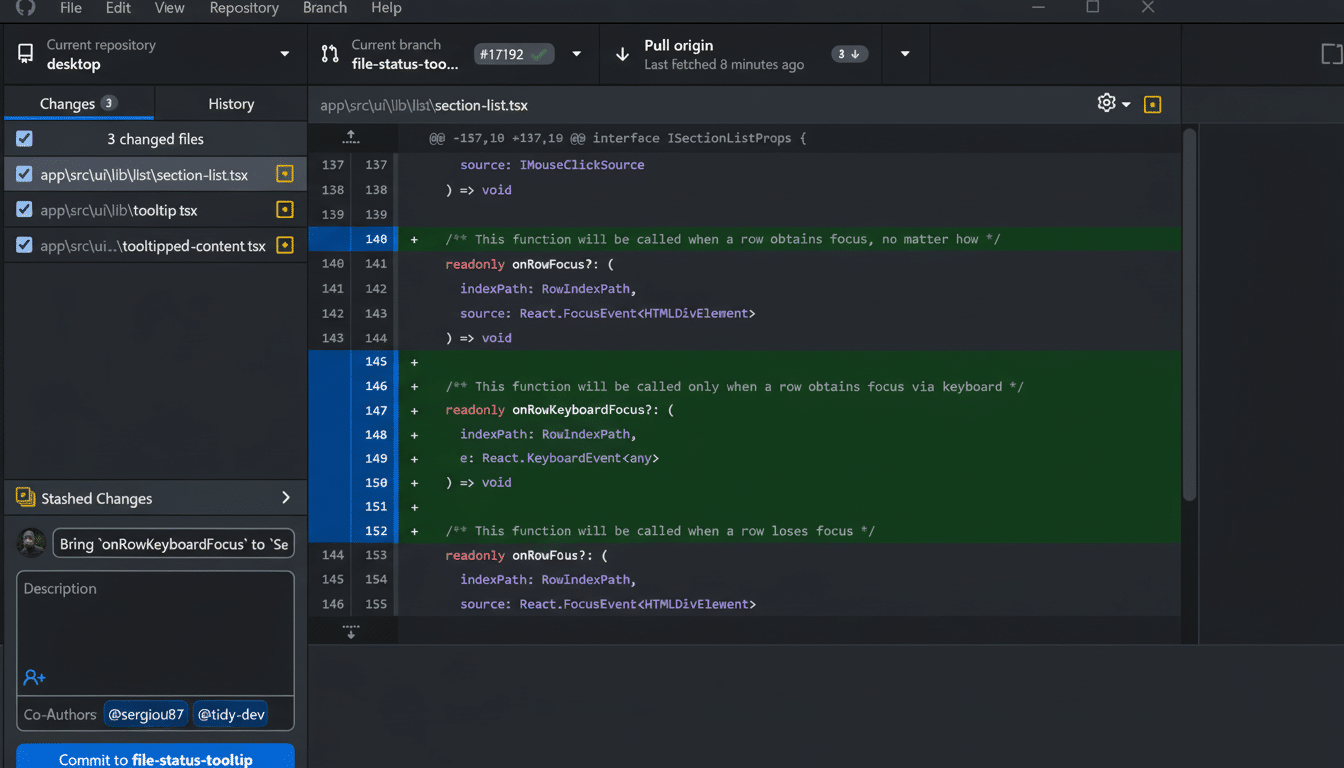

In experiments run by GitHub with Microsoft and academic partners, developers who used an AI coding assistant completed tasks markedly faster, in roughly half the time, and reportedly more accurately than they did when working without the assistant. The speed improvements were concentrated in boilerplate routines and patterned work, freeing humans to concentrate on architecture, security and clarity.

Beneath the grim headlines, a very different peril is emerging: a skills gap. With almost half of core skills evolving, the danger isn’t joblessness but irrelevance for those who don’t skill up.

The changing shape of core tech jobs in the AI era

Software engineers are shifting from keystrokes to intent. Rather than hand-coding each unit test or data access layer, they specify the behavior they want, review the AI-generated diffs and spend more time on performance, threat modeling and interoperability. AI agents create scaffolding; humans harden it.

DevOps and SRE teams use AI to summarize incidents, propose runbook steps and surface likely root causes from noisy telemetry. People still hold the responsibility: capacity planning, change control and postmortems depend on judgment that tools cannot encode.

Product managers are expanding their surface area by combining prompt design, data hygiene and lightweight QA with rapid prototyping. AI already helps them iterate on user stories, mock flows and acceptance criteria before full teams are engaged, so discovery speeds up without coming at the expense of governance.

Designers are emerging as architects of AI-first experiences. They shape systems that ask for clarification, generate safe defaults and explain their reasoning. As interaction shifts from clicks to conversations, this discipline is gaining strategic weight.

Quality engineers and analysts focus less on manual test execution and more on strategy: adversarial tests, red-teaming and model evaluation. The aim is reliable, fault-tolerant behavior across edge cases, not just pass/fail on happy paths.

Skills that now differentiate high-impact tech teams

AI fluency matters. It means constructing prompts, chaining tools, setting evaluation criteria and understanding when to trust or override outputs. Think of AI as a junior teammate: give it very explicit directions, check its work and measure its effects.

Data literacy is foundational. Understanding provenance, privacy constraints, bias and data contracts is what separates good practitioners from those who ship brittle systems. Ungoverned, shoestring AI has the same problem: imagine a brilliant but error-prone intern running your business.

Most of all, governance moves from afterthought to design principle. Companies are aligning with the NIST AI Risk Management Framework and ISO guidance, which offer guidelines for defining guardrails, human oversight and audit trails. Engineers who can translate these requirements into sturdy systems are in short supply.

Productivity gains without pink slips or false alarms

Stack Overflow developer surveys show that a clear majority of developers either already use AI tools or plan to. Early adopters report quicker onboarding, less time spent on rote tasks and more bandwidth for complex problems: signs of a shift in the quality of work, not just a reduction in headcount.

Google research shows AI doesn’t really compensate for weak engineering practices; it augments strong ones. Teams with disciplined testing, observability and automation reap outsized rewards — but teams that aren’t built on those foundations find it difficult to translate tools into outcomes.

The recent waves of tech restructuring are mostly about capital cycles and portfolio resets. AI programs often coincide with new hiring in security, data engineering and product, so that companies can scale responsibly and meet demand.

Future-proofing your role with practical AI fluency

Audit your workflow. Rank tasks by frequency and risk, automate the repetitive, low-risk ones, and reinvest the time saved in design, stakeholder work and experimentation.
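One way to make that audit concrete is a small triage script. The sketch below is only an illustration: the `Task` fields, the 1-to-5 risk scale, the thresholds and the sample tasks are all assumptions made up for the example, not an established method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    runs_per_week: int  # how often the task recurs (assumed unit)
    risk: int           # assumed scale: 1 (cheap mistake) to 5 (costly mistake)


def automation_candidates(tasks, min_runs=3, max_risk=2):
    """Return frequent, low-risk tasks, most frequent first.

    The default thresholds are arbitrary starting points; tune them to your team.
    """
    picked = [t for t in tasks if t.runs_per_week >= min_runs and t.risk <= max_risk]
    return sorted(picked, key=lambda t: t.runs_per_week, reverse=True)


# Hypothetical task inventory, for illustration only.
tasks = [
    Task("write unit-test boilerplate", runs_per_week=10, risk=1),
    Task("summarize build logs", runs_per_week=5, risk=2),
    Task("approve production schema change", runs_per_week=4, risk=5),
    Task("draft quarterly capacity plan", runs_per_week=0, risk=4),
]

for task in automation_candidates(tasks):
    print(task.name)
```

Frequent but high-risk work, such as the schema change here, stays with a human reviewer even though it recurs often; that is the point of scoring risk separately from frequency.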

Build your own AI toolbox: a pair programmer, a retrieval-augmented doc search, test and data generators, and evaluation harnesses. Track baseline measurements, because without them you cannot prove progress.
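Baseline tracking does not need heavy tooling. As a minimal sketch, assuming you log how many minutes a recurring task takes before and after adopting a tool, you can compare medians. The function name and the numbers below are placeholders invented for the example, not measured results.

```python
from statistics import median


def relative_change(baseline, current):
    """Fractional change in the median versus the baseline (negative means faster)."""
    base = median(baseline)
    if base == 0:
        raise ValueError("baseline median must be non-zero")
    return (median(current) - base) / base


# Placeholder timings in minutes; replace with your own logged measurements.
baseline_minutes = [42, 38, 51, 45, 40]  # before adopting the tool
assisted_minutes = [30, 28, 36, 33, 29]  # after adopting the tool

change = relative_change(baseline_minutes, assisted_minutes)
print(f"median task time changed by {change:+.0%}")
```

The median is used rather than the mean so that one unusually slow day does not dominate the comparison. A handful of samples like this is only a rough signal, so keep logging over several weeks before drawing conclusions.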

Lean into hybrid work. Get good enough for prototyping in design, judging quality in data, and spotting risk in governance. Cross-functional literacy is the most durable career insurance.

The bottom line: AI changes work, not human relevance

AI isn’t stealing your job; it’s changing the nature of your work. The winners will be those who pair the tools with sound engineering discipline and who cultivate AI fluency, data savvy and systems thinking. Tend to the machines, and you will find yourself more relevant, not more dispensable.