If your organization now runs a dozen chatbots, a few copilots, and plenty of model-powered add‑ins, congratulations. You’re living the new enterprise reality: AI sprawl.

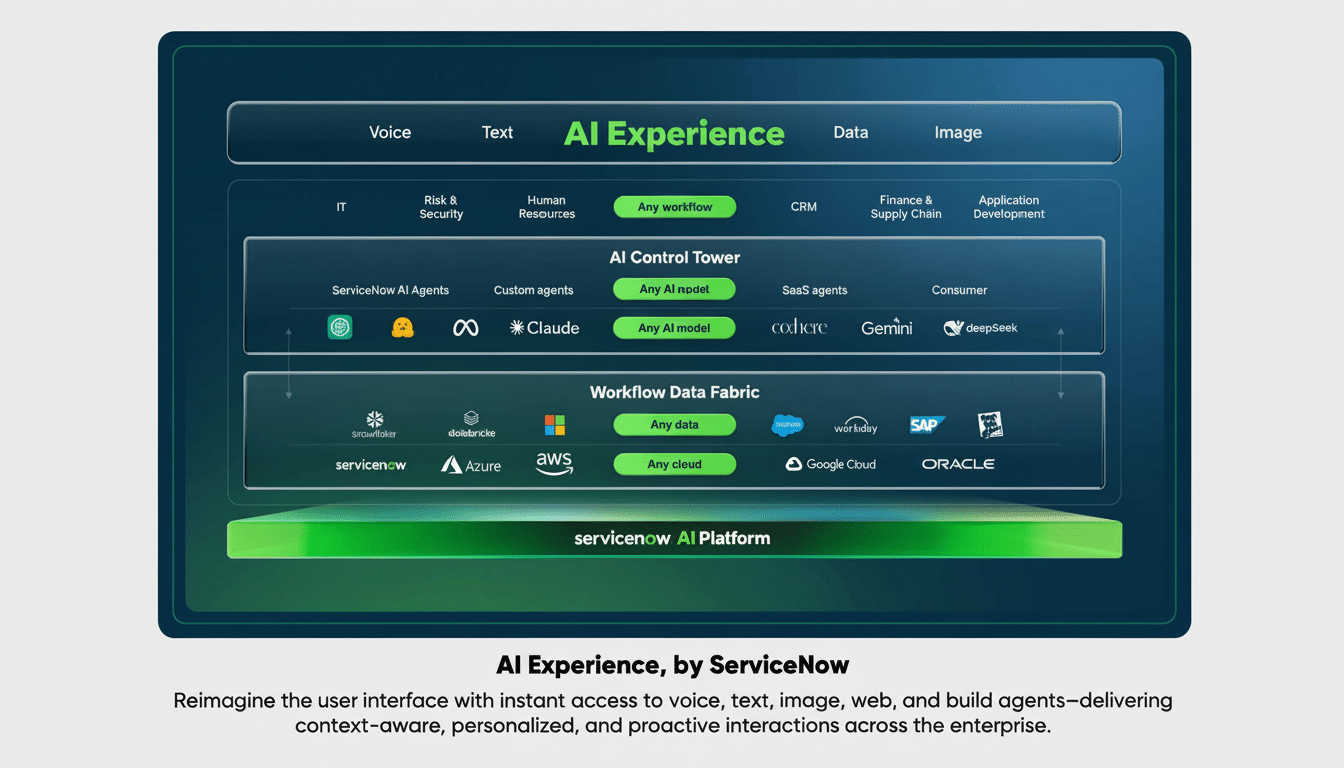

That complexity, however, could be tamed by a new type of platform that puts all those AI tools behind one intuitive interface. The newest entrant is service management specialist ServiceNow, which this week debuted an “AI Experience” that seeks to standardize access, orchestration, and oversight for the burgeoning horde of enterprise AI agents.

- Why AI Sprawl Requires One Platform for Enterprise Control

- How a Unified AI Layer Works Across Enterprise Tools

- Inside ServiceNow AI Experience for Unified Enterprise Agents

- Governance, security, and cost control in unified AI

- Market context and alternatives among major AI platforms

- What leaders need to measure next for AI performance

Why AI Sprawl Requires One Platform for Enterprise Control

Enterprises embraced AI quickly, if not always cohesively. Teams try different vendors and models, pilots spread, and point solutions multiply. The result is fragmented workflows, redundant effort, and a governance headache. Analysts at Gartner have anticipated that the majority of businesses will consume generative models via APIs and tools, which points to widespread adoption but also to an increasingly heterogeneous mix of tools. McKinsey projects that generative AI can unlock trillions of dollars in annual value, but realizing that potential depends on consistent integration, security, and measurement at scale.

The business impact is tangible. Workers toggle between interfaces to locate answers or invoke actions. Data is siloed, so AI responses may be based on outdated or partial information. Compliance teams have a hard time enforcing policy across multiple vendors. Finance has no single place to see usage or ROI. A common layer can address all four pain points: user experience, data fidelity, governance, and cost management.

How a Unified AI Layer Works Across Enterprise Tools

Think of it as an AI control plane. Users engage through a single conversational surface while the platform routes each request to the right agent or model, grounded in the right data and executed with the right permissions. Under the hood, connectors pull in context from systems such as CRM, IT service management, HR, and knowledge bases; retrieval‑augmented generation helps keep answers fact‑based; and policy guardrails govern who may ask what and what may be shared back.
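The routing step described above can be sketched in a few lines. This is a minimal illustration only, not ServiceNow's implementation: the agent names, keyword sets, and role names are hypothetical, and a real platform would use intent classification rather than keyword overlap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    name: str
    keywords: frozenset[str]   # words that suggest this agent should handle the request
    required_role: str         # role a user must hold to invoke the agent


# Hypothetical agent registry, for illustration only.
AGENTS = [
    Agent("it_service_agent", frozenset({"incident", "laptop", "vpn"}), "employee"),
    Agent("hr_agent", frozenset({"payroll", "leave", "benefits"}), "employee"),
    Agent("crm_agent", frozenset({"customer", "warranty", "account"}), "support"),
]


def route(request: str, user_roles: set[str]) -> str:
    """Pick the agent with the most keyword overlap, then enforce permissions."""
    words = set(request.lower().split())
    best = max(AGENTS, key=lambda agent: len(agent.keywords & words))
    if not (best.keywords & words):
        return "fallback_agent"   # nothing matched; hand off to a general assistant
    if best.required_role not in user_roles:
        return "denied"           # the guardrail blocks the request
    return best.name
```

Calling `route("reset my vpn access", {"employee"})` selects the IT agent, while the same employee asking to "file a warranty claim" is denied because the hypothetical CRM agent requires the `support` role.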

This is also the layer where identity, logging, and analytics come together. That includes audit trails for every AI action, redaction for sensitive fields, and dashboards that show what employees are asking, where answers fall short, and which agents provide measurable value. Over time, organizations can direct tasks to the most cost‑effective model for the job, rather than using one large model for everything.
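To make the audit-and-redaction idea concrete, here is a small sketch that masks sensitive fields before an AI action is written to an audit trail. The patterns, field names, and in-memory log are assumptions chosen for illustration; a production system would rely on a vetted data-loss-prevention service and durable storage.

```python
import re
from datetime import datetime, timezone

# Simplified patterns for illustration; real detectors are far more thorough.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

AUDIT_LOG: list[dict] = []   # stand-in for a durable audit store


def redact(text: str) -> str:
    """Replace email addresses and US-style SSNs with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return SSN.sub("[SSN]", text)


def record_action(user: str, agent: str, prompt: str) -> dict:
    """Append a redacted, timestamped entry for one AI action."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "agent": agent,
        "prompt": redact(prompt),
    }
    AUDIT_LOG.append(entry)
    return entry
```

Because redaction happens before the entry is stored, the dashboards built on the log never see the raw values.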

Inside ServiceNow AI Experience for Unified Enterprise Agents

ServiceNow’s model combines two types of agents. Voice Agents process and act on plain‑language prompts for things like fetching information, recording data, or walking users through routine business requests, from entitlement checks to case summaries to incident workflow initiation. Web Agents go beyond internal systems to work in third‑party apps, filling forms, fetching data, and performing multi‑step tasks that previously required people to click through manually.

Two analytics functions are placed beside agents. AI Lens reveals visual insights — ticket volume trends, common failure modes, and opportunities to automate. AI Data Explorer delves deeper into patterns across teams and processes, enabling leaders to see where there are bottlenecks and thus prioritize where inserting new agents might have the most impact. Together they make the platform more than just a command center — it becomes an optimization loop.

Consider a customer service example. A Voice Agent takes an incoming case, summarizes the customer history, and recommends a policy‑based response. A Web Agent then goes to a partner portal, files a warranty claim, updates the CRM, and schedules a follow‑up — no swivel‑chairing at all. The human remains the decider, but those repetitive steps are offloaded, reducing handle time and error rates.

Governance, security, and cost control in unified AI

Centralization is not just a convenience; it is also a matter of risk and spend. A unified platform allows consistent alignment with the NIST AI Risk Management Framework, role‑based access enforcement, and model‑level auditability. Buyers may want to look for data residency controls, input and output redaction, closed‑loop human review, and evaluation reports detailing model behavior on representative workloads.

On costs, the platform can meter usage and route requests with spend in mind. You don’t need a big, expensive model for every task. The ability to dynamically choose cost‑effective, specialized models for classification, extraction, or summarization can help cut bills without compromising on accuracy. Finance leaders can rely on a single source of truth for license utilization and agent performance, and can rationalize the portfolio rather than letting AI sprawl go unchecked.
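A cost-aware router of the kind described above might look like the sketch below. The model names, supported task lists, and per-token prices are invented for illustration and do not reflect any vendor's catalog or pricing.

```python
# Hypothetical model catalog; names and prices are made up for this example.
MODELS = [
    {"name": "small-classifier", "cost_per_1k_tokens": 0.0002,
     "tasks": {"classification"}},
    {"name": "mid-extractor", "cost_per_1k_tokens": 0.002,
     "tasks": {"classification", "extraction", "summarization"}},
    {"name": "large-generalist", "cost_per_1k_tokens": 0.03,
     "tasks": {"classification", "extraction", "summarization", "reasoning"}},
]


def pick_model(task: str) -> dict:
    """Return the cheapest model that supports the requested task."""
    candidates = [m for m in MODELS if task in m["tasks"]]
    if not candidates:
        raise ValueError(f"no model supports task: {task}")
    return min(candidates, key=lambda m: m["cost_per_1k_tokens"])


def estimate_cost(task: str, tokens: int) -> float:
    """Estimate spend for one request, which a metering layer could aggregate."""
    model = pick_model(task)
    return model["cost_per_1k_tokens"] * tokens / 1000
```

With this catalog, classification requests go to the smallest model and only reasoning tasks reach the large one. A real router would also weigh accuracy on representative workloads, not price alone.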

Market context and alternatives among major AI platforms

Big platforms are converging on similar goals from different directions. Microsoft orchestrates Copilot and Copilot Studio across its productivity suite. Amazon delivers agents via Bedrock and enterprise assistants through the new Amazon Q. Google counters with agent‑building and grounding tools in Vertex AI. What sets ServiceNow apart is its workflow and operations lineage: the backbone for many enterprise processes already lives on it, making end‑to‑end automation a logical next step.

The industrywide bet here is obvious: as companies stack up dozens of AI tools at the cost of millions of dollars each, they will pay for a governing layer that makes implementation simpler, raises trust, and demonstrates return on investment. Success will depend on the depth of connectors, the quality of grounding data, guardrails that meet risk teams’ requirements, and measured lift in job‑level outcomes.

What leaders need to measure next for AI performance

Begin with metrics that are tied to the work itself: average resolution time, first‑contact deflection, case backlog, time to create knowledge, and employee satisfaction. Track AI answer precision and hallucination rates, and add human‑in‑the‑loop safeguards for high‑risk tasks. Pilot with one or two mission‑critical journeys, then scale agents only where there is evidence of a specific, sustained benefit.
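As a rough sketch of how such tracking could start, the snippet below computes answer precision, hallucination rate, and average resolution time from a sample of human-reviewed AI answers. The record fields are assumptions, and "precision" here simply means the share of reviewed answers judged correct.

```python
from dataclasses import dataclass


@dataclass
class ReviewedAnswer:
    correct: bool               # reviewer judged the answer accurate
    hallucinated: bool          # answer contained unsupported claims
    resolution_minutes: float   # time from request to resolution


def summarize(reviews: list[ReviewedAnswer]) -> dict[str, float]:
    """Aggregate reviewer judgments into three headline metrics."""
    if not reviews:
        raise ValueError("no reviewed answers to summarize")
    total = len(reviews)
    return {
        "answer_precision": sum(r.correct for r in reviews) / total,
        "hallucination_rate": sum(r.hallucinated for r in reviews) / total,
        "avg_resolution_minutes": sum(r.resolution_minutes for r in reviews) / total,
    }
```

Running this on the same journey before and after an agent is introduced gives a simple baseline for deciding whether to scale it.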

The takeaway is straightforward. AI sprawl is not going to slow down any time soon, but it does not have to overwhelm teams. A single umbrella platform, such as the one now available from ServiceNow, can turn a collection of isolated AI tools into one governable system that speeds up work instead of slowing it down.