I’ve tried on Meta’s new Ray-Ban Display glasses, and for the first time, smart eyewear felt like a believable leap past the smartphone. Two breakthroughs are key: a bright, private color display that sits in the lens, and a neural wristband that turns subtle finger movements into precise input that rivals a mouse.

Together, they distill everyday interactions (checking a message, framing a photo, picking up directions, jotting notes) into glances and gestures that take less time than pulling a slab of glass out of your pocket.

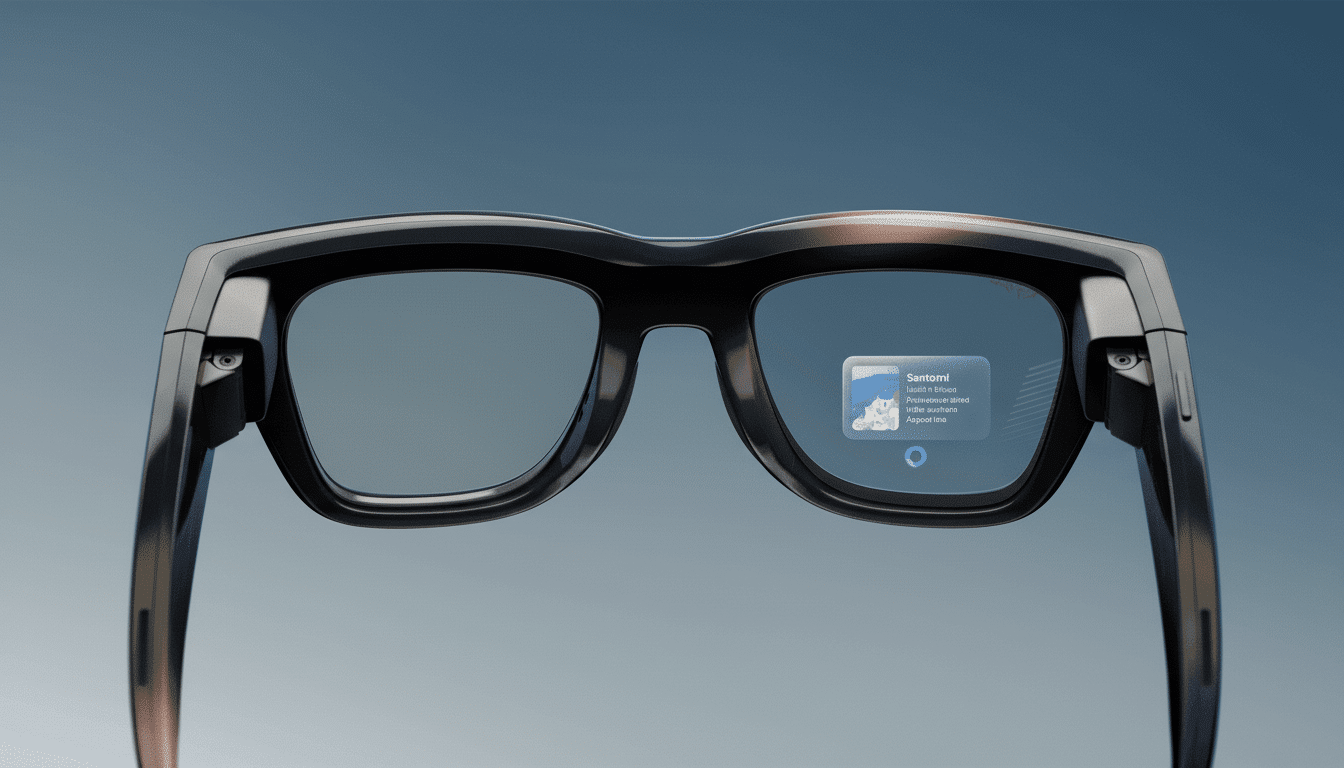

Breakthrough 1: A bright, inconspicuous microdisplay

The right lens holds a liquid crystal on silicon (LCoS) microdisplay rated up to 5,000 nits, which kept text and UI cards readable in full sun and under indoor glare. The image is visible only to you; bystanders effectively can’t see it, which makes interactions feel private rather than performative.

The image sits slightly off-center, so you can stay heads-up instead of staring straight through a HUD. As a viewfinder, it’s a revelation: I could frame and refocus shots before hitting record, then pinch and twist to zoom up to 3x. Finished clips and photos previewed in the lens, ready to share via messaging without my having to check my phone.

Live captions were the eye-opener. I focused on the person I was speaking with, and the glasses transcribed that person’s voice while filtering out surrounding conversation. Paired with Conversation Focus, which boosts the sound you’re facing, it amounted to selective hearing and selective reading for loud places.

AI answers arrive as swipeable cards. Ask for a banana bread recipe, and you get the method as a set of flashcards to flip through while your hands are busy. Turn-by-turn walking directions and, most impressive of all, hands-free video calling round out a display built for glanceable utility rather than immersion.

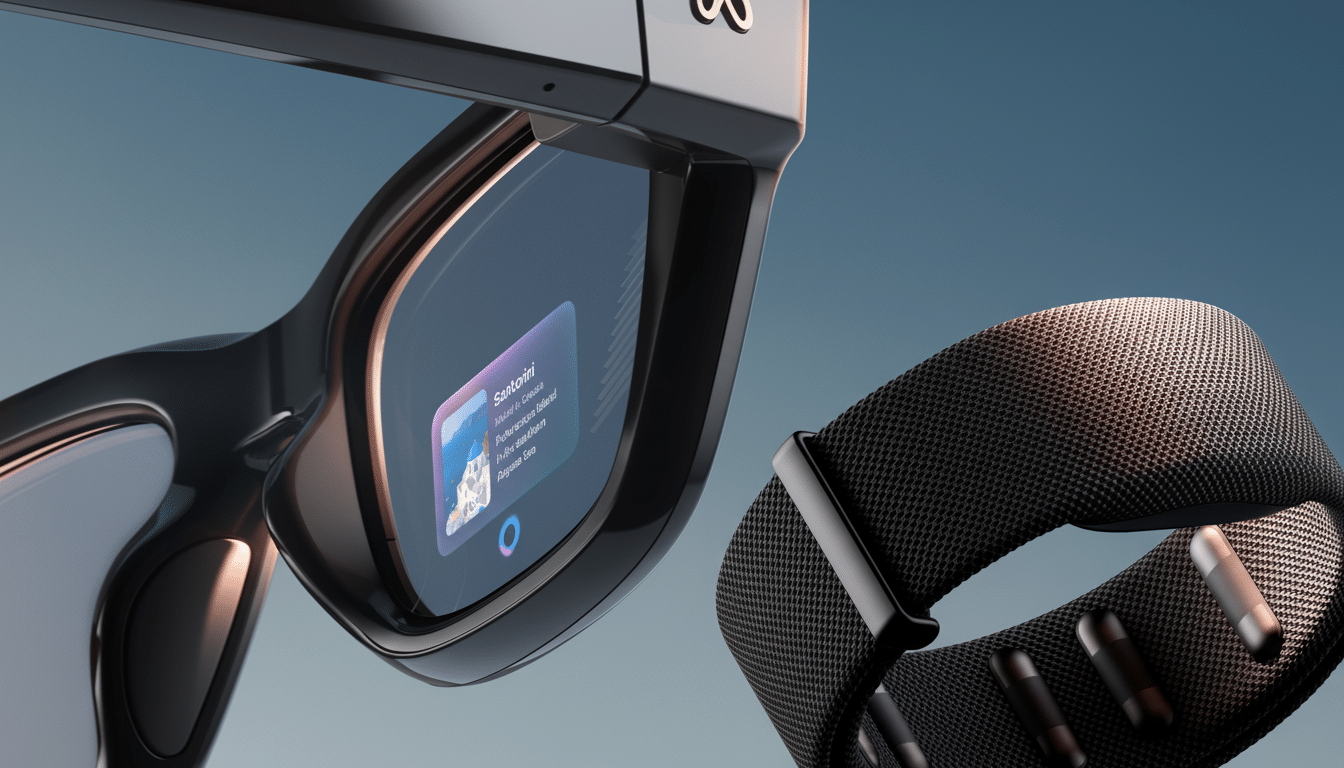

Breakthrough 2: EMG wristband input that feels natural

Meta’s EMG wristband detects the tiny electrical signals produced by your wrist muscles, a technique that grew out of CTRL-Labs, the neural-interface startup Meta acquired in 2019. Calibration took less than 10 minutes, and pinches and thumb slides soon felt second nature.

I used thumb–middle finger pinches to go back, index–thumb pinches to select, and a pinch combined with a wrist twist to adjust volume or zoom. It’s silent and local, so it works in noisy environments where a voice command would fail. I also tried tracing letters in the air to write short replies to messages, and the system recognized characters far better than I’d anticipated for one-off words and names.

These aren’t clinical-grade results, but academic labs at Carnegie Mellon and ETH Zurich have shown that surface EMG can come within a few points of touch input on simple UI tasks, and this is the first mainstream product to package that promise in an always-on band you’d be happy to wear all day. It’s the other half of what makes smart glasses make sense: accurate, private input to complement a personal display.

Smart glasses specs that matter day to day

The glasses weigh about 69 grams, heavier than audio-only models but still comfortable over a full day. Meta’s battery claims top out at 18 hours total; in my mixed use, I got about 6 hours. The glasses are also water-resistant for real-world wear.

Each pair comes with transition lenses and supports prescriptions from −4.00 to +4.00. Color options are shiny black and clear light brown. The $799 bundle includes both the glasses and the wristband, and distribution through Ray-Ban’s retail network (LensCrafters, Sunglass Hut and Ray-Ban stores, plus select carrier shops) promises easy try-ons and fittings.

What still holds them back from replacing your phone

The display is monocular. For dense text, I found myself closing my left eye to focus, a reminder that it’s built for quick glances and prompts rather than long reads. The field of view is deliberately small to stay discreet: excellent for steps, not spreadsheets.

Navigation is rolling out city by city as Meta builds its own mapping layer. And as with any camera-equipped gadget, discretion matters: features like Live AI can see and hear what you’re doing to take notes and generate summaries, so visible cues and user restraint are important while social norms catch up.

Why these moves matter beyond the smartphone

Analysts at Data.ai track rising hours spent on mobile, and much of that time is lost to app switching and taps that don’t move anything forward. A glanceable display plus silent EMG input removes that overhead: I caught a moment, glanced at a step, replied and stayed present.

They look like eyewear for the simple reason that they are eyewear, thanks to EssilorLuxottica’s Ray-Ban design and retail reach. IDC characterizes smart glasses as a young but rapidly accelerating category, and this recipe (real world first, display when useful, voice optional) feels like the most compelling bridge to ambient computing we’ve seen yet.

They won’t retire your phone, but the color display and neural wristband transform smart glasses from novelty into necessity. After a few hours, I stopped thinking about the tech and just used it — and that’s the milestone that counts.