If you’ve recently updated to iOS 26, there’s one AI setting that you might want to use sparingly: Apple’s AI-generated notification summaries for news and entertainment. The feature offers faster, cleaner alerts, but Apple has now slapped a beta label on it with a warning that summaries can be misleading compared to the original headline. That’s no small caveat — push alerts decide not just what we read, but how quickly we react and what we believe.

The warning isn’t window dressing. Summarizing journalism as it unfolds is hard for machines. News copy relies on hedges, quotes and careful attribution; AI models tend to compress, smooth and occasionally overstate. The result can be notifications that sound more certain than the facts allow, and sometimes ones that are simply wrong.

What’s new in iOS 26: AI notification summaries return

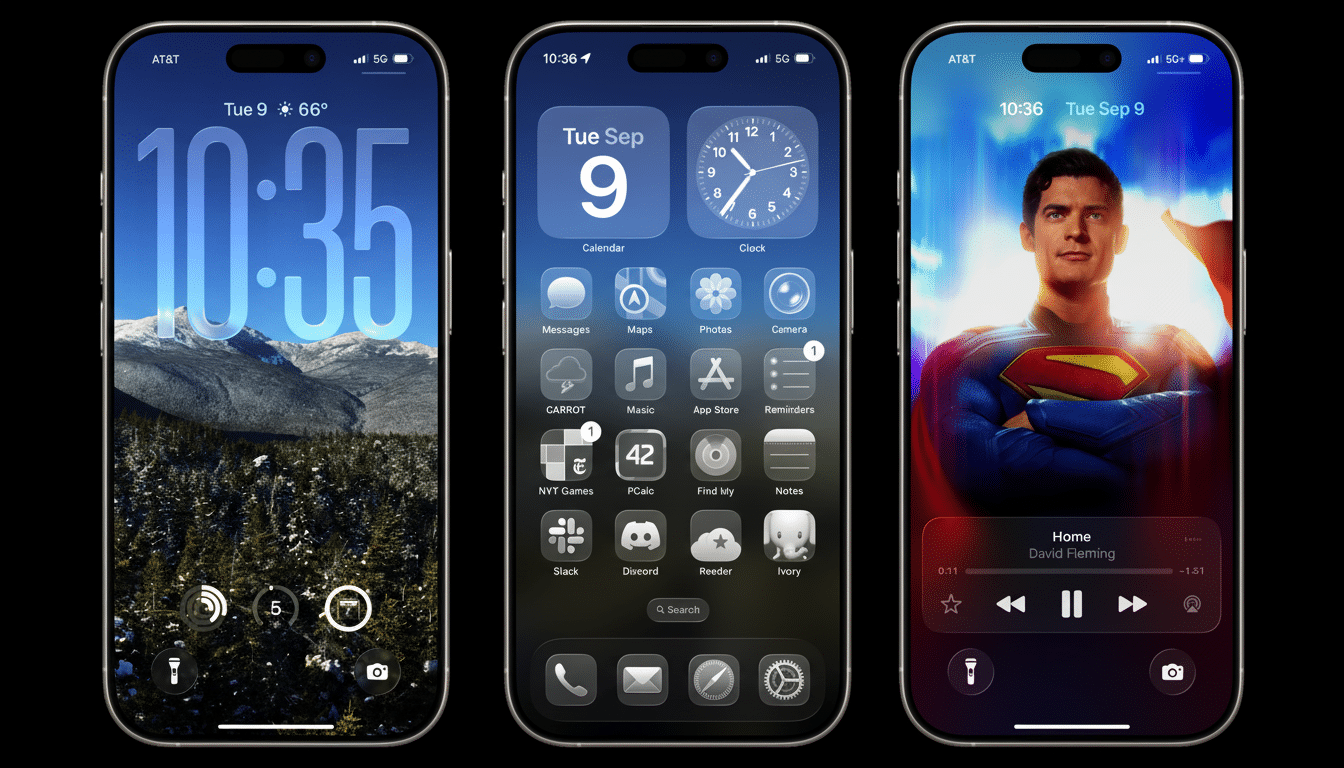

In iOS 26, Apple brought back AI summaries for notifications from news and entertainment apps, now with a prominent beta label and an accuracy warning. When you choose which apps to summarize, Apple cautions that a summary may change the meaning of the original headline and advises you to verify it before acting on it.

The relaunch follows Apple’s earlier decision to pause summaries for news apps after a broadcaster complained that an AI paraphrase had distorted a key fact. This time, the feature arrives with more prominent warnings and more direct user control.

You can manage this in Settings > Notifications > Summarize Notifications. From there, pick the apps you trust with summaries, or turn the feature off altogether. Because the control is per app, you can allow summaries for low-stakes entertainment alerts while still receiving full, unabridged headlines for hard news.

Why AI summaries get headlines wrong on iOS 26 alerts

AI handles routine jobs like reminders and boilerplate writing reasonably well. Condensing nuanced reporting is a very different task. Models often drop hedging words (“alleged,” “reportedly”), blur who did what in long, complex sentences, and mix up which person a pronoun refers to. Push notifications make this worse: their tight character limits force the model to cut aggressively.

There is a broader pattern here. The Stanford HAI AI Index has repeatedly found that even the most capable large language models still hallucinate, with factual error rates that vary by task and prompt style. In news contexts, where names, places and timelines matter, a small compression can turn into a large distortion.

Trust is what makes this risk consequential. The Reuters Institute’s Digital News Report finds that only about 4 in 10 people say they trust most news most of the time. When an AI summary exaggerates or reverses the meaning of a headline, it further erodes that fragile trust and can mislead the most engaged readers, the ones who rely on fast alerts.

Real-world fallout and publishers’ concerns

Publishers have been vocal because a single errant push alert can travel far. One broadcaster’s public complaint earlier this year showed what happens when an AI-generated paraphrase mangles a sensitive story. Journalists’ unions called on platforms to switch off summarization for news until accuracy improves, and media leaders warned that experimental systems should not be tested on live headlines.

The concern isn’t only about “hallucinations.” It’s also about subtler shifts in meaning: making an ongoing investigation sound like a clean bill of health, turning attributions into assertions (dropping a “police say,” for example, so a claim reads as established fact), or compressing quotes in ways that change what they mean. These are classic failure modes for automated summarization, especially when the character budget is this tight.

How to use it safely—or turn it off—in iOS 26

If you want the speed without the risk, follow these guardrails:

- Turn off summaries for high-stakes sources: public safety, health, elections, finance. Let official alerts arrive intact.

- Always tap through on news. Treat the summary as a teaser, not the story. If you’re sharing it or making a decision based on it, read the original first.

- Tailor by app: Go to Settings > Notifications > Summarize Notifications. Enable for entertainment and shopping, disable for investigative reporting or breaking news.

- Set up Time Sensitive notifications and Focus modes so that important alerts still get through instead of being missed.

To turn off AI summaries altogether, open Settings > Notifications > Summarize Notifications and switch the feature off. This restores the original headlines and preview text for every app.

Privacy, accuracy and the trade-off with on-device AI

Apple runs many of its AI features on the device itself, which is good for privacy. But privacy is not the same as precision. Even if your data never leaves the phone, a compressed summary can still be reductive or misleading. Until automated summarization reliably preserves attribution, uncertainty and nuance, skepticism toward summarized news makes sense.

Bottom line: treat AI summaries as drafts, not headlines

AI notification summaries in iOS 26 can help tame chatty apps and long group threads, but they’re no substitute for journalism. The beta label sends a clear message: treat them as drafts, not headlines. If accuracy matters, keep summaries off for news, or at the very least tap through and check the original before sharing or acting on it.