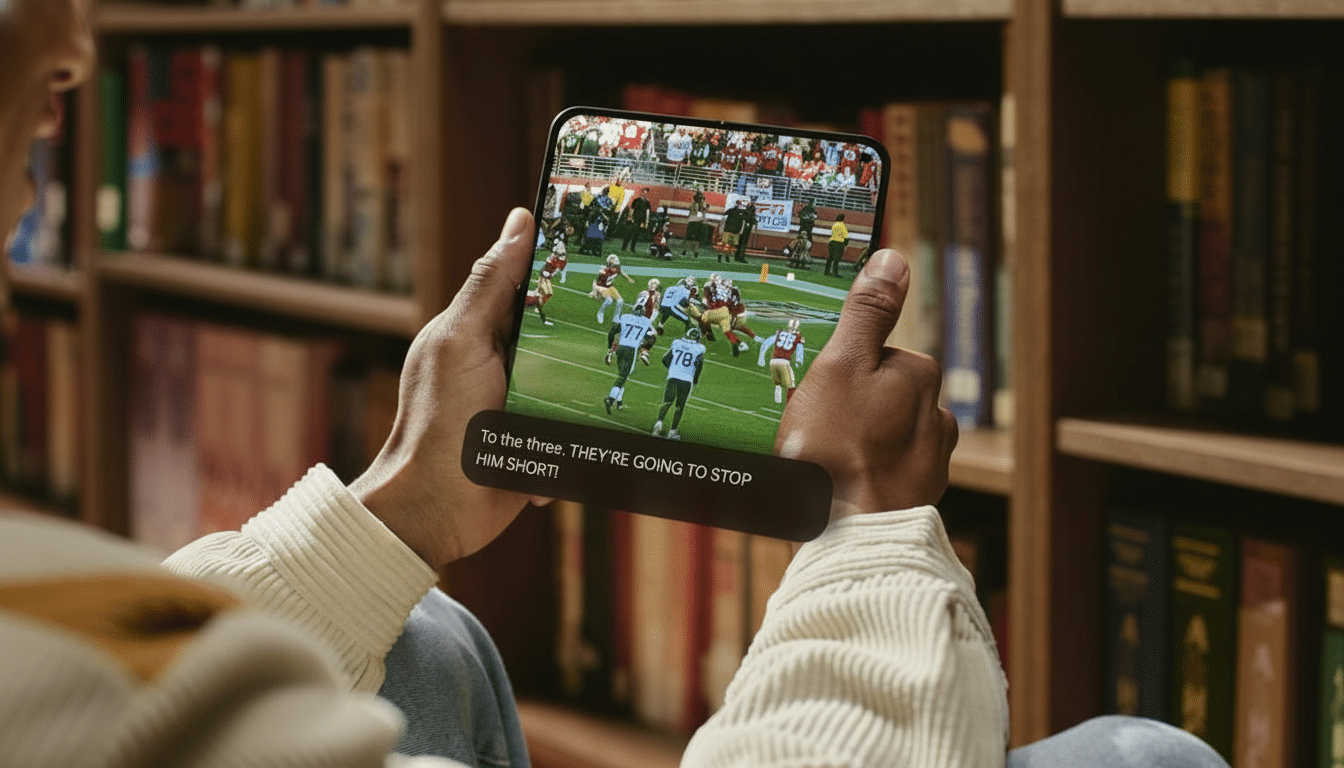

YouTube is unveiling Expressive Captions, a subtitle system that incorporates tone, emphasis and ambient cues into on-screen text. Rather than flat, literal transcripts, audiences will see context: “[joy]” as a punchline lands, “[applause]” when contestants raise their boards at an answer reveal, “[sigh]” during a pause. The feature is intended to make videos more accessible and engaging for everyone, especially when the audio is off or hearing loss makes the soundtrack hard to follow.

What Expressive Captions Do to Enhance On-Screen Text

Standard captions have long captured the words, not the vibe. Expressive Captions layer in how something is being said and what else is happening in the scene. If a creator whispers a spoiler, the captions can indicate that it’s a whisper. If a streamer stretches out a word for effect, the text will show that stretching. Background events like laughter, gasps or clapping appear as cues so you don’t miss the emotional beats when the volume is turned down.

![A smartphone displaying a football game with the caption To the three. [gasps] THEY ARE GOING TO STOP HIM SHORT! [cheers and applause]](https://www.findarticles.com/wp-content/uploads/2025/12/youtube_expressive_captions_edited_1764763837.png)

Expressive Captions will be available at launch on English-language videos for new uploads, with additional language support and a more widespread rollout in the works.

They will be available across devices and are meant to complement the platform’s existing auto-captioning, not replace it.

Accessibility and engagement impact for viewers and creators

This is a significant upgrade for the deaf and hard-of-hearing community. The World Health Organization estimates that hundreds of millions of people live with disabling hearing loss, and richer captions more closely mirror what hearing viewers experience. The change also serves the large share of viewers who watch with the sound off: industry research has repeatedly shown strong sound-off viewing habits, especially on mobile, and usage data shows that captions can increase completion rates and comprehension.

Creators can reap the rewards too. Emotional clarity helps punchlines land, makes tutorials easier to follow and lets reaction content read the way it was intended. Ultimately, these gains can translate to higher watch time, better retention at key moments and more accessible content, all of which tie into platform recommendations and accessibility standards such as the FCC’s caption quality guidelines and the W3C’s WCAG guidance.

How the tech works behind YouTube’s Expressive Captions

Expressive Captions combines automatic speech recognition with AI models for prosody (rhythm, pitch and stress), timing and alignment of text to audio, and sound event detection. The system looks for signs of sarcasm, enthusiasm or frustration and converts them into structured labels. You’ll encounter brief tags like “[sarcasm]” or “[sadness],” along with stage directions for background sounds and other audible elements, akin to how professional captioners mark up live TV.
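As a rough illustration of how speech, prosody and sound events might be merged into a single caption line, here is a minimal Python sketch. It is not YouTube’s implementation: the `Word` and `SoundEvent` types, the `loud` prosody flag and the `render_caption` function are all hypothetical names invented for this example, and the timings are made up to reproduce the caption shown in the screenshot above.

```python
from dataclasses import dataclass


@dataclass
class Word:
    """One recognized word with its start time (hypothetical ASR output)."""
    text: str
    start: float
    loud: bool = False  # assumed prosody flag: the word was shouted


@dataclass
class SoundEvent:
    """One detected non-speech sound (hypothetical event-detector output)."""
    label: str
    start: float


def render_caption(words, events):
    """Interleave words and bracketed sound tags in time order."""
    # Shouted words are rendered in capitals; everything else stays as-is.
    tokens = [(w.start, w.text.upper() if w.loud else w.text) for w in words]
    # Sound events become bracketed tags such as "[gasps]".
    tokens += [(e.start, f"[{e.label}]") for e in events]
    # Sort by start time only; the sort is stable, so ties keep input order.
    tokens.sort(key=lambda t: t[0])
    return " ".join(text for _, text in tokens)


# Illustrative data only; the timings are invented.
example_words = [
    Word("To", 0.0), Word("the", 0.2), Word("three.", 0.4),
    Word("They", 1.5, loud=True), Word("are", 1.7, loud=True),
    Word("going", 1.9, loud=True), Word("to", 2.1, loud=True),
    Word("stop", 2.3, loud=True), Word("him", 2.5, loud=True),
    Word("short!", 2.7, loud=True),
]
example_events = [
    SoundEvent("gasps", 1.0),
    SoundEvent("cheers and applause", 3.2),
]

print(render_caption(example_words, example_events))
```

A real system would also need confidence thresholds and line segmentation; the sketch only shows the idea of placing structured labels on the same timeline as the words.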

The feature builds on ground that Android’s Live Caption broke by offering on-device transcription that could represent shouting, whispering and elongated words. Bringing that idea to YouTube’s vast library makes expressive metadata available to many more viewers and creators without any extra effort on their part.

Limits and safeguards for emotion-aware video captions

Tone is notoriously difficult to get right. Sarcasm, regional dialects and cultural idiosyncrasies can trip up human transcribers too. Misattributed emotion could distract from the content or misrepresent a creator’s intentions. That is why transparency and control are important: viewers can switch caption styles manually in the standard playback settings, and creators can still supply their own human-edited captions, which remain the most accurate option.

Privacy is another consideration. Detecting applause or laughter is one thing; inferring sensitive emotional states is another. YouTube says the intent is clarity, not profiling, and that the labels are based on what is audibly present in the track (that is, what a listener would hear with the sound on) rather than on personal attributes.

What creators should know to get results with captions

Creators who already provide captions should keep doing so. Expressive Captions complement auto-captions, but human-written tracks remain more accurate for exact wording, names and specialized terms. For channels that are heavy on comedy, gaming reactions or live events, expressive tags can make a real difference in how jokes, jump scares and crowd moments come across to sound-off viewers.

It’s a good idea to check how captions appear on mobile and TV, where timing and line breaks matter. Clean audio, less background noise and good mic technique help the models pick up signals more reliably. If a beat depends on a reaction, consider adding a quick on-screen text cue or a pacing cut to reinforce what the captions communicate.
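For creators who maintain their own caption tracks, a quick automated check can flag cues that are likely to wrap badly or flash by too fast on small screens. The Python sketch below is only an illustration: the `Cue` type and `check_cue` function are hypothetical, and the limits (42 characters per line, two lines, one second on screen) are assumed values borrowed from common subtitling conventions, not YouTube requirements.

```python
from dataclasses import dataclass

# Assumed limits based on common subtitling practice, not a YouTube rule.
MAX_CHARS_PER_LINE = 42
MAX_LINES = 2
MIN_DURATION_S = 1.0


@dataclass
class Cue:
    """One caption cue; lines within the text are separated by newlines."""
    start: float  # seconds
    end: float    # seconds
    text: str


def check_cue(cue):
    """Return a list of human-readable problems found in one cue."""
    problems = []
    lines = cue.text.split("\n")
    if len(lines) > MAX_LINES:
        problems.append(f"{len(lines)} lines (max {MAX_LINES})")
    for line in lines:
        if len(line) > MAX_CHARS_PER_LINE:
            problems.append(f"line too long ({len(line)} chars)")
    if cue.end - cue.start < MIN_DURATION_S:
        problems.append("on screen too briefly")
    return problems


# Illustrative cues: the first passes, the second is too long and too short-lived.
good = Cue(0.0, 2.0, "To the three. [gasps]")
bad = Cue(2.0, 2.4, "x" * 50)

print(check_cue(good))
print(check_cue(bad))
```

Adjust the constants to match whatever style guide a channel follows; the point is to catch layout problems before viewers do.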

Part of a broader effort to boost accessibility on YouTube

Expressive Captions follows Google’s ongoing accessibility efforts, such as enhancements to TalkBack, expanded Fast Pair support for hearing aids and other investments in speech and hearing that the company continues to make across Android. On the video side, the move represents a shift in priority from “captions as compliance” to “captions as experience.”

The takeaway: conveying emotion makes for better video. Whether you’re watching quietly on a train or rely on captions to follow along, Expressive Captions make more of the creator’s intent available, one sigh, giggle or gasp at a time.