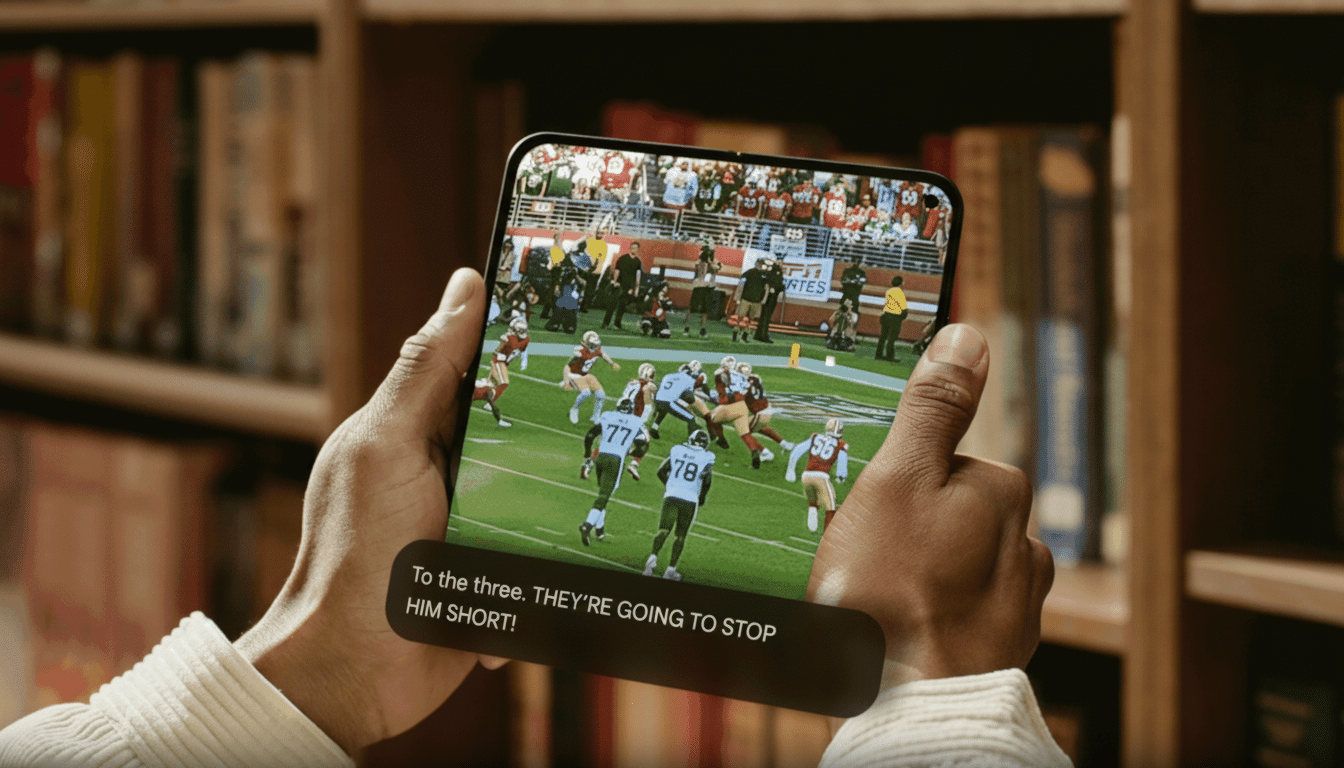

YouTube is rolling out Expressive Captions, an AI-driven captioning experience that captures tone and context to help you feel every gasp, sigh, and shout. The system incorporates cues like all caps when someone is shouting and bracketed descriptions of ambient sounds or human noises, which give emotional texture to subtitles that have long been limited to transcribing words.

The feature builds on functionality first introduced in Android’s Live Caption and is now rolling out to English YouTube videos on any device.

- What Expressive Captions add to YouTube subtitles and SDH

- Why expressive captions matter for viewers and accessibility

- How it works and where you’ll find it across YouTube

- Effects on creators and the wider streaming industry

- Limitations and open questions about YouTube captions

- The bottom line on YouTube’s new Expressive Captions

![A smartphone displaying a football game with the text To the three. [gasps] THEY ARE GOING TO STOP HIM SHORT! [cheers and applause] on the screen.](https://www.findarticles.com/wp-content/uploads/2025/12/youtube_expressive_captions_edited_1764793912.png)

At launch, the feature applies to recent uploads; YouTube says support will expand to other videos over time.

What Expressive Captions add to YouTube subtitles and SDH

Auto-captions are generally good at converting speech to text, but they flatten emotion. Much of a story isn't carried by the words alone. It comes through tone, volume, and paralanguage such as sighs and laughter. With Expressive Captions, expect moments like "NOOO!" when a character screams, or inline labels such as [audience gasps], [door slams], and [whispering]. The approach is closer to the SDH (subtitles for the deaf and hard of hearing) practices that accessibility advocates have long championed.

This aligns with guidance from groups like the National Center for Accessible Media, which promotes sound-effect labeling and speaker identification, and with the caption quality standards highlighted by the FCC: accuracy, synchronicity, completeness, and placement. In short, captions should tell the story, not just the script.

Why expressive captions matter for viewers and accessibility

Expressive Captions is a modest but meaningful step for accessibility and comprehension. According to the World Health Organization, more than 1.5 billion people live with some degree of hearing loss, and many of them rely on captions to follow dialogue and action as it unfolds. Emotional cues add context in scenes where tone matters as much as the words themselves.

Captions also serve the many people who watch with the sound off in public or turned down low at home. Ofcom research has found that much of subtitle use comes from viewers without hearing loss, a sign that captions have become a mainstream viewing aid. Emotional cues can also reduce confusion, speed up comprehension, and hold viewers' attention when audio cues are easy to miss.

How it works and where you’ll find it across YouTube

YouTube says the system uses AI to detect tone, volume, and environmental sounds in a video's audio track, then turns that information into readable caption cues. The rollout currently covers English-language videos, and the feature appears on mobile, desktop, TVs, and game consoles. For now, it is limited to videos uploaded since October, with more content to follow.

Because creator-supplied captions often already include descriptive cues, the most noticeable changes will come on auto-generated tracks. It's unclear whether processing happens on your device or in YouTube's cloud. Android's Live Caption processes audio locally, but YouTube hasn't said how its version works.

Effects on creators and the wider streaming industry

“Creators may see increased retention and comprehension, especially for genres like gaming highlights, horror, or even how-tos,” Bergen said. “Traditionally these are types of content where a mood with reaction shots conveys the story.” Clearer captions can also reduce ambiguity for international viewers who use English subtitles as a scaffold for learning the language.

The feature brings YouTube closer to the descriptive sound labels common in premium SDH tracks on other major streaming services. It also complements creator tools and caption formats such as WebVTT and SRT, both of which can already carry bracketed sound descriptions; WebVTT additionally supports NOTE comments and cue positioning settings, which the simpler SRT format does not standardize. The real test will be balance: too much annotation can clutter the screen, while too little can drop crucial context.
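For creators who write their own captions, it can help to see what SDH-style cues look like in a WebVTT file. The Python sketch below assembles one by hand. It is an illustration only, not how YouTube generates Expressive Captions: the helper names `format_timestamp` and `build_webvtt` are hypothetical, and the cue timings are made up. It shows a NOTE comment block and the `line`/`align` cue settings from the WebVTT format.

```python
from typing import Optional

# Each cue: (start_seconds, end_seconds, text, optional WebVTT cue settings).
# This tuple layout is a hypothetical convenience, not part of any standard.
Cue = tuple[float, float, str, Optional[str]]


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    millis = round(seconds * 1000)
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1_000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def build_webvtt(cues: list[Cue], note: Optional[str] = None) -> str:
    """Assemble a WebVTT document from cues, with an optional NOTE comment."""
    lines = ["WEBVTT", ""]
    if note:
        # NOTE blocks are comments: players skip them, authors can use them.
        lines += [f"NOTE {note}", ""]
    for start, end, text, settings in cues:
        timing = f"{format_timestamp(start)} --> {format_timestamp(end)}"
        if settings:
            # Cue settings such as line/align control where the cue is drawn.
            timing += f" {settings}"
        lines += [timing, text, ""]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical timings; the text mirrors the football example shown earlier.
    cues: list[Cue] = [
        (0.0, 1.5, "To the three. [gasps]", None),
        (1.5, 4.0, "THEY ARE GOING TO STOP HIM SHORT!", None),
        # Sound-only label placed on the top line (line:0), centered.
        (4.0, 6.0, "[cheers and applause]", "line:0 align:center"),
    ]
    print(build_webvtt(cues, note="SDH-style sample with sound labels"))
```

The bracketed labels and all-caps shouting are plain text, so the same cues carry over directly to SRT; only the NOTE block and the positioning settings are specific to WebVTT.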

Limitations and open questions about YouTube captions

There are inevitable edge cases. Sarcasm, deadpan humor, and overlapping dialogue are hard for AI to interpret, and the difference between yelling in excitement and yelling in rage may not be apparent from the audio alone. A clear control to turn expressive cues on or off, or to adjust their intensity, would let viewers choose the level that suits them.

Another open question is how Expressive Captions works with third-party or professionally created subtitle files. Historically, YouTube has prioritized creator-submitted captions, so viewers will want to know when the expressive layer appears and whether it can be combined with, or replace, human-authored SDH tracks.

The bottom line on YouTube’s new Expressive Captions

By adding tone and sound effects to captions, YouTube is updating an essential accessibility feature for the way people actually watch video today. It's a meaningful upgrade for deaf and hard-of-hearing audiences, handy for anyone watching without sound, and a step closer to conveying the emotional beats creators work hard to land.

If the rollout delivers on its promise, with cues that are accurate without being obtrusive, Expressive Captions could reshape how millions, and eventually billions, of people experience video, rather than serving only as an accessibility feature for a niche audience. That's good design: inclusive by default, informative when necessary, and invisible when it should be.