Windows 11 is testing AI agents that do more than answer questions.

Built on the Copilot Actions framework, these agents can click, type, and navigate apps on your behalf, drawing on your files and settings to complete tasks. The pitch is convenience. The challenge is trust.

The last time Microsoft tried to give Windows wide-ranging AI access to personal data, with a feature called Recall, security researchers criticized it harshly and the feature was reworked. This time, the company is moving more cautiously with its agentic features. That's smart, because the stakes are higher when software can act rather than merely advise.

What these Windows 11 AI agents actually do in practice

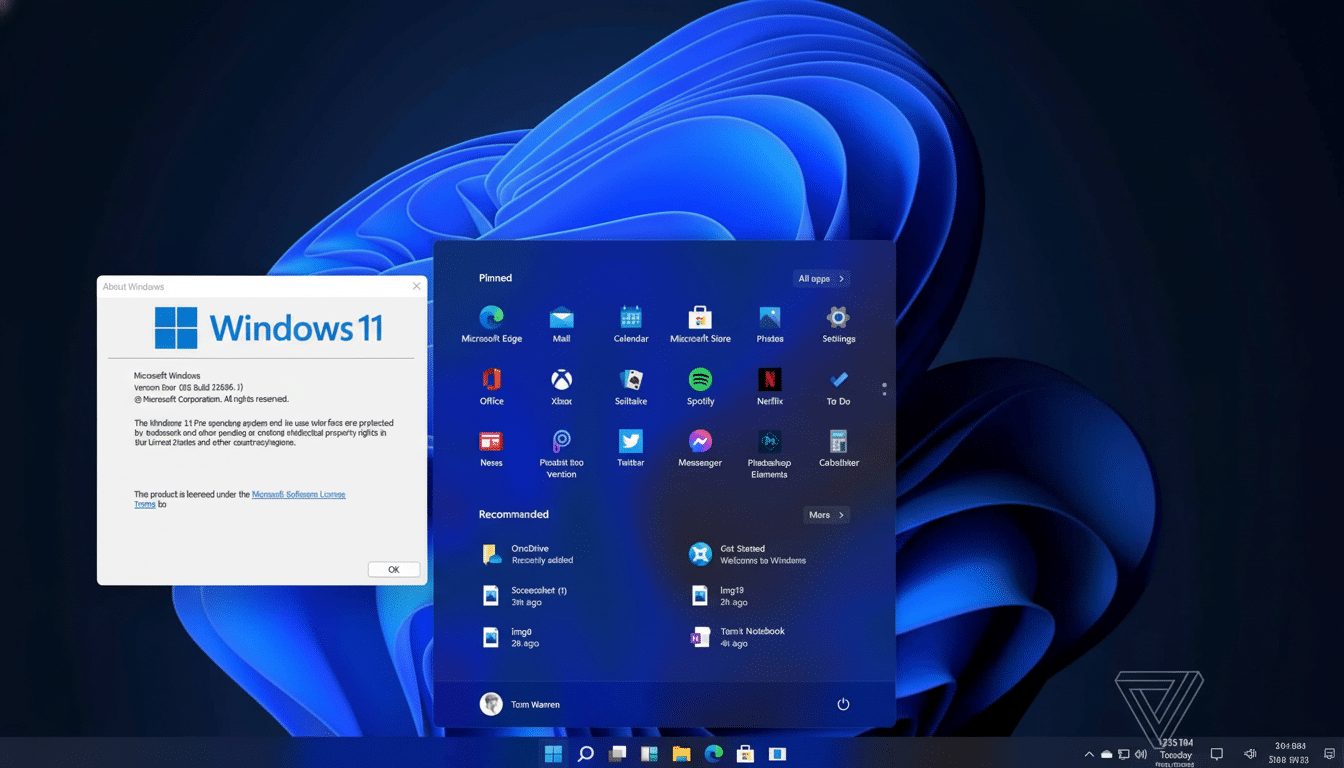

Copilot Actions is rolling out as an opt-in preview to Windows Insider builds, in a dedicated experimental mode. It's off by default and turns on only if you enable Experimental agentic features in Settings under System, AI components, Agent tools. That's an intentional speed bump, not a dark pattern.

Once the feature is enabled, agents work in an isolated Agent workspace, a contained desktop session designed to limit their reach. They run under a separate standard account that is created when the feature is turned on. At launch, agents can access only four known folders (Documents, Downloads, Desktop, and Pictures) unless you grant them more.

Microsoft says agents must be digitally signed, just like any other executable app. That creates a revocation path if a signed agent turns out to be malicious. The company also relies heavily on consent gates: an agent starts with minimal permissions and needs your approval to access additional files, apps, or settings.

The new risk surface created by agentic AI on Windows 11

Agentic AI introduces threats beyond run-of-the-mill malware. One is prompt injection, where malicious content in a document, an email, or a UI element quietly rewrites the agent's instructions. Microsoft calls one variant cross-prompt injection: a poisoned element on one screen pushes the agent to take a harmful action elsewhere, such as exfiltrating files or installing software.

Another risk is credential scope creep. If an agent can work in apps where you're already signed in, a single slip could send the right command to the wrong place. And because agents mimic human behavior (clicking, scrolling, typing), traditional controls such as URL allow lists may miss harmful actions carried out through UI automation.

The business risk is real. IBM's recent Cost of a Data Breach report puts the global average cost of a breach at more than $4 million. The Verizon Data Breach Investigations Report continues to find that most breaches involve a human element: mistakes, social engineering, or misuse. Agentic AI combines automation with that human element, which can multiply errors as readily as productivity.

Microsoft's guardrails, and what the company is missing

Microsoft says its security teams are actively red-teaming Copilot Actions and that finer-grained controls will arrive during the preview. The company points to isolation, explicit permission prompts, signed agents, and revocation mechanisms. Those are meaningful protections, particularly the contained workspace and default-deny permissions.

Open questions remain.

- How often will consent prompts appear, and will users actually understand them?

- Will enterprises be able to set policy boundaries by application and by data type, rather than just by folder?

- Can administrators audit every action an agent performs after the fact, with clear provenance and the ability to roll it back?

- How will third-party agents be vetted beyond code signing?

These gaps mirror broader industry guidance. For LLM applications, the OWASP Top 10 warns about prompt injection, insecure output handling, and over-permissive integrations. NIST's AI Risk Management Framework emphasizes ongoing monitoring, transparency, and human oversight. During the preview, Microsoft needs to turn these principles into controls that users can see and administrators can govern.

How to use Windows 11 AI agents safely right now

Start with the smallest scope you can. Limit agents to read-only access wherever possible, and confine them to test files and noncritical accounts until you understand how they behave. Treat any complex task as a two-step flow: propose, then approve. If the agent can summarize its plan (what it will open, edit, or send), review and approve that plan before it runs.

Watch for injection vectors. Be careful about letting an agent act on content from untrusted emails, unfamiliar documents, or snippets copied from the web. If you must, run those tasks in a separate Windows profile or virtual machine, as you would when testing unfamiliar software.

In organizations, limit the blast radius.

- Keep experimental agentic features turned off by default.

- Build allow lists for signed agents.

- Constrain folder access to least privilege.

- Pair agent use with data loss prevention, endpoint detection, and network egress controls.

- Make sure every action by every agent is recorded in audit logs, and treat agent activity like privileged admin activity.

Finally, plan for revocation. Understand how to turn an agent off, revoke its permissions, and roll back changes. Trust must be both conditional and revocable.

The bottom line on Windows 11’s experimental AI agents

Windows 11's AI agents could be genuinely useful, turning tedious sequences of clicks into finished tasks. But help that acts calls for a different trust calculus than a chatbot that only talks. The early safeguards (opt-in, isolation, code signing, explicit permissions) point in the right direction.

Whether you trust them will depend on your tolerance for risk and the discipline of your controls. For most users, that means cautious experiments with low-stakes tasks. For businesses, it means policy, monitoring, and guardrails before rollout. The technology looks promising, but with agentic AI the only safe default is trust that is earned, not assumed.