Wikipedia’s steward, the Wikimedia Foundation, is telling AI developers to stop scraping its pages and do the right thing: pay for low-latency (5ms–200ms), high-volume access through Wikimedia Enterprise, its commercial API. The message is clear: if AI models are going to rely on the world’s encyclopedia, they should credit the humans who built it, and their use of it should help fund the infrastructure that keeps it running.

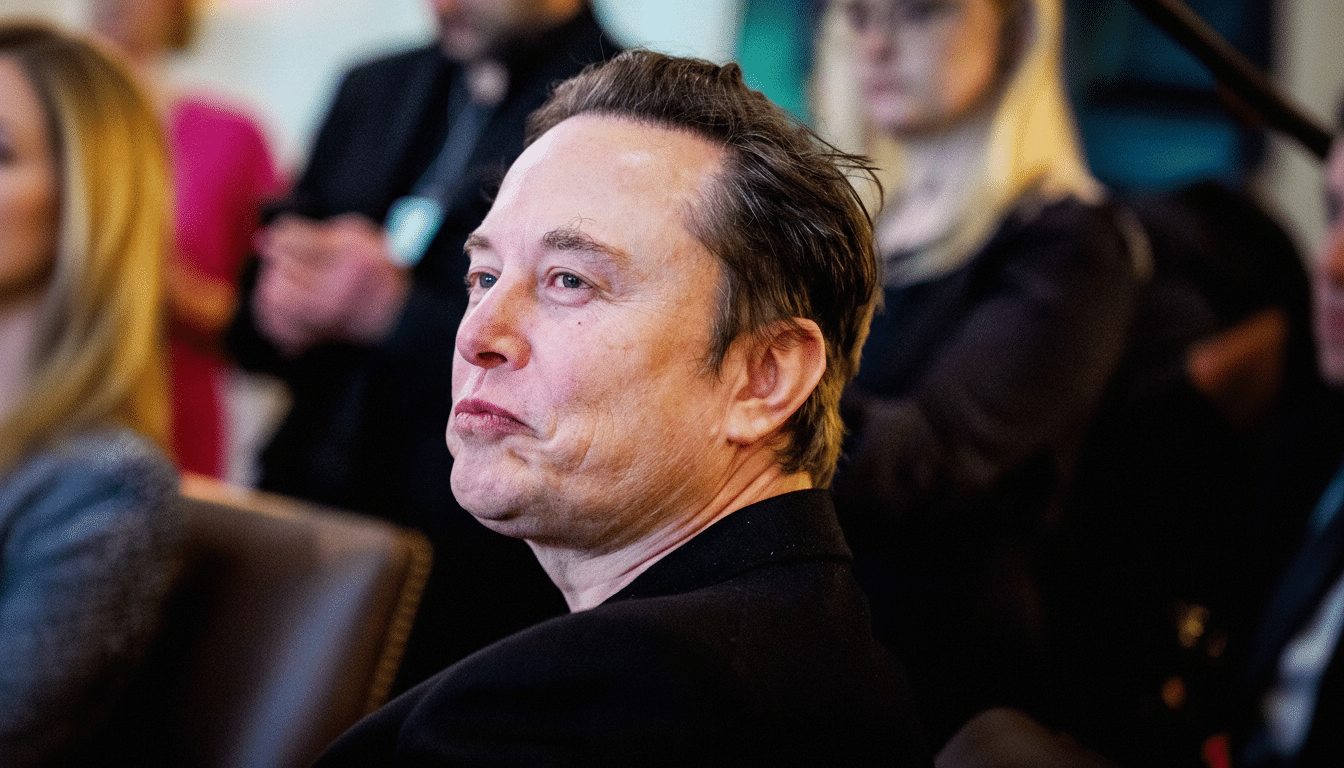

The nonprofit said AI companies need to “use content responsibly,” which comes down to two essentials: clear attribution and use of its paid, high-throughput data services. The request comes as the organization works to improve its bot detection and grapples with a sometimes-invisible cost of the AI boom: rising machine traffic, declining human visits, and mounting strain on servers originally built to serve people.

Why Wikipedia is drawing a line on scraping and bots

Wikimedia reported that legitimate “human page views” fell 8 percent year over year, and that a recent surge in traffic had come from bots posing as human visitors. That drop matters. Fewer human visits mean fewer readers who click through to check facts, fewer volunteers to fix typos and factual errors, and fewer small-dollar donors supporting the site’s nine-figure operating budget.

Scraping at scale also strains infrastructure and often strips away the provenance that responsible reuse depends on: version histories, edit records, and licensing signals. The Foundation isn’t threatening legal action; it’s drawing a line between cooperative access that sustains the commons and extractive scraping that depletes it.

What Wikimedia Enterprise offers to major data users

Wikimedia Enterprise is built for large consumers of Wikipedia and Wikidata. It delivers structured JSON, bulk snapshots, and real-time change feeds, backed by service-level guarantees and monitoring. In practice, that means fewer missing pages, fewer stale facts, and a clear way to attribute specific versions of content, whether in training sets, retrieval-augmented generation, or citation features in AI products.

Among the first to sign on were organizations such as Google and the Internet Archive, suggesting that major platforms see value in paying for reliability and stewardship. For AI teams, Enterprise means lower operational risk: no cat-and-mouse over rate limits, no silent bans, and far less post-processing to turn scraped HTML into model-ready data.

The AI age, attribution, and open licenses

Wikipedia’s text is licensed under CC BY-SA, which requires attribution and share-alike licensing for derivative works, and much of it is also available under the GFDL. How those obligations apply to model training is a live legal debate. But the Foundation’s stance makes sense: platforms can show sources, link to specific article revisions, and point users back to editors’ work. Transparent sourcing builds user confidence and reflects the community expectations that made Wikipedia reliable in the first place.

A few AI products already cite sources in their answers. Consistent, machine-readable feeds make that easier, enabling features such as inline references, model audits, and content lineage that regulators and enterprise customers increasingly expect.
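To make inline references concrete, here is a minimal Python sketch, assuming an answer has already been split into sentences and each sentence is paired with the Wikipedia article revision it came from. The `Source` type and `render_with_references` helper are hypothetical; the only real convention relied on is MediaWiki’s permanent-link format, `index.php?title=<Title>&oldid=<revision ID>`, which points at one specific revision rather than the live page.

```python
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass(frozen=True)
class Source:
    """A Wikipedia article pinned to one revision (hypothetical record type)."""
    title: str
    revision_id: int
    lang: str = "en"

    def permalink(self) -> str:
        # MediaWiki permanent links address a single revision via the oldid parameter.
        query = urlencode({"title": self.title.replace(" ", "_"), "oldid": self.revision_id})
        return f"https://{self.lang}.wikipedia.org/w/index.php?{query}"


def render_with_references(sentences: list[tuple[str, Source]]) -> str:
    """Append numbered inline markers to each sentence and a reference list at the end.

    Hypothetical helper: each distinct Source gets one number, assigned in order
    of first appearance, so repeated sources share a marker.
    """
    numbers: dict[Source, int] = {}
    body = []
    for text, source in sentences:
        n = numbers.setdefault(source, len(numbers) + 1)
        body.append(f"{text} [{n}]")
    # dicts preserve insertion order, so references come out in numbered order.
    refs = [
        f"[{n}] {s.title}, revision {s.revision_id}: {s.permalink()}"
        for s, n in numbers.items()
    ]
    return " ".join(body) + "\n\n" + "\n".join(refs)
```

Reusing the same `Source` for several sentences yields one shared reference number, which keeps the list short; in a real pipeline the revision IDs would come from whatever snapshot or feed the content was ingested from.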

The move away from unrestricted scraping

Across the web, data owners are clamping down on access. Reddit introduced a paid API and then struck a licensing deal with a leading search provider. Stack Overflow launched an enterprise data product and signed licensing agreements with model makers. News organizations have sued over unlicensed training. Wikimedia is taking a different approach: it’s asking for collaboration rather than court battles, while making clear that the era of anonymous, high-volume scraping is over.

Wikimedia has also improved its bot detection, catching actors who attempted to “evade detection.” The cost curve is shifting for AI companies: clean, reliable data through an API can now be cheaper and lower-risk than fragile scraping pipelines that break without warning.

What it means for AI builders using Wikipedia content

Teams training or deploying generative models should budget for Wikimedia Enterprise and build attribution into product design.

In practice, that means ingesting page revision IDs, storing provenance alongside embeddings and in knowledge graphs, and rendering references that lead users back to the source article. For RAG systems, tying answers to a specific snapshot can help reduce hallucinations and lets users verify claims.
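One way to keep that provenance usable is to store the article title and revision ID with every chunk you embed, then compare those IDs against a newer snapshot to find chunks that need re-embedding. The sketch below is a hypothetical schema in Python, not part of any Wikimedia or vector-database API; it assumes you can obtain a mapping from article titles to their latest revision IDs, for example from a fresher snapshot or a change feed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A retrievable text chunk that records which revision it came from.

    Hypothetical schema for illustration; field names are assumptions.
    """
    chunk_id: str
    title: str
    revision_id: int
    text: str

    def metadata(self) -> dict:
        # Provenance fields to store next to the chunk's embedding vector,
        # so every retrieved passage can be traced to a specific revision.
        return {"chunk_id": self.chunk_id, "title": self.title, "revision_id": self.revision_id}


def find_stale(chunks: list[Chunk], latest: dict[str, int]) -> list[Chunk]:
    """Return chunks whose stored revision no longer matches the latest one.

    `latest` maps article title -> newest known revision ID (an assumed input).
    Titles missing from `latest` are also returned, because the article may have
    been deleted or renamed and the chunk should be reviewed, not silently trusted.
    """
    return [c for c in chunks if latest.get(c.title) != c.revision_id]
```

Re-embedding only the stale chunks keeps the index aligned with a known snapshot, so a citation can point at the same text the model actually retrieved.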

This is also risk management. Paid, metered access limits legal exposure, reduces downtime, and maintains a working relationship with the world’s most popular reference source. The broader industry trend is clear: reliable, licensable data is becoming a first-class input for competitive AI systems.

Wikipedia’s own AI plans to support volunteer editors

The Foundation has said it will use AI to assist its volunteer editors, not replace them: speeding up translation, triaging vandalism, and automating repetitive tasks. The aim is to make contributing easier while keeping editorial judgment in human hands.

The ask of AI companies is similarly practical: if you profit from the commons, sustain it. Pay for access, or at the very least give credit through attribution. It’s a sustainable compromise that keeps knowledge open, accurate, and available to anyone who still clicks through to read the sources.