OpenAI is hiring a Head of Preparedness, a senior leader whose remit is to pre-emptively identify and mitigate risks from frontier AI systems, including model-enabled cybersecurity, biological misuse, and the wide-ranging harms of mental health incidents. This search follows CEO Sam Altman’s public statements that current-generation models are creating challenges from assisting with vulnerability detection to users’ well-being.

They would be responsible for upgrading the company’s Preparedness Framework, which defines a roadmap for deploying and developing novel capabilities. It will detail how each novel capability is monitored, tested, and gated before hitting the market.

As more and more capabilities are added to large models that can write code, bodies of tools, and sophisticated instruction-following, deployment risks grow beyond what simple testing can address. Security researchers have demonstrated that Charlie Workflows to enhance their ability to find and exploit bugs in controlled environments can be scripted via models in less time, and red-team exercises at major tech conferences showed how chatbots can be “jailbroken” when faced with unusual inputs. Meanwhile, front-line clinicians and leading academics have warned that models, if unrestricted, can accidentally reinforce delusions and encourage isolation. Such safety cases have become the basis of criminal and civil lawsuits in the United States.

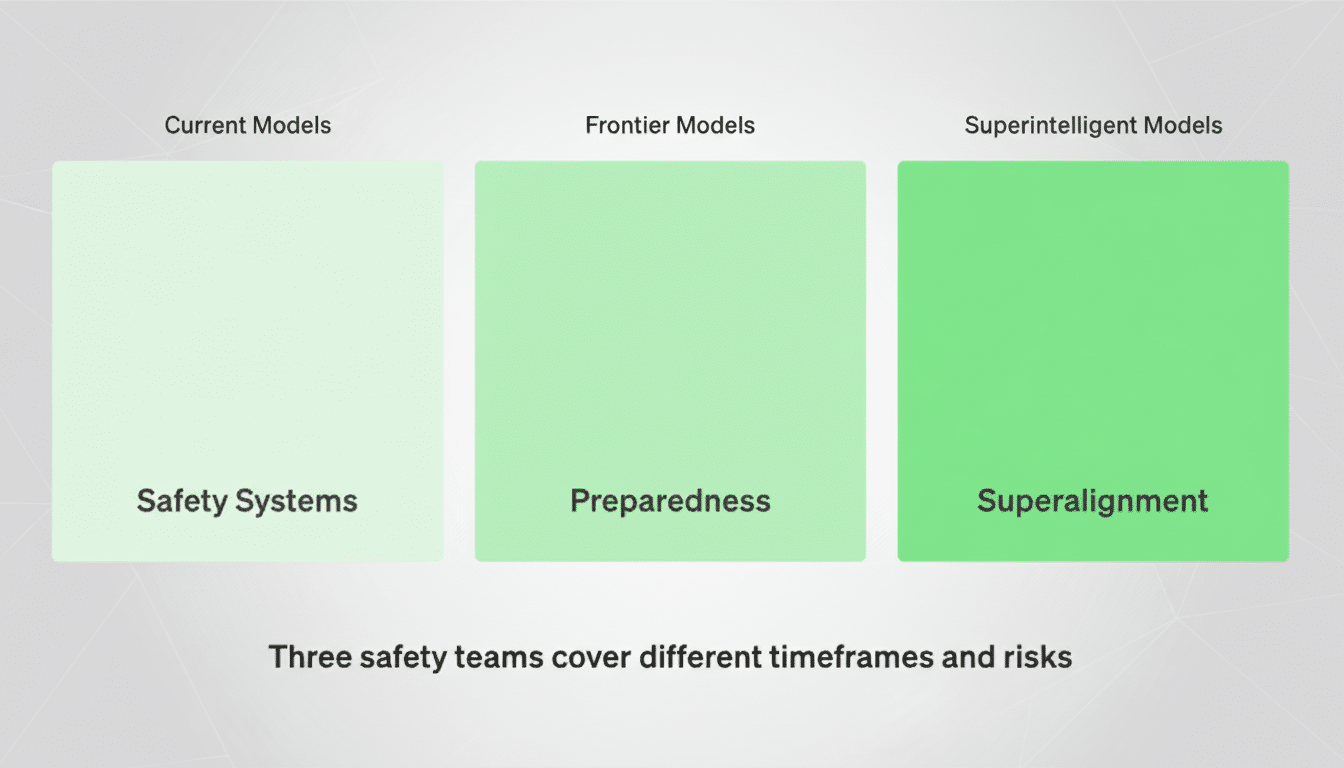

In sum, Preparedness in this context is like reliability engineering of an electrical grid or air traffic control system—where faults are engineering facts you have to work around, because the alternative is too terrible to contemplate. It encompasses scenario planning, formative “model evaluations” that are safety-critical, and large-scale preparatory safety cases. Incubators like NIST and the UK’s AI Safety Institute have formalized frameworks of such evaluations for three domains of model-supported workflows: cybersecurity, biosecurity, and persuasion risk. Labs developing new capabilities can now use and evolve those protocols.

Vectored From:

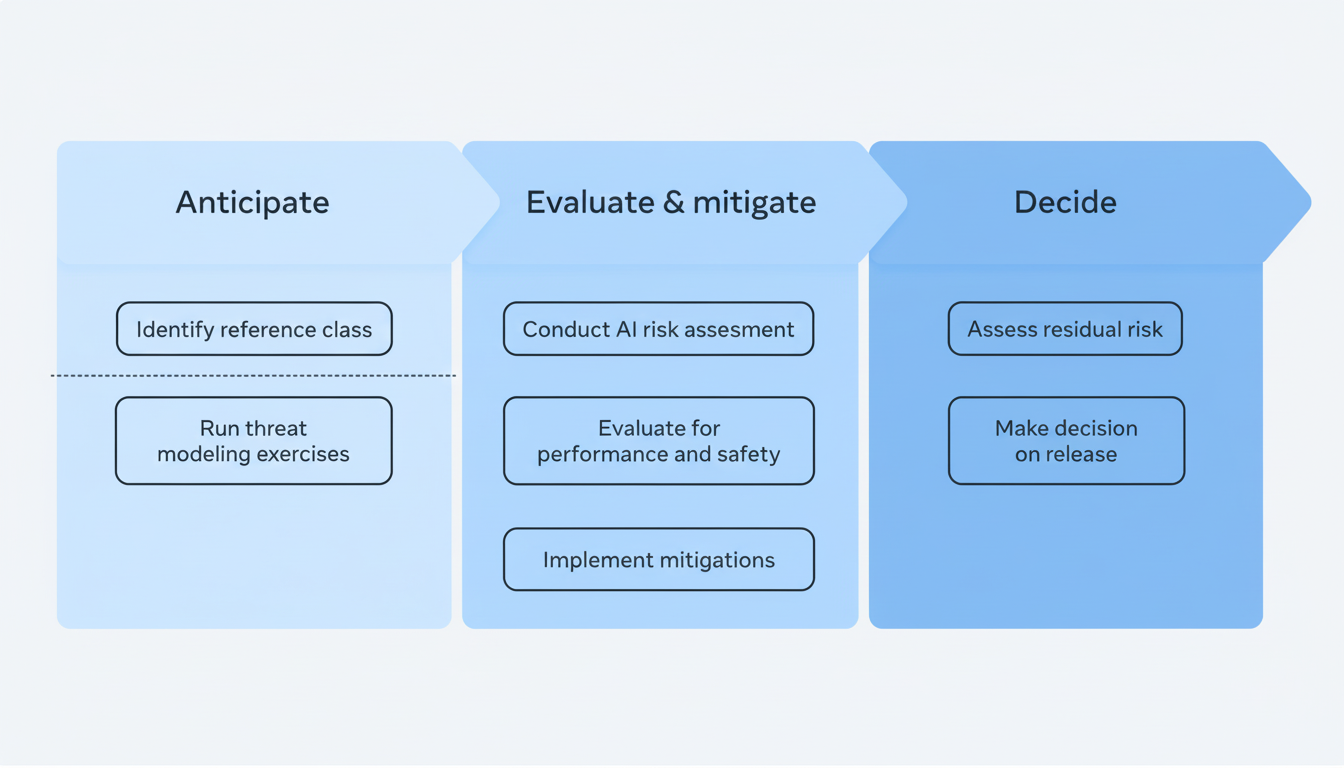

As OpenAI describes it, the Head of Preparedness will execute the company’s strategy for “frontier capabilities that create new risks of severe harm.” In practice, that translates to building end-to-end risk pipelines: threat modeling for potential misuse pathways; automated evals testing exploit generation, biological design assistance, and uncontrolled tool use; and external red-teaming validation. The OSO lead will translate those learnings back into release gates and IRs, with clear remediation standards for training teams or those shipping new systems.

Expect the role to also manage cross-functional governance: auditing data and tooling that could amplify risky capabilities, establishing thresholds for escalating reviews, and documenting safety cases in a way regulators and independent assessors can understand. This might involve constraining some agentic features until their sandboxing and rate quotas meet a threshold, or delaying features that push bio lab assistance above safe guardrails until independent evals verify the magnitude of risk reduction.

OpenAI’s safety organization is in flux amid leadership change

OpenAI established its preparedness team in 2023 to study catastrophic and near-term risks, including from phishing through more advanced cyber and bio threats. Leadership has spun around since then: the previous head, Aleksander Madry, moved to a role focused on AI reasoning, and other safety figures have taken new assignments or left. At the same time, OpenAI updated its Preparedness Framework to say that it will reassess safety standards if a rival releases a high-risk model without equivalent protections — an acknowledgment of actual competitive pressure with implications for whether absolute risks continue to look like absolute risk thresholds in the heat of competition.

Similar approaches are crystallizing in other labs. Anthropic is, to the best of our knowledge, the first industrial application (other than Google DeepMind’s red-teaming programs and partnerships with government testbeds) that formalizes capability evaluations related to actual deployment decisions as part of a responsible scaling policy. Independent entities like RAND, MITRE, and academic centers such as Stanford and Carnegie Mellon are developing domain-specific tests for cyber and bio scenarios that labs can build into internal review gates.

What a strong Head of Preparedness will focus on at OpenAI

The best candidates probably combine depth in security or biosecurity with experience in safety engineering and product judgment. Focus will be on: developing adversarial test suites that grow with model size and tool use; tripwires for abrupt capability cliffs; and trade-offs between user utility versus attack surface. They will need to be credible with outside experts, public health agencies as well as CISA-aligned defenders and academic labs, while still remaining independent enough to stop or reshape releases in the event of failed testing.

Activation can’t just be on readiness to fire. Post-deployment telemetry, incident disclosure norms, and quick patching are the table stakes today. Draw from mature industries: the team could post postmortems, grow bug bounty-style programs for model risk, and conduct “disaster drills” simulating coordinated misuse. Who can say no at each gate will eventually decide whether preparedness is advisory or decisive.

What to watch next as OpenAI hires a Head of Preparedness

The hiring will be a signal of how much weight OpenAI assigns to preparedness in its product life cycle. And look for whether the framework’s thresholds are made public, whether external audits are done with major releases, and whether the company commits to shared testing with institutions such as NIST and the UK AI Safety Institute. With software models moving fast, the Head of Preparedness will be held to one thing more than any other: Can he turn principled risk policy into operational guardrails that hold under pressure?