NotebookLM has announced a major update, a tailored “Document Priority” feature for balancing study, professional, and research work, yet it still lacks an option many people consider essential.

The tool still doesn’t consistently preserve or display PDFs at full fidelity (images, tables, schematics, you name it) inside its own interface. Yet the enthusiasm persists, and that paradox makes more sense than it first appears.

The Reasons NotebookLM Is So Sticky

The core appeal is precision. NotebookLM grounds its answers in the documents you supply, which significantly reduces hallucinations compared with free-range chatbots. The Stanford HAI AI Index has identified retrieval-augmented approaches as one of the most effective methods for curbing fabrication in large language models, and NotebookLM’s “your sources only” design gives students and researchers a usable prototype of that protection.

Speed is the second draw. Load a syllabus, a contract, or a product spec, pose an incisive question, and receive a sourced answer in seconds. For knowledge workers, the few minutes shaved off each lookup add up to hours saved per week. Many teams say it cuts down the time-consuming first pass through long documents, allowing them to concentrate on higher-order judgment.

Then there’s convenience. It ingests Google Drive files with minimal fuss, so anyone can spin up a space for targeted research without dealing with databases or building a bespoke retrieval pipeline. The barrier to creating your own private, trusted assistant over a niche corpus is refreshingly low.

Workflow features round things out. NotebookLM can organize notes, outline your work, and sustain ongoing discussions about your readings. For reading, study, and internal documentation, that continuity can be more valuable than raw model horsepower.

Lastly, the problem it solves is huge. Gartner has estimated that 80 to 90 percent of enterprise data is unstructured, much of it locked inside documents. Turning that sprawl into chat-ready knowledge, without exposing it to the open web, is a powerful idea.

The Single Basic Feature It Still Doesn’t Have

When NotebookLM processes a PDF, its content-to-text conversion currently strips out the visuals. The upshot: citations take you to the correct passage but can’t display key visual elements, such as the icons on a device’s screen or a circuit diagram, and critical details carried only in visuals, such as a Kaplan–Meier plot, a balance-sheet table, or a symbol defined in an accepted standard, never appear at all. Alt text is sometimes displayed, but most of the time the image is not.

That’s a dealbreaker for graphics-heavy work. A homeowner loading appliance manuals can’t tell which button the instructions refer to. A team of engineers loses the schematic that explains one step in a troubleshooting process. A medical student tackling anatomy, already a hard subject, is left wondering where the labeled figure tying terminology to location went.

The gap is all the more puzzling because it varies by format. With web pages or Google Docs, it’s easy to dig into the full source. With PDFs, the original layout often feels like a distant memory once the file is ingested, so users have to keep a separate viewer open to consult it.

Why Users Put Up With the PDF Support Gap

In practice, the 80/20 rule applies: most everyday questions are text-first, such as definitions, compliance clauses, due dates, step-by-step instructions, or quoted passages. NotebookLM is very good at that slice, which makes it “good enough” for a surprisingly large amount of work even when the visuals are missing.

There’s also a powerful trust dividend. Because the system operates only within the scope of your own documents, users feel more confident starting from its suggestions. That trust, hard-earned in an era of AI hallucinations, buys a lot of forgiveness for interface shortcomings.

And the workaround, though cumbersome, is simple: keep the PDF open in Acrobat or another reader for the moments when an image or table matters. For most users, the time saved on everything else dwarfs the friction of that side-by-side workflow.

What Google Has Said and What Actually Matters Now

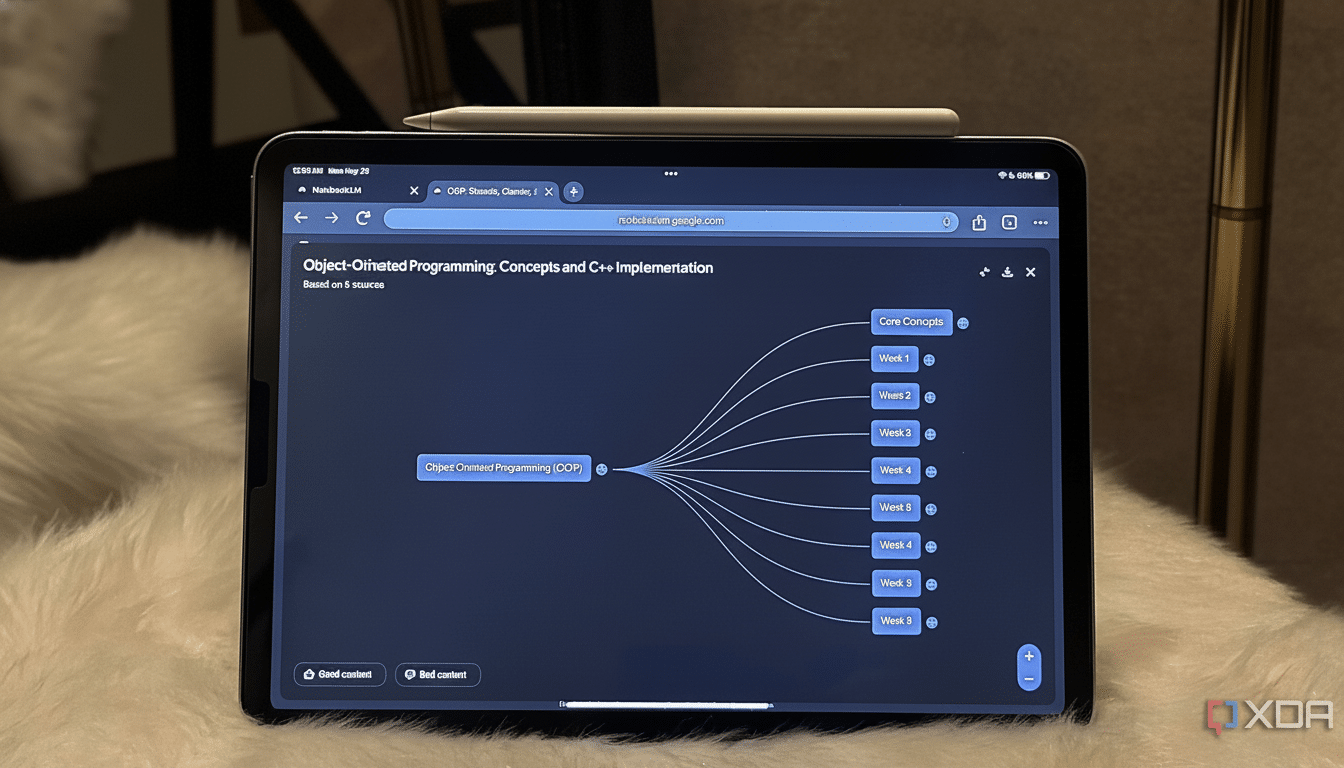

According to Google, NotebookLM is gaining multimodal understanding of PDFs, meaning the model can interpret images alongside text. That helps comprehension, but it addresses only part of the user-experience problem, which is really about transparency and fidelity: will users be able to view, click, and enlarge those visuals directly from an answer, or at least jump to the relevant page in the original file?

Competitors hint at the bar users should expect. Adobe’s assistant works on native PDFs and offers page-precise previews. Microsoft’s Copilot can ground answers in files stored in SharePoint or OneDrive, with live links. Research-oriented readers like Zotero and Readwise Reader prioritize full-fidelity viewing, with AI as the gravy on top. NotebookLM doesn’t need to copy every one of these features. It should, however, have an effective way to preserve and surface what professionals depend on:

- figures

- tables

- formulas

- symbols

The fixes themselves aren’t wildly inventive:

- Page thumbnails attached to citations

- Inline embedded images with callouts and captions

- Improved table rendering

- OCR support for equations

- A clear “Open in Drive” path

Together, they would turn NotebookLM from a lightning-fast text explainer into a full document workspace.

The Bottom Line for AI Study Tools and Workflows

People like NotebookLM because it nails the most common jobs to be done, delivering quick, low-friction answers drawn from documents they already have, even if it does little else. Because the service is good enough where it counts, users have tolerated a glaring PDF limitation.

But the ceiling is higher. In a world where critical knowledge is conveyed through the charts, diagrams, and equations in full-fidelity PDFs, this capability is effectively table stakes. If Google narrows that gap with clear, click-through source views, NotebookLM could go from beloved assistant to must-have workspace for the heaviest technical and academic work.