Uber is creating a new unit called AV Labs to collect and package real-world driving data for autonomous vehicle partners, signaling a deeper pivot into enabling robotaxi deployment rather than building its own. The company plans to run Uber-owned cars outfitted with sensor rigs, then deliver standardized, labeled datasets and analytics to more than 20 partners, including Waymo, Waabi, and Lucid. Uber says the data will be free at the outset, with the intent to accelerate progress across the ecosystem.

Why Data Is The New Fuel For Robotaxi Development

Across the industry, developers are shifting from highly engineered rule sets to learning-based systems that improve with the scale and diversity of their experience. That change puts a premium on granular, real-world data: human driving behavior, dense urban edge cases, tricky weather, and the rare interactions that simulations struggle to anticipate. Even leaders with large fleets can't be everywhere at once, and the gaps show up in the wild. Recent public incidents in which robotaxis mishandled stopped school buses and lane closures underscore how hard "long-tail" events remain.

Uber’s pitch is that it can systematically target those gaps. The company’s ride-hail footprint gives it an on-ramp to select neighborhoods, intersections, and corridors across 600 cities where partners want exposure. While simulation and synthetic data are improving, researchers at organizations like MIT CSAIL and the Allen Institute have repeatedly noted that rare, high-value scenarios still benefit from curated real-world captures—precisely the niche AV Labs aims to serve.

How AV Labs Will Operate And Deliver Training Data

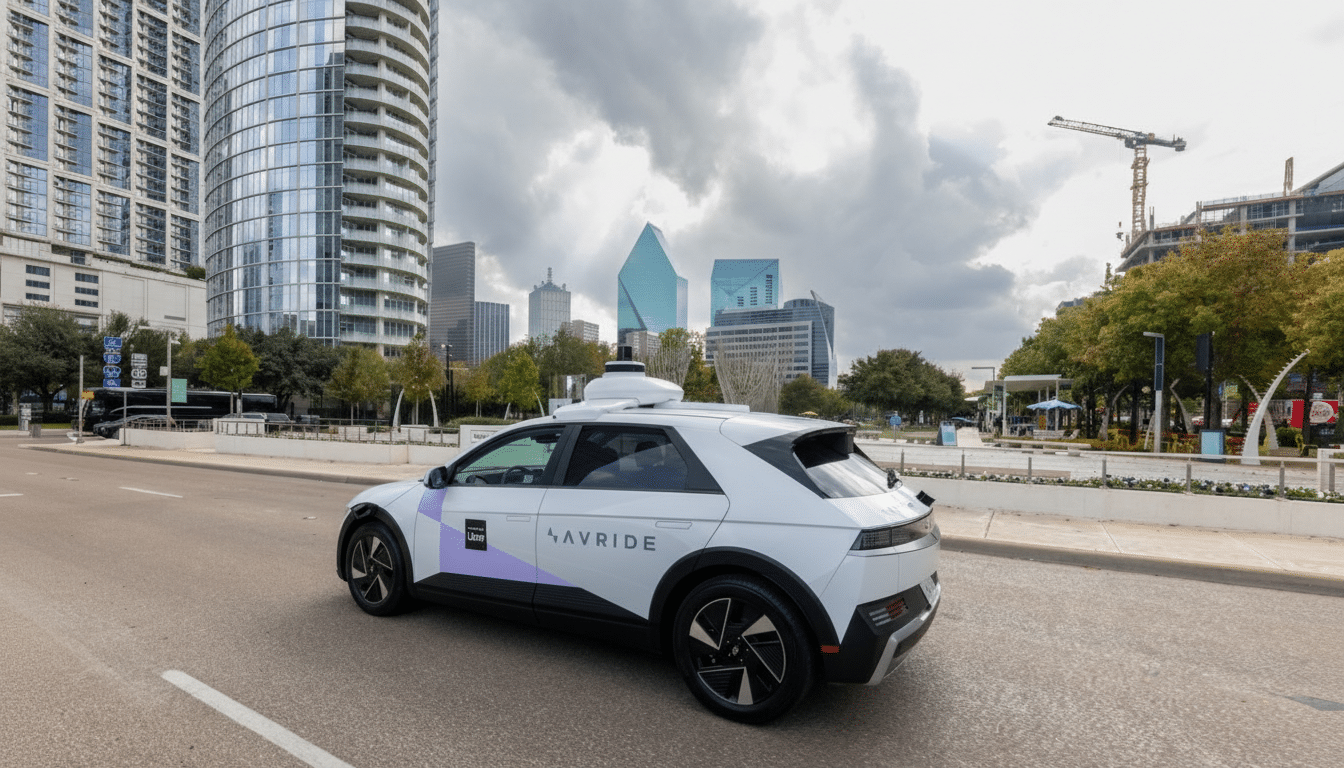

Instead of reviving its in-house robotaxi program, Uber will deploy conventionally driven vehicles equipped with cameras, lidar, radar, high-precision GPS, and compute. The data won’t ship to partners raw. AV Labs plans to apply a “semantic understanding” layer—think synchronized sensor fusion plus labels for actors, intent cues, road elements, and traffic control states—so partners receive machine-learning-ready inputs compatible with their training stacks.

Uber also expects to run partner autonomy stacks in “shadow mode” inside AV Labs vehicles. In this setup, the human-driven car and the partner software both generate decisions; any divergence is logged. Those deltas help identify failure modes, refine policies, and nudge models toward more human-like driving. Tesla popularized a version of this approach using its customer fleet, but AV Labs will focus on targeted missions rather than sheer volume.
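The divergence logging described above can be sketched as a per-timestep comparison between what the human driver did and what the partner stack would have done, keeping only the moments where they disagree by more than a tolerance. This is a minimal illustration under stated assumptions: reducing an "action" to steering angle and acceleration, and the threshold values `STEERING_TOL_RAD` and `ACCEL_TOL_MPS2`, are hypothetical choices, not anything Uber or its partners have disclosed.

```python
from dataclasses import dataclass

# Hypothetical tolerances; real systems would tune these per maneuver and speed.
STEERING_TOL_RAD = 0.05
ACCEL_TOL_MPS2 = 1.0


@dataclass(frozen=True)
class Action:
    steering_rad: float  # commanded steering angle
    accel_mps2: float    # commanded longitudinal acceleration (negative = braking)


@dataclass(frozen=True)
class Divergence:
    timestamp_ns: int
    steering_delta_rad: float  # shadow minus human
    accel_delta_mps2: float    # shadow minus human


def log_divergences(timestamps, human_actions, shadow_actions,
                    steering_tol=STEERING_TOL_RAD, accel_tol=ACCEL_TOL_MPS2):
    """Return the timesteps where the shadow stack disagrees with the human driver."""
    if not (len(timestamps) == len(human_actions) == len(shadow_actions)):
        raise ValueError("timestamps and actions must be aligned one-to-one")
    divergences = []
    for ts, human, shadow in zip(timestamps, human_actions, shadow_actions):
        d_steer = shadow.steering_rad - human.steering_rad
        d_accel = shadow.accel_mps2 - human.accel_mps2
        # Log the delta only if either control channel exceeds its tolerance.
        if abs(d_steer) > steering_tol or abs(d_accel) > accel_tol:
            divergences.append(Divergence(ts, d_steer, d_accel))
    return divergences


human = [Action(0.0, 0.0), Action(0.0, 0.0), Action(0.1, 0.0)]
shadow = [Action(0.0, 0.0), Action(0.2, 0.0), Action(0.1, -2.0)]
for d in log_divergences([0, 100, 200], human, shadow):
    print(d)
```

Because the shadow stack never actuates the car, these logged deltas are pure training and evaluation signal: each one marks a moment where the software's policy and human judgment differed and is worth reviewing.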

Early phases will likely start small—prototypes first, then gradual city-by-city rollouts as hardware is hardened and annotation pipelines stabilize. Uber says the team could scale to a few hundred employees within a year, with a staged vehicle deployment following. The company emphasizes that productization comes after it lays the data foundation and aligns schemas with partner requirements.

What Partners Stand To Gain From Uber’s AV Labs

For established players, AV Labs offers targeted coverage in new geographies without diverting core fleets. For startups, it’s a shortcut around the cold start problem: you can iterate on autonomy stacks with richer data before scaling your own vehicles. A consistent, cross-city dataset can also reduce labeling overhead and sharpen generalization—an area where academic work and California DMV disengagement trends both point to the value of broader exposure across contexts.

Crucially, the service addresses operational blind spots: night-time curb behaviors, construction hand signals, oddball vehicle types, and school zones where policy sensitivity is critical. Structured captures of these scenarios, delivered with high-quality labels and precise timing alignment, are often worth more than bulk highway miles.
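"Precise timing alignment" usually means pairing samples from sensors that run at different rates, for example matching each camera frame to the nearest lidar sweep on a shared clock. The sketch below shows one common approach, nearest-timestamp matching with a tolerance via binary search. The function name `align_to_reference` and the 10 ms tolerance are illustrative assumptions; nothing here reflects how AV Labs actually synchronizes its rigs.

```python
from bisect import bisect_left


def align_to_reference(reference_ts, other_ts, tolerance_ns):
    """For each reference timestamp, return the index of the nearest sample in
    other_ts (sorted ascending) within tolerance_ns, or None if none qualifies."""
    matches = []
    for t in reference_ts:
        i = bisect_left(other_ts, t)  # first sample at or after t
        best_idx, best_gap = None, None
        # The nearest sample is either just before or just after t.
        for j in (i - 1, i):
            if 0 <= j < len(other_ts):
                gap = abs(other_ts[j] - t)
                if gap <= tolerance_ns and (best_gap is None or gap < best_gap):
                    best_idx, best_gap = j, gap
        matches.append(best_idx)
    return matches


camera_ts = [0, 33_000_000, 66_000_000, 200_000_000]   # nanoseconds
lidar_ts = [1_000_000, 30_000_000, 70_000_000]
print(align_to_reference(camera_ts, lidar_ts, tolerance_ns=10_000_000))
```

Returning `None` instead of forcing a match keeps poorly synchronized frames out of the labeled set. On an exact tie, the earlier sample wins because only a strictly smaller gap replaces the current best.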

Business Model And Governance Questions Ahead

Uber says it won’t charge initially, framing the project as ecosystem infrastructure. Longer term, monetization could come via subscriptions for scenario packs, prioritized collection campaigns, or tools that integrate directly with partners’ training pipelines. That raises familiar questions: who owns derivative models trained on pooled data, how is competitive separation assured, and what are the rules for retention and reuse across customers?

Regulators will also watch closely. NHTSA has sharpened reporting requirements around automated driving system crashes and road testing, while cities increasingly require permits for vehicles carrying heavy sensor rigs even when they are human-driven. Privacy and civil liberties groups will expect strict policies on handling faces and license plates, geofencing around sensitive sites, and clear retention limits. Uber's history, including the 2018 fatal crash in Tempe, Arizona, that paused its road testing and the 2020 sale of its Advanced Technologies Group (ATG) to Aurora, suggests AV Labs will adopt a cautious, compliance-first posture with safety drivers and transparent auditing.

The Competitive Landscape For AV Data Services

Tesla’s fleet-scale data engine remains unmatched in raw volume, and Waymo continues to publish extensive safety analyses and operational miles from driverless service zones. AV Labs carves out a different lane: on-demand, partner-specific data capture tied to Uber’s geographic reach and operational know-how. If executed well, it could function like a neutral utility—less about owning autonomy and more about accelerating it for everyone else.

If partners adopt the service, expect faster iteration cycles, more robust handling of messy city realities, and potentially fewer public stumbles as robotaxis expand. The near-term milestone is straightforward: get the sensor kits stable, prove the annotation quality, and deliver useful “delta” insights from shadow mode. After that, the real test will be whether curated miles translate into safer, smoother driverless rides at scale.