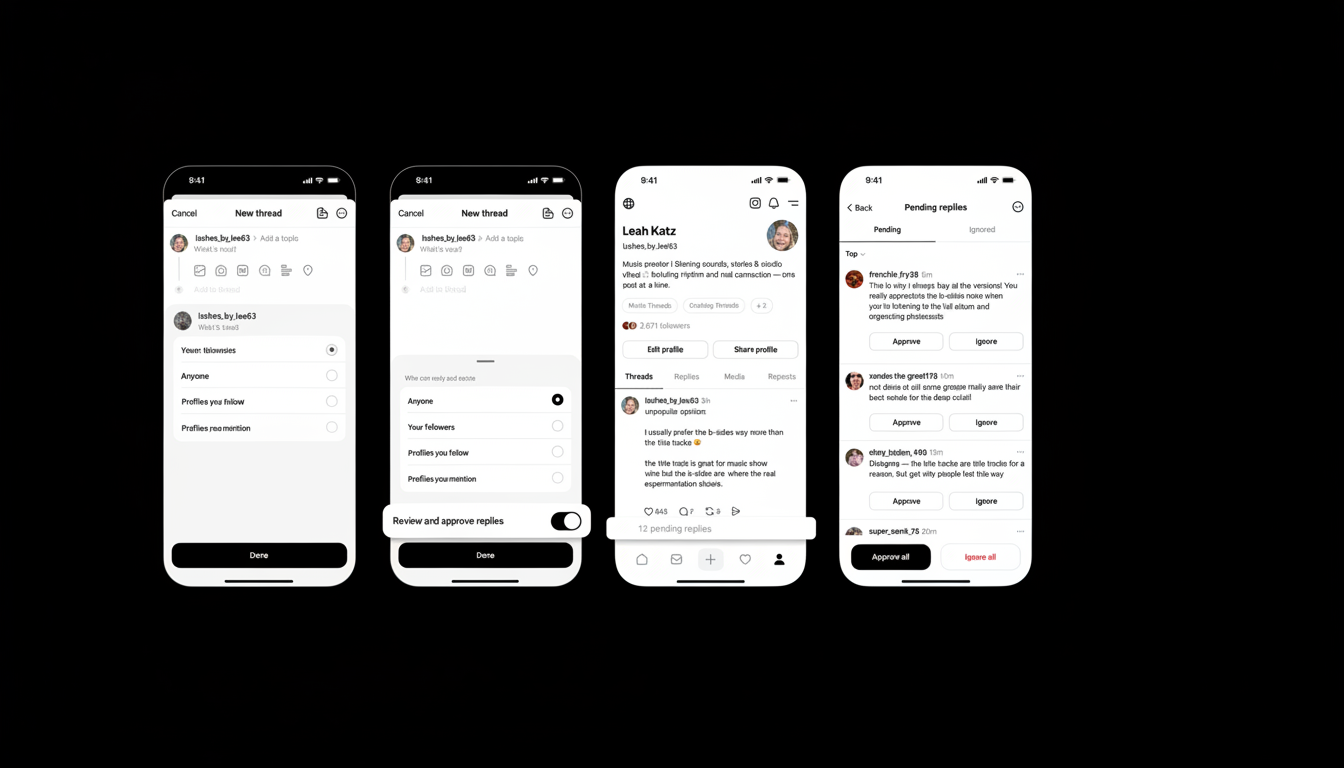

Threads has announced a new feature that gives users more control over their discussions. Post authors can now approve replies before they appear publicly, and new Activity feed filters help surface the replies that matter. By keeping replies open to people users don’t follow while making it easier for post owners to screen out hostile ones, Threads aims to make public conversations safer without locking them down.

How reply approvals work and what they are not

The new feature operates as follows: replies are held in a queue and are displayed to others only after the post owner approves them. This isn’t the same as restricting which accounts can and cannot respond; instead, it lets the original post owner moderate the conversation as it happens.

As a result, conversations are less likely to be derailed by trolls, proxy accounts, or bad-faith actors. For conversation safety, this is a significant step. Because pre-moderation applies to open replies, authors can invite broad participation with less fear of abusive or off-topic responses.
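For readers who think in code, the approval queue can be pictured with a minimal Python sketch. Threads has not published how the feature is implemented, so every name here (`Post`, `Reply`, `Status`, `submit`, `review`) is hypothetical and only illustrates the general idea of pre-moderation: anyone can reply, but a reply stays hidden until the post owner approves it.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Reply:
    author: str
    text: str
    status: Status = Status.PENDING  # every reply starts out hidden


@dataclass
class Post:
    owner: str
    replies: list[Reply] = field(default_factory=list)

    def submit(self, author: str, text: str) -> Reply:
        # Replying is not restricted; the reply simply waits in the queue.
        reply = Reply(author, text)
        self.replies.append(reply)
        return reply

    def review(self, actor: str, reply: Reply, approve: bool) -> None:
        # Hypothetical rule: only the post owner may clear the queue.
        if actor != self.owner:
            raise PermissionError("only the post owner can review replies")
        reply.status = Status.APPROVED if approve else Status.REJECTED

    def pending_replies(self) -> list[Reply]:
        return [r for r in self.replies if r.status is Status.PENDING]

    def visible_replies(self) -> list[Reply]:
        # What other users would see: approved replies only.
        return [r for r in self.replies if r.status is Status.APPROVED]
```

The point of the sketch is the contrast with reply restrictions: `submit` accepts a reply from any account, and visibility is decided afterward by the owner’s review.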

Comparisons with controls on X, Instagram, and YouTube

At present, X lets authors limit who can reply (for example, followers-only) or disable replies, and it offers a “Hide reply” option after a reply has been posted. Instagram provides additional comment controls, including Hidden Words. YouTube includes “Hold for Review” in its moderation pane.

Public spaces on social networks can be punishing

The ADL’s 2024 report found 58% of users experienced online harassment in the past year, and Pew Research has consistently documented widespread exposure to abusive content. Tools that front-load control can be the difference between hosting a productive exchange and walking away entirely.

Reply approvals are especially useful for:

- Journalists, scientists, and public officials running open Q&As

- Creators dealing with coordinated trolling

- Brands fielding high-stakes product discussions

The ability to keep threads open while filtering noise helps elevate genuine questions and on-topic debate. There’s a trade-off: stronger controls can create quieter threads that miss unexpected perspectives. The success of this feature will hinge on nuanced use—curating for quality without over-pruning dissent or critique.

Expanded Activity feed filters for prioritizing replies

Threads is also expanding Activity feed filters so you can scan replies from people you follow or jump straight to ones that mention you. These options join existing views like Verified, Quotes, and Reposts, giving high-traffic accounts a faster way to prioritize signal over noise.

For example, a creator launching a new series could quickly triage replies from accounts they follow, then sweep for mentions to catch urgent asks, and finally dive into the broader reply queue. Combined with approvals, it’s a workflow that reduces context switching and missed feedback.
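The triage order in that example can be sketched as a simple sort. This is an illustration only: `ActivityReply`, its two flags, and `triage` are hypothetical names, not part of any Threads product or API, and the real filters are separate views rather than a single sorted list.

```python
from dataclasses import dataclass


@dataclass
class ActivityReply:
    author: str
    text: str
    from_followed: bool = False   # assumed flag: author is someone the post owner follows
    mentions_owner: bool = False  # assumed flag: reply mentions the post owner


def triage(replies: list[ActivityReply]) -> list[ActivityReply]:
    """Order replies: followed accounts first, then mentions, then everything else."""

    def bucket(reply: ActivityReply) -> int:
        if reply.from_followed:
            return 0
        if reply.mentions_owner:
            return 1
        return 2

    # sorted() is stable, so arrival order is preserved within each bucket.
    return sorted(replies, key=bucket)
```

Because the sort is stable, replies within each group stay in the order they arrived, which mirrors working through one filtered view at a time.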

Growth context, monetization, and moderation implications

The timing is strategic. Meta told investors that Threads had reached roughly 150 million daily active users and more than 400 million monthly users. As usage scales, so do the challenges of moderation and the expectations from advertisers seeking safe environments for brand messages.

Threads has already rolled out ads globally and is moving toward video ads. Stronger reply controls help protect conversation quality and reduce adjacency risks—factors that matter when video formats drive higher engagement but also amplify the visibility of toxic replies.

Approvals put the author in charge, but transparency will be key. Users will watch for:

- How pending and rejected replies are handled

- Whether authors can set consistent policies across posts

- How effectively automated systems flag obvious spam before it hits queues

Adoption, usability, and considerations for everyday users

The broader product direction is consistent: Threads recently introduced communities and disappearing ghost posts, and it is testing topic-level tuning so people can nudge the algorithm toward or away from content types. These are signals of a platform betting on user agency rather than one-size-fits-all feeds.

Adoption will come down to friction. If approvals are easy to toggle and manage—especially for accounts that receive thousands of replies—the feature could become a default for public figures and brands. Expect third-party moderation partners and analytics tools to push for APIs and bulk actions if queues grow large.

For everyday users, the win is simpler: keep threads open, keep control, and keep track of conversations. If Threads can sustain that balance at scale, it could succeed at one of social media’s hardest problems: open dialogue that stays constructive.