Federal safety regulators are investigating Tesla’s Full Self-Driving system following a string of reports that it runs red lights and ignores lane markings. The National Highway Traffic Safety Administration on Monday announced that it had opened an investigation into FSD (Supervised) and previous generations of the software, known as FSD (Beta), installed on some 2.9 million Tesla vehicles in the United States.

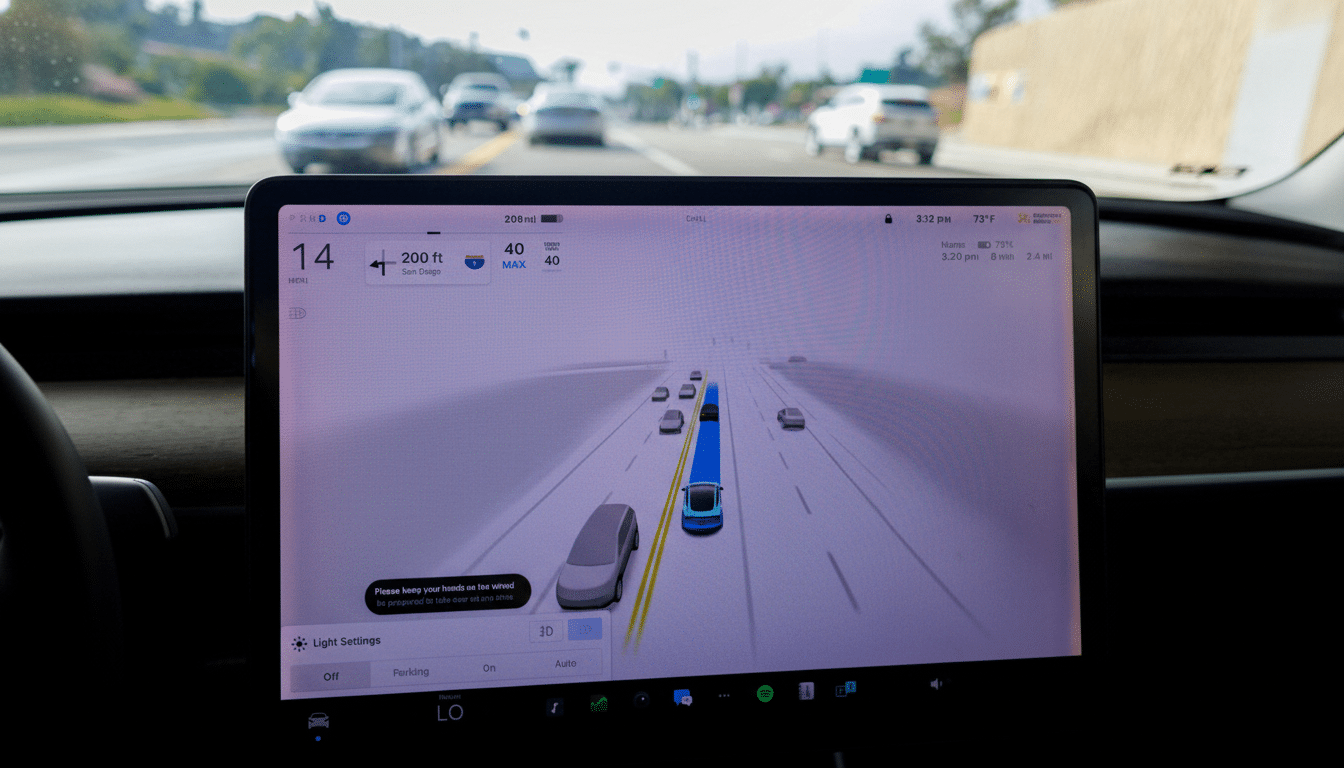

Complaints center on two categories of dangerous behavior: cars running red lights or moving before the light turns green, and vehicles swerving into the wrong lane, at times crossing double-yellow lines or turning the wrong way against posted signs. The probe will focus on whether the system adequately detects traffic signals and lane boundaries, and whether it alerts human drivers early enough to give them time to take over.

What the Federal Probe Includes on Tesla FSD Safety

According to filings from NHTSA’s Office of Defects Investigation, the agency has recorded 18 consumer complaints and one media report chronicling red-light events with FSD-equipped Teslas. Investigators are looking into six crashes connected to those reports, four of which involved injuries.

Lane-positioning concerns fall into two buckets. For vehicles entering the wrong lane, ODI cites two reports from Tesla, 18 consumer complaints, and two media stories. For vehicles disregarding lane markings altogether, the agency cites four reports from Tesla, six complaints, and one media account. The investigation will examine how FSD identifies, displays, and responds to traffic lights and whether recent software updates have introduced regressions.

Investigators are also examining whether the system’s safeguards against driver inattention are sufficient, and whether alerts and takeover requests give a person enough time to correct unexpected behavior. That question has arisen in previous Tesla safety cases and remains a focus of federal oversight.

Level 2 Automation and Driver Oversight Requirements

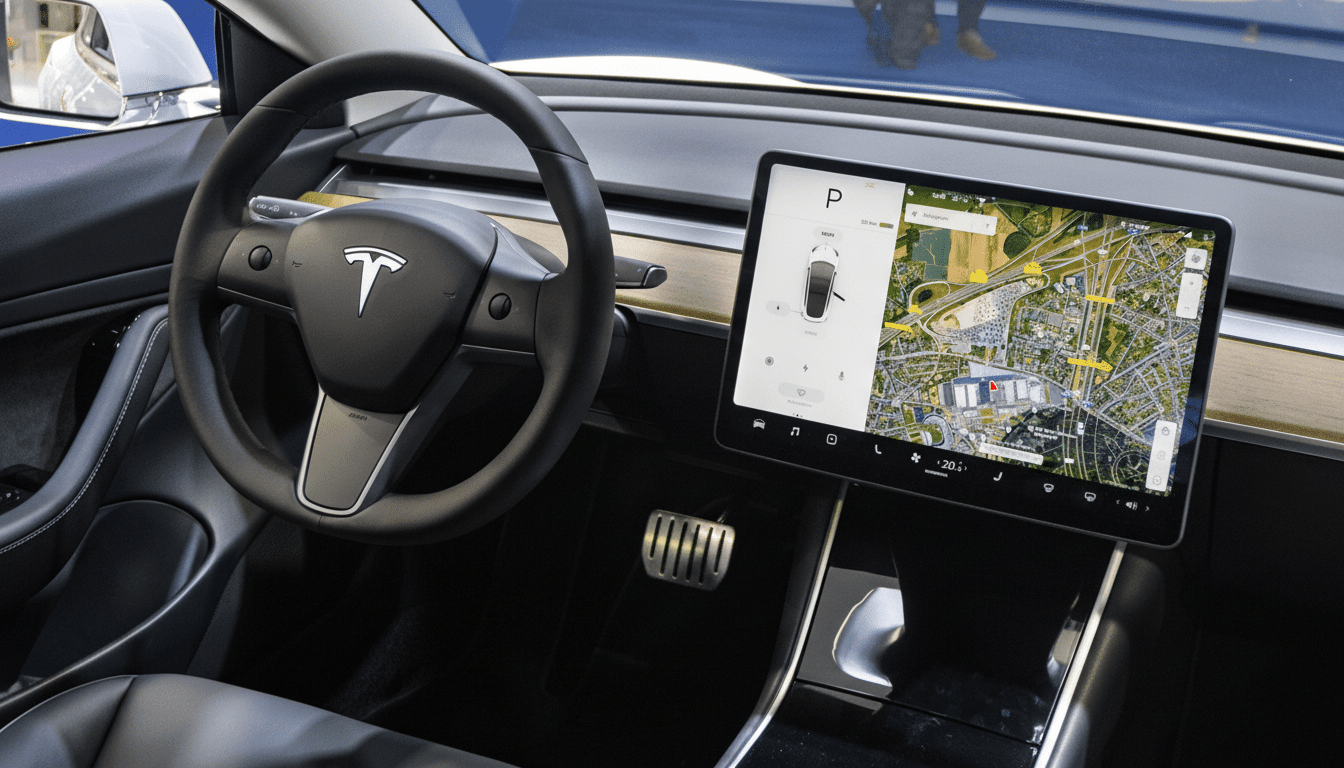

Tesla’s FSD is an SAE Level 2 driver-assistance system. It can handle steering, acceleration, braking, and navigation, but the human driver remains responsible at all times. Even in test robotaxi operations in cities such as Austin and San Francisco, trained safety drivers are on hand to oversee the system.

Regulators and safety researchers have repeatedly warned that partial automation can lull drivers into over-trust. The Insurance Institute for Highway Safety recently introduced “safeguard” ratings for partial automation systems and found significant gaps across the industry, including in Tesla’s driver monitoring. NHTSA has also overseen a wide-ranging Tesla recall that used a software update to improve Autosteer supervision, and it is still monitoring real-world results after the update.

Why Signals and Lanes Can Throw Driverless Tech for a Loop

Intersections are among the most challenging environments for automated driving systems. Large vehicles can block the view of traffic signals, glare can wash them out, and flashing or tilted lights can be misread. Lane markings, meanwhile, are frequently faded, temporarily rerouted by construction, or laid out in unusual ways on older roads. Even the most sophisticated perception models can get tripped up by these edge cases.

Tesla’s camera-only approach, which it calls Tesla Vision, forgoes lidar and, in many markets, radar. Although vision-based systems have made rapid strides thanks to end-to-end neural networks, academic research from groups such as MIT AgeLab and UC Berkeley’s PATH program indicates that redundant sensors can improve performance in poor lighting, rain, or heavy occlusion. The National Transportation Safety Board has long recommended that, to prevent misuse, automakers pair automation with rigorous driver monitoring and clear limits on where systems can operate.

What to Look For When Using Tesla FSD on Public Roads

FSD users should treat it as driver assistance, not automation. Keep hands on the wheel and eyes forward, and be ready to take over, especially at complicated intersections, in construction areas, near lane splits, or when road paint is faded or confusing. If the system hesitates, misreads a signal, or drifts out of its lane, disengage it and take control.

Keep software current, and report anomalies to NHTSA and the manufacturer — detailed feedback helps investigators establish patterns.

If a vehicle behaves in unexpected ways (for example, the brakes seem unresponsive or power cuts out), contact a Tesla service center with your concerns. If you already did so months ago and the problem persists, it may be time to reach out again.

Drivers should also know that FSD can disengage suddenly, and that they remain responsible for traffic infractions and the safe operation of the vehicle.

What Comes Next in the NHTSA Tesla FSD Investigation

The agency’s process typically starts with a preliminary evaluation and can progress to an engineering analysis before the agency decides whether a safety defect exists. Possible outcomes range from closing the probe to ordering a recall that, in Tesla’s case, would probably be delivered over the air. If changes are mandated, expect refinements to traffic-signal detection, lane-keeping logic, and driver warnings.

The inquiry comes at a time of heightened industry focus on automated driving. State regulators such as the California Department of Motor Vehicles have scrutinized how companies market driver-assistance features, while recent incidents involving robotaxi operators have focused attention on safety in cities. Regardless of brand, the regulatory message is now clear: automated systems need to demonstrate that they can handle the messy realities of intersections and changing lane geometry, all while keeping drivers truly engaged behind the wheel.