T-Mobile is rolling out a network-powered Live Translation feature that turns any phone call into a two-way interpreter session—no app, no special handset, just the carrier’s 5G. The service, introduced as a free beta, uses agentic AI running at the network edge to translate more than 50 languages in real time, with activation as simple as dialing 87 during a call.

The pitch is straightforward: remove the friction of downloads, logins, and device compatibility so translation is available the instant it’s needed. Only one participant has to be on T-Mobile’s 5G network for the feature to work, broadening who can benefit from it on everyday voice calls.

How T-Mobile Live Translation Works on Its 5G Network

Instead of processing speech on the phone, T-Mobile offloads recognition, translation, and synthesis to its “5G Advanced” infrastructure. In networking terms, this resembles multi-access edge computing: by running AI models closer to users, latency drops and translations can arrive fast enough to keep a natural back-and-forth rhythm. The company describes its stack as agentic AI, meaning the system coordinates multiple steps—speaker detection, language identification, translation, and output—without user micromanagement.
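The multi-step coordination described here can be pictured as a chain of stages. What follows is a minimal Python sketch under stated assumptions, not T-Mobile's implementation: the `Utterance` type, the `PHRASES` table, and the functions `identify_language`, `translate`, and `run_pipeline` are all hypothetical, and plain text stands in for audio, so speech recognition and synthesis are left out.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Utterance:
    speaker: str  # which call leg spoke: "caller" or "callee"
    text: str     # stand-in for recognized speech (a real system starts from audio)


# Toy phrase table standing in for a translation model (hypothetical data).
PHRASES: Dict[Tuple[str, str], Dict[str, str]] = {
    ("en", "es"): {"hello": "hola", "thank you": "gracias"},
    ("es", "en"): {"hola": "hello", "gracias": "thank you"},
}


def identify_language(text: str) -> str:
    """Crude language ID: find which source vocabulary contains the phrase."""
    for (src, _dst), table in PHRASES.items():
        if text.lower() in table:
            return src
    return "unknown"


def translate(text: str, src: str, dst: str) -> str:
    """Look the phrase up; fall back to the original text if there is no entry."""
    return PHRASES.get((src, dst), {}).get(text.lower(), text)


def run_pipeline(utt: Utterance, preferred: Dict[str, str]) -> str:
    """Chain the stages: speaker detection, language ID, translation, output.

    `preferred` maps each speaker to the language they want to hear.
    """
    # Speaker detection: the listener is the other leg of the call.
    listener = "callee" if utt.speaker == "caller" else "caller"
    src = identify_language(utt.text)
    dst = preferred[listener]
    # Pass speech through untouched if it is unrecognized or already in
    # the listener's language.
    if src in ("unknown", dst):
        return utt.text
    return translate(utt.text, src, dst)
```

In this toy setup, `run_pipeline(Utterance("caller", "hello"), {"caller": "en", "callee": "es"})` returns `"hola"`. The point of the sketch is the hand-off between stages, which the user never has to drive manually.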

The star of the user experience is simplicity. During a call, subscribers can dial 87 to toggle translation. Because the trigger is a dialed code rather than an app or a spoken command, it works on basic handsets, including feature phones, and avoids the wake-word misfires that have dogged voice assistants. In early access, T-Mobile says it will support 50+ languages; the full list wasn’t disclosed, but industry norms for call-translation services suggest common pairs such as English–Spanish, English–Mandarin, and English–Arabic.
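A code-based toggle of this kind can be modeled as a small state machine over keypad presses. The sketch below is illustrative only: the `CallSession` class and its `press` method are hypothetical names, and how the network actually detects the digits during a call is not described in the announcement.

```python
class CallSession:
    """Tracks in-call keypad presses and toggles translation on a code match."""

    def __init__(self, code: str = "87") -> None:
        self.code = code          # trigger digits, as reported in the article
        self.translating = False  # translation starts switched off
        self._recent = ""         # sliding window of the latest keypad presses

    def press(self, key: str) -> bool:
        """Record one keypad press and return the current translation state."""
        # Keep only as many recent presses as the code is long.
        self._recent = (self._recent + key)[-len(self.code):]
        if self._recent == self.code:
            self.translating = not self.translating
            self._recent = ""     # reset so overlapping presses cannot re-trigger
        return self.translating
```

Pressing `8` then `7` switches translation on, and entering the same code again switches it off. Unlike a wake word, the digit sequence is unambiguous, so nothing has to be inferred from speech.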

Availability starts with a limited beta for selected users before a wider launch later this year. The beta is free; pricing for the commercial release is still to be determined, with T-Mobile indicating details will arrive closer to launch.

Why This Could Matter at Scale for Everyday Calls

Network-native translation could bring language support to tens of millions of routine calls that never touch an app. T-Mobile says its 5G covers more than 330 million people in the U.S., and the carrier has well over 100 million lines. Even small adoption rates would translate into a sizable volume of interpreted conversations.
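To make the scale argument concrete, here is a back-of-the-envelope sketch in Python. The 100 million line count is the lower bound cited above; the adoption rates and the calls-per-line figure are purely illustrative assumptions, not T-Mobile data, and the function name is hypothetical.

```python
def translated_calls_per_month(total_lines: int,
                               adoption_rate: float,
                               calls_per_line: int) -> int:
    """Estimate monthly translated calls from an assumed adoption rate."""
    adopting_lines = round(total_lines * adoption_rate)
    return adopting_lines * calls_per_line


LINES = 100_000_000   # "well over 100 million lines" (lower bound)
CALLS_PER_LINE = 2    # assumed translated calls per adopting line per month

for rate in (0.001, 0.01, 0.05):  # illustrative adoption rates
    calls = translated_calls_per_month(LINES, rate, CALLS_PER_LINE)
    print(f"{rate:.1%} adoption: {calls:,} translated calls per month")
```

Under these assumptions, a 1% adoption rate with two translated calls per line per month works out to 2 million calls a month.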

Demand is not hypothetical. According to the U.S. Census Bureau’s American Community Survey, more than 20% of U.S. residents speak a language other than English at home. For small businesses, healthcare front desks, school administrators, or contractors coordinating with suppliers, shaving setup time and uncertainty off a multilingual call can be the difference between a closed loop and a missed connection. Travelers dealing with hotels or customer support abroad stand to benefit, too.

Consider a field service technician who needs to confirm parts with a non-English-speaking customer, or a government benefits office returning calls from recent immigrants. A translation layer available via a short code could lower barriers without forcing either party to learn a new app workflow.

Privacy and Data Handling for Live Translation

Real-time, network-level translation raises predictable questions about data retention. T-Mobile says it does not save call recordings or transcripts from Live Translation. Instead, performance metrics such as accuracy and latency are measured during the session and discarded when it ends. The company also says it is not holding on to transcripts to train models after the fact.

Skepticism is understandable given the broader industry’s history with customer data. Carriers in the U.S. have faced scrutiny from regulators and privacy advocates over the past decade. For Live Translation to earn trust, clear disclosures, opt-in controls, and independent validation will matter as much as raw translation quality. As with any automated interpreter, the feature shouldn’t replace professionals in high-stakes medical or legal contexts where misinterpretations carry real risk.

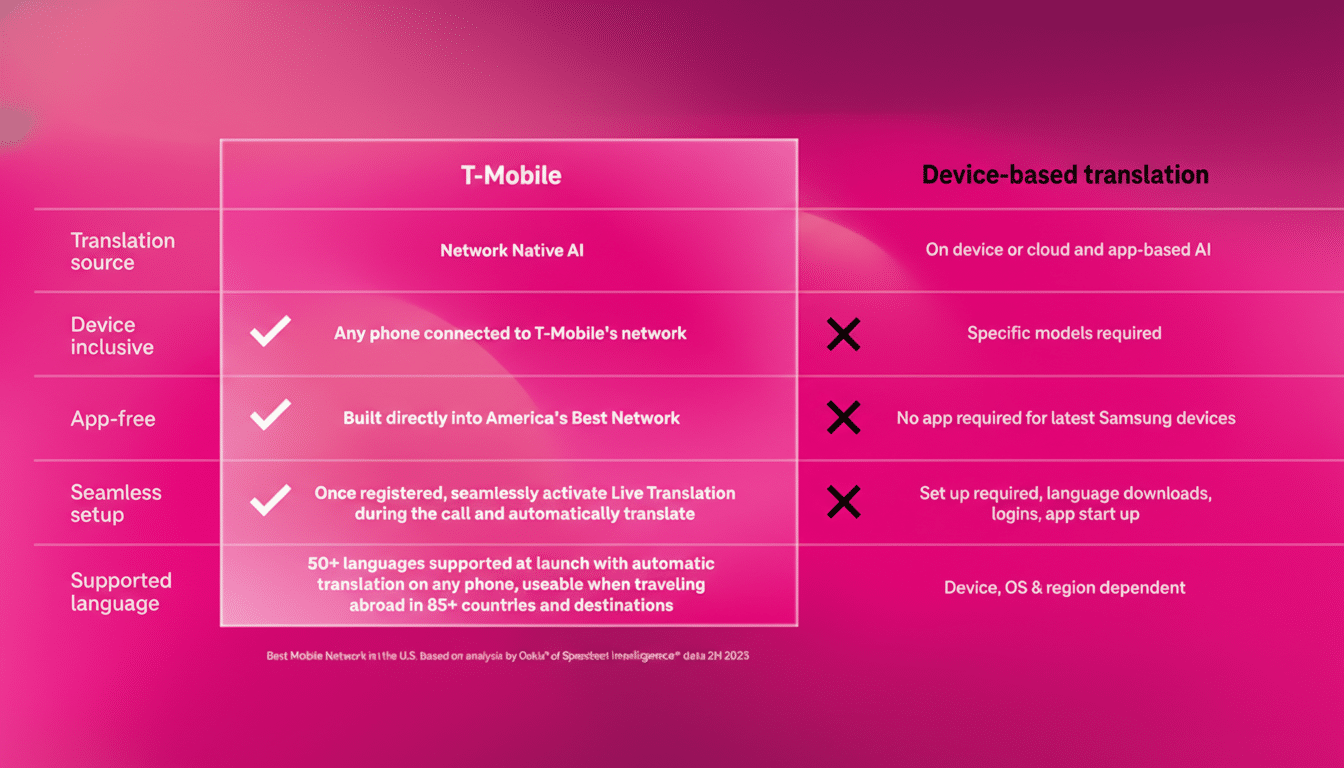

How It Compares To App-Based Translators

T-Mobile’s differentiator is ubiquity: it works on ordinary voice calls, even if the other party is on a different carrier or a legacy device. That’s a different bet than app ecosystems. Apple’s Translate app and Google’s Interpreter Mode can translate speech on-device, sometimes fully offline, which reduces privacy exposure and can deliver sub-second responses face to face. Microsoft has also offered call translation through Skype’s cloud-based Translator.

The trade-off is control versus convenience. On-device systems excel when both parties are physically together or agree to use the same app. T-Mobile’s network approach, if latency and accuracy hold up, makes translation a default capability baked into the dialer, with no negotiation required. The timing also lines up with 3GPP’s 5G Advanced specifications, which emphasize lower latency and better handling of AI workloads at the edge.

What to Watch as the Beta Expands to More Users

Three metrics will tell the story: latency, accuracy, and reliability under load. Short, natural pauses between speakers should remain intact; idioms and domain-specific terms must resolve sensibly; and quality should remain stable at peak hours. Transparent reporting on those metrics—ideally vetted by third parties—would help users and enterprises gauge readiness.

Pricing and plan eligibility will also shape adoption. If T-Mobile positions Live Translation as a broadly available utility rather than a top-tier perk, it could become a signature 5G experience—one that showcases how the network itself can be the application.

For now, the idea is refreshingly simple: dial a short code, bridge a language gap, get on with your call. If execution matches ambition, T-Mobile’s move could push real-time translation from a clever demo to a standard feature of everyday voice service.